BERT hands-on introduction: sentence-pair classification (Colab + TensorFlow 2.5)

Table of contents

- Overview

- Details

  - Download the GLUE data

  - Download the BERT source code

  - Download and unzip chinese_L-12_H-768_A-12.zip

  - Fine-Tuning

Reference article:

- 一文读懂BERT(实践篇) (Understanding BERT in One Article: Practice)

Overview

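Starting from the code in reference article 1 that fine-tunes BERT on the MRPC task, I put together a version that runs on Colab with TensorFlow 2.5 (the original article appears to use TensorFlow 1.x). Treat this post as a supplement to article 1; I will not repeat that article's content here. My knowledge is limited and mistakes are likely, so if you spot an error or something non-standard, feel free to discuss it with me.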

Details

Download the GLUE data

The download_glue_data.py script is used for the download:

!python3 download_glue_data.py --data_dir glue_data --tasks all

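To persist the code and data used in Colab, I mounted Google Drive and placed the data directory and code files under /content/drive/MyDrive/ColabNotebooks.

(Note: the Colab Notebooks directory that is created automatically when Google Drive is mounted has a space in its name. Escaping the space when setting environment variables later did not work, so I simply renamed the directory to ColabNotebooks.)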

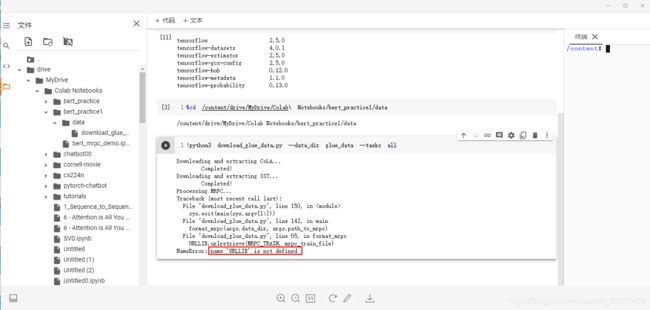
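The space in the default directory name is the kind of thing that breaks unquoted shell expansion. Renaming the directory, as done here, is the simplest fix. As an alternative, the sketch below shows how Python's standard `shlex.quote` can protect such a path before it is spliced into a shell command. The path and the `ls` command are illustrative only and are not part of the original setup.

```python
import shlex

# Illustrative path containing a space (the default Drive folder name).
path_with_space = "/content/drive/MyDrive/Colab Notebooks/bert_practice1"

# shlex.quote wraps the path so a POSIX shell treats it as one argument.
quoted = shlex.quote(path_with_space)
command = f"ls {quoted}"

# shlex.split mimics shell word splitting, which lets us check the result.
unquoted_words = shlex.split(f"ls {path_with_space}")
quoted_words = shlex.split(command)

print(unquoted_words)  # the path is broken into two words
print(quoted_words)    # the path survives as a single word
```

The same quoting applies to any value that ends up inside a `!` command in a notebook cell.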

The demo project files for this article are in the bert_practice1 directory.

Downloading the GLUE dataset fails with: NameError: name 'URLLIB' is not defined

Solution:

Add the following two lines to the script:

import io

URLLIB = urllib.request

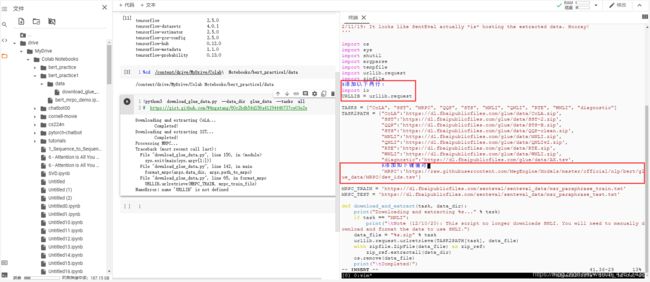
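To see why the alias resolves the NameError, here is a minimal sketch. It assumes the script refers to download helpers through the name `URLLIB` (for example `URLLIB.urlretrieve`), which I have not verified line by line, and it spells out the `import urllib.request` that the alias depends on.

```python
import urllib.request

# The alias the script expects: URLLIB is just another name for the module.
URLLIB = urllib.request

# Both names now refer to the same function object, so calls made through
# URLLIB behave exactly like calls made through urllib.request.
same_function = URLLIB.urlretrieve is urllib.request.urlretrieve
print(same_function)
```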

Add this key-value pair to the TASK2PATH dictionary:

'MRPC': 'https://raw.githubusercontent.com/MegEngine/Models/master/official/nlp/bert/glue_data/MRPC/dev_ids.tsv'

Set the GLUE_DIR environment variable:

%env GLUE_DIR=/content/drive/MyDrive/ColabNotebooks/bert_practice1/data/glue_data

Download the BERT source code

Clone it inside the bert_practice1 directory:

!git clone https://github.com/google-research/bert.git

Set the BERT_DIR environment variable:

%env BERT_DIR=/content/drive/MyDrive/ColabNotebooks/bert_practice1/bert

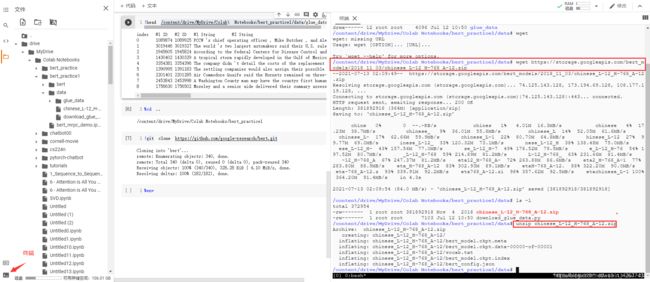

Download and unzip chinese_L-12_H-768_A-12.zip

In the data directory, run wget https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip, then extract the archive with unzip.
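The same download-and-extract step can also be done from a Python cell. The sketch below uses only the standard library; `fetch_and_unzip` is a hypothetical helper name introduced for illustration, not something from the BERT repository.

```python
import pathlib
import urllib.request
import zipfile


def fetch_and_unzip(url, dest_dir):
    """Download a zip archive from url into dest_dir and extract it there."""
    dest = pathlib.Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # Name the local file after the last path component of the URL.
    archive_path = dest / url.rsplit("/", 1)[-1]
    urllib.request.urlretrieve(url, str(archive_path))
    with zipfile.ZipFile(archive_path) as archive:
        archive.extractall(dest)
    return archive_path
```

Calling `fetch_and_unzip("https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip", "data")` would then mirror the wget and unzip commands.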

Set the BERT_BASE_DIR environment variable:

%env BERT_BASE_DIR=/content/drive/MyDrive/ColabNotebooks/bert_practice1/data/chinese_L-12_H-768_A-12
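Before fine-tuning, it can save time to confirm that BERT_BASE_DIR really contains the files the training flags point at. The sketch below is one way to check; `missing_model_files` is a hypothetical helper, and the `.index` suffix reflects my assumption about how the TF1 checkpoint is stored on disk.

```python
import os
import pathlib

# File names taken from the fine-tuning flags; the ".index" suffix is an
# assumption about how the TF1 checkpoint is stored on disk.
EXPECTED_FILES = ("vocab.txt", "bert_config.json", "bert_model.ckpt.index")


def missing_model_files(base_dir):
    """Return the expected files that are absent from base_dir."""
    base = pathlib.Path(base_dir)
    return [name for name in EXPECTED_FILES if not (base / name).is_file()]


# Read the variable set by %env; fall back to a placeholder when unset.
base_dir = os.environ.get("BERT_BASE_DIR", "chinese_L-12_H-768_A-12")
print("missing:", missing_model_files(base_dir))
```

An empty list means every expected file was found.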

Fine-Tuning

Set the OUTPUT_DIR environment variable beforehand:

%env OUTPUT_DIR=/content/drive/MyDrive/ColabNotebooks/bert_practice1/output

!python3 run_classifier.py \

--task_name=MRPC \

--do_train=true \

--do_eval=true \

--data_dir=$GLUE_DIR/MRPC \

--vocab_file=$BERT_BASE_DIR/vocab.txt \

--bert_config_file=$BERT_BASE_DIR/bert_config.json \

--init_checkpoint=$BERT_BASE_DIR/bert_model.ckpt \

--max_seq_length=128 \

--train_batch_size=8 \

--learning_rate=2e-5 \

--num_train_epochs=3.0 \

--output_dir=$OUTPUT_DIR
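A flag that references an environment variable only works if the variable is set and written with its leading `$`. The sketch below assembles the same flags in Python and fails early when a reference cannot be resolved. `FLAGS` and `build_argv` are hypothetical names for illustration; the flag values mirror the fine-tuning command, with every directory written as an environment-variable reference.

```python
import os

# Flags mirroring the fine-tuning command; directories reference env variables.
FLAGS = {
    "task_name": "MRPC",
    "do_train": "true",
    "do_eval": "true",
    "data_dir": "$GLUE_DIR/MRPC",
    "vocab_file": "$BERT_BASE_DIR/vocab.txt",
    "bert_config_file": "$BERT_BASE_DIR/bert_config.json",
    "init_checkpoint": "$BERT_BASE_DIR/bert_model.ckpt",
    "max_seq_length": "128",
    "train_batch_size": "8",
    "learning_rate": "2e-5",
    "num_train_epochs": "3.0",
    "output_dir": "$OUTPUT_DIR",
}


def build_argv(flags, script="run_classifier.py"):
    """Expand env variables and fail early if any reference is unresolved."""
    argv = ["python3", script]
    for name, value in flags.items():
        # expandvars leaves references to unset variables unchanged.
        expanded = os.path.expandvars(value)
        if "$" in expanded:
            raise ValueError(f"unset environment variable in --{name}={value}")
        argv.append(f"--{name}={expanded}")
    return argv
```

The resulting list can be handed to `subprocess.run(build_argv(FLAGS), check=True)`, which also avoids shell word splitting on paths.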

Quite a few errors come up from here on, but almost all of them are caused by running TensorFlow 1 code in a TensorFlow 2 environment.

Error 1:

`AttributeError: module 'tensorflow._api.v2.train' has no attribute 'Optimizer'`

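This is a TF version compatibility problem. Downgrading TF is the recommended fix; as a temporary workaround, add a compatibility shim:

In run_classifier.py, modeling.py, optimization.py, and tokenization.py of the BERT source, replace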

import tensorflow as tf

with the following two lines:

import tensorflow.compat.v1 as tf

tf.disable_v2_behavior()
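Editing four files by hand is easy to get wrong, so the replacement can be scripted. This is a minimal sketch; `patch_tf_import` is a hypothetical helper, and it assumes each file contains the import on a line by itself.

```python
import pathlib
import re

# Match the plain import only when it occupies a whole line.
OLD_IMPORT = re.compile(r"^import tensorflow as tf[ \t]*$", re.MULTILINE)
NEW_IMPORT = "import tensorflow.compat.v1 as tf\ntf.disable_v2_behavior()"


def patch_tf_import(path):
    """Rewrite the TF import in one file; return True if the file changed."""
    file_path = pathlib.Path(path)
    source = file_path.read_text(encoding="utf-8")
    patched, count = OLD_IMPORT.subn(NEW_IMPORT, source)
    if count:
        file_path.write_text(patched, encoding="utf-8")
    return count > 0
```

Running it a second time on the same file is harmless, because the patched import no longer matches the pattern.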

Error 2:

File "/content/drive/MyDrive/ColabNotebooks/bert_practice1/bert/run_classifier.py", line 829, in main

is_per_host = tf.contrib.tpu.InputPipelineConfig.PER_HOST_V2

AttributeError: module 'tensorflow.compat.v1' has no attribute 'contrib'

Replace line 829 of run_classifier.py

is_per_host = tf.contrib.tpu.InputPipelineConfig.PER_HOST_V2

with

is_per_host = tf.estimator.tpu.InputPipelineConfig.PER_HOST_V2

(that is, change contrib to estimator; lines 679, 684, 830, 835, and 861 need the same change)
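Line numbers can shift between checkouts of the BERT repository, so searching for the pattern is more robust than relying on them. The sketch below lists every line that still uses `tf.contrib`; `find_contrib_uses` is a hypothetical helper and the sample text is made up for the demonstration.

```python
def find_contrib_uses(source_text):
    """Return (line_number, stripped_line) for each line using tf.contrib."""
    return [
        (number, line.strip())
        for number, line in enumerate(source_text.splitlines(), start=1)
        if "tf.contrib." in line
    ]


# Tiny made-up sample standing in for a real source file.
sample = "\n".join([
    "import tensorflow.compat.v1 as tf",
    "is_per_host = tf.contrib.tpu.InputPipelineConfig.PER_HOST_V2",
    "run_config = tf.estimator.tpu.RunConfig()",
])
for number, line in find_contrib_uses(sample):
    print(number, line)
```

Passing the text of run_classifier.py or modeling.py instead of `sample` shows which lines still need attention.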

Error 3:

File "/content/drive/MyDrive/ColabNotebooks/bert_practice1/bert/run_classifier.py", line 549, in input_fn

tf.contrib.data.map_and_batch(

AttributeError: module 'tensorflow.compat.v1' has no attribute 'contrib'

Replace the call on line 549

tf.contrib.data.map_and_batch

with

tf.data.experimental.map_and_batch

(I did not dig into the source here, so treat this replacement as unverified)

Error 4:

File "/content/drive/MyDrive/ColabNotebooks/bert_practice1/bert/modeling.py", line 365, in layer_norm

return tf.contrib.layers.layer_norm(

AttributeError: module 'tensorflow.compat.v1' has no attribute 'contrib'

Replace

def layer_norm(input_tensor, name=None):

  """Run layer normalization on the last dimension of the tensor."""

  return tf.contrib.layers.layer_norm(

      inputs=input_tensor, begin_norm_axis=-1, begin_params_axis=-1, scope=name)

with

def layer_norm(input_tensor, name=None):

  """Run layer normalization on the last dimension of the tensor."""

  layer_norma = tf.keras.layers.LayerNormalization(name=name, axis=-1, epsilon=1e-12, dtype=tf.float32)

  return layer_norma(input_tensor)

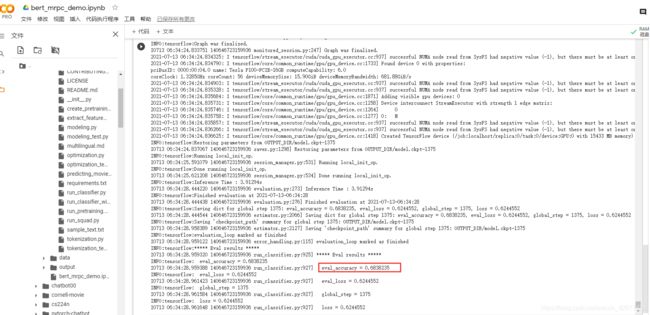
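For readers unfamiliar with what this function computes, the sketch below reproduces the arithmetic of layer normalization on a single vector in plain Python. It is not the Keras implementation; it only shows the normalization along the last axis, with the learned scale and offset left at their initial values of 1 and 0. `layer_norm_row` is a hypothetical name.

```python
import math


def layer_norm_row(values, epsilon=1e-12):
    """Normalize one vector to zero mean and unit variance (gamma=1, beta=0)."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    # epsilon guards against division by zero when all values are equal.
    scale = 1.0 / math.sqrt(variance + epsilon)
    return [(v - mean) * scale for v in values]


normalized = layer_norm_row([1.0, 2.0, 3.0])
print(normalized)
```

In the model this is applied independently to the hidden vector at every token position.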

Results

***** Eval results *****

eval_accuracy = 0.6838235

eval_loss = 0.6244552

global_step = 1375

loss = 0.6244552
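The global_step value can be sanity-checked against the training flags. The sketch below assumes the MRPC training set has 3,668 sentence pairs (the size usually quoted for it; it is not stated in this article) and that the step count is examples divided by batch size, times epochs, truncated to an integer, which is my reading of run_classifier.py.

```python
def num_train_steps(num_examples, batch_size, num_epochs):
    """Total optimizer steps: examples / batch size * epochs, truncated."""
    return int(num_examples / batch_size * num_epochs)


# 3668 is an assumed MRPC training-set size; it is not stated in this article.
steps = num_train_steps(num_examples=3668, batch_size=8, num_epochs=3.0)
print(steps)
```

Under those assumptions the result matches the global_step reported above, which suggests training ran for the full three epochs.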