How the Fashion-MNIST data is made: [Microsoft NNI] An analysis of the fashion-mnist example with NNI

An analysis of the fashion-mnist example with NNI

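- Test system: Ubuntu 16.04

- Python 3.5

fashion-mnist

The fashion-mnist training set contains 60,000 images and the test set contains 10,000 images. Every image is a 28*28 grayscale image, and there are 10 classes in total. The dataset is meant as a replacement for the classic MNIST dataset: a CNN can reach 99.7% accuracy on classic MNIST, which makes MNIST too simple and too clean, and MNIST cannot stand in for all CV tasks.

The fashion-mnist dataset

Code walkthrough

Main program: basic_classification.py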

from __future__ import absolute_import, division, print_function

import argparse
import logging
import os

import tensorflow as tf
from tensorflow import keras
import numpy as np
import nni

LOG = logging.getLogger('basic_classification')
# NNI sets NNI_OUTPUT_DIR for each trial; TensorBoard logs are written there.
TENSORBOARD_DIR = os.environ['NNI_OUTPUT_DIR']  # '/media/ning/CE0AC1720AC157DB/basic_classification'

# import data
fashion_mnist = keras.datasets.fashion_mnist
(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

# class names
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

# add a channel axis and scale pixel values to [0, 1]
train_images = np.expand_dims(train_images, -1).astype(np.float32) / 255.0
test_images = np.expand_dims(test_images, -1).astype(np.float32) / 255.0

# one-hot encode the 10 class labels
train_labels = keras.utils.to_categorical(train_labels, 10)
test_labels = keras.utils.to_categorical(test_labels, 10)


def create_mnist_model(hyper_params):
    layers = [
        keras.layers.Flatten(input_shape=(28, 28, 1)),
        keras.layers.Dense(128, activation=tf.nn.relu),
        keras.layers.Dense(10, activation=tf.nn.softmax)
    ]
    model = keras.Sequential(layers)

    if hyper_params['optimizer'] == 'Adam':
        optimizer = keras.optimizers.Adam(lr=hyper_params['learning_rate'])
    elif hyper_params['optimizer'] == 'SGD':
        optimizer = keras.optimizers.SGD(lr=hyper_params['learning_rate'], momentum=0.9)
    elif hyper_params['optimizer'] == 'Adadelta':
        optimizer = keras.optimizers.Adadelta(lr=hyper_params['learning_rate'], rho=0.95, epsilon=None, decay=0.0)
    elif hyper_params['optimizer'] == 'Adagrad':
        optimizer = keras.optimizers.Adagrad(lr=hyper_params['learning_rate'], epsilon=None, decay=0.0)
    else:
        # fail early instead of hitting an undefined `optimizer` below
        raise ValueError('Unknown optimizer: %s' % hyper_params['optimizer'])

    model.compile(loss=keras.losses.categorical_crossentropy, optimizer=optimizer, metrics=['accuracy'])
    return model


def generate_default_params():
    return {
        'optimizer': 'Adam',
        'learning_rate': 0.001
    }


class SendMetrics(keras.callbacks.Callback):
    def on_epoch_end(self, epoch, logs=None):
        '''
        Run on end of each epoch
        '''
        logs = logs or {}
        LOG.debug(logs)
        # The key is "val_acc" in the TF 1.x Keras used here; newer Keras versions name it "val_accuracy".
        nni.report_intermediate_result(logs["val_acc"])


def train(args, params):
    model = create_mnist_model(params)
    # Use the TensorBoard callback from tf.keras so that model and callbacks come from the same Keras.
    model.fit(train_images, train_labels, batch_size=args.batch_size, epochs=args.epochs, verbose=1,
              validation_data=(test_images, test_labels),
              callbacks=[SendMetrics(), keras.callbacks.TensorBoard(log_dir=TENSORBOARD_DIR)])
    _, acc = model.evaluate(test_images, test_labels, verbose=0)
    # accuracy is a float in [0, 1], so log it with %f (with %d it is truncated to 0)
    LOG.debug('Final result is: %f', acc)
    nni.report_final_result(acc)


if __name__ == '__main__':
    PARSER = argparse.ArgumentParser()
    PARSER.add_argument("--batch_size", type=int, default=200, help="batch size", required=False)
    PARSER.add_argument("--epochs", type=int, default=10, help="Train epochs", required=False)
    PARSER.add_argument("--num_train", type=int, default=60000, help="Number of train samples to be used, maximum 60000", required=False)
    PARSER.add_argument("--num_test", type=int, default=10000, help="Number of test samples to be used, maximum 10000", required=False)
    ARGS, UNKNOWN = PARSER.parse_known_args()

    with tf.Session() as sess:
        sess.run(tf.global_variables_initializer())
        # get parameters from tuner
        RECEIVED_PARAMS = nni.get_next_parameter()
        LOG.debug(RECEIVED_PARAMS)
        PARAMS = generate_default_params()
        PARAMS.update(RECEIVED_PARAMS)
        # train
        train(ARGS, PARAMS)

The code above is the main program that gets executed. The parts to note are listed below; they are also exactly the places that have to change when embedding NNI into a Python program.

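- Import nni.

- Fetch the next set of parameters and update the defaults with them:

RECEIVED_PARAMS = nni.get_next_parameter()
PARAMS.update(RECEIVED_PARAMS)

- Report results to NNI during execution: intermediate results (optional) and the final result.

# In the on_epoch_end method of the SendMetrics class:
nni.report_intermediate_result(logs["val_acc"])
# In the train function:
nni.report_final_result(acc)

Search space file: search_space.json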

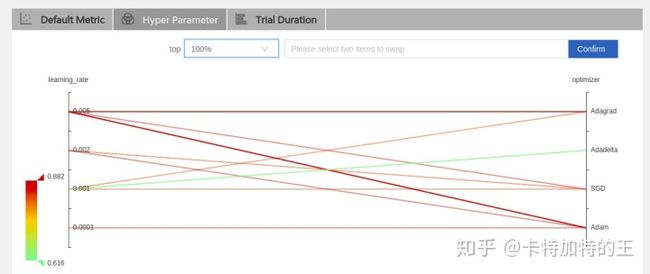

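{
    "optimizer":{"_type":"choice","_value":["Adam", "SGD", "Adadelta", "Adagrad"]},
    "learning_rate":{"_type":"choice","_value":[0.0001, 0.001, 0.002, 0.005]}
}

This search space tries several kinds of optimizer and several learning rates.

choice means that one value is picked from the listed candidates. Many other types are available; see the search space documentation for details.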
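To get a feel for the size of this search space, the sketch below parses the same JSON and enumerates every combination of `choice` values. It is only an illustration: the helper `enumerate_choices` is a made-up name and not part of the NNI API, and NNI's tuners (TPE in this experiment) select configurations adaptively instead of walking the grid like this.

```python
import itertools
import json

# Same content as search_space.json, inlined so the sketch is self-contained.
SEARCH_SPACE_JSON = """
{
    "optimizer": {"_type": "choice", "_value": ["Adam", "SGD", "Adadelta", "Adagrad"]},
    "learning_rate": {"_type": "choice", "_value": [0.0001, 0.001, 0.002, 0.005]}
}
"""


def enumerate_choices(search_space):
    """Return every parameter combination of a search space made only of `choice` entries.

    Hypothetical helper for illustration; it is not part of the NNI API.
    """
    names = sorted(search_space)
    for name in names:
        if search_space[name]["_type"] != "choice":
            raise ValueError("only 'choice' entries are handled in this sketch")
    value_lists = [search_space[name]["_value"] for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


search_space = json.loads(SEARCH_SPACE_JSON)
combinations = enumerate_choices(search_space)
print(len(combinations))  # 4 optimizers x 4 learning rates
print(combinations[0])
```

Four optimizers times four learning rates gives 16 combinations, one more than the `maxTrialNum: 15` set in config.yml, so a single run of this experiment cannot try every combination.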

Configuration file: config.yml

authorName: default
experimentName: fashion-mnist
trialConcurrency: 1
maxExecDuration: 1h
maxTrialNum: 15
#choice: local, remote, pai
trainingServicePlatform: local
searchSpacePath: search_space.json
#choice: true, false
useAnnotation: false
tuner:
  #choice: TPE, Random, Anneal, Evolution, BatchTuner
  #SMAC (SMAC should be installed through nnictl)
  builtinTunerName: TPE
  classArgs:
    #choice: maximize, minimize
    optimize_mode: maximize
trial:
  command: python3 basic_classification.py
  codeDir: .
  gpuNum: 0

See the documentation for the full details. The following points deserve emphasis:

- useAnnotation: if true, the search space is generated from annotations in the code, and there should be no search_space.json; if false, search_space.json must exist. The annotations take the form @nni.variable(nni.choice(option1,option2,...,optionN),name=variable); see the documentation for details.

- optimize_mode: either maximize or minimize. What is reported to NNI here is the accuracy (acc), so maximize is used. If the loss were reported instead, minimize should be used.

- command: the command that runs the trial, here python3 basic_classification.py.

config.yml reference
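As a sketch of the annotation style mentioned under useAnnotation, assuming `useAnnotation: true` and no search_space.json: each annotation is a string literal placed directly above the assignment it controls, so the file still runs as plain Python with the default values. The variable names and the placeholder `accuracy` value are hypothetical, and the exact annotation syntax should be checked against the NNI documentation for the version in use.

```python
# Annotation strings are ordinary string literals: plain Python evaluates and
# discards them, while NNI (with useAnnotation: true) is expected to rewrite
# the statement that follows each one.

"""@nni.variable(nni.choice('Adam', 'SGD', 'Adadelta', 'Adagrad'), name=optimizer)"""
optimizer = 'Adam'

"""@nni.variable(nni.choice(0.0001, 0.001, 0.002, 0.005), name=learning_rate)"""
learning_rate = 0.001

params = {'optimizer': optimizer, 'learning_rate': learning_rate}
print(params)

# Placeholder for the value a real training run would produce (hypothetical).
accuracy = 0.0

"""@nni.report_final_result(accuracy)"""
```

Run outside NNI, this script simply uses `'Adam'` and `0.001`, which is why the annotation style is convenient for debugging.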

Running and results

Running

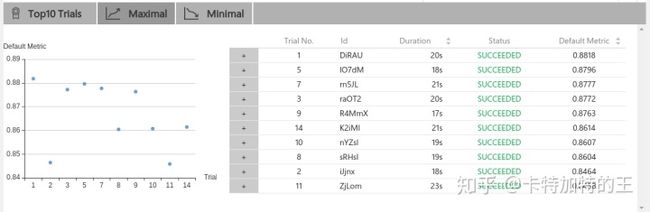

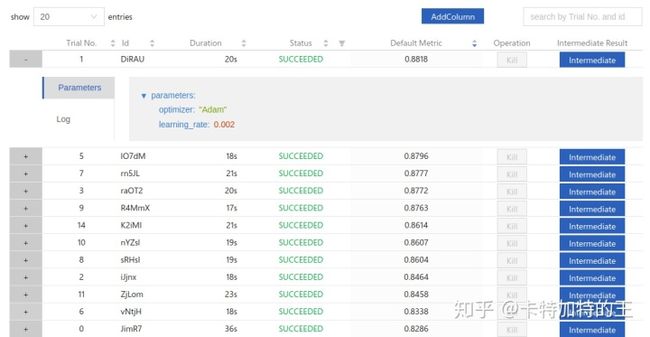

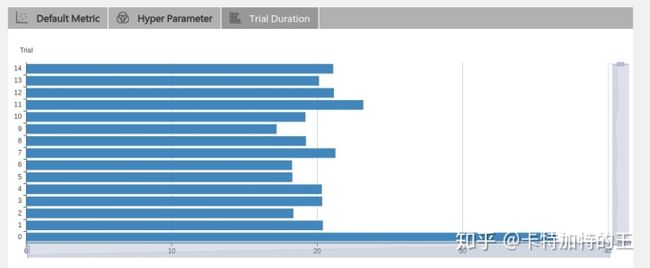

nnictl create --config config.yml

Results

Web UI

The results show that the Adam optimizer with a learning rate of 0.002 reaches an accuracy of 0.8818.

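Debugging tips

- First make sure the Python program runs without NNI embedded. Generating search_space.json from annotations works well for this, since annotated code still runs as ordinary Python. If you wrote search_space.json by hand, you also have to comment out the embedded NNI code in the main program while debugging.

- If a trial fails, run nnictl log stderr or open the log files to read the error message. The message is usually in the stderr file under /home/directory/nni/experiments/<experiment ID>/trials/<trial ID>.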
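One way to follow the first tip without commenting NNI calls in and out is to route them through small wrappers that fall back to local defaults. The sketch below assumes that `NNI_OUTPUT_DIR`, the variable the main program reads, is only set inside an NNI trial; the wrapper names `get_params`, `report_final` and `output_dir` are hypothetical, and only `nni.get_next_parameter()` and `nni.report_final_result()` are NNI calls taken from the main program.

```python
import os

# Assumption: NNI_OUTPUT_DIR is set by NNI for trials and absent otherwise.
RUNNING_UNDER_NNI = 'NNI_OUTPUT_DIR' in os.environ

if RUNNING_UNDER_NNI:
    import nni  # only needed when the script runs as a trial


def get_params(defaults):
    """Merge tuner-supplied parameters over the defaults (hypothetical wrapper)."""
    params = dict(defaults)
    if RUNNING_UNDER_NNI:
        params.update(nni.get_next_parameter())
    return params


def report_final(value):
    """Report the final metric to NNI, or print it when debugging locally."""
    if RUNNING_UNDER_NNI:
        nni.report_final_result(value)
    else:
        print('final result: %f' % value)


def output_dir(fallback='.'):
    """Directory for TensorBoard logs; avoids a KeyError outside NNI."""
    return os.environ.get('NNI_OUTPUT_DIR', fallback)


params = get_params({'optimizer': 'Adam', 'learning_rate': 0.001})
print(params)
print(output_dir())
```

With wrappers like these, `python3 basic_classification.py` can be run directly to check the training code, and the same file can then be launched through `nnictl create --config config.yml` unchanged.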