资金流入流出预测-挑战Baseline几种模型对比

资金流入流出预测-挑战Baseline 赛题的一些常用方法总结:

(一)赛题说明

竞赛中使用的数据主要包含四个部分,分别为用户基本信息数据、用户申购赎回数据、收益率表和银行间拆借利率表。下面分别介绍四组数据。

1.用户信息表

用户信息表: user_profile_table 。 我们总共随机抽取了约 3 万用户,其中部分用户在 2014 年 9 月份第一次出现,这部分用户只在测试数据中 。因此用户信息表是约 2.8 万 个用户的基本数据,在原始数据的基础上处理后,主要包含了用户的性别、城市和星座。具体的字段如下表 1 :

表1用户信息表

| 列名 |

类型 |

含义 |

示例 |

| user_id |

bigint |

用户 ID |

1234 |

| Sex |

bigint |

用户性别( 1 :男, 0 :女 ) |

0 |

| City |

bigint |

所在城市 |

6081949 |

| constellation |

string |

星座 |

射手座 |

2. 用户申购赎回数据表

用户申购赎回数据表: user_balance_table 。里面有 20130701 至 20140831 申购和赎回信息、以及所有的子类目信息, 数据经过脱敏处理。脱敏之后的数据,基本保持了原数据趋势。数据主要包括用户操作时间和操作记录,其中操作记录包括申购和赎回两个部分。金额的单位是分,即 0.01 元人民币。 如果用户今日消费总量为0,即consume_amt=0,则四个字类目为空。

表格 2 :用户申购赎回数据

| 列名 |

类型 |

含义 |

示例 |

| user_id |

bigint |

用户 id |

1234 |

| report_date |

string |

日期 |

20140407 |

| tBalance |

bigint |

今日余额 |

109004 |

| yBalance |

bigint |

昨日余额 |

97389 |

| total_purchase_amt |

bigint |

今日总购买量 = 直接购买 + 收益 |

21876 |

| direct_purchase_amt |

bigint |

今日直接购买量 |

21863 |

| purchase_bal_amt |

bigint |

今日支付宝余额购买量 |

0 |

| purchase_bank_amt |

bigint |

今日银行卡购买量 |

21863 |

| total_redeem_amt |

bigint |

今日总赎回量 = 消费 + 转出 |

10261 |

| consume_amt |

bigint |

今日消费总量 |

0 |

| transfer_amt |

bigint |

今日转出总量 |

10261 |

| tftobal_amt |

bigint |

今日转出到支付宝余额总量 |

0 |

| tftocard_amt |

bigint |

今日转出到银行卡总量 |

10261 |

| share_amt |

bigint |

今日收益 |

13 |

| category1 |

bigint |

今日类目 1 消费总额 |

0 |

| category2 |

bigint |

今日类目 2 消费总额 |

0 |

| category3 |

bigint |

今日类目 3 消费总额 |

0 |

| category4 |

bigint |

今日类目 4 消费总额 |

0 |

注 1 :上述的数据都是经过脱敏处理的,收益为重新计算得到的,计算方法按照简化后的计算方式处理,具体计算方式在下节余额宝收益计算方式中描述。

注 2 :脱敏后的数据保证了今日余额 = 昨日余额 + 今日申购 - 今日赎回,不会出现负值。

3.收益率表

收益表为余额宝在 14 个月内的收益率表: mfd_day_share_interest 。具体字段如表格 3 中所示

表格 3 收益率表

| 列名 |

类型 |

含义 |

示例 |

| mfd_date |

string |

日期 |

20140102 |

| mfd_daily_yield |

double |

万份收益,即 1 万块钱的收益。 |

1.5787 |

| mfd_7daily_yield |

double |

七日年化收益率( % ) |

6.307 |

4.上海银行间同业拆放利率(Shibor)表

银行间拆借利率表是 14 个月期间银行之间的拆借利率(皆为年化利率): mfd_bank_shibor 。具体字段如下表格 4 所示:

表格 4 银行间拆借利率表

| 列名 |

类型 |

含义 |

示例 |

| mfd_date |

String |

日期 |

20140102 |

| Interest_O_N |

Double |

隔夜利率(%) |

2.8 |

| Interest_1_W |

Double |

1周利率(%) |

4.25 |

| Interest_2_W |

Double |

2周利率(%) |

4.9 |

| Interest_1_M |

Double |

1个月利率(%) |

5.04 |

| Interest_3_M |

Double |

3个月利率(%) |

4.91 |

| Interest_6_M |

Double |

6个月利率(%) |

4.79 |

| Interest_9_M |

Double |

9个月利率(%) |

4.76 |

| Interest_1_Y |

Double |

1年利率(%) |

4.78 |

5.收益计算方式

本赛题的余额宝收益方式,主要基于实际余额宝收益计算方法,但是进行了一定的简化,此处计算简化的地方如下:

首先,收益计算的时间不再是会计日,而是自然日,以 0 点为分隔,如果是 0 点之前转入或者转出的金额算作昨天的,如果是 0 点以后转入或者转出的金额则算作今天的。

然后,收益的显示时间,即实际将第一份收益打入用户账户的时间为如下表格,以周一转入周三显示为例,如果用户在周一存入 10000 元,即 1000000 分,那么这笔金额是周一确认,周二是开始产生收益,用户的余额还是 10000 元,在周三将周二产生的收益打入到用户的账户中,此时用户的账户中显示的是 10001.1 元,即 1000110 分。其他时间的计算按照表格中的时间来计算得到。

表格 5 : 简化后余额宝收益计算表

| 转入时间 |

首次显示收益时间 |

| 周一 |

周三 |

| 周二 |

周四 |

| 周三 |

周五 |

| 周四 |

周六 |

| 周五 |

下周二 |

| 周六 |

下周三 |

| 周天 |

下周三 |

6.选手需要提交的结果表:

表 格 6 选手提交结果表: tc_comp_predict_table

| 字段 |

类型 |

含义 |

示例 |

| report_date |

bigint |

日期 |

20140901 |

| purchase |

bigint |

申购总额 |

40000000 |

| redeem |

bigint |

赎回总额 |

30000000 |

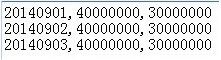

每一行数据是一天对申购、赎回总额的预测值, 2014 年 9 月每天一行数据,共 30 行数据。 Purchase 和 redeem 都是金额数据,精确到分,而不是精确到元。

评分数据格式要求与“选手结果数据样例文件”一致,结果表命名为:tc_comp_predict_table, 字段之间以逗号为分隔符,格式如下图:

7.评估指标

评估指标的设计主要期望选手对未来 30 天内每一天申购和赎回的总量数据预测的越准越好,同时考虑到可能存在的多种情况。譬如有些选手在 30 天中 29 天预测都是非常精准的但是某一天预测的结果可能误差很大,而有些选手在 30 天中每天的预测都不是很精准误差较大,如果采用绝对误差则可能导致前者的成绩比后者差,而在实际业务中可能更倾向于前者。所以最终选用积分式的计算方法:每天的误差选用相对误差来计算,然后根据用户预测申购和赎回的相对误差,通过得分函数映射得到一个每天预测结果的得分,将 30 天内的得分汇总,然后结合实际业务的倾向,对申购赎回总量预测的得分情况进行加权求和,得到最终评分。具体的操作如下:

1) 计算所有用户在测试集上每天的申购及赎回总额与实际情况总额的误差。

2) 申购预测得分与 Purchasei 相关,赎回预测得分与 Redeemi 相关 , 误差与得分之间的计算公式不公布,但保证该计算公式为单调递减的,即误差越小,得分越高,误差与大,得分越低。当第 i 天的申购误差 Purchasei =0 ,这一天的得分为 10 分;当 Purchasei > 0.3 ,其得分为 0 。

3) 最后公布总积分 = 申购预测得分 *45%+ 赎回预测得分 *55% 。

(二)数据集下载

数据集下载地址

(三)几种解题算法

1.Arima和机器学习模型

import pandas as pd

import matplotlib.pyplot as plt

import statsmodels.api as sm

# 给数据添加时间维度

def add_timestamp(data):

# 时间维度解析

data['report_date'] = pd.to_datetime(data['report_date'],format='%Y%m%d')

# 添加时间维度

data['day'] = data['report_date'].dt.day

data['month'] = data['report_date'].dt.month

data['year'] = data['report_date'].dt.year

data['week'] = data['report_date'].dt.week

data['weekday'] = data['report_date'].dt.weekday

return data

def get_total_balance(data,date):

#在内存中copy一份

df_temp = data.copy()

#按照report_date进行聚合

df_temp = df_temp.groupby(['report_date'])['total_purchase_amt','total_redeem_amt'].sum()

#还原report_date字段,重新索引

df_temp.reset_index(inplace=True)

# 筛选大于date的数据

df_temp = df_temp[(df_temp['report_date'] >= date)].reset_index(drop=True)

return df_temp

#生成测试数据

import datetime

import numpy as np

def generate_test_data(data):

total_balance=data.copy()

# 生成2014-09-01到2014-09-30

start = datetime.datetime(2014, 9, 1)

end = datetime.datetime(2014,10,1)

testdata = []

while start != end:

# 三个字段:date,total_purchase_amt,total_redeem_amt

temp = [start,np.nan, np.nan]

testdata.append(temp)

# 日期+1

start += datetime.timedelta(days=1)

# 封装testdata

testdata = pd.DataFrame(testdata)

testdata.columns = total_balance.columns

#将testdata合并到total_balance中

total_balance = pd.concat([total_balance,testdata],axis=0)

return total_balance.reset_index(drop=True)

from lightgbm import LGBMRegressor

from xgboost import XGBRegressor # 环境

from sklearn.ensemble import RandomForestRegressor

from sklearn.ensemble import StackingRegressor

class Cash:

def __init__(self,file_path):

data = pd.read_csv(file_path)

data = add_timestamp(data)

#全局用原始数据

self.data = data

# 0 代表0+1,周一;6代表6+1 周日

# print(data['weekday'].value_counts())

# 筛选稳定期的数据,即2014-03-01之后的数据

total_balance = get_total_balance(data, '2014-03-01')

# 生成测试数据

total_balance = generate_test_data(total_balance)

# 对total_balance 添加时间维度

total_balance = add_timestamp(total_balance)

from chinese_calendar import is_workday, is_holiday

total_balance['is_holiday'] = total_balance['report_date'].apply(lambda x: is_holiday(x))

total_balance['is_holiday'] = total_balance['is_holiday'].replace({True: 1, False: 0})

'''

异常情况修正(调整周期因子,因为按周期因子没有考虑中国假期因素)

1 不是真的工作日,那么设为假日(按周日处理)

2 不是真的假日,工作日为True的,按周一处理

'''

re_weekday = []

for index, (weekday, is_holiday) in enumerate(

zip(total_balance['weekday'].values, total_balance['is_holiday'].values)):

r = total_balance['weekday'].values[index]

# 如果不是周六日,但是假日,按周日处理

if weekday not in [5, 6] and is_holiday == 1:

r = 6

if weekday in [5, 6] and is_holiday == 0:

r = 0

re_weekday.append(r)

# 更新total_balance的weekday

total_balance['weekday'] = re_weekday

self.total_balance = total_balance

'''

通过周期因子预测

month_index,预测几月份数据

'''

def predit_weekday(self,month_index = 9):

temp = self.total_balance.copy()

total_balance = temp.copy() # 选择2014年3月1日到8月31日的数据

total_balance = total_balance[['report_date', 'total_purchase_amt', 'total_redeem_amt']]

# 3月到目前的数据

total_balance = total_balance[(total_balance['report_date'] >= pd.to_datetime('2014-03-01')) & (

total_balance['report_date'] < pd.to_datetime('2014-' + str(month_index) + '-01'))]

# 对total_balance 添加时间维度

total_balance = add_timestamp(total_balance)

weekday_weight = total_balance[['weekday', 'total_purchase_amt', 'total_redeem_amt']].groupby('weekday',

as_index=False).mean()

weekday_weight = weekday_weight.rename(

columns={'total_purchase_amt': 'purchase_weekday', 'total_redeem_amt': 'redeem_weekday'})

# 除以均值,得到weekday factor

weekday_weight['purchase_weekday'] /= np.mean(total_balance['total_purchase_amt'])

weekday_weight['redeem_weekday'] /= np.mean(total_balance['total_redeem_amt'])

# 合并到原数据集,增加了purchase_weekday,redeem_weekday 周期因子字段

total_balance = pd.merge(total_balance, weekday_weight, on='weekday', how='left')

# 分别统计周一到周日在1-31号出现的频次

weekday_count = total_balance[['weekday', 'day', 'report_date']].groupby(['weekday', 'day'],

as_index=False).count()

weekday_count = pd.merge(weekday_count, weekday_weight, on='weekday')

# 根据频次对周期因子purchase_weekday,redeem_weekday进行加权,获得日期因子(day_factor)

# 日期因子=周期因子*(周一到周日在(1-31)号出现的次数/共有几个月)

weekday_count['purchase_weekday'] *= weekday_count['report_date'] / len(np.unique(total_balance['month']))

weekday_count['redeem_weekday'] *= weekday_count['report_date'] / len(np.unique(total_balance['month']))

# 按照day进行求和 => 日期因子

day_rate = weekday_count.drop(['weekday', 'report_date'], axis=1).groupby('day', as_index=False).sum()

# 按照day 得到均值,即:1号均值,2号均值... 基线

day_mean = total_balance[['day', 'total_purchase_amt', 'total_redeem_amt']].groupby('day',

as_index=False).mean()

day_pred = pd.merge(day_mean, day_rate, on='day', how='left')

# 去掉周期以后的amt,作为base也就是去掉day weight

# 1-31号申购赎回平均值 / 日期因子

day_pred['total_purchase_amt'] /= day_pred['purchase_weekday']

day_pred['total_redeem_amt'] /= day_pred['redeem_weekday']

# 生成测试数据

for index, row in day_pred.iterrows():

if month_index in (2, 4, 6, 9) and row['day'] == 31:

break

# 添加report_date字段

day_pred.loc[index, 'report_date'] = pd.to_datetime(

'2014-0' + str(month_index) + '-' + str(int(row['day'])))

# 基于周期因子计算

day_pred['weekday'] = day_pred.report_date.dt.weekday

day_pred = day_pred[['report_date', 'weekday', 'total_purchase_amt', 'total_redeem_amt']]

# 与weekday_weight因子合并

day_pred = pd.merge(day_pred, weekday_weight, on='weekday')

day_pred['total_purchase_amt'] *= day_pred['purchase_weekday']

day_pred['total_redeem_amt'] *= day_pred['redeem_weekday']

day_pred = day_pred.sort_values('report_date')[['report_date', 'total_purchase_amt', 'total_redeem_amt']]

day_pred = day_pred.reset_index(drop=True)

day_pred['report_date'] = day_pred['report_date'].apply(lambda x: str(x).replace('-', '')[:8])

# day_pred.to_csv('result_weekday.csv', header=None, index=None)

day_pred = day_pred.rename(columns={'total_purchase_amt': 'purchase'})

day_pred = day_pred.rename(columns={'total_redeem_amt': 'redeem'})

self.day_pred = day_pred

self.day_pred.to_csv('day_pred_table.csv', index=None, header=None)

def predit_arima(self,start_date='2014-09-01',end_date='2014-09-30'):

data = self.data.copy()

total_balance = data.groupby(['report_date'])['total_purchase_amt', 'total_redeem_amt'].sum()

# 提取puechase 和 redeem

purchase = total_balance[['total_purchase_amt']]

redeem = total_balance[['total_redeem_amt']]

# 进行一阶差分

# 一阶差分之后,-7.9小于1%,5%,10%的统计,所以很好的拒绝了原假设(不稳定)=>稳定

diff1 = purchase.diff(1)

sm.tsa.stattools.adfuller(diff1[1:])

# 购买预测

# 使用ARIMA进行预测

from statsmodels.tsa.arima_model import ARIMA

# 选择p,q

model = ARIMA(purchase, order=(7, 1, 5)).fit()

# 对购买purchase进行预测,使用typ='levels' 得到原始数据Level的预测值

purchase_pred = model.predict(start_date, end_date, typ='levels')

# 赎回预测

# 进行一阶差分

# 一阶差分之后,-7.9小于1%,5%,10%的统计,所以很好的拒绝了原假设(不稳定)=>稳定

diff2 = redeem.diff(1)

sm.tsa.stattools.adfuller(diff2[1:])

# 选择p,q

model = ARIMA(redeem, order=(7, 1, 5)).fit()

# 对购买purchase进行预测,使用typ='levels' 得到原始数据Level的预测值

redeem_pred = model.predict(start_date, end_date, typ='levels')

result = pd.DataFrame()

result['report_date'] = purchase_pred.index

result['purchase'] = purchase_pred.values

result['redeem'] = redeem_pred.values

result['report_date'] = result['report_date'].apply(lambda x: str(x).replace('-', '')[:8])

# print(result)

self.arima_pred = result

self.arima_pred.to_csv('arima_pred_table_result.csv', index=None, header=None)

# 划分训练集和测试集

def _init_split_test(self):

total_balance = self.total_balance.copy()

# 对total_balance 添加时间维度和周期因子

total_balance = add_timestamp(total_balance)

weekday_weight = total_balance[['weekday', 'total_purchase_amt', 'total_redeem_amt']].groupby('weekday', as_index=False).mean()

weekday_weight = weekday_weight.rename(

columns={'total_purchase_amt': 'purchase_weekday', 'total_redeem_amt': 'redeem_weekday'})

# 除以均值,得到weekday factor

weekday_weight['purchase_weekday'] /= np.mean(total_balance['total_purchase_amt'])

weekday_weight['redeem_weekday'] /= np.mean(total_balance['total_redeem_amt'])

# 合并到原数据集,增加了purchase_weekday,redeem_weekday 周期因子字段

total_balance = pd.merge(total_balance, weekday_weight, on='weekday', how='left')

# 切分测试集和训练集

train = total_balance[total_balance['report_date'] <= '2014-08-31']

test = total_balance[total_balance['report_date'] > '2014-08-31']

#因为测试集中没有购买和赎回信息,所以训练集不考虑购买和赎回的关系,全都不做特征咧

train_purchase_y = train.pop('total_purchase_amt')

train_redeem_y = train.pop('total_redeem_amt')

train_X = train.drop(columns=['report_date'], axis=1)

test_X = test.drop(columns=['total_purchase_amt', 'total_redeem_amt','report_date'], axis=1)

return train_X,test_X,train_purchase_y,train_redeem_y,train,test

def simple_predict(self):

train_X, test_X, train_purchase_y, train_redeem_y, train, test = self._init_split_test()

# LGB 模型预测

model_LGBMRegressor = LGBMRegressor()

model_LGBMRegressor.fit(train_X, train_purchase_y)

pred_purchase = model_LGBMRegressor.predict(test_X)

model_LGBMRegressor = LGBMRegressor()

model_LGBMRegressor.fit(train_X, train_redeem_y)

redeem_pred = model_LGBMRegressor.predict(test_X)

# XGB预测

# model_XGBRegressor = XGBRegressor()

# model_XGBRegressor.fit(train_X, train_purchase_y)

# pred_purchase = model_XGBRegressor.predict(test_X)

#

# model_XGBRegressor = XGBRegressor()

# model_XGBRegressor.fit(train_X, train_redeem_y)

# redeem_pred = model_XGBRegressor.predict(test_X)

result = pd.DataFrame()

result['report_date'] = test['report_date'].apply(lambda x: str(x).replace('-', '')[:8])

result['purchase'] = pred_purchase

result['redeem'] = redeem_pred

self.lgb_pred = result

self.lgb_pred.to_csv('lgb_table_result.csv', index=None, header=None)

# 使用融合模型测试

def stacking_regressor(self):

estimators = [

('xgb', XGBRegressor()),

('lgb', LGBMRegressor(random_state=42))]

reg = StackingRegressor(

estimators=estimators,

final_estimator=RandomForestRegressor(n_estimators=10, random_state=42))

train_X, test_X, train_purchase_y, train_redeem_y, train, test = self._init_split_test()

reg.fit(train_X, train_purchase_y)

pred_purchase = reg.predict(test_X)

reg.fit(train_X, train_redeem_y)

redeem_pred = reg.predict(test_X)

result = pd.DataFrame()

result['report_date'] = test['report_date'].apply(lambda x: str(x).replace('-', '')[:8])

result['purchase'] = pred_purchase

result['redeem'] = redeem_pred

self.lgb_pred = result

self.lgb_pred.to_csv('reg_table_result.csv', index=None, header=None)

if __name__ == "__main__":

cash = Cash('data/user_balance_table.csv')

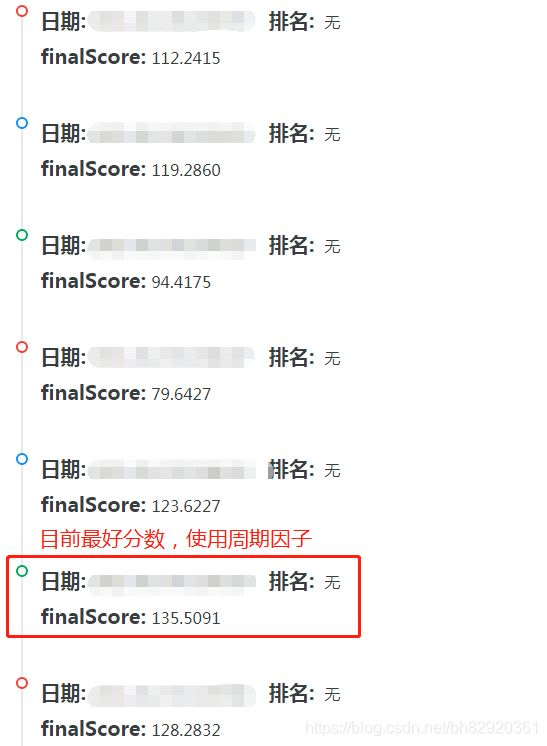

# arima预测baseline 101分

cash.predit_arima()

# 周期因子打印 135分

cash.predit_weekday()

# 简单模型预测模型预测 lgb : 128分 ; xgb : 113.5856

cash.simple_predict()

# 使用融合模型(lgb,xgb+随机森林) 113.2798

cash.stacking_regressor()2.CNN模型

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from keras import optimizers

from keras.callbacks import EarlyStopping

from keras.layers import Input, Conv1D, MaxPooling1D, Dense, Dropout, Flatten

from keras.models import Model

from sklearn.metrics import mean_absolute_error

from sklearn.preprocessing import MinMaxScaler

#119.2860分

user_balance = pd.read_csv('data/user_balance_table.csv')

# user_profile = pd.read_csv('user_profile_table.csv')

df_tmp = user_balance.groupby(['report_date'])['total_purchase_amt', 'total_redeem_amt'].sum()

df_tmp.index = pd.to_datetime(df_tmp.index, format='%Y%m%d')

holidays = ('20130813', '20130902', '20131001', '20131111', '20130919', '20131225', '20140101', '20140130', '20140131',

'20140214', '20140405', '20140501', '20140602', '20140802', '20140901', '20140908')

def create_features(timeindex):

n = len(timeindex)

features = np.zeros((n, 4))

features[:, 0] = timeindex.day.values / 31

features[:, 1] = timeindex.month.values / 12

features[:, 2] = timeindex.weekday.values / 6

for i in range(n):

if timeindex[i].strftime('%Y%m%d') in holidays:

features[i, 3] = 1

return features

features = create_features(df_tmp.index)

september = pd.to_datetime(['201409%02d' % i for i in range(1, 31)])

features_sep = create_features(september)

scaler_pur = MinMaxScaler()

scaler_red = MinMaxScaler()

data_pur = scaler_pur.fit_transform(df_tmp.values[:, 0:1])

data_red = scaler_red.fit_transform(df_tmp.values[:, 1:2])

def create_dataset(data, back, forward=30):

n_samples = len(data) - back - forward + 1

X, Y = np.zeros((n_samples, back, data.shape[-1])), np.zeros((n_samples, forward, data.shape[-1]))

for i in range(n_samples):

X[i, ...] = data[i:i + back, :]

Y[i, ...] = data[i + back:i + back + forward, :]

return X, Y

def build_cnn(X_trn, lr, n_outputs, dropout_rate):

inputs = Input(X_trn.shape[1:])

z = Conv1D(64, 14, padding='valid', activation='relu', kernel_initializer='he_uniform')(inputs)

# z = MaxPooling1D(2)(z)

z = Conv1D(128, 7, padding='valid', activation='relu', kernel_initializer='he_uniform')(z)

z = MaxPooling1D(2)(z)

z = Conv1D(256, 3, padding='valid', activation='relu', kernel_initializer='he_uniform')(z)

z = Conv1D(256, 3, padding='valid', activation='relu', kernel_initializer='he_uniform')(z)

z = MaxPooling1D(2)(z)

z = Flatten()(z)

z = Dropout(dropout_rate)(z)

z = Dense(128, activation='relu', kernel_initializer='he_uniform')(z)

z = Dropout(dropout_rate)(z)

z = Dense(84, activation='relu', kernel_initializer='he_uniform')(z)

outputs = Dense(n_outputs)(z)

model = Model(inputs=inputs, outputs=outputs)

adam = optimizers.Adam(lr=lr)

model.compile(loss='mse', optimizer=adam, metrics=['mae'])

model.summary()

return model

back = 60

forward = 30

X_pur_data, Y_pur_data = create_dataset(data_pur, back, forward)

X_red_data, Y_red_data = create_dataset(data_red, back, forward)

X_features, Y_features = create_dataset(features, back, forward)

Y_features = np.concatenate((Y_features, np.zeros((Y_features.shape[0], back-forward, Y_features.shape[-1]))), axis=1)

# X_pur, X_red = np.concatenate((X_pur_data, X_features, Y_features), axis=-1), np.concatenate((X_red_data, X_features, Y_features), axis=-1)

# X_pur_trn, X_pur_val, X_red_trn, X_red_val = X_pur[:-forward, ...], X_pur[-1:, ...], X_red[:-forward, ...], X_red[-1:, ...]

# Y_pur_trn, Y_pur_val, Y_red_trn, Y_red_val = Y_pur_data[:-forward, ...], Y_pur_data[-1:, ...], Y_red_data[:-forward, ...], Y_red_data[-1:, ...]

Y_fea_sep = np.concatenate((features_sep, np.zeros((back-forward, features_sep.shape[-1]))), axis=0)

# X_pur_tst = np.concatenate((data_pur[-back:, :], features[-back:, :], Y_fea_sep), axis=-1)[None, ...]

# X_red_tst = np.concatenate((data_red[-back:, :], features[-back:, :], Y_fea_sep), axis=-1)[None, ...]

X = np.concatenate((X_pur_data, X_red_data, X_features, Y_features), axis=-1)

Y = np.concatenate((Y_pur_data, Y_red_data), axis=1)

X_trn, X_val, Y_trn, Y_val = X[:-forward, ...], X[-1:, ...], Y[:-forward, ...], Y[-1:, ...]

X_tst = np.concatenate((data_pur[-back:, :], data_red[-back:, :], features[-back:, :], Y_fea_sep), axis=-1)[None, ...]

cnn = build_cnn(X_trn, lr=0.0008, n_outputs=2 * forward, dropout_rate=0.5)

history = cnn.fit(X_trn, Y_trn, batch_size=32, epochs=1000, verbose=2,

validation_data=(X_val, Y_val),

callbacks=[EarlyStopping(monitor='val_mae', patience=200, restore_best_weights=True)])

plt.figure(figsize=(8, 5))

plt.plot(history.history['mae'], label='train mae')

plt.plot(history.history['val_mae'], label='validation mae')

plt.ylim([0, 0.2])

plt.legend()

plt.show()

def plot_prediction(y_pred, y_true):

plt.figure(figsize=(16, 4))

plt.plot(np.squeeze(y_pred), label='prediction')

plt.plot(np.squeeze(y_true), label='true')

plt.legend()

plt.show()

print('MAE: %.3f' % mean_absolute_error(np.squeeze(y_pred), np.squeeze(y_true)))

pred = cnn.predict(X_val)

plot_prediction(pred, Y_val)

history = cnn.fit(X, Y, batch_size=32, epochs=500, verbose=2,

callbacks=[EarlyStopping(monitor='mae', patience=30, restore_best_weights=True)])

plt.figure(figsize=(8, 5))

plt.plot(history.history['mae'], label='train mae')

plt.legend()

plt.show()

print(cnn.evaluate(X, Y, verbose=2))

pred_tst = cnn.predict(X_tst)

pur_sep = scaler_pur.inverse_transform(pred_tst[:, :forward].transpose())

red_sep = scaler_red.inverse_transform(pred_tst[:, forward:].transpose())

test_user = pd.DataFrame({'report_date': [20140900 + i for i in range(1, 31)]})

test_user['pur'] = pur_sep.astype('int')

test_user['red'] = red_sep.astype('int')

test_user.to_csv('cnn_table_result.csv', encoding='utf-8', index=False, header=False)3.RNN(LSTM模型)

import pandas as pd

import matplotlib.pyplot as plt

import math

import numpy

import pandas

from keras.layers import LSTM, RNN, GRU, SimpleRNN

from keras.layers import Dense, Dropout

from keras.callbacks import EarlyStopping

import matplotlib.pyplot as plt

from keras.models import Sequential

from sklearn.preprocessing import MinMaxScaler

import os

numpy.random.seed(2019)

class RNNModel(object):

def __init__(self, look_back=1, epochs_purchase=20, epochs_redeem=40, batch_size=1, verbose=2, patience=10,

store_result=False):

self.look_back = look_back

self.epochs_purchase = epochs_purchase

self.epochs_redeem = epochs_redeem

self.batch_size = batch_size

self.verbose = verbose

self.store_result = store_result

self.patience = patience

self.purchase = df_tmp.values[:, 0:1]

self.redeem = df_tmp.values[:, 1:2]

def access_data(self, data_frame):

# load the data set

data_set = data_frame

data_set = data_set.astype('float32')

# LSTMs are sensitive to the scale of the input data, specifically when the sigmoid (default) or tanh activation functions are used. It can be a good practice to rescale the data to the range of 0-to-1, also called normalizing.

scaler = MinMaxScaler(feature_range=(0, 1))

data_set = scaler.fit_transform(data_set)

# reshape into X=t and Y=t+1

train_x, train_y, test = self.create_data_set(data_set)

# reshape input to be [samples, time steps, features]

train_x = numpy.reshape(train_x, (train_x.shape[0], 1, train_x.shape[1]))

return train_x, train_y, test, scaler

# convert an array of values into a data set matrix

def create_data_set(self, data_set):

data_x, data_y = [], []

for i in range(len(data_set) - self.look_back - 30):

a = data_set[i:(i + self.look_back), 0]

data_x.append(a)

data_y.append(list(data_set[i + self.look_back: i + self.look_back + 30, 0]))

# print(numpy.array(data_y).shape)

return numpy.array(data_x), numpy.array(data_y), data_set[-self.look_back:, 0].reshape(1, 1, self.look_back)

def rnn_model(self, train_x, train_y, epochs):

model = Sequential()

model.add(LSTM(64, input_shape=(1, self.look_back), return_sequences=True))

model.add(LSTM(32, return_sequences=False))

model.add(Dense(32))

model.add(Dense(30))

model.compile(loss='mean_squared_error', optimizer='adam')

model.summary()

early_stopping = EarlyStopping('loss', patience=self.patience)

history = model.fit(train_x, train_y, epochs=epochs, batch_size=self.batch_size, verbose=self.verbose,

callbacks=[early_stopping])

return model

def predict(self, model, data):

prediction = model.predict(data)

return prediction

def plot_show(self, predict):

predict = predict[['purchase', 'redeem']]

predict.plot()

plt.show()

def run(self):

purchase_train_x, purchase_train_y, purchase_test, purchase_scaler = self.access_data(self.purchase)

redeem_train_x, redeem_train_y, redeem_test, redeem_scaler = self.access_data(self.redeem)

purchase_model = self.rnn_model(purchase_train_x, purchase_train_y, self.epochs_purchase)

redeem_model = self.rnn_model(redeem_train_x, redeem_train_y, self.epochs_redeem)

purchase_predict = self.predict(purchase_model, purchase_test)

redeem_predict = self.predict(redeem_model, redeem_test)

test_user = pandas.DataFrame({'report_date': [20140900 + i for i in range(1, 31)]})

purchase = purchase_scaler.inverse_transform(purchase_predict).reshape(30, 1)

redeem = redeem_scaler.inverse_transform(redeem_predict).reshape(30, 1)

test_user['purchase'] = purchase

test_user['redeem'] = redeem

print(test_user)

"""Store submit file"""

if self.store_result is True:

test_user.to_csv('lstm_table_result.csv', encoding='utf-8', index=None, header=None)

"""plot result picture"""

self.plot_show(test_user)

if __name__ == '__main__':

# 112.2415分

ubt = pd.read_csv('data/user_balance_table.csv', parse_dates=(['report_date']))

'''

plt.style.use('fivethirtyeight') # For plots

plt.rcParams['figure.figsize'] = (25, 4.0) # set figure size

ubt[['total_purchase_amt', 'total_redeem_amt']].plot()

plt.grid(True, linestyle="-", color="green", linewidth="0.5")

plt.legend()

plt.title('purchase and redeem of every month')

plt.gca().spines["top"].set_alpha(0.0)

plt.gca().spines["bottom"].set_alpha(0.3)

plt.gca().spines["right"].set_alpha(0.0)

plt.gca().spines["left"].set_alpha(0.3)

plt.show()

'''

df_tmp = ubt.groupby(['report_date'])['total_purchase_amt', 'total_redeem_amt'].sum()

initiation = RNNModel(look_back=40, epochs_purchase=150, epochs_redeem=230, batch_size=16, verbose=2, patience=50,

store_result=True)

initiation.run()4.成绩对比

(四)免责声明

以上内容部分资料参考来自网络,如有侵权还请告知