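Running a Horizontal Federated Logistic Regression Task with FATE on Two Machines

Contents

I. Environment setup

II. Horizontal federated learning

1. Data processing

2. Data upload

3. Editing the training configuration files (on machine B)

4. Submitting the training job (on machine B)

III. Model evaluation

1. Editing the configuration files

2. Submitting the job

3. Viewing the evaluation results

IV. Removing the deployment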

I. Environment setup

For the FATE environment configuration, see the earlier article "Deploying a Multi-Machine Federated Learning Environment with KubeFATE (Part 2)":

https://blog.csdn.net/SAGIRIsagiri/article/details/124127258

Basic workflow:

- Data processing

- Data upload

- Model training

- Model prediction

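II. Horizontal federated learning

1. Data processing

The dataset is the breast cancer dataset built into sklearn. To simulate horizontal federated learning, it is split by rows so that each party holds different samples with the same features.

The dataset has 569 samples and 30 features (in fact 10 attributes, each reported three times: as the mean, the standard error, and the worst value) plus one label (1: benign, 0: malignant; the ratio 1:0 is 357:212).

After shuffling, the first 200 rows become machine A's data and are saved as breast_1_train.csv, the next 269 rows become machine B's data and are saved as breast_2_train.csv, and the last 100 rows are held out as test data and saved as breast_eval.csv.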
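As a quick check on the arithmetic, the three slices should add up to the 569 rows of the dataset. A minimal sketch follows; the helper name `split_sizes` is hypothetical and is used here only for illustration.

```python
def split_sizes(n_total, n_train, n_host):
    """Return (host, guest, eval) row counts for a sequential split.

    The first n_train rows are used for training. Of those, the first n_host
    go to machine A (host) and the rest to machine B (guest). Everything after
    the training rows is held out for evaluation.
    """
    if not 0 <= n_host <= n_train <= n_total:
        raise ValueError("expected 0 <= n_host <= n_train <= n_total")
    return n_host, n_train - n_host, n_total - n_train


host_rows, guest_rows, eval_rows = split_sizes(569, 469, 200)
print(host_rows, guest_rows, eval_rows)  # prints: 200 269 100
```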

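Run the following code in an environment with the required Python libraries installed. It writes three files to the current directory, breast_1_train.csv, breast_2_train.csv and breast_eval.csv, which are the host's training set, the guest's training set and the evaluation set, respectively.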

from sklearn.datasets import load_breast_cancer
import pandas as pd

breast_dataset = load_breast_cancer()
breast = pd.DataFrame(breast_dataset.data, columns=breast_dataset.feature_names)
breast = (breast - breast.mean()) / breast.std()  # z-score standardization

# Rename the feature columns to x0, x1, ..., x29
columns = {}
for idx, n in enumerate(breast.columns.values.tolist()):
    columns[n] = "x%d" % idx
breast = breast.rename(columns=columns)

breast['y'] = breast_dataset.target
breast.insert(0, 'idx', range(breast.shape[0]))  # sample ID as the first column

breast = breast.sample(frac=1)  # shuffle the rows

train = breast.iloc[:469]
eval_data = breast.iloc[469:]  # named eval_data to avoid shadowing the built-in eval

breast_1_train = train.iloc[:200]
breast_1_train.to_csv('breast_1_train.csv', index=False, header=True)

breast_2_train = train.iloc[200:]
breast_2_train.to_csv('breast_2_train.csv', index=False, header=True)

eval_data.to_csv('breast_eval.csv', index=False, header=True)
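Before uploading, it may be worth checking that the generated files have the layout the later steps rely on: an idx column, features x0 to x29, and a binary label y. The sketch below uses only the standard library; the function name `check_csv_layout` is hypothetical, and the demo runs on a one-row in-memory sample rather than on the real files.

```python
import csv
import io

# Column layout produced by the script above: idx, x0..x29, y
EXPECTED_HEADER = ["idx"] + [f"x{i}" for i in range(30)] + ["y"]


def check_csv_layout(text):
    """Return the number of data rows if the CSV text has the expected layout."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)
    if header != EXPECTED_HEADER:
        raise ValueError(f"unexpected header, starts with {header[:3]}")
    n_rows = 0
    for row in reader:
        if len(row) != len(EXPECTED_HEADER):
            raise ValueError(f"data row {n_rows} has {len(row)} fields")
        if row[-1] not in ("0", "1"):
            raise ValueError(f"data row {n_rows} has a non-binary label: {row[-1]}")
        n_rows += 1
    return n_rows


# Tiny in-memory sample: the header plus one data row
sample = ",".join(EXPECTED_HEADER) + "\n" + ",".join(["7"] + ["0.0"] * 30 + ["1"]) + "\n"
print(check_csv_layout(sample))  # prints: 1
```

For the real files, pass the file contents instead, for example `check_csv_layout(open('breast_1_train.csv').read())`; with the split described above the three files should report 200, 269 and 100 rows.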

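2. Data upload

First copy the data files onto the virtual machines. Once FATE is running, the cluster directory is visible under /data/projects/fate. Enter the shared_dir folder inside the cluster directory (confs-10000); it is shared between the host and the docker containers. Go into examples and create a mydata folder there to hold the data. The files can be uploaded with the rz file transfer tool: install it with the commands below, run it, and pick the files in the file chooser that pops up. Upload the files on both machines.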

# sudo apt-get install lrzsz

# rz -be

Then enter the python container:

# docker exec -it confs-10000_python_1 bash

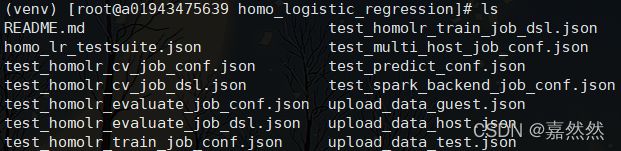

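# cd examples/mydata //the data uploaded above is here

FATE ships reference implementations of many common machine learning models, located under federatedml-1.x-examples in examples. This walkthrough uses logistic regression, i.e. homo_logistic_regression.

First, on machine A, go into homo_logistic_regression and edit upload_data_host.json to upload training set 1 into the system.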

# vi upload_data_host.json

{

//path to the data file; an absolute path is less error-prone

"file": "/data/projects/fate/python/examples/mydata/breast_1_train.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"table_name": "homo_breast_1_train", //table name

"namespace": "homo_host_breast_train" //namespace

}

//upload the data with fate_flow; check that the fate_flow path matches your installation

# python ../../../fate_flow/fate_flow_client.py -f upload -c upload_data_host.json

//output returned; the result can be viewed at the address in board_url

{

"data": {

"board_url": "http://fateboard:8080/index.html#/dashboard?job_id=202204200159215834845&role=local&party_id=0",

"job_dsl_path": "/data/projects/fate/python/jobs/202204200159215834845/job_dsl.json",

"job_runtime_conf_path": "/data/projects/fate/python/jobs/202204200159215834845/job_runtime_conf.json",

"logs_directory": "/data/projects/fate/python/logs/202204200159215834845",

"namespace": "homo_host_breast_train",

"table_name": "homo_breast_1_train"

},

"jobId": "202204200159215834845",

"retcode": 0,

"retmsg": "success"

}

Then edit upload_data_test.json to upload the test data into the system.

# vi upload_data_test.json

{

"file": "/data/projects/fate/python/examples/mydata/breast_eval.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"table_name": "homo_breast_1_eval",

"namespace": "homo_host_breast_eval"

}

# python ../../../fate_flow/fate_flow_client.py -f upload -c upload_data_test.json
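The upload configs used in this step differ only in file, table_name and namespace, so they can also be generated rather than edited by hand. The sketch below is one way to do that with the standard library; `make_upload_conf` and `DATA_DIR` are hypothetical names, and the field values are copied from the configs shown in this article.

```python
import json


def make_upload_conf(csv_path, table_name, namespace, partition=10, work_mode=1):
    """Build an upload config dict with the same fields as the files in this step."""
    return {
        "file": csv_path,
        "head": 1,
        "partition": partition,
        "work_mode": work_mode,
        "table_name": table_name,
        "namespace": namespace,
    }


# Assumed location of the uploaded CSV files inside the python container
DATA_DIR = "/data/projects/fate/python/examples/mydata"

host_train = make_upload_conf(
    f"{DATA_DIR}/breast_1_train.csv", "homo_breast_1_train", "homo_host_breast_train"
)
host_eval = make_upload_conf(
    f"{DATA_DIR}/breast_eval.csv", "homo_breast_1_eval", "homo_host_breast_eval"
)

print(json.dumps(host_train, indent=4))
```

To write a config to disk, something like `json.dump(host_train, open('upload_data_host.json', 'w'), indent=4)` would do; the resulting file is then passed to the upload command as before.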

Then, on machine B, go into homo_logistic_regression and edit upload_data_guest.json to upload training set 2 into the system.

# vi upload_data_guest.json

{

"file": "/data/projects/fate/python/examples/mydata/breast_2_train.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"table_name": "homo_breast_2_train",

"namespace": "homo_guest_breast_train"

}

# python ../../../fate_flow/fate_flow_client.py -f upload -c upload_data_guest.json

Then edit upload_data_test.json to upload the test data into the system.

# vi upload_data_test.json

{

"file": "/data/projects/fate/python/examples/mydata/breast_eval.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"table_name": "homo_breast_2_eval",

"namespace": "homo_guest_breast_eval"

}

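# python ../../../fate_flow/fate_flow_client.py -f upload -c upload_data_test.json

3. Editing the training configuration files (on machine B)

A federated learning job in FATE needs two configuration files, dsl and conf.

dsl: describes the task modules and how they are combined into a directed acyclic graph.

conf: sets the parameters of each component, such as the table name for the input module, and the learning rate, batch size and number of iterations for the algorithm module.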
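To make the directed-acyclic-graph idea concrete, the sketch below reads a trimmed-down copy of the training dsl used in this step and derives an order in which the components could run. It only illustrates the dependency structure and is not how FATE itself schedules jobs; the helper names are hypothetical, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Trimmed-down copy of the training dsl (outputs omitted for brevity)
dsl = {
    "components": {
        "dataio_0": {
            "module": "DataIO",
            "input": {"data": {"data": ["args.train_data"]}},
        },
        "homo_lr_0": {
            "module": "HomoLR",
            "input": {"data": {"train_data": ["dataio_0.train"]}},
        },
        "evaluation_0": {
            "module": "Evaluation",
            "input": {"data": {"data": ["homo_lr_0.train"]}},
        },
    }
}


def upstream_components(component):
    """Collect the names of the components whose outputs this component reads."""
    deps = set()
    inputs = component.get("input", {})
    for refs in inputs.get("data", {}).values():
        for ref in refs:
            source = ref.split(".")[0]
            if source != "args":  # "args.*" refers to job arguments, not a component
                deps.add(source)
    for ref in inputs.get("model", []):
        deps.add(ref.split(".")[0])
    return deps


def execution_order(dsl):
    """Return the component names in an order that respects their dependencies."""
    graph = {name: upstream_components(spec) for name, spec in dsl["components"].items()}
    return list(TopologicalSorter(graph).static_order())


print(execution_order(dsl))  # prints: ['dataio_0', 'homo_lr_0', 'evaluation_0']
```

A cycle in the references would make `static_order()` raise `graphlib.CycleError`, which is a cheap way to catch a mistyped input reference before submitting a job.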

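homo_logistic_regression contains many preset files; two of them are edited here, test_homolr_train_job_conf.json and test_homolr_train_job_dsl.json.

# vi test_homolr_train_job_dsl.json

//this dsl already defines three components that form the most basic horizontal federated pipeline, so it can be used as is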

{

"components" : {

"dataio_0": {

"module": "DataIO",

"input": {

"data": {

"data": [

"args.train_data"

]

}

},

"output": {

"data": ["train"],

"model": ["dataio"]

}

},

"homo_lr_0": {

"module": "HomoLR",

"input": {

"data": {

"train_data": [

"dataio_0.train"

]

}

},

"output": {

"data": ["train"],

"model": ["homolr"]

}

},

"evaluation_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"homo_lr_0.train"

]

}

},

"output": {

"data": ["evaluate"]

}

}

}

}

# vi test_homolr_train_job_conf.json

{

"initiator": {

"role": "guest",

"party_id": 9999 //the guest id is changed here

},

"job_parameters": {

"work_mode": 1 //set the work mode to cluster mode

},

"role": {

"guest": [9999], //change the guest id

"host": [10000],

"arbiter": [10000]

},

"role_parameters": {

"guest": {

"args": {

"data": { //set name and namespace here to match the upload config

"train_data": [{"name": "homo_breast_2_train", "namespace": "homo_guest_breast_train"}]

}

}

},

"host": {

"args": {

"data": { //set name and namespace here to match the upload config

"train_data": [{"name": "homo_breast_1_train", "namespace": "homo_host_breast_train"}]

}

},

"evaluation_0": {

"need_run": [false]

}

}

},

"algorithm_parameters": {

"dataio_0":{

"with_label": true,

"label_name": "y",

"label_type": "int",

"output_format": "dense"

},

"homo_lr_0": {

"penalty": "L2",

"optimizer": "sgd",

"eps": 1e-5,

"alpha": 0.01,

"max_iter": 10,

"converge_func": "diff",

"batch_size": 500,

"learning_rate": 0.15,

"decay": 1,

"decay_sqrt": true,

"init_param": {

"init_method": "zeros"

},

"encrypt_param": {

"method": "Paillier"

},

"cv_param": {

"n_splits": 4,

"shuffle": true,

"random_seed": 33,

"need_cv": false

}

}

}

}

4. Submitting the training job (on machine B)

# python ../../../fate_flow/fate_flow_client.py -f submit_job -d test_homolr_train_job_dsl.json -c test_homolr_train_job_conf.json

//output returned; the job's progress can be followed at board_url

{

"data": {

"board_url": "http://fateboard:8080/index.html#/dashboard?job_id=202204200254141918057&role=guest&party_id=9999",

"job_dsl_path": "/data/projects/fate/python/jobs/202204200254141918057/job_dsl.json",

"job_runtime_conf_path": "/data/projects/fate/python/jobs/202204200254141918057/job_runtime_conf.json",

"logs_directory": "/data/projects/fate/python/logs/202204200254141918057",

"model_info": {

"model_id": "arbiter-10000#guest-9999#host-10000#model",

"model_version": "202204200254141918057"

}

},

"jobId": "202204200254141918057",

"retcode": 0,

"retmsg": "success"

}

During training, the job's progress can be followed in fateboard; clicking "view the outputs" on the evaluation component shows the detailed training results.
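When the submit command is driven from a script, the returned JSON can be checked before opening fateboard. The sketch below parses an abbreviated copy of the response shown above and extracts the job id, the board URL and the model info; `summarize_submission` is a hypothetical helper, and treating any non-zero retcode as a failure is an assumption based on the successful response having retcode 0.

```python
import json

# Abbreviated copy of the submit_job response shown above
response_text = """
{
    "data": {
        "board_url": "http://fateboard:8080/index.html#/dashboard?job_id=202204200254141918057&role=guest&party_id=9999",
        "model_info": {
            "model_id": "arbiter-10000#guest-9999#host-10000#model",
            "model_version": "202204200254141918057"
        }
    },
    "jobId": "202204200254141918057",
    "retcode": 0,
    "retmsg": "success"
}
"""


def summarize_submission(text):
    """Return the job id, board URL and model info, or raise if retcode is non-zero."""
    response = json.loads(text)
    if response.get("retcode") != 0:
        raise RuntimeError(f"submit_job failed: {response.get('retmsg')}")
    data = response.get("data", {})
    return {
        "job_id": response["jobId"],
        "board_url": data.get("board_url"),
        "model_info": data.get("model_info"),
    }


summary = summarize_submission(response_text)
print(summary["job_id"])
print(summary["model_info"]["model_id"])
```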

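III. Model evaluation

Model evaluation is an important part of designing machine learning algorithms, and federated learning is no exception. Common evaluation methods include hold-out and cross-validation.

- Hold-out: split the data by a fixed ratio and reserve one part as the evaluation set, which is used to assess the federated model.

- Cross-validation: split dataset D into k parts D1, D2, ..., Dk. In each round, k-1 parts are used for training and the remaining part for evaluation, with each part serving as the evaluation set once. This gives k different train/evaluation pairs and k evaluation results, whose mean is taken as the final result.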
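The cross-validation procedure can be sketched in a few lines of plain Python. This is a generic illustration of k-fold splitting and averaging, not FATE's own implementation; the function names and the `score_fn` callback are hypothetical.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, eval_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Slicing with a stride of k gives k folds whose sizes differ by at most one
    folds = [indices[i::k] for i in range(k)]
    for i, eval_idx in enumerate(folds):
        # Every fold except the i-th one is used for training in this round
        train_idx = [j for f_i, fold in enumerate(folds) if f_i != i for j in fold]
        yield train_idx, eval_idx


def cross_validate(n_samples, k, score_fn, seed=0):
    """Average score_fn(train_indices, eval_indices) over the k folds."""
    scores = [score_fn(tr, ev) for tr, ev in k_fold_indices(n_samples, k, seed)]
    return sum(scores) / len(scores)


# Each of the 5 rounds trains on 8 samples and evaluates on the other 2
for train_idx, eval_idx in k_fold_indices(10, 5):
    print(len(train_idx), len(eval_idx))
```

In practice `score_fn` would train a model on the training indices and return a metric such as accuracy or AUC on the evaluation indices. The hold-out method corresponds to a single such train/evaluation pair.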

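1. Editing the configuration files

Earlier, breast_eval.csv was split off for testing (i.e. the hold-out method) and uploaded into the system. Now edit the configuration files to evaluate the model.

# vi test_homolr_train_job_dsl.json //write the dsl configuration file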

{

"components" : {

"dataio_0": {

"module": "DataIO",

"input": {

"data": {

"data": ["args.train_data"]

}

},

"output": {

"data": ["train"],

"model": ["dataio"]

}

},

"dataio_1": { //dataio_1 is added here to read the test data

"module": "DataIO",

"input": {

"data": {

"data": ["args.eval_data"]

},

"model": ["dataio_0.dataio"]

},

"output":{

"data":["eval_data"]

}

},

"homo_lr_0": {

"module": "HomoLR",

"input": {

"data": {

"train_data": [

"dataio_0.train"

]

}

},

"output": {

"data": ["train"],

"model": ["homolr"]

}

},

"homo_lr_1": { //homo_lr_1 is added here to apply the trained model to the test data

"module": "HomoLR",

"input": {

"data": {

"eval_data": ["dataio_1.eval_data"]

},

"model": ["homo_lr_0.homolr"]

},

"output": {

"data": ["eval_data"],

"model": ["homolr"]

}

},

"evaluation_0": {

"module": "Evaluation",

"input": {

"data": {

"data": [

"homo_lr_0.train"

]

}

},

"output": {

"data": ["evaluate"]

}

},

"evaluation_1": { //evaluation_1 is added here to evaluate on the test data

"module": "Evaluation",

"input": {

"data": {

"data": ["homo_lr_1.eval_data"]

}

},

"output": {

"data": ["evaluate"]

}

}

}

}

# vi test_homolr_train_job_conf.json //write the conf configuration file

{

"initiator": {

"role": "guest",

"party_id": 9999

},

"job_parameters": {

"work_mode": 1

},

"role": {

"guest": [9999],

"host": [10000],

"arbiter": [10000]

},

"role_parameters": {

"guest": { //the guest's test data is added here

"args": {

"data": {

"train_data": [{"name": "homo_breast_2_train", "namespace": "homo_guest_breast_train"}],

"eval_data": [{"name": "homo_breast_2_eval", "namespace": "homo_guest_breast_eval"}]

}

}

},

"host": { //the host's test data is added here

"args": {

"data": {

"train_data": [{"name": "homo_breast_1_train", "namespace": "homo_host_breast_train"}],

"eval_data": [{"name": "homo_breast_1_eval", "namespace": "homo_host_breast_eval"}]

}

},

"evaluation_0": {

"need_run": [false]

},

"evaluation_1": { //evaluation_1 is added here

"need_run": [false]

}

}

},

"algorithm_parameters": {

"dataio_0":{

"with_label": true,

"label_name": "y",

"label_type": "int",

"output_format": "dense"

},

"homo_lr_0": {

"penalty": "L2",

"optimizer": "sgd",

"eps": 1e-5,

"alpha": 0.01,

"max_iter": 10,

"converge_func": "diff",

"batch_size": 500,

"learning_rate": 0.15,

"decay": 1,

"decay_sqrt": true,

"init_param": {

"init_method": "zeros"

},

"encrypt_param": {

"method": "Paillier"

},

"cv_param": {

"n_splits": 4,

"shuffle": true,

"random_seed": 33,

"need_cv": false

}

}

}

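}

2. Submitting the job

# python ../../../fate_flow/fate_flow_client.py -f submit_job -d test_homolr_train_job_dsl.json -c test_homolr_train_job_conf.json

3. Viewing the evaluation results

IV. Removing the deployment

To remove the deployment, run the following command on the deployment machine to stop all FATE clusters:

# bash docker_deploy.sh --delete all

To completely remove FATE from the machines it runs on, log in to each node and run:

# cd /data/projects/fate/confs-/ //the ID after confs- is the cluster ID

# docker-compose down

# rm -rf ../confs-/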