Building a BP Neural Network with PyTorch to Recognize the MNIST Dataset


I. The Dataset

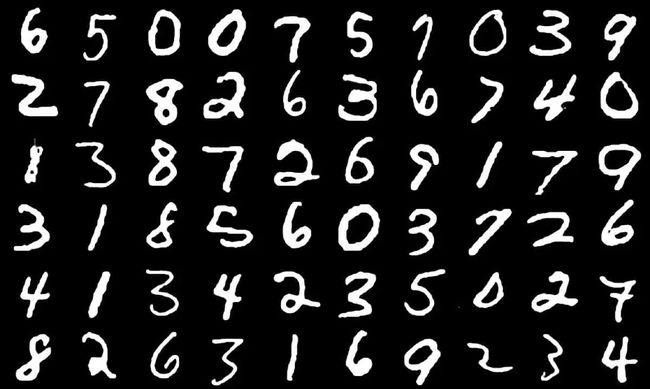

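The MNIST dataset (Modified National Institute of Standards and Technology database) is a large database of handwritten digits built from data collected by the US National Institute of Standards and Technology (NIST). It contains a training set of 60,000 examples and a test set of 10,000 examples. Each example is a 28×28 grayscale image.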

II. Importing Dependencies

```python
# coding=gbk
import numpy as np
import torch
import torchvision
import torchvision.transforms as transforms
from torch.utils.data import DataLoader
```

III. Building the BP Neural Network

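The BP network consists of three hidden layers and one output layer.

- The input layer has 784 nodes, one for each pixel of a flattened 28×28 image.
- Each hidden layer has 20 nodes.
- The output layer has 10 nodes, one for each digit.

Every layer uses the sigmoid activation function. The network itself is implemented in NumPy. torchvision is used only to load the data.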
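The following NumPy sketch is a quick sanity check of this architecture. It lists the weight-matrix shapes implied by a 784-20-20-20-10 network and counts its parameters. It then pushes a random batch of 100 binary inputs through sigmoid layers.

The random inputs and the names `layer_sizes`, `weight_shapes` and `num_params` are illustrative assumptions, not part of the original code. The parameter count assumes no bias terms, which matches the BP class below.

```python
import numpy as np

# Layer sizes from the description above: 784 inputs, 3 hidden layers of 20, 10 outputs
layer_sizes = [784, 20, 20, 20, 10]

# One weight matrix per pair of adjacent layers; no bias vectors, as in the BP class
weight_shapes = list(zip(layer_sizes[:-1], layer_sizes[1:]))
num_params = sum(m * n for m, n in weight_shapes)
print(weight_shapes)  # [(784, 20), (20, 20), (20, 20), (20, 10)]
print(num_params)     # 16680

# Push a random batch of 100 binary "images" through sigmoid layers to check the shapes
rng = np.random.default_rng(0)
a = rng.integers(0, 2, size=(100, 784))
for m, n in weight_shapes:
    w = 2 * rng.random((m, n)) - 1        # weights in [-1, 1), like the BP class
    a = 1 / (1 + np.exp(-(a @ w)))        # sigmoid activation
print(a.shape)  # (100, 10)
```

Almost all of the 16,680 weights sit in the first matrix (784 × 20 = 15,680). The three 20-node hidden layers are cheap by comparison.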

```python
# Input layer: 784 nodes; hidden layers: three hidden layers with 20 nodes each
# Output layer: 10 nodes
class BP:
    def __init__(self):
        self.input = np.zeros((100, 784))  # 100 samples per batch
        self.hidden_layer_1 = np.zeros((100, 20))
        self.hidden_layer_2 = np.zeros((100, 20))
        self.hidden_layer_3 = np.zeros((100, 20))
        self.output_layer = np.zeros((100, 10))
        # Weights drawn uniformly from [-1, 1); the network has no bias terms
        self.w1 = 2 * np.random.random((784, 20)) - 1
        self.w2 = 2 * np.random.random((20, 20)) - 1
        self.w3 = 2 * np.random.random((20, 20)) - 1
        self.w4 = 2 * np.random.random((20, 10)) - 1
        self.error = np.zeros(10)
        self.learning_rate = 0.1

    def sigmoid(self, x):
        return 1 / (1 + np.exp(-x))

    def sigmoid_deri(self, x):
        # Sigmoid derivative written in terms of the sigmoid's OUTPUT:
        # if y = sigmoid(z), then dy/dz = y * (1 - y), so x must already be activated
        return x * (1 - x)

    def forward_prop(self, data, label):  # data: 100 x 784, label: 100 x 10 (one-hot)
        self.input = data
        self.hidden_layer_1 = self.sigmoid(np.dot(self.input, self.w1))
        self.hidden_layer_2 = self.sigmoid(np.dot(self.hidden_layer_1, self.w2))
        self.hidden_layer_3 = self.sigmoid(np.dot(self.hidden_layer_2, self.w3))
        self.output_layer = self.sigmoid(np.dot(self.hidden_layer_3, self.w4))
        # Error between target and prediction, reused by backward_prop
        self.error = label - self.output_layer
        return self.output_layer

    def backward_prop(self):
        # Error terms (deltas) of each layer, propagated from the output backwards
        output_diff = self.error * self.sigmoid_deri(self.output_layer)
        hidden_diff_3 = np.dot(output_diff, self.w4.T) * self.sigmoid_deri(self.hidden_layer_3)
        hidden_diff_2 = np.dot(hidden_diff_3, self.w3.T) * self.sigmoid_deri(self.hidden_layer_2)
        hidden_diff_1 = np.dot(hidden_diff_2, self.w2.T) * self.sigmoid_deri(self.hidden_layer_1)
        # Update: because error = label - output, adding these terms is a gradient-descent
        # step on the squared error 0.5 * sum((label - output) ** 2), summed over the batch
        self.w4 += self.learning_rate * np.dot(self.hidden_layer_3.T, output_diff)
        self.w3 += self.learning_rate * np.dot(self.hidden_layer_2.T, hidden_diff_3)
        self.w2 += self.learning_rate * np.dot(self.hidden_layer_1.T, hidden_diff_2)
        self.w1 += self.learning_rate * np.dot(self.input.T, hidden_diff_1)
```
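The update rule in `backward_prop` can be checked numerically. This sketch assumes the loss being minimized is half the summed squared error, `0.5 * sum((label - output) ** 2)`. That assumption is consistent with `error = label - output` and with the sign of the updates.

The sketch works as follows:

- It builds a tiny two-layer sigmoid network with made-up sizes: 6 inputs, 4 hidden units and 3 outputs.
- It applies the same delta formulas as `backward_prop`.
- It compares the resulting updates with central finite-difference gradients.

`forward`, `loss`, `numeric_grad` and `current_loss` are hypothetical helper names.

```python
import numpy as np


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def forward(x, w_a, w_b):
    # Two sigmoid layers without biases, like the layers of the BP class
    h = sigmoid(x @ w_a)
    out = sigmoid(h @ w_b)
    return h, out


def loss(x, label, w_a, w_b):
    # Assumed objective: half the summed squared error over the batch
    _, out = forward(x, w_a, w_b)
    return 0.5 * np.sum((label - out) ** 2)


def numeric_grad(f, w, eps=1e-6):
    # Central finite differences, perturbing one entry of w in place at a time
    grad = np.zeros_like(w)
    for idx in np.ndindex(w.shape):
        orig = w[idx]
        w[idx] = orig + eps
        plus = f()
        w[idx] = orig - eps
        minus = f()
        w[idx] = orig  # restore the original weight
        grad[idx] = (plus - minus) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
x = rng.random((5, 6))                         # 5 samples, 6 inputs
label = np.eye(3)[rng.integers(0, 3, size=5)]  # one-hot targets, 3 classes
w_a = 2 * rng.random((6, 4)) - 1               # input -> hidden
w_b = 2 * rng.random((4, 3)) - 1               # hidden -> output

# Same delta formulas as BP.backward_prop (sigmoid derivative written as y * (1 - y))
h, out = forward(x, w_a, w_b)
error = label - out
output_diff = error * out * (1 - out)
hidden_diff = np.dot(output_diff, w_b.T) * h * (1 - h)
update_b = np.dot(h.T, output_diff)
update_a = np.dot(x.T, hidden_diff)


def current_loss():
    return loss(x, label, w_a, w_b)


# Each update should equal the negative gradient of the loss
print(np.allclose(update_b, -numeric_grad(current_loss, w_b), atol=1e-6))  # True
print(np.allclose(update_a, -numeric_grad(current_loss, w_a), atol=1e-6))  # True
```

Both checks pass, so `w += learning_rate * update` is an ordinary gradient-descent step. The three-hidden-layer BP class applies the same hidden-layer formula two more times.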

IV. Loading the Data

The data can be loaded with the torchvision.datasets.MNIST class:

```python
torchvision.datasets.MNIST(root: str, train: bool = True, transform: Optional[Callable] = None, target_transform: Optional[Callable] = None, download: bool = False)
```

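- root (string): root directory of the dataset. Older torchvision versions expect MNIST/processed/training.pt and MNIST/processed/test.pt under it. Recent versions read the raw files under MNIST/raw/ instead.

- train (bool, optional): if True, creates the dataset from the training split (training.pt); otherwise, from the test split (test.pt).

- download (bool, optional): if True, downloads the dataset from the internet and puts it in the root directory. If the dataset has already been downloaded, it is not downloaded again.

- transform (callable, optional): a function/transform that takes in a PIL image and returns a transformed version, e.g. transforms.ToTensor().

- target_transform (callable, optional): a function/transform that takes in the target (the label) and transforms it.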
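The `transform` argument only takes effect when samples are fetched by indexing the dataset (e.g. `datasets_train[i]`). The `load_data` function below reads the raw `.data` tensor instead. As a result, `ToTensor()` is never actually applied there, and the pixels stay uint8 values in 0-255.

For reference, the NumPy sketch below approximates what `ToTensor()` does to one 28×28 grayscale image: it adds a channel axis and rescales [0, 255] to [0.0, 1.0]. `to_tensor_like` is a hypothetical helper, not a torchvision function. The test image is made up.

```python
import numpy as np


def to_tensor_like(img):
    # Hypothetical stand-in for transforms.ToTensor() on an 8-bit grayscale image:
    # (H, W) uint8 in [0, 255]  ->  (1, H, W) float32 in [0.0, 1.0]
    return img.astype(np.float32)[np.newaxis, :, :] / 255.0


img = np.zeros((28, 28), dtype=np.uint8)
img[10:18, 12:16] = 255   # a fake bright stroke

x = to_tensor_like(img)
print(x.shape, x.dtype, x.max())  # (1, 28, 28) float32 1.0
```

Binarizing with `X > 0`, as `load_data` does, discards the grayscale intensities that `ToTensor()` would keep as fractions between 0 and 1.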

```python
# Load MNIST through torchvision
def load_data():
    # Set download=True the first time this runs
    datasets_train = torchvision.datasets.MNIST(root='./data/', train=True, transform=transforms.ToTensor(), download=False)
    datasets_test = torchvision.datasets.MNIST(root='./data/', train=False, transform=transforms.ToTensor(), download=False)
    # .data holds the raw uint8 images (N x 28 x 28); transform is NOT applied here,
    # it only runs when samples are fetched with datasets_train[i]
    X_train = datasets_train.data.numpy()
    X_test = datasets_test.data.numpy()
    # Flatten each 28 x 28 image into a 784-dimensional vector
    X_train = np.reshape(X_train, (60000, 784))
    X_test = np.reshape(X_test, (10000, 784))
    Y_train = datasets_train.targets.numpy()
    Y_test = datasets_test.targets.numpy()
    # One-hot encode the labels: each y becomes a 10-dimensional vector
    real_train_y = np.zeros((60000, 10))
    real_test_y = np.zeros((10000, 10))
    for i in range(60000):
        real_train_y[i, Y_train[i]] = 1
    for i in range(10000):
        real_test_y[i, Y_test[i]] = 1
    # Shuffle the training set, applying the same permutation to images and labels
    index = np.arange(60000)
    np.random.shuffle(index)
    X_train = X_train[index]
    real_train_y = real_train_y[index]
    # Binarize the pixels: any nonzero intensity becomes 1
    X_train = np.int64(X_train > 0)
    X_test = np.int64(X_test > 0)
    return X_train, real_train_y, X_test, real_test_y
```
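The one-hot encoding and shuffling in `load_data` can also be written without explicit Python loops. The sketch below runs on a small random stand-in for the real arrays (8 samples; the variable names are illustrative). It checks two things:

- `np.eye(10)[labels]` matches the loop version of the one-hot encoding.
- Indexing images and labels with the same permutation keeps each image paired with its label.

Here `rng.permutation` stands in for shuffling an index array with `np.random.shuffle`. Both produce a random ordering of the indices.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 8
labels = rng.integers(0, 10, size=n)           # stand-in for datasets_train.targets
images = rng.integers(0, 256, size=(n, 784))   # stand-in for the flattened uint8 images

# Loop version, as written in load_data
one_hot_loop = np.zeros((n, 10))
for i in range(n):
    one_hot_loop[i, labels[i]] = 1

# Vectorized version: row k of the 10 x 10 identity matrix is the one-hot code of digit k
one_hot = np.eye(10)[labels]

# Shuffle images and labels with the SAME permutation so every pair stays aligned
perm = rng.permutation(n)
images_shuf = images[perm]
one_hot_shuf = one_hot[perm]

# Binarize as load_data does: every nonzero pixel becomes 1
binary = (images_shuf > 0).astype(np.int64)

print(np.array_equal(one_hot, one_hot_loop))                      # True
print(np.array_equal(one_hot_shuf.argmax(axis=1), labels[perm]))  # True
```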

V. Training the Network

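```python
def bp_network():
    nn = BP()
    X_train, Y_train, X_test, Y_test = load_data()
    batch_size = 100
    # Each iteration is one mini-batch update: 60000 / 100 = 600 batches per pass,
    # so 6000 iterations amount to 10 passes over the training set
    iterations = 6000
    for step in range(iterations):
        start = (step % 600) * batch_size
        end = start + batch_size
        nn.forward_prop(X_train[start: end], Y_train[start: end])
        nn.backward_prop()
        # Report progress once per full pass instead of after every batch
        if (step + 1) % 600 == 0:
            print('pass', (step + 1) // 600, 'done')
    return nn
```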
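The start-index formula in `bp_network` cycles through the 600 consecutive mini-batches of the already shuffled training set. The sketch below counts how often each training sample is used over the 6000 iterations, which confirms that the loop amounts to 10 full passes. `visits` and the other names are illustrative.

```python
import numpy as np

n_train, batch_size, iterations = 60000, 100, 6000
batches_per_pass = n_train // batch_size   # 600 mini-batches per pass

# Count how many times each training sample is fed to the network
visits = np.zeros(n_train, dtype=np.int64)
for step in range(iterations):
    start = (step % batches_per_pass) * batch_size   # same start-index formula as bp_network
    visits[start:start + batch_size] += 1

print(batches_per_pass, iterations // batches_per_pass)  # 600 10
print(visits.min(), visits.max())                        # 10 10
```

`load_data` shuffles only once, so every pass visits the batches in the same order. Reshuffling at the start of each pass is a common variation, but the original code does not do it.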

VI. Testing the Network

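```python
def bp_test():
    nn = bp_network()
    correct = 0  # number of correctly classified test samples
    # Reload the data for the test split (the reshuffle in load_data only affects the training set)
    _, _, X_test, Y_test = load_data()
    # Test one sample at a time
    for i in range(len(X_test)):
        res = nn.forward_prop(X_test[i], Y_test[i])  # 10 output scores
        index = np.argmax(res)                       # predicted digit
        if Y_test[i, index] == 1:
            correct += 1
    print('accuracy:', correct / len(Y_test))


if __name__ == '__main__':
    bp_test()
```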

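Running the code produced the following test accuracy. The exact value changes from run to run, because the weights are randomly initialized and the training set is shuffled.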

accuracy: 0.9291
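`forward_prop` also accepts a whole 2-D batch. The per-sample test loop could therefore be replaced by a single call such as `nn.forward_prop(X_test, Y_test)`, followed by an argmax comparison.

The sketch below shows that accuracy computation on a made-up example with 4 samples and 3 classes. `accuracy` is a hypothetical helper name, and the numbers are illustrative, not MNIST results.

```python
import numpy as np


def accuracy(outputs, one_hot_labels):
    # Fraction of rows whose largest output is at the position of the 1 in the label
    predicted = np.argmax(outputs, axis=1)
    actual = np.argmax(one_hot_labels, axis=1)
    return np.mean(predicted == actual)


# Toy batch: 4 samples, 3 classes (made-up scores)
outputs = np.array([[0.1, 0.8, 0.1],
                    [0.7, 0.2, 0.1],
                    [0.3, 0.3, 0.4],
                    [0.2, 0.5, 0.3]])
labels = np.eye(3)[[1, 0, 2, 0]]
print(accuracy(outputs, labels))  # 0.75
```

Only the last row is misclassified: its largest score is at position 1, but its label is 0.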