SVC in practice: Fashion-MNIST image classification

Support vector machines (SVMs) are supervised learning methods that can be used for classification, regression, and outlier detection.

The advantages of support vector machines are:

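- Effective in high-dimensional spaces.

- Still effective when the number of dimensions is greater than the number of samples.

- Memory efficient, because the decision function uses only a subset of the training points (the support vectors).

- Versatile: different kernel functions can be specified for the decision function. Common kernels are provided, and custom kernels can also be specified.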
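To illustrate the "custom kernel" point, here is a minimal sketch, assuming scikit-learn's `SVC`, which accepts a Python callable as `kernel` as long as it returns the Gram matrix between two sets of samples. The function name `my_linear_kernel` is hypothetical, and the small iris dataset stands in for Fashion-MNIST only to keep the example fast.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.svm import SVC

def my_linear_kernel(X, Y):
    """Hypothetical custom kernel: Gram matrix K[i, j] = <X[i], Y[j]>."""
    return np.dot(X, Y.T)

X, y = load_iris(return_X_y=True)

# A callable kernel must return an array of shape (n_samples_X, n_samples_Y).
clf_custom = SVC(kernel=my_linear_kernel).fit(X, y)
# The built-in linear kernel, for comparison.
clf_builtin = SVC(kernel="linear").fit(X, y)

print("custom kernel train acc:", clf_custom.score(X, y))
print("built-in linear train acc:", clf_builtin.score(X, y))
```

Since this custom kernel is just the dot product, both models should behave essentially the same. A real custom kernel would encode some domain-specific similarity instead.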

The disadvantages of support vector machines include:

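- If the number of features is much greater than the number of samples, avoid over-fitting when choosing the kernel function; the regularization term is crucial.

- SVMs do not directly provide probability estimates; these are computed using an expensive five-fold cross-validation.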
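The probability point can be sketched as follows. The assumption here is scikit-learn's `SVC(probability=True)`, which fits an extra internal cross-validated calibration step during `fit()` and is therefore slower on large data such as the 54,000-image training split used below. Iris is used only as a small stand-in dataset.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)

# probability=True enables predict_proba at the cost of extra
# cross-validation inside fit(); random_state makes it reproducible.
clf = SVC(probability=True, random_state=0).fit(X, y)

proba = clf.predict_proba(X[:3])          # shape (3, n_classes)
print(proba.round(3))
print("rows sum to 1:", np.allclose(proba.sum(axis=1), 1.0))
```

The scikit-learn documentation also notes that these probabilities can occasionally disagree with `predict`, so for plain classification the default `probability=False` (as used later in this article) is usually enough.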

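SVC, NuSVC and LinearSVC can all perform multi-class classification on a dataset. SVC and NuSVC let you choose the kernel, for example 'linear', 'poly' (whose degree is set by the degree parameter) or 'rbf'; LinearSVC only supports a linear kernel.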
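As a rough side-by-side comparison, the sketch below fits the three classifiers and several SVC kernels on a small 3-class dataset. The dataset (iris), the standardization step, `coef0=1.0` for the polynomial kernel, and `dual=False` for LinearSVC are assumptions chosen to keep the example fast and stable. They are not the settings used later for Fashion-MNIST.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC, NuSVC, LinearSVC

X, y = load_iris(return_X_y=True)  # 3 classes
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

models = {
    "SVC(linear)": SVC(kernel="linear"),
    "SVC(poly, degree=3)": SVC(kernel="poly", degree=3, coef0=1.0),
    "SVC(rbf)": SVC(kernel="rbf", gamma="scale"),
    "NuSVC(rbf)": NuSVC(nu=0.5),
    "LinearSVC": LinearSVC(dual=False),  # linear only, no kernel choice
}

scores = {}
for name, model in models.items():
    # Scale features first; SVMs are sensitive to feature magnitudes.
    pipe = make_pipeline(StandardScaler(), model)
    pipe.fit(X_tr, y_tr)
    scores[name] = pipe.score(X_te, y_te)
    print(f"{name:22s} test acc = {scores[name]:.3f}")
```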

2. Now let's look at the code

import matplotlib.pyplot as plt
import matplotlib as mpl
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import sys
import os
import time
import tensorflow as tf
from tensorflow import keras
from sklearn.model_selection import train_test_split
from tensorflow.keras.optimizers import Adam,SGD,Adagrad,Adadelta,RMSprop
from tensorflow.keras.utils import to_categorical

#Load the Fashion-MNIST dataset
train = pd.read_csv('../input/fashionmnist/fashion-mnist_train.csv')
test = pd.read_csv('../input/fashionmnist/fashion-mnist_test.csv')
df_train = train.copy()
df_test = test.copy()

#Class names in the Fashion-MNIST dataset
class_name={0 : 'T-shirt/top',
            1 : 'Trouser',
            2 : 'Pullover',
            3 : 'Dress',
            4 : 'Coat',
            5 : 'Sandal',
            6 : 'Shirt',
            7 : 'Sneaker',
            8 : 'Bag',
            9 : 'Ankle boot'}

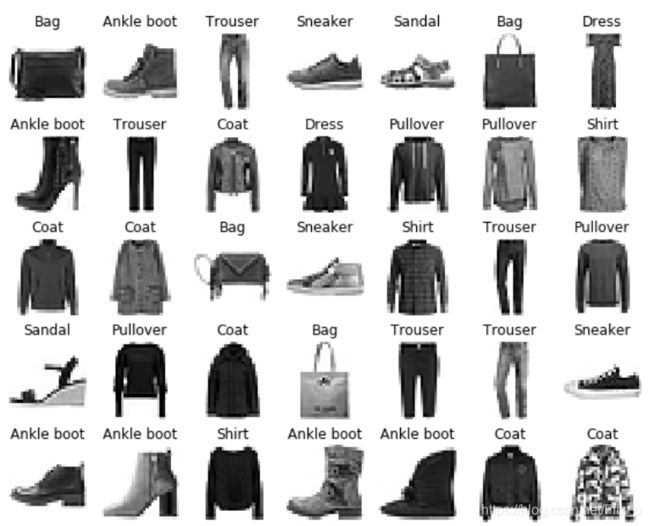

#Data visualization: show an n_rows x n_cols grid of images with their class names
def show_imgs(n_rows,n_cols,x_data,y_data,class_name):
    assert len(x_data)==len(y_data)
    plt.figure(figsize=(n_cols*1.4,n_rows*1.6))
    for row in range(n_rows):
        for col in range(n_cols):
            index=n_cols*row+col
            plt.subplot(n_rows,n_cols,index+1)
            # each row of x_data is a flattened 28x28 image (784 pixels)
            plt.imshow(x_data[index].reshape(28,28),cmap="binary",interpolation='nearest')
            plt.axis('off')
            plt.title(class_name[y_data[index]])
    plt.show()

#Separate pixel data and labels (the first column of the CSV is the label)
X = df_train.iloc[:,1:].values
Y = df_train.iloc[:,0].values
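To show what the two `iloc` selections above do, here is a small sketch on a synthetic DataFrame laid out like the training CSV: one label column followed by 784 pixel columns. The column names (`label`, `pixel1` … `pixel784`) and the random values are assumptions made for illustration; no real data is involved.

```python
import numpy as np
import pandas as pd

# Hypothetical stand-in for fashion-mnist_train.csv:
# column 0 is the label, columns 1..784 are pixel values of a 28x28 image.
rng = np.random.default_rng(0)
n = 5
columns = ["label"] + [f"pixel{i}" for i in range(1, 785)]
data = np.column_stack([
    rng.integers(0, 10, size=n),          # labels 0-9
    rng.integers(0, 256, size=(n, 784)),  # pixel intensities 0-255
])
toy = pd.DataFrame(data, columns=columns)

X_toy = toy.iloc[:, 1:].values  # every column except the first -> features
Y_toy = toy.iloc[:, 0].values   # first column only -> labels

print(X_toy.shape, Y_toy.shape)        # (5, 784) (5,)
print(X_toy[0].reshape(28, 28).shape)  # (28, 28), as used in show_imgs
```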

#Split into a training set and a validation set (10% held out)
x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.1, random_state=0)
y_train.shape,x_train.shape

#Data visualization
show_imgs(7,7,x_train,y_train,class_name)

x_train.shape,y_train.shape

At this point, x_train.shape, y_train.shape = ((54000, 784), (54000,)).
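The 54,000 figure comes from holding out 10% of the 60,000 training rows: 60000 × 0.1 = 6000 for validation, leaving 54000 for training. The sketch below checks this arithmetic on dummy arrays of the same length. It also shows `stratify`, an optional `train_test_split` argument that the original code does not use, which keeps class proportions equal in both splits.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Dummy data with the same number of rows as the Fashion-MNIST training CSV.
X_dummy = np.zeros((60000, 1))
y_dummy = np.arange(60000) % 10  # 10 perfectly balanced dummy classes

a_tr, a_te, b_tr, b_te = train_test_split(
    X_dummy, y_dummy, test_size=0.1, random_state=0)
print(a_tr.shape, a_te.shape)  # (54000, 1) (6000, 1)

# Optional: stratify=y keeps each class's share identical in both splits.
s_tr, s_te, t_tr, t_te = train_test_split(
    X_dummy, y_dummy, test_size=0.1, random_state=0, stratify=y_dummy)
print(np.bincount(t_te))  # 600 samples of each class
```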

2.2 Defining the SVC

from sklearn import svm
# clf=svm.SVC(gamma='scale',decision_function_shape='ovo')
clf=svm.SVC(gamma='scale')
clf.fit(x_train,y_train)

The parameters of the model we defined are as follows:

SVC(C=1.0, cache_size=200, class_weight=None, coef0=0.0,
    decision_function_shape='ovr', degree=3, gamma='scale', kernel='rbf',
    max_iter=-1, probability=False, random_state=None, shrinking=True,
    tol=0.001, verbose=False)
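The commented-out line used `decision_function_shape='ovo'`, while the fitted model uses the default `'ovr'`. The sketch below shows the difference on scikit-learn's small built-in digits dataset, an assumed stand-in with the same number of classes (10) as Fashion-MNIST. Internally `SVC` always trains one-vs-one. The parameter only changes the shape of `decision_function`, so with the default `break_ties=False` the predicted labels are the same.

```python
from sklearn.datasets import load_digits
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)  # 10 classes, like Fashion-MNIST
X, y = X[:500], y[:500]              # small subset to keep this fast

ovr = SVC(gamma="scale", decision_function_shape="ovr").fit(X, y)
ovo = SVC(gamma="scale", decision_function_shape="ovo").fit(X, y)

print(ovr.decision_function(X[:3]).shape)  # (3, 10): one score per class
print(ovo.decision_function(X[:3]).shape)  # (3, 45): one per class pair, 10*9/2
# Predicted labels agree; only the decision_function layout differs.
print((ovr.predict(X) == ovo.predict(X)).all())
```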

2.3 Checking the training results

#Accuracy = number of correct predictions / number of samples
def getAccuracy(y_test,y_pre):
    res=0
    for k,v in zip(y_test,y_pre):
        if k==v:
            res+=1
    return res/y_test.shape[0]

y_pred=clf.predict(x_test)
print("acc:",getAccuracy(y_test,y_pred))

The printed accuracy on the validation set is: acc: 0.8926666666666667
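The hand-written `getAccuracy` computes plain classification accuracy, so it should match scikit-learn's `accuracy_score` and a vectorized NumPy comparison. `clf.score(x_test, y_test)` also returns mean accuracy for classifiers. The sketch below checks the equivalence on small made-up label arrays (hypothetical values, 4 of 5 correct).

```python
import numpy as np
from sklearn.metrics import accuracy_score

def getAccuracy(y_test, y_pre):
    # Same logic as the article's version: count matches, divide by total.
    res = 0
    for k, v in zip(y_test, y_pre):
        if k == v:
            res += 1
    return res / y_test.shape[0]

# Hypothetical labels: 4 of 5 predictions are correct.
y_true = np.array([0, 1, 2, 2, 9])
y_hat = np.array([0, 1, 2, 3, 9])

print(getAccuracy(y_true, y_hat))     # 0.8
print(accuracy_score(y_true, y_hat))  # 0.8
print(np.mean(y_true == y_hat))       # 0.8
```

The vectorized forms avoid a Python-level loop, which matters little for 6,000 validation samples but is the more idiomatic choice.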

2.4 Verifying the results again: