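Detailed steps for building a Slurm GPU cluster

Motivation

I set up Slurm in order to connect my several GPU machines and use the combined computing power of multiple machines to improve efficiency. I previously tried building the cluster with deepops, but it turned out that deepops seems to have requirements on the GPU cards, and the cards in my machines are all different, so I then started looking into the Slurm cluster approach.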

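1. References

I consulted many documents and ran into all sorts of strange errors along the way. In the end I only succeeded by installing via docker. Installing without docker is still being explored, and I will publish a new video once that works.

(1) Official documentation

Slurm official site

(2) Docker cluster on git

Docker cluster

(3) Operating-system cluster (not successful)

Operating-system cluster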

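2. Setup steps

2.1 Three images

For the setup you can simply follow the docker cluster approach exactly. The process needs three images: centos:7, mysql:5.7, and slurm-docker-cluster_19.05.1.tar. I have already finished the setup, and the images will be uploaded as a resource; resource location: slurm image package.

2.2 File configuration

In fact, the most important step when starting the docker cluster is the various configuration files. Mine are as follows: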

(1)slurm.conf

# slurm.conf
#
# See the slurm.conf man page for more information.
#
ClusterName=linux
ControlMachine=slurmctld
ControlAddr=slurmctld
#BackupController=
#BackupAddr=
#
SlurmUser=slurm
#SlurmdUser=root
SlurmctldPort=6817
SlurmdPort=6818
AuthType=auth/munge
#JobCredentialPrivateKey=
#JobCredentialPublicCertificate=
StateSaveLocation=/var/lib/slurmd
SlurmdSpoolDir=/var/spool/slurmd
SwitchType=switch/none
MpiDefault=none
SlurmctldPidFile=/var/run/slurmd/slurmctld.pid
SlurmdPidFile=/var/run/slurmd/slurmd.pid
ProctrackType=proctrack/linuxproc
#PluginDir=
CacheGroups=0
#FirstJobId=
ReturnToService=0
#MaxJobCount=
#PlugStackConfig=
#PropagatePrioProcess=
#PropagateResourceLimits=
#PropagateResourceLimitsExcept=
#Prolog=
#Epilog=
#SrunProlog=
#SrunEpilog=
#TaskProlog=
#TaskEpilog=
#TaskPlugin=
#TrackWCKey=no
#TreeWidth=50
#TmpFS=
#UsePAM=
#
# TIMERS
SlurmctldTimeout=300
SlurmdTimeout=300
InactiveLimit=0
MinJobAge=300
KillWait=30
Waittime=0
#
# SCHEDULING
SchedulerType=sched/backfill
#SchedulerAuth=
#SchedulerPort=
#SchedulerRootFilter=
SelectType=select/cons_res
SelectTypeParameters=CR_CPU_Memory
FastSchedule=1
#PriorityType=priority/multifactor
#PriorityDecayHalfLife=14-0
#PriorityUsageResetPeriod=14-0
#PriorityWeightFairshare=100000
#PriorityWeightAge=1000
#PriorityWeightPartition=10000
#PriorityWeightJobSize=1000
#PriorityMaxAge=1-0
#
# LOGGING
SlurmctldDebug=3
SlurmctldLogFile=/var/log/slurm/slurmctld.log
SlurmdDebug=3
SlurmdLogFile=/var/log/slurm/slurmd.log
JobCompType=jobcomp/filetxt
JobCompLoc=/var/log/slurm/jobcomp.log
#
# ACCOUNTING
JobAcctGatherType=jobacct_gather/linux
JobAcctGatherFrequency=30
DebugFlags=CPU_Bind,gres
#
AccountingStorageType=accounting_storage/slurmdbd
AccountingStorageHost=slurmdbd
AccountingStoragePort=6819
AccountingStorageLoc=slurm_acct_db
#AccountingStoragePass=
#AccountingStorageUser=
#
# COMPUTE NODES
#
NodeName=c1 RealMemory=30000 State=UNKNOWN
NodeName=c3 RealMemory=30000 State=UNKNOWN
GresTypes=gpu
NodeName=c2 Gres=gpu:1 CPUs=4 RealMemory=30000 State=UNKNOWN
#
# PARTITIONS
PartitionName=normal Default=yes Nodes=c[1-3] Priority=50 Nodes=ALL Shared=NO MaxTime=5-00:00:00 DefaultTime=5-00:00:00 State=UP

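As shown above, I configured three nodes. According to the instructions on git, that project actually just starts three containers when it brings up the cluster. Each container is treated as a machine, but in reality they all run on the same physical host. So I added c3 myself: c3 represents a real, separate physical machine, with a container running on it.

2.3 File configuration on the master physical machine

As mentioned earlier, I now have three compute nodes in total: c1 and c2 are on machine A, and c3 is on machine B. The configuration files on machine A are as follows.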

(1)docker-compose.yml

version: "2.3"

services:
  mysql:
    image: mysql:5.7
    hostname: mysql
    container_name: mysql
    environment:
      MYSQL_RANDOM_ROOT_PASSWORD: "yes"
      MYSQL_DATABASE: slurm_acct_db
      MYSQL_USER: slurm
      MYSQL_PASSWORD: password
    volumes:
      - var_lib_mysql:/var/lib/mysql
      - /etc/hosts:/etc/hosts
    ports:
      - "3306:3306"

  slurmdbd:
    image: slurm-docker-cluster:19.05.1
    command: ["slurmdbd"]
    container_name: slurmdbd
    hostname: slurmdbd
    volumes:
      - etc_munge:/etc/munge
      - etc_slurm:/etc/slurm
      - var_log_slurm:/var/log/slurm
      - /etc/hosts:/etc/hosts
    expose:
      - "6819"
    ports:
      - "6819:6819"
    depends_on:
      - mysql

  slurmctld:
    image: slurm-docker-cluster:19.05.1
    command: ["slurmctld"]
    container_name: slurmctld
    hostname: slurmctld
    volumes:
      - etc_munge:/etc/munge
      - etc_slurm:/etc/slurm
      - slurm_jobdir:/data
      - var_log_slurm:/var/log/slurm
      - /ml/home/mcldd/slurm/slurm-docker-cluster/slurm.conf:/etc/slurm/slurm.conf
      - /ml/home/mcldd/slurm/slurm-docker-cluster/gres.conf:/etc/slurm/gres.conf
      - /etc/hosts:/etc/hosts
      #- /ml/home/mcldd/slurm/slurm-docker-cluster/cgroup.conf:/etc/slurm/cgroup.conf
      - /dev/:/dev/
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              device_ids: ['1']
              capabilities: [gpu]
    expose:
      - "6817"
    ports:
      - "6817:6817"
    depends_on:
      - "slurmdbd"

  c1:
    image: slurm-docker-cluster:19.05.1
    command: ["slurmd"]
    hostname: c1
    container_name: c1
    volumes:
      - etc_munge:/etc/munge
      - etc_slurm:/etc/slurm
      - slurm_jobdir:/data
      - var_log_slurm:/var/log/slurm
      - /ml/home/mcldd/slurm/slurm-docker-cluster/slurm.conf:/etc/slurm/slurm.conf
      - /etc/hosts:/etc/hosts
    expose:
      - "6818"
    depends_on:
      - "slurmctld"

  c2:
    image: slurm-docker-cluster:19.05.1
    command: ["slurmd"]
    hostname: c2
    container_name: c2
    volumes:
      - etc_munge:/etc/munge
      - etc_slurm:/etc/slurm
      - slurm_jobdir:/data
      - var_log_slurm:/var/log/slurm
      - /ml/home/mcldd/slurm/slurm-docker-cluster/slurm.conf:/etc/slurm/slurm.conf
      - /ml/home/mcldd/slurm/slurm-docker-cluster/gres.conf:/etc/slurm/gres.conf
      #- /ml/home/mcldd/slurm/slurm-docker-cluster/cgroup.conf:/etc/slurm/cgroup.conf
      - /dev/:/dev
      - /etc/hosts:/etc/hosts
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              device_ids: ['1']
              capabilities: [gpu]
    expose:
      - "6818"
    depends_on:
      - "slurmctld"

volumes:
  etc_munge:
  etc_slurm:
  slurm_jobdir:
  var_lib_mysql:
  var_log_slurm:

Note that docker-compose must be version 1.28.0 or later in order to use GPUs, so check your installed version first and upgrade if it does not meet this requirement. Also note that the version number declared in docker-compose.yml above is 2.3.
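The docker-compose version requirement (1.28.0 or later) can be checked with a small script. This is only a sketch: `version_ge` is a helper name made up for illustration, it relies on the `-V` (version sort) option of GNU `sort`, and it assumes the binary is installed as `docker-compose`.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed when version A >= version B (hypothetical helper).
# Relies on GNU sort's -V option to order the two version strings.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

required="1.28.0"
if command -v docker-compose >/dev/null 2>&1; then
    current="$(docker-compose version --short)"
    current="${current#v}"   # drop a leading "v" if the tool prints one
    if version_ge "$current" "$required"; then
        echo "docker-compose $current is new enough"
    else
        echo "docker-compose $current is older than $required, please upgrade"
    fi
else
    echo "docker-compose not found"
fi
```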

(2)gres.conf

NodeName=c2 Name=gpu File=/dev/nvidia1 CPUs=2

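As shown above, this configuration file is what makes the GPU usable by Slurm. The File field must point to the GPU device path; in general there are as many /dev/nvidiaN devices as there are cards. This is also why, in docker-compose.yml, the c2 node needs this file mapped into the container.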
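To see which device files exist on a host and what the matching gres.conf lines would look like, something like the following can help. It is a sketch under assumptions: `gres_lines` is a hypothetical helper, the node name `c2` is taken from the configuration above, and the generated lines omit the `CPUs=` binding used in my own file.

```shell
#!/usr/bin/env bash
# gres_lines NODE DEVICE...: print one gres.conf line per GPU device file.
# Hypothetical helper; it only formats text and does not touch Slurm.
gres_lines() {
    local node="$1"
    shift
    local dev
    for dev in "$@"; do
        printf 'NodeName=%s Name=gpu File=%s\n' "$node" "$dev"
    done
}

# List GPU device files; the glob skips nvidiactl, nvidia-uvm and similar.
shopt -s nullglob
devices=(/dev/nvidia[0-9]*)
echo "found ${#devices[@]} GPU device file(s)"
if [ "${#devices[@]}" -gt 0 ]; then
    gres_lines c2 "${devices[@]}"
fi
```

Only the devices actually reserved for the container (device 1 in the compose file above) should end up in the final gres.conf.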

(3)hosts

127.0.0.1 titanrtx2

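10.33.43.19 c3

As shown above, the IP address of c3 must be configured on the master machine and then mounted into the containers through docker, so that they can find c3. Another very important point: for the nodes to communicate, the required ports sometimes have to be opened and the firewall disabled. Make sure this is done, otherwise the nodes may be unable to connect.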
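A quick way to confirm that the ports are reachable before starting Slurm is a TCP connect test. The sketch below assumes bash (for its `/dev/tcp` redirection) and coreutils `timeout`; `port_open` is a hypothetical helper, and the two targets are my reading of the setup above (slurmctld on 6817, slurmd on c3 on 6818).

```shell
#!/usr/bin/env bash
# port_open HOST PORT: succeed if a TCP connection opens within 2 seconds.
# Hypothetical helper built on bash's /dev/tcp and coreutils' timeout.
port_open() {
    timeout 2 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Assumed targets: the controller port and the slurmd port on c3.
for target in slurmctld:6817 c3:6818; do
    host="${target%%:*}"
    port="${target##*:}"
    if port_open "$host" "$port"; then
        echo "$host:$port reachable"
    else
        echo "$host:$port NOT reachable"
    fi
done
```

Run it on each machine after the hosts entries are in place; a "NOT reachable" result usually points at a firewall rule or a port that is not published by the container.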

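2.4 File configuration on the c3 physical machine

c3 is a separate machine that finds and talks to the master through ports and hostnames, so its configuration differs slightly from the master's.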

(1)docker-compose.yml

version: "2.3"

services:
  c3:
    image: slurm-docker-cluster:19.05.1
    command: ["slurmd"]
    hostname: c3
    container_name: c3
    volumes:
      - etc_munge:/etc/munge
      - etc_slurm:/etc/slurm
      - slurm_jobdir:/data
      - var_log_slurm:/var/log/slurm
      - /etc/hosts:/etc/hosts
      - /ml/home/mcldd/slurm/slurm-docker-cluster/slurm.conf:/etc/slurm/slurm.conf
    expose:
      - "6818"
    ports:
      - "6818:6818"

volumes:
  etc_munge:
  etc_slurm:
  slurm_jobdir:
  var_lib_mysql:
  var_log_slurm:

(2)hosts

10.33.43.27 slurmctld c1 c2

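::1 localhost6 localhost6.localdomain

As shown above, the addresses of c1, c2, and slurmctld all need to be configured. All other files are the same as on the master. Once this is done, just start it.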

3、启动步骤

3.1 启动master

启动:

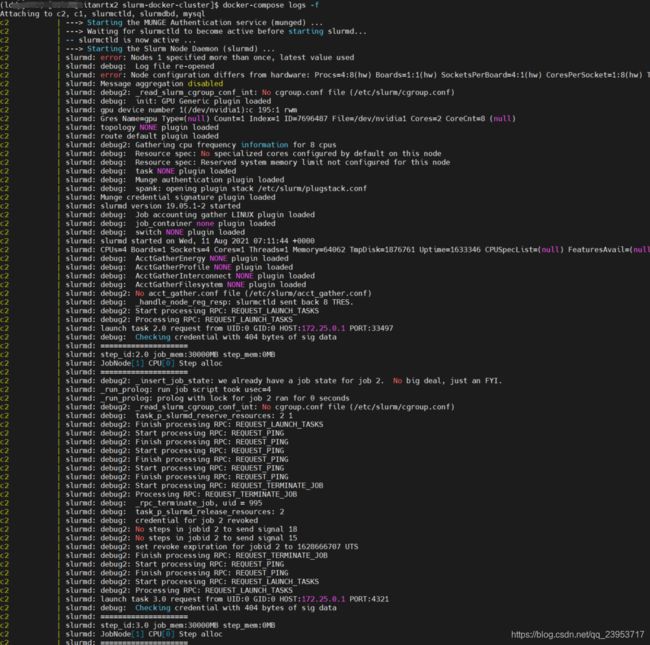

日志:

3.2 启动c3

启动:

日志:
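For reference, starting a node and looking at its logs from the command line can be sketched as below. This is not a transcript of my session: `run` and `start_node` are hypothetical helpers, the script assumes it is run from the directory holding that machine's docker-compose.yml, and setting `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# run CMD...: print the command, then execute it unless DRY_RUN=1.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-0}" != "1" ]; then
        "$@"
    fi
}

# start_node: start the services in ./docker-compose.yml, then show
# container status and the most recent log lines.
start_node() {
    run docker-compose up -d
    run docker-compose ps
    run docker-compose logs --tail=50
}

# Only act when a compose file and the docker-compose binary are present.
if [ -f docker-compose.yml ] && command -v docker-compose >/dev/null 2>&1; then
    start_node
fi
```

Run it on the master first so that slurmctld is up, then on c3.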

4. Testing job allocation

# Run the command

srun -N 3 hostname

# Output

c1

c2

c3

# Using the GPU

I will look into this part later. Basically everything works, but versions tend to mismatch when nodes are being allocated.
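As a starting point for the GPU test, a job can request the generic resource defined in slurm.conf and gres.conf. The sketch below is untested on my cluster and rests on assumptions: that `nvidia-smi` is available inside the c2 container, and that the commands are run somewhere `srun` and `scontrol` exist (for example inside the slurmctld container).

```shell
#!/usr/bin/env bash
# Build an srun command that asks for one GPU on node c2 and lists the
# GPUs visible to the job. Node name and GPU count follow slurm.conf above.
node="c2"
ngpu=1
gpu_job=(srun -N 1 -w "$node" --gres="gpu:$ngpu" nvidia-smi -L)
echo "${gpu_job[*]}"

# Only run when the Slurm client tools are available.
if command -v srun >/dev/null 2>&1; then
    # Check that the controller reports the GPU for this node.
    scontrol show node "$node" | grep -i gres || true
    "${gpu_job[@]}"
fi
```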