LeNet: Model Principles and PyTorch Code

1. Overview

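LeNet-5 [1] dates from 1994 (the paper describing it was published in 1998) and is one of the earliest deep convolutional neural networks; it helped drive the development of deep learning. Yann LeCun's pioneering work on it began in 1988 and, after several successful iterations, was named LeNet-5. It was first used for handwritten digit recognition: at the time, many US banks used it to read the handwritten digits on checks. It is one of the most representative experimental systems among early convolutional neural networks.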

2. Basic idea of the algorithm

2.1 Network structure of LeNet-5

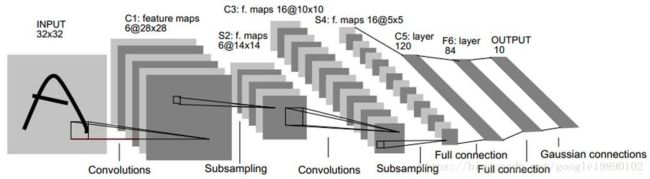

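LeNet-5 consists mainly of 2 convolutional layers, 2 subsampling (pooling) layers, and 3 fully connected layers (two hidden layers and one output layer). C5 below is written as a convolution, but because its 5 × 5 kernels cover the entire 5 × 5 input, it acts as the first fully connected layer. In detail:

- INPUT is the input, a 32 × 32 image;

- C1 is a convolutional layer with 6 kernels of size 5 × 5 and stride 1, producing 6 feature maps of 28 × 28;

- S2 is a subsampling layer that applies average pooling over 2 × 2 windows, producing 6 feature maps of 14 × 14;

- C3 is a convolutional layer with 16 kernels of size 5 × 5 and stride 1, producing 16 feature maps of 10 × 10;

- S4 is a subsampling layer that applies average pooling over 2 × 2 windows, producing 16 feature maps of 5 × 5;

- C5 is a convolutional layer with 120 kernels of size 5 × 5 and stride 1, producing 120 feature maps of 1 × 1;

- F6 and OUTPUT are the remaining fully connected layers, with 84 and 10 units respectively.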
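The feature map sizes in this list follow from the usual output-size rule for an unpadded convolution or pooling window, floor((n - k) / s) + 1. The short sketch below only reproduces that arithmetic in plain Python; the helper name `conv_out` is made up for illustration and is not part of any library.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1

# (layer name, kernel size, stride), in the order the layers are listed above
layers = [
    ("C1", 5, 1),
    ("S2", 2, 2),
    ("C3", 5, 1),
    ("S4", 2, 2),
    ("C5", 5, 1),
]

size = 32  # INPUT is 32 x 32
sizes = []
for name, kernel, stride in layers:
    size = conv_out(size, kernel, stride)
    sizes.append(size)
    print(f"{name}: {size} x {size}")
```

Running it walks the side length through 28, 14, 10, 5 and 1, which matches the sizes given for C1 through C5.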

Although LeNet-5 is rarely used today, it laid the foundation for modern convolutional neural networks.

3. Model code

    import time

    import torch
    from torch import nn, optim

    import sys
    # d2lzh_pytorch is a helper package expected one directory up;
    # the model definition below does not depend on it.
    sys.path.append("..")
    import d2lzh_pytorch as d2l

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

    class LeNet(nn.Module):
        def __init__(self):
            super(LeNet, self).__init__()
            # Convolutional part: two conv + sigmoid + max-pooling stages
            self.conv = nn.Sequential(
                nn.Conv2d(1, 6, 5),   # in_channels, out_channels, kernel_size
                nn.Sigmoid(),
                nn.MaxPool2d(2, 2),   # kernel_size, stride
                nn.Conv2d(6, 16, 5),
                nn.Sigmoid(),
                nn.MaxPool2d(2, 2)
            )
            # Fully connected part: 120 -> 84 -> 10 class scores
            self.fc = nn.Sequential(
                nn.Linear(16*4*4, 120),  # 16 channels x 4 x 4, i.e. a 28 x 28 input
                nn.Sigmoid(),
                nn.Linear(120, 84),
                nn.Sigmoid(),
                nn.Linear(84, 10)
            )

        def forward(self, img):
            feature = self.conv(img)
            # Flatten each sample to a vector before the fully connected layers
            output = self.fc(feature.view(img.shape[0], -1))
            return output
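To see why the first fully connected layer takes 16*4*4 inputs, it can help to push a dummy batch through the layers one at a time. The sketch below rebuilds the same layers as a single `nn.Sequential` purely for inspection; the 28 × 28 input size is an assumption inferred from the `16*4*4` figure, since the article does not name a dataset, and the variable names are illustrative.

```python
import torch
from torch import nn

# Same layers as the LeNet class above, flattened into one Sequential
# so that each intermediate shape can be inspected.
layers = nn.Sequential(
    nn.Conv2d(1, 6, 5), nn.Sigmoid(), nn.MaxPool2d(2, 2),
    nn.Conv2d(6, 16, 5), nn.Sigmoid(), nn.MaxPool2d(2, 2),
    nn.Flatten(),
    nn.Linear(16 * 4 * 4, 120), nn.Sigmoid(),
    nn.Linear(120, 84), nn.Sigmoid(),
    nn.Linear(84, 10),
)

# Assumed input: a batch of 2 single-channel 28 x 28 images
x = torch.randn(2, 1, 28, 28)
shapes = []
with torch.no_grad():
    for layer in layers:
        x = layer(x)
        shapes.append((layer.__class__.__name__, tuple(x.shape)))
        print(f"{layer.__class__.__name__:10s} -> {tuple(x.shape)}")
```

With this input the second pooling stage leaves 16 maps of 4 × 4, so flattening gives 256 features per sample. A 32 × 32 input would instead leave 5 × 5 maps and would need `16*5*5` input features in the first linear layer.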

Print the network structure:

    net = LeNet()
    print(net)

Output:

    LeNet(
      (conv): Sequential(
        (0): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))
        (1): Sigmoid()
        (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (3): Conv2d(6, 16, kernel_size=(5, 5), stride=(1, 1))
        (4): Sigmoid()
        (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (fc): Sequential(
        (0): Linear(in_features=256, out_features=120, bias=True)
        (1): Sigmoid()
        (2): Linear(in_features=120, out_features=84, bias=True)
        (3): Sigmoid()
        (4): Linear(in_features=84, out_features=10, bias=True)
      )
    )
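The printed structure is enough to work out how many trainable parameters this network has. The sketch below does the count by hand in plain Python, using the layer indices from the printout as labels; the helper names `conv_params` and `linear_params` are made up for illustration.

```python
def conv_params(in_ch: int, out_ch: int, k: int) -> int:
    """k x k weights per input channel, plus one bias, for each output channel."""
    return out_ch * (in_ch * k * k + 1)

def linear_params(in_f: int, out_f: int) -> int:
    """One weight per input feature, plus one bias, for each output feature."""
    return out_f * (in_f + 1)

# Keys follow the module names in the printed structure above
counts = {
    "conv.0": conv_params(1, 6, 5),
    "conv.3": conv_params(6, 16, 5),
    "fc.0": linear_params(256, 120),
    "fc.2": linear_params(120, 84),
    "fc.4": linear_params(84, 10),
}
total = sum(counts.values())
for name, n in counts.items():
    print(f"{name}: {n}")
print("total:", total)
```

The total comes to 44426, with most of the parameters in the first fully connected layer. With PyTorch available, the same figure should be obtainable from `sum(p.numel() for p in net.parameters())`.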

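Summary:

- A convolutional neural network is a network that contains convolutional layers.

- LeNet, as implemented above, alternates convolutional layers with max pooling layers and then uses fully connected layers to classify images (the original LeNet-5 described in Section 2.1 uses average pooling).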
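The code in Section 3 uses max pooling and a 256-feature flatten, whereas Section 2.1 describes average pooling, a 32 × 32 input and a C5 convolution. For comparison, here is a minimal sketch that follows the Section 2.1 layer list more closely. The class name `LeNet5AvgPool` is hypothetical, the sigmoid activations are simply carried over from the code above, and the sketch does not attempt to reproduce any other detail of the original paper [1].

```python
import torch
from torch import nn

class LeNet5AvgPool(nn.Module):  # hypothetical name, not from the article
    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 6, 5),     # C1: 32 x 32 -> 6 maps of 28 x 28
            nn.Sigmoid(),
            nn.AvgPool2d(2, 2),     # S2: -> 6 maps of 14 x 14
            nn.Conv2d(6, 16, 5),    # C3: -> 16 maps of 10 x 10
            nn.Sigmoid(),
            nn.AvgPool2d(2, 2),     # S4: -> 16 maps of 5 x 5
            nn.Conv2d(16, 120, 5),  # C5: -> 120 maps of 1 x 1
            nn.Sigmoid(),
        )
        self.classifier = nn.Sequential(
            nn.Linear(120, 84),          # F6
            nn.Sigmoid(),
            nn.Linear(84, num_classes),  # OUTPUT
        )

    def forward(self, img):
        feature = self.features(img)
        # Flatten (N, 120, 1, 1) to (N, 120) before the linear layers
        return self.classifier(feature.flatten(1))

net_avg = LeNet5AvgPool()
out = net_avg(torch.randn(4, 1, 32, 32))  # batch of 4 dummy 32 x 32 images
print(out.shape)
```

Because C5 reduces each map to 1 × 1, this variant only accepts 32 × 32 inputs; smaller images such as 28 × 28 would need padding first.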

References:

[1] LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11), 2278-2324.

[2] "Deep Learning Algorithm Principles: Classic CNN Architecture, LeNet-5" (title translated from Chinese).