OpenCL配置及测试,使用visual studio

依赖库

OpenCL是一套标准,由Khronos Group管理,Khronos在github上有一个仓库,另外各个硬件厂家也都有自己的实现。

KhronosGroup

github地址:https://github.com/KhronosGroup/OpenCL-SDK

点击页面右侧的Releases,根据自己的环境进行下载。

可以下载编译好的压缩包,比如:OpenCL-SDK-v2022.09.30-Win-x64.zip

解压后可以看到bin,include,lib等文件夹,这些是主要要用的,习惯上我会把最外层文件夹名字后缀全部干掉,只留下OpenCL。

Nvidia

Nvidia的OpenCL库在装Cuda的时候会顺带装上,需要去Cuda安装目录里面扒出来。

Cuda下载:https://developer.nvidia.com/cuda-downloads

官方Demo:https://developer.nvidia.com/opencl

安装之后去下面目录中把CL文件夹拷贝出来:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.4\include

然后去下面两个文件夹中把OpenCL.lib拷贝出来:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.4\lib\Win32

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.4\lib\x64

最后形成这样一个文件夹结构:

- OpenCL

- include

- CL:一堆头文件

- lib

- Win32:OpenCL.lib

- x64:OpenCL.lib

- include

Intel

Intel比较坑,需要下载sdk并安装,但是下载sdk的过程必须要求注册。

Intel OpenCL sdk下载:https://software.intel.com/en-us/intel-opencl/download

安装后的sdk的路径为:

C:\Program Files (x86)\IntelSWTools\system_studio_2020\OpenCL\sdk

这个路径下有include和lib,拷贝出来形成与上述相同的文件夹结构。

在Visual Studio中配置依赖库

下面以KhronosGroup的SDK为例。

创建一个新的VS空项目,平台改成x64,然后把OpenCL依赖库拷贝进去。

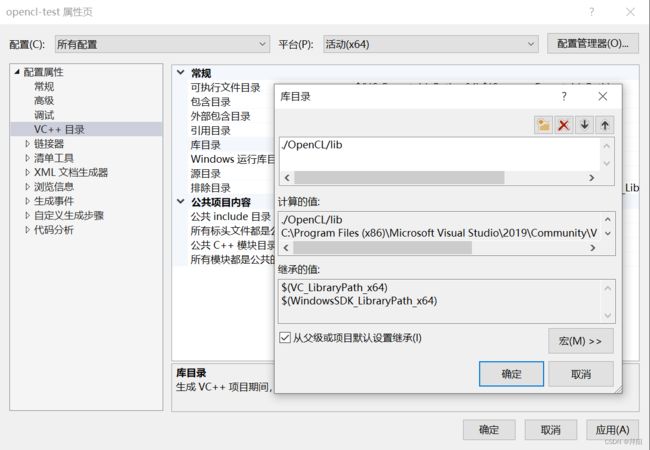

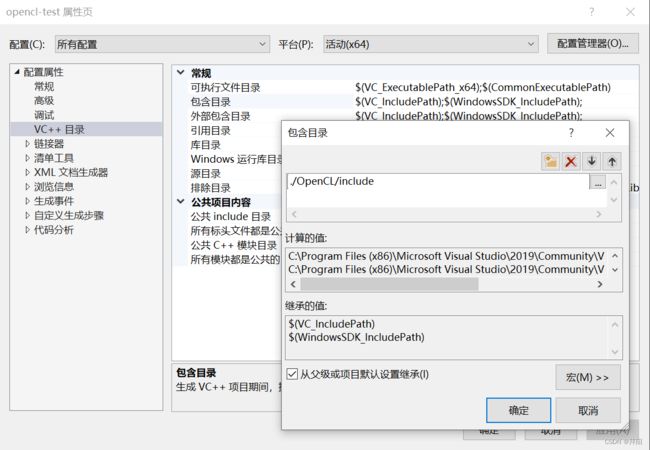

配置include和lib目录

右键点击项目 - 属性,按如下方式配置include和lib路径。

请注意配置成相对路径,这样把工程拷贝给别人后,别人不需要更改直接就可以运行,下同。

配置附加依赖项

点击链接器 - 输入 - 附加依赖项,把OpenCL.lib写进去。

配置dll目录(可选)

在调试 - 环境中配置dll路径,注意这时候路径前面要写PATH=。

这一步常常是可选的,为什么呢?因为如果安装过cuda或者其他类似驱动的话,Windows\System32文件夹下常常已经有OpenCL.dll了,这个系统文件夹下的dll可以默认被调用到,所以不用配置也可以使用。(不过System32文件夹下的dll和KhronosGroup的dll不是一套代码编译出来的,可能会存在接口不一致情况。)

测试代码

入门可以找一些公开的代码库学习一下,比如Nvidia的Samples:https://developer.nvidia.com/opencl

但是Nvidia自己搞了一些公用库,并且有些工程项目使用了非常老的vs版本(vs08,vs10之类),不太好配。

因此下面以其中一个DotProduct为例,把代码重新梳理了一下,把下面的两个文件main.cpp和dot_product.cl放入工程目录下,并把main.cpp添加到工程源文件中,即可点F5执行测试。

dot_product.cl是kernel文件,内容是Nvidia sample里面贴过来的,一点没改。注意文件名不要改,因为主程序要通过文件名去找kernel文件。

main.cpp

#include dot_product.cl

/*

* Copyright 1993-2010 NVIDIA Corporation. All rights reserved.

*

* Please refer to the NVIDIA end user license agreement (EULA) associated

* with this source code for terms and conditions that govern your use of

* this software. Any use, reproduction, disclosure, or distribution of

* this software and related documentation outside the terms of the EULA

* is strictly prohibited.

*

*/

__kernel void DotProduct (__global float* a, __global float* b, __global float* c, int iNumElements)

{

// find position in global arrays

int iGID = get_global_id(0);

// bound check (equivalent to the limit on a 'for' loop for standard/serial C code

if (iGID >= iNumElements)

{

return;

}

// process

int iInOffset = iGID << 2;

c[iGID] = a[iInOffset] * b[iInOffset]

+ a[iInOffset + 1] * b[iInOffset + 1]

+ a[iInOffset + 2] * b[iInOffset + 2]

+ a[iInOffset + 3] * b[iInOffset + 3];

}