VINS-Fusion运行Kaist数据集

目录

- Kaist数据集转rosbag

- 运行Kaist rosbag数据集

Kaist数据集转rosbag

使用Kaist序列38,转换rosbag参考GithubKaist2bag,这里主要使用IMU,双目相机以及GPS数据。

附:其他的合并rosbag方法数据参考bag_merge。

#!/usr/bin/env python

import sys

import argparse

from fnmatch import fnmatchcase

from rosbag import Bag

def main():

parser = argparse.ArgumentParser(description='Merge one or more bag files with the possibilities of filtering topics.')

parser.add_argument('outputbag',

help='output bag file with topics merged')

parser.add_argument('inputbag', nargs='+',

help='input bag files')

parser.add_argument('-v', '--verbose', action="store_true", default=False,

help='verbose output')

parser.add_argument('-t', '--topics', default="*",

help='string interpreted as a list of topics (wildcards \'*\' and \'?\' allowed) to include in the merged bag file')

args = parser.parse_args()

topics = args.topics.split(' ')

total_included_count = 0

total_skipped_count = 0

if (args.verbose):

print("Writing bag file: " + args.outputbag)

print("Matching topics against patters: '%s'" % ' '.join(topics))

with Bag(args.outputbag, 'w') as o:

for ifile in args.inputbag:

matchedtopics = []

included_count = 0

skipped_count = 0

if (args.verbose):

print("> Reading bag file: " + ifile)

with Bag(ifile, 'r') as ib:

for topic, msg, t in ib:

if any(fnmatchcase(topic, pattern) for pattern in topics):

if not topic in matchedtopics:

matchedtopics.append(topic)

if (args.verbose):

print("Including matched topic '%s'" % topic)

o.write(topic, msg, t)

included_count += 1

else:

skipped_count += 1

total_included_count += included_count

total_skipped_count += skipped_count

if (args.verbose):

print("< Included %d messages and skipped %d" % (included_count, skipped_count))

if (args.verbose):

print("Total: Included %d messages and skipped %d" % (total_included_count, total_skipped_count))

if __name__ == "__main__":

main()

运行Kaist rosbag数据集

Kaist相机配置文件:

%YAML:1.0

---

model_type: PINHOLE

camera_name: camera

image_width: 1280

image_height: 560

distortion_parameters:

k1: -5.6143027800000002e-02

k2: 1.3952563200000001e-01

p1: -1.2155906999999999e-03

p2: -9.7281389999999998e-04

projection_parameters:

fx: 816.90378992770002

fy: 811.56803828490001

cx: 608.50726281690004

cy: 263.47599764440002

总的配置文件:

%YAML:1.0

#common parameters

imu: 1

num_of_cam: 1

imu_topic: "/imu0"

image0_topic: "/cam0/image_raw"

image1_topic: "/cam1/image_raw"

output_path: "/home/Workspace/RosWorkspace/catkin_ws_vins_fusion/src/VINS-Fusion/output/"

cam0_calib: "kaist_cam.yaml"

cam1_calib: "kaist_cam.yaml"

image_width: 1280

image_height: 560

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

body_T_cam0: !!opencv-matrix

rows: 4

cols: 4

dt: d

data: [-6.80499000e-03, -1.53215000e-02, 9.99850000e-01, 1.71239,

-9.99977000e-01, 3.34627000e-04, -6.80066000e-03, 0.247401,

-2.30383000e-04, -9.99883000e-01, -1.53234000e-02, -0.11589,

0, 0, 0, 1]

body_T_cam1: !!opencv-matrix

rows: 4

cols: 4

dt: d

data: [-6.80499000e-03, -1.53215000e-02, 9.99850000e-01, 1.71239,

-9.99977000e-01, 3.34627000e-04, -6.80066000e-03, 0.247401,

-2.30383000e-04, -9.99883000e-01, -1.53234000e-02, -0.11589,

0, 0, 0, 1]

#Multiple thread support

multiple_thread: 1

#feature traker paprameters

max_cnt: 200 # max feature number in feature tracking

min_dist: 30 # min distance between two features

freq: 10 # frequence (Hz) of publish tracking result. At least 10Hz for good estimation. If set 0, the frequence will be same as raw image

F_threshold: 1.0 # ransac threshold (pixel)

show_track: 1 # publish tracking image as topic

flow_back: 1 # perform forward and backward optical flow to improve feature tracking accuracy

#optimization parameters

max_solver_time: 0.08 # max solver itration time (s), to guarantee real time

max_num_iterations: 10 # max solver itrations, to guarantee real time

keyframe_parallax: 15 # keyframe selection threshold (pixel)

#imu parameters The more accurate parameters you provide, the better performance

acc_n: 0.02 # accelerometer measurement noise standard deviation. #0.2 0.04

gyr_n: 0.003 # gyroscope measurement noise standard deviation. #0.05 0.004

acc_w: 0.005 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 3.0e-5 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.81007 # gravity magnitude

#unsynchronization parameters

estimate_td: 0 # online estimate time offset between camera and imu

td: 0.0 # initial value of time offset. unit: s. readed image clock + td = real image clock (IMU clock)

#loop closure parameters

load_previous_pose_graph: 0 # load and reuse previous pose graph; load from 'pose_graph_save_path'

pose_graph_save_path: "/home/Workspace/RosWorkspace/catkin_ws_vins_fusion/src/VINS-Fusion/output/pose_graph/" # save and load path

save_image: 1 # save image in pose graph for visualization prupose; you can close this function by setting 0

运行指令:

roslaunch vins vins_rviz.launch

rosrun vins vins_node ~/catkin_ws/src/VINS-Fusion/config/kaist/kaist_config.yaml

rosrun global_fusion global_fusion_node

rosbag play Kaist38_gps.bag

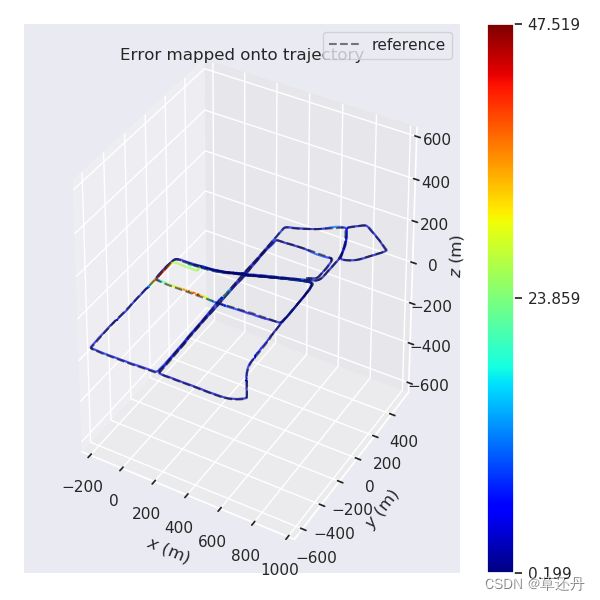

运行结果:

前期由于ENU坐标系与VIO坐标系没有对齐,误差稍大,收敛之后整体效果尚可,但是在个别路段由于GPS的定位偏差较大,优化出的位姿效果没有预期的好。

前期由于ENU坐标系与VIO坐标系没有对齐,误差稍大,收敛之后整体效果尚可,但是在个别路段由于GPS的定位偏差较大,优化出的位姿效果没有预期的好。