AI小项目

闯红灯检测项目,给定特定场景,检测非机动车是否闯红灯,并形成完整证据链

1、首先检测三种物体(person、bicycle、motorbike)

拟采用Yolov5进行检测,Yolov5在目标检测领域中十分常用,官方Yolov5包括四个版本:Yolov5s、Yolov5m、Yolov5l、Yolov5x四个模型,本次采用Yolov5m。

1.1、数据集介绍

项目拟采用MS COCO数据集,COCO 2017版本包括79个类:

- person

- bicycle

- car

- motorbike

- aeroplane

- bus

- train

- truck

- boat

- traffic light

- ...

本次仅检测person、bicycle、motorbike,下载COCO数据集,源数据集目录格式如下:

train2017

val2017

annotations

caption_train2017.json

caption_val2017.json

instances_train2017.json

instances_val2017.json

person_keypoints_train2017.json

person_keypoints_val2017.json

可以发现,annotations中有三种标注类型,image captions(看图说话)、object instaces(目标实例,用于目标检测)、object keypoints(目标关键点,用于姿态估计),本项目采用object instances。

1.2、数据集处理

需要将数据集提取出我们需要的类,并转成yolo需要的格式。

1.2.1、Yolov5数据格式

yolov5需要什么格式数据呢?根据官方介绍,数据格式应如下:

images

train

img1.jpg

img2.jpg

...

val

img3.jpg

...

labels

train

img1.txt

img2.txt

...

val

img3.txt

...

每个txt文件中应为如下格式:

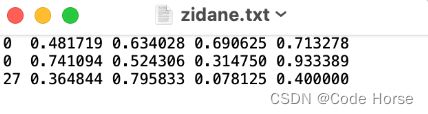

每一列分别对应为类别id、相对中心坐标x、相对中心坐标y、标注框相对宽度w,标注框相对高度h。

1.2.2、COCO数据格式(json)

主要有如下几个字段

info:

"info": { # 数据集信息描述

"description": "COCO 2017 Dataset", # 数据集描述

"url": "http://cocodataset.org", # 下载地址

"version": "1.0", # 版本

"year": 2017, # 年份

"contributor": "COCO Consortium", # 提供者

"date_created": "2017/09/01" # 数据创建日期

},

licenses:

"licenses": [

{

"url": "http://creativecommons.org/licenses/by-nc-sa/2.0/",

"id": 1,

"name": "Attribution-NonCommercial-ShareAlike License"

},

……

……

],

images:

"images": [

{

"license": 4,

"file_name": "000000397133.jpg", # 图片名

"coco_url": "http://images.cocodataset.org/val2017/000000397133.jpg",# 网路地址路径

"height": 427, # 高

"width": 640, # 宽

"date_captured": "2013-11-14 17:02:52", # 数据获取日期

"flickr_url": "http://farm7.staticflickr.com/6116/6255196340_da26cf2c9e_z.jpg",# flickr网路地址

"id": 397133 # 图片的ID编号(每张图片ID是唯一的)

},

……

……

],

categories:

"categories": [ # 类别描述

{

"supercategory": "person", # 主类别

"id": 1, # 类对应的id (0 默认为背景)

"name": "person" # 子类别

},

{

"supercategory": "vehicle",

"id": 2,

"name": "bicycle"

},

{

"supercategory": "vehicle",

"id": 3,

"name": "car"

},

……

……

],

annotations:

"annotation": [

{

"segmentation": [ # 对象的边界点(边界多边形)

[

224.24,297.18,# 第一个点 x,y坐标

228.29,297.18, # 第二个点 x,y坐标

234.91,298.29,

……

……

225.34,297.55

]

],

"area": 1481.3806499999994, # 区域面积

"iscrowd": 0, #

"image_id": 397133, # 对应的图片ID(与images中的ID对应)

"bbox": [217.62,240.54,38.99,57.75], # 定位边框 [x,y,w,h]

"category_id": 44, # 类别ID(与categories中的ID对应)

"id": 82445 # 对象ID,因为每一个图像有不止一个对象,所以要对每一个对象编号(每个对象的ID是唯一的)

},

……

……

]

1.2.3、COCO数据集处理

提取json文件并改为txt格式,json.load()载入json文件,得到images和annotations信息,遍历所有图像,如果当前图像有标注框信息,且所属类为我们需要的类时,写入txt,并copy图像到新文件中。

def json_txt(json_path, txt_path, source_photo, target_photo):

# 生成存放json文件的路径

if not os.path.exists(txt_path):

os.mkdir(txt_path)

# 读取json文件

with open(json_path, 'r') as f:

dictortary = json.load(f)

classes_ids = [1, 2, 4]

classes_ids_myid = {1:0, 2:1, 4:2}

# 得到images和annotations信息

images_value = dictortary.get("images") # 得到某个键下对应的值

annotations_value = dictortary.get("annotations") # 得到某个键下对应的值

# 使用images下的图像名的id创建txt文件

list=[] # 将文件名存储在list中

id2name = dict()

id2height = dict()

id2width = dict()

for i in images_value:

list.append(i.get("id"))

id2name[i.get("id")] = i.get("file_name")

id2height[i.get("id")] = i.get("height")

id2width[i.get("id")] = i.get("width")

# 将id对应图片的bbox写入txt文件中

for i in list: # 所有图像

for j in annotations_value: # 遍历所有annotations

try:

if j.get("image_id") == i and (j.get("category_id") in classes_ids):

# bbox标签归一化处理

box = j.get('bbox')

bbox = convert((id2width[i], id2height[i]), box)

file_name = txt_path + "coco_" + str(i) + '.txt'

if not os.path.exists(file_name):

open(file_name, 'w')

with open(file_name, 'a') as file1: # 写入txt文件中

print(classes_ids_myid[j.get("category_id")], format(bbox[0], '.3f'), format(bbox[1], '.3f'), format(bbox[2], '.3f'), format(bbox[3], '.3f'), file=file1)

imgname = id2name[i]

source_photo_path = source_photo + imgname

target_photo_path = target_photo + "coco_" + str(i) + '.jpg'

if not os.path.exists(target_photo_path):

shutil.copy(source_photo_path, target_photo_path)

except:

print("i == ", i)同时需要将json中bbox中框的绝对位置(,,w, h)转为相对位置(,,w`, h`),通过convert()函数:

def convert(size, box):# 将xmin,ymin,w,h转为相对中心坐标x、相对中心坐标y、标注框相对宽度w,标注框相对高度h。

dw = 1. / size[0]

dh = 1. / size[1]

x = box[0] + box[2] / 2.0

y = box[1] + box[3] / 2.0

w = box[2]

h = box[3]

x = x * dw

w = w * dw

y = y * dh

h = h * dh

return (x, y, w, h)最终目录格式如下:

至此,数据集处理完毕!

1.3、Yolov5训练

在github中下载好Yolov5模型,其中配置十分完备,训练时仅更改几个特殊配置即可:

1、vehicle_detection_data_conf.yaml

2、vehicle_detection_hyper_conf.yaml

3、vehicle_detection_model_conf.yaml

vehicle_detection_data_conf.yaml,配置训练路径及类数:

train: Vehicle_Detection_Dataset/images/train

val: Vehicle_Detection_Dataset/images/val

# number of classes

nc: 3

# class names

names: ['person', 'bicycle', 'motorcycle']vehicle_detection_hyper_conf.yaml,配置超参:

# YOLOv5 by Ultralytics, GPL-3.0 license

# Hyperparameters for VOC finetuning

# python train.py --batch 64 --weights yolov5m.pt --data VOC.yaml --img 512 --epochs 50

# See tutorials for hyperparameter evolution https://github.com/ultralytics/yolov5#tutorials

# Hyperparameter Evolution Results

# Generations: 306

# P R mAP.5 mAP.5:.95 box obj cls

# Metrics: 0.6 0.936 0.896 0.684 0.0115 0.00805 0.00146

lr0: 0.0032

lrf: 0.12

momentum: 0.843

weight_decay: 0.00036

warmup_epochs: 2.0

warmup_momentum: 0.5

warmup_bias_lr: 0.05

box: 0.0296

cls: 0.243

cls_pw: 0.631

obj: 0.301

obj_pw: 0.911

iou_t: 0.2

anchor_t: 2.91

# anchors: 3.63

fl_gamma: 0.0

hsv_h: 0.0138

hsv_s: 0.664

hsv_v: 0.464

degrees: 0.373

translate: 0.245

scale: 0.898

shear: 0.602

perspective: 0.0

flipud: 0.00856

fliplr: 0.5

mosaic: 1.0

mixup: 0.243

copy_paste: 0.0vehicle_detection_model_conf.yaml,配置模型参数

# YOLOv5 by Ultralytics, GPL-3.0 license

# Parameters

nc: 3 # number of classes

depth_multiple: 0.67 # model depth multiple -> compound scaling

width_multiple: 0.75 # layer channel multiple -> compound scaling

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)之后即可开始训练(train.sh):

python yolov5/train.py --img 640 \

--cfg configs/vehicle_detection/vehicle_detection_model_conf.yaml \

--hyp configs/vehicle_detection/vehicle_detection_hyper_conf.yaml \

--batch -1 \

--weight yolov5m.pt \

--epochs 100 \

--data configs/vehicle_detection/vehicle_detection_data_conf.yaml \

--workers 8 \

--project "configs/vehicle_detection/Model Vehicle Detection" \

--save-period 1