Scraping Web Page Images with a Python Crawler and Saving Them Locally

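Contents

1. Scrape images from a web page and save them locally

2. Scrape web page data and export it to Excel

1. Scrape images from a web page and save them locally

Import the required libraries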

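import collections  # word-frequency counting
import os
import re  # regular expressions
import urllib.error  # errors raised while fetching a URL
import urllib.request  # open a given URL and fetch the page data

import jieba  # Chinese word segmentation
import matplotlib.pyplot as plt  # plotting
import numpy as np  # numerical data processing
import pandas as pd
import wordcloud  # word-cloud rendering
import xlwt  # writing Excel files
from PIL import Image  # image processing
from bs4 import BeautifulSoup  # HTML parsing and data extraction
from pyecharts.charts import Bar  # bar charts

Pretend to be a browser when requesting pages from the server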

head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"
}

Fuzzy string matching (regular expressions)

# 影片详情链接规则

findLink = re.compile(r'') # 创建正则表达式对象

# 影片图片的链接

findImgSrc = re.compile(r'(.*)')

# 影片评分

findRating = re.compile(r'')

# 评价人数

findJudge = re.compile(r'(\d*)人评价')

# 概况

findInq = re.compile(r'(.*)')

# 找到影片的相关内容

findBd = re.compile(r'(.*?)

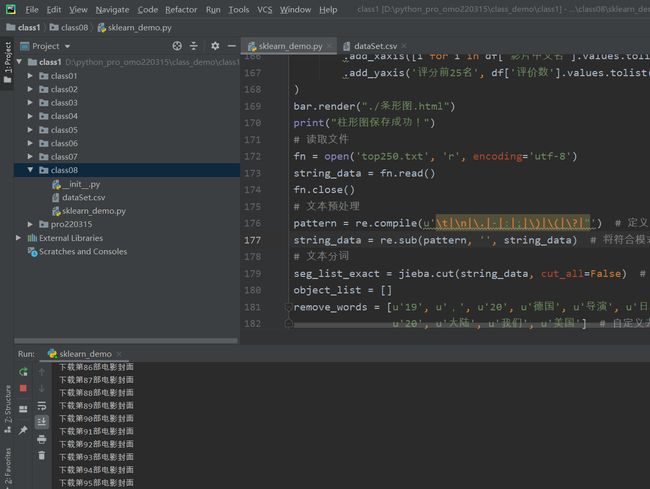

', re.S) 爬取网页数据,下载电影封面,保存至本地自己设置好的文件夹
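Before running the full crawl, it can help to try the patterns on a single entry. The sketch below is not part of the original script: `SAMPLE_ITEM` is a made-up fragment that imitates one `<div class="item">` block, the patterns are written for that assumed markup, and `parse_item` is a hypothetical helper name. The real page may need different expressions.

```python
import re

# Made-up fragment imitating one entry of the list page (assumption, not real data).
SAMPLE_ITEM = """
<div class="item">
<a href="https://example.com/movie/1">
<img alt="示例电影" src="https://example.com/cover.jpg" width="100"/>
</a>
<span class="title">示例电影</span>
<span class="title"> / Sample Movie</span>
<span class="rating_num" property="v:average">9.0</span>
<span>12345人评价</span>
</div>
"""

# Patterns written for the fragment above; each has exactly one capture group,
# so findall() returns a list of the captured strings.
find_link = re.compile(r'<a href="(.*?)">')
find_img = re.compile(r'<img.*src="(.*?)"', re.S)
find_title = re.compile(r'<span class="title">(.*)</span>')
find_rating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')
find_judge = re.compile(r'<span>(\d*)人评价</span>')


def parse_item(item_html):
    """Extract the fields of one entry into a dict (hypothetical helper)."""
    titles = find_title.findall(item_html)
    return {
        "link": find_link.findall(item_html)[0],
        "img": find_img.findall(item_html)[0],
        "title": titles[0],
        # A second title, if present, is the foreign name prefixed with "/".
        "other_title": titles[1].replace("/", "").strip() if len(titles) == 2 else "",
        "rating": find_rating.findall(item_html)[0],
        "votes": int(find_judge.findall(item_html)[0]),
    }


result = parse_item(SAMPLE_ITEM)
print(result)
```

Because `(.*)` is greedy and `.` does not cross line breaks without `re.S`, `find_title` relies on each `<span>` sitting on its own line. If a page puts several of them on one line, a lazy `(.*?)` is the safer choice.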

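# Scrape the pages
def getDate(baseurl):
    datalist = []
    x = 1  # running number used to name the downloaded covers
    # Assumption: covers are saved to a folder named "covers"; change it as needed
    os.makedirs("covers", exist_ok=True)
    # Call the page-fetching function 10 times (25 movies per page)
    for i in range(0, 10):
        url = baseurl + str(i * 25)
        html = askURL(url)  # page source returned by askURL
        # Parse the entries one by one
        soup = BeautifulSoup(html, "html.parser")
        for item in soup.find_all('div', class_="item"):
            data = []  # all fields of one movie
            item = str(item)  # convert the tag to a string for the regexes
            # Link to the movie detail page
            link = re.findall(findLink, item)[0]
            # Append the value to the row
            data.append(link)
            imgSrc = re.findall(findImgSrc, item)[0]
            data.append(imgSrc)
            titles = re.findall(findTitle, item)
            if len(titles) == 2:
                ctitle = titles[0]
                data.append(ctitle)  # Chinese title
                otitle = titles[1].replace("/", "")
                data.append(otitle)  # foreign title
            else:
                data.append(titles[0])
                data.append(' ')  # leave the foreign title blank if there is none
            rating = re.findall(findRating, item)[0]
            data.append(rating)
            judgeNum = re.findall(findJudge, item)[0]
            data.append(judgeNum)
            inq = re.findall(findInq, item)
            if len(inq) != 0:
                inq = inq[0].replace("。", "")
                data.append(inq)
            else:
                data.append(' ')
            bd = re.findall(findBd, item)[0]
            # Assumption: the rest of the loop strips <br/> tags from bd, stores
            # the row, and saves the cover as covers/<x>.jpg
            bd = re.sub(r'<br(\s+)?/>(\s+)?', " ", bd)
            data.append(bd.strip())
            datalist.append(data)
            urllib.request.urlretrieve(imgSrc, os.path.join("covers", "%d.jpg" % x))
            x += 1
    return datalist

Wait for the downloads to finish; the cover images can then be viewed in the local folder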

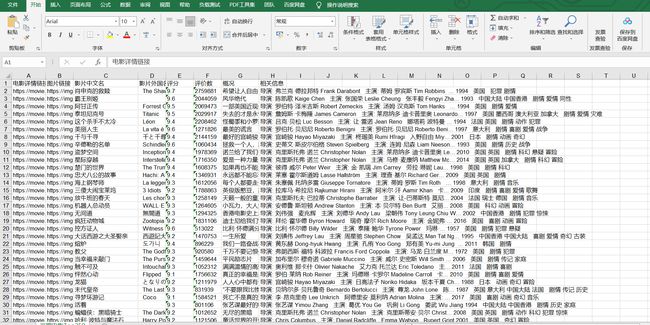
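The download step described above can also be tried in isolation. This is a minimal sketch rather than the original author's code: `download_cover`, the `covers` folder, and the numbered file names are assumptions made for illustration. It relies only on `urllib.request.urlretrieve` from the standard library.

```python
import os
import urllib.request


def download_cover(img_url, folder, index):
    """Save the image at img_url as <folder>/<index>.jpg and return the path."""
    os.makedirs(folder, exist_ok=True)  # create the target folder if needed
    path = os.path.join(folder, "%d.jpg" % index)
    urllib.request.urlretrieve(img_url, path)  # fetch the URL and write it to path
    return path


# Hypothetical usage inside the crawl loop, where imgSrc and x come from the crawler:
# download_cover(imgSrc, "covers", x)
```

Note that `urlretrieve` sends urllib's default User-Agent rather than the `head` dictionary defined earlier. If the image host rejects such requests, one alternative is to open a `urllib.request.Request(img_url, headers=head)` and write the response bytes to the file yourself.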

2. Scrape web page data and export it to Excel

Fetch the content of a given URL

# Fetch the content of a single URL
def askURL(url):
    request = urllib.request.Request(url, headers=head)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")
    except urllib.error.URLError as e:
        if hasattr(e, "code"):
            print(e.code)  # HTTP status code
        if hasattr(e, "reason"):
            print(e.reason)  # reason for the failure
    return html

Save the data to Excel

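# Save the data
def saveData(datalist, savepath):
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)  # create the workbook
    sheet = book.add_sheet("豆瓣电影Top250", cell_overwrite_ok=True)  # create the worksheet
    # Column headers: detail link, image link, Chinese title, foreign title,
    # rating, number of ratings, summary, related information
    col = ('电影详情链接', "图片链接", "影片中文名", "影片外国名", "评分", "评价数", "概况", "相关信息")
    try:
        for i in range(0, 8):
            sheet.write(0, i, col[i])  # write the header row
        # Iterate over the rows actually scraped, so fewer than 250 entries
        # no longer raises an IndexError before the file is saved
        for i, data in enumerate(datalist):
            print("Row %d" % (i + 1))
            for j in range(0, 8):
                sheet.write(i + 1, j, data[j])
        book.save(savepath)
    except Exception as e:
        print("Failed to save the data:", e)

Full source code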

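import collections  # word-frequency counting
import os
import re  # regular expressions
import urllib.error  # errors raised while fetching a URL
import urllib.request  # open a given URL and fetch the page data

import jieba  # Chinese word segmentation
import matplotlib.pyplot as plt  # plotting
import numpy as np  # numerical data processing
import pandas as pd
import wordcloud  # word-cloud rendering
import xlwt  # writing Excel files
from PIL import Image  # image processing
from bs4 import BeautifulSoup  # HTML parsing and data extraction
from pyecharts.charts import Bar  # bar charts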

def main():
    baseurl = "https://movie.douban.com/top250?start="
    # Fetch and parse the pages
    datalist = getDate(baseurl)
    savepath = ".\\豆瓣电影Top250.xls"
    # Save the data
    saveData(datalist, savepath)

head = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/85.0.4183.121 Safari/537.36"
}

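# Assumption: the HTML tags in these patterns follow the markup of the
# Douban Top 250 list page; adjust them if the page structure differs.

# Link to the movie detail page
findLink = re.compile(r'<a href="(.*?)">')  # create a compiled regex object

# Movie cover image URL
findImgSrc = re.compile(r'<img.*src="(.*?)"', re.S)

# Movie title
findTitle = re.compile(r'<span class="title">(.*)</span>')

# Rating
findRating = re.compile(r'<span class="rating_num" property="v:average">(.*)</span>')

# Number of ratings
findJudge = re.compile(r'<span>(\d*)人评价</span>')

# One-line summary
findInq = re.compile(r'<span class="inq">(.*)</span>')

# Related information about the movie
findBd = re.compile(r'<p class="">(.*?)</p>', re.S)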

# Scrape the pages
def getDate(baseurl):
    datalist = []
    x = 1
    # Call the page-fetching function 10 times (25 movies per page)
    for i in range(0, 10):
        url = baseurl + str(i * 25)
        html = askURL(url)  # page source returned by askURL
        # Parse the entries one by one
        soup = BeautifulSoup(html, "html.parser")
        for item in soup.find_all('div', class_="item"):
            data = []  # all fields of one movie
            item = str(item)  # convert the tag to a string for the regexes
            # Link to the movie detail page
            link = re.findall(findLink, item)[0]
            # Append the value to the row
            data.append(link)
            imgSrc = re.findall(findImgSrc, item)[0]
            data.append(imgSrc)
            titles = re.findall(findTitle, item)
            if len(titles) == 2:
                ctitle = titles[0]
                data.append(ctitle)  # Chinese title
                otitle = titles[1].replace("/", "")
                data.append(otitle)  # foreign title
            else:
                data.append(titles[0])
                data.append(' ')  # leave the foreign title blank if there is none
            rating = re.findall(findRating, item)[0]
            data.append(rating)
            judgeNum = re.findall(findJudge, item)[0]
            data.append(judgeNum)
            inq = re.findall(findInq, item)
            if len(inq) != 0:
                inq = inq[0].replace("。", "")
                data.append(inq)
            else:
                data.append(' ')
            bd = re.findall(findBd, item)[0]
            bd = re.sub('