伯特斯卡斯


Given a question and a passage, the task of Question Answering (QA) focuses on identifying the exact span within the passage that answers the question.


Recently, our team at Fast Forward Labs has been exploring state-of-the-art models for Question Answering, using the rather excellent Hugging Face transformers library. As we applied BERT-based QA models (BERTQA) to datasets outside of Wikipedia (e.g., legal documents), we observed a variety of results. Naturally, one of the things we have been exploring is methods to better understand why the model provides certain responses, especially when it fails. This post focuses on the following questions:


- What are some approaches to explaining a BERT-based model?

- Why are gradients a good approach?

- How can gradient explanations for BERT be implemented in TensorFlow 2.0?

- Some example results and visualizations!

The code used for this post (including the graphs) is available in this Colab notebook. Try it out!


How Do We Build an Explanation Interface for NLP Models like BERT?

From the human-computer interaction perspective, a primary requirement for such an interface is glanceability: the interface should provide an artifact (text, one or more numbers, or a visualization) that gives a complete picture of how each input contributes to the model prediction. There are several possible strategies for this. We can use model-agnostic tools like LIME and SHAP, or explore properties of the model itself, such as self-attention weights or gradients, to explain its behaviour.


Blackbox Model Explanation (LIME, SHAP)

Blackbox methods such as LIME and SHAP are based on input perturbation (i.e., removing words from the input and observing the impact on the model prediction) and have a few limitations. Of relevance here: LIME does not guarantee consistency (LIME's local models may not be faithful to the global model), and SHAP has known computational complexity issues (KernelSHAP explores many combinations of the input in which a feature is present or absent, and computing these combinations can take a while). See this notebook for additional discussion of these methods and their pros and cons.
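To make the perturbation idea concrete, here is a minimal leave-one-out (occlusion) sketch. It is a simplified stand-in, not LIME or SHAP themselves: `toy_score` is a hypothetical "model" that counts keywords, and each word's importance is the drop in score when that word is removed. `num_coalitions` counts the present/absent combinations an exact Shapley computation would have to evaluate, which is the cost KernelSHAP tries to approximate by sampling.

```python
from typing import Callable, List


def leave_one_out(words: List[str], score: Callable[[List[str]], float]) -> List[float]:
    """Importance of word i = score(full input) - score(input with word i removed)."""
    base = score(words)
    return [base - score(words[:i] + words[i + 1:]) for i in range(len(words))]


def num_coalitions(num_features: int) -> int:
    """Exact Shapley values consider every present/absent combination: 2**n subsets."""
    return 2 ** num_features


# Hypothetical toy "model": counts how many keywords appear in the input.
KEYWORDS = {"contract", "terminated"}


def toy_score(words: List[str]) -> float:
    return float(sum(w in KEYWORDS for w in words))


words = ["the", "contract", "was", "terminated"]
print(leave_one_out(words, toy_score))  # [0.0, 1.0, 0.0, 1.0]
print(num_coalitions(len(words)))       # 16
print(num_coalitions(128))              # 2**128, roughly 3.4e38 subsets for a 128-token input
```

Leave-one-out needs only n + 1 model calls, but it ignores interactions between words; exhaustive Shapley values capture them at an exponential cost, which is why sampling approximations such as KernelSHAP exist.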


We’ll skip this approach.


Attention-Based Explanation

Given that BERT is an attention-based model, it is tempting to use attention weights to explain its behaviour. After all, attention weights reflect which inputs are important to some output task [5]. This line of thought is not unreasonable, as attention weights have been useful in helping us understand and debug sequence-to-sequence (seq2seq) models [5]. However, BERT uses attention mechanisms differently (see this relevant article on self-attention mechanisms). While a traditional seq2seq model typically has a single attention mechanism [5] that reflects which input tokens are attended to, BERT base contains 12 layers with 12 attention heads each, for a total of 144 attention mechanisms!


Furthermore, given that BERT layers are interconnected, attention is not over words but over hidden embeddings, which can themselves be mixed representations of multiple embeddings. Recent research shows that individual attention heads focus on different patterns (e.g., heads that attend to the direct objects of verbs, determiners of nouns, objects of prepositions, and coreferent mentions [1]). These different attention patterns are combined in opaque ways to give BERT its complex language modeling capabilities. This immediately raises the challenge of deciding which mechanism (or combination of mechanisms) to use to explain the model. For additional details on visual patterns within BERT attention heads, see this excellent post by Jesse Vig.
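The point that deeper layers attend over mixtures rather than words can be seen with a toy single-head self-attention in NumPy. This is a simplified sketch under stated assumptions: random weights instead of BERT's trained parameters, and no multi-head split, residual connections, or layer normalization (all of which BERT has). After the first layer, every position's vector is a softmax-weighted blend of all input positions, so the second layer's attention weights are distributions over those blends, not over the original tokens.

```python
import numpy as np


def self_attention(x: np.ndarray, wq: np.ndarray, wk: np.ndarray, wv: np.ndarray):
    """Single-head scaled dot-product self-attention; returns (outputs, weights)."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # each row is a distribution
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, dim = 5, 8
x = rng.normal(size=(seq_len, dim))                 # stand-in token embeddings
layer1 = [rng.normal(size=(dim, dim)) for _ in range(3)]
layer2 = [rng.normal(size=(dim, dim)) for _ in range(3)]

h1, w1 = self_attention(x, *layer1)   # w1: weights over the input tokens
h2, w2 = self_attention(h1, *layer2)  # w2: weights over h1, whose rows blend all tokens

print(w1.shape, w2.shape)             # (5, 5) (5, 5)
print(np.allclose(w1.sum(axis=-1), 1))  # True
print(12 * 12)                        # BERT base: 12 layers x 12 heads = 144
```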


Related research has also found that attention weights may be misleading as explanations in general [2] and that attention weights are not directly interpretable [3]. This is not to say attention weights are useless for debugging models; far from it. They are valuable for scientific probing exercises [1] that help us understand model behaviour, but perhaps not as a tool for end-user interpretability.


We will also skip the attention based explanation approach.


Gradient-Based Explanation

It turns out that we can leverage the gradients of a trained deep neural network to efficiently infer the relationship between its inputs and output. This works because the gradient quantifies how much a small change in each input dimension would change the prediction within a small neighborhood around the input. Although this approach is simple, existing research suggests that simple gradient explanations are stable and faithful to the model/data-generating process [4] compared with more sophisticated methods (e.g., GradCam and Integrated Gradients). Let's explore this approach!
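As a small illustration of why gradients act as local sensitivities, the sketch below uses a hypothetical two-input function with a hand-derived gradient and checks it against central finite differences. Each gradient component approximates how much the output moves when only that input is nudged slightly; taking magnitudes and dividing by the largest one gives the same kind of normalized score that the BERT code later in this post computes per token.

```python
from typing import Callable, List


def f(x: List[float]) -> float:
    """Hypothetical toy model: output depends strongly on x[0], weakly on x[1]."""
    return 3.0 * x[0] ** 2 + 0.1 * x[1]


def grad_f(x: List[float]) -> List[float]:
    """Analytic gradient of f: [6 * x0, 0.1]."""
    return [6.0 * x[0], 0.1]


def finite_difference(fn: Callable[[List[float]], float], x: List[float],
                      eps: float = 1e-6) -> List[float]:
    """Central-difference estimate of d fn / d x_i for each input dimension."""
    grads = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grads.append((fn(up) - fn(down)) / (2 * eps))
    return grads


x = [1.0, 2.0]
print(grad_f(x))                # [6.0, 0.1]
print(finite_difference(f, x))  # approximately [6.0, 0.1]

# Saliency-style explanation: gradient magnitudes normalized by the largest one.
g = grad_f(x)
saliency = [abs(v) / max(abs(u) for u in g) for v in g]
print(saliency)                 # x[0] gets score 1.0, x[1] a much smaller score
```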


Gradients in TF 2.0 via GradientTape

Luckily, this process is fairly straightforward in TensorFlow 2.0 (Keras API) using GradientTape. GradientTape records operations on a set of variables so that we can perform automatic differentiation on them. To explain the model's output on a given input, we can (i) instantiate a GradientTape and watch our input variable, (ii) compute a forward pass through the model, (iii) get the gradients of the output of interest (e.g., a specific class logit) with respect to the watched input, and (iv) use the normalized gradients as explanations. The code snippet below shows how this can be done, where model is a Hugging Face BERT model and tokenizer is a Hugging Face tokenizer.


def get_gradient(question, context, model, tokenizer):

"""Return gradient of input (question) wrt to model output span prediction

Args:

question (str): text of input question

context (str): text of question context/passage

model (QA model): Hugging Face BERT model for QA transformers.modeling_tf_distilbert.TFDistilBertForQuestionAnswering, transformers.modeling_tf_bert.TFBertForQuestionAnswering

tokenizer (tokenizer): transformers.tokenization_bert.BertTokenizerFast

Returns:

(tuple): (gradients, token_words, token_types, answer_text)

"""

embedding_matrix = model.bert.embeddings.word_embeddings

encoded_tokens = tokenizer.encode_plus(question, context, add_special_tokens=True, return_tensors="tf")

token_ids = list(encoded_tokens["input_ids"].numpy()[0])

vocab_size = embedding_matrix.get_shape()[0]

# convert token ids to one hot. We can't differentiate wrt to int token ids hence the need for one hot representation

token_ids_tensor = tf.constant([token_ids], dtype='int32')

token_ids_tensor_one_hot = tf.one_hot(token_ids_tensor, vocab_size)

with tf.GradientTape(watch_accessed_variables=False) as tape:

# (i) watch input variable

tape.watch(token_ids_tensor_one_hot)

# multiply input model embedding matrix; allows us do backprop wrt one hot input

inputs_embeds = tf.matmul(token_ids_tensor_one_hot,embedding_matrix)

# (ii) get prediction

start_scores,end_scores = model({"inputs_embeds": inputs_embeds, "token_type_ids": encoded_tokens["token_type_ids"], "attention_mask": encoded_tokens["attention_mask"] })

answer_start, answer_end = get_best_start_end_position(start_scores, end_scores)

start_output_mask = get_correct_span_mask(answer_start, len(token_ids))

end_output_mask = get_correct_span_mask(answer_end, len(token_ids))

# zero out all predictions outside of the correct span positions; we want to get gradients wrt to just these positions

predict_correct_start_token = tf.reduce_sum(start_scores * start_output_mask)

predict_correct_end_token = tf.reduce_sum(end_scores * end_output_mask)

# (iii) get gradient of input with respect to both start and end output

gradient_non_normalized = tf.norm(

tape.gradient([predict_correct_start_token, predict_correct_end_token], token_ids_tensor_one_hot),axis=2)

# (iv) normalize gradient scores and return them as "explanations"

gradient_tensor = (

gradient_non_normalized /

tf.reduce_max(gradient_non_normalized)

)

gradients = gradient_tensor[0].numpy().tolist()

token_words = tokenizer.convert_ids_to_tokens(token_ids)

token_types = list(encoded_tokens["token_type_ids"].numpy()[0])

answer_text = tokenizer.convert_tokens_to_string(token_ids[answer_start:answer_end])

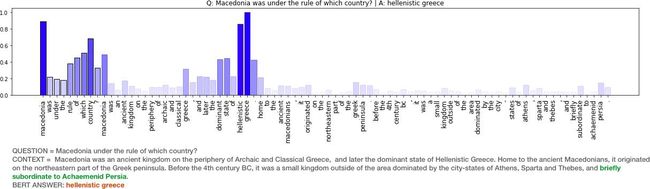

return gradients, token_words, token_types,answer_textFull sample code can be found in this Colab notebook. Visuals below show results from explaining 8 random question + context snippets.


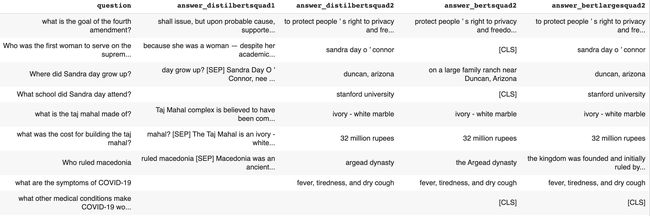

- DistilBERT SQuAD1 (261MB): returns 5/8 answers, 2 correct.
- DistilBERT SQuAD2 (265MB): returns 7/8 answers, 7 correct.
- BERT base (433MB): returns 5/8 answers, 5 correct.
- BERT large (1.34GB): returns 7/8 answers, 7 correct.
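The get_gradient function above calls two helpers, get_best_start_end_position and get_correct_span_mask, that are defined in the notebook but not shown in this post. If you want to run the function on its own, here is a minimal hypothetical sketch of both in NumPy. The names match the calls in get_gradient, but the bodies are assumptions, not the notebook's code: the span picker maximizes start + end logits subject to start <= end and a hypothetical `max_answer_len` limit, and the mask is a float32 one-hot row that can be multiplied with the model's (1, seq_len) logits.

```python
import numpy as np


def get_best_start_end_position(start_scores, end_scores, max_answer_len=30):
    """Hypothetical helper: return (start, end) maximizing start + end logits.

    Only spans with start <= end and length <= max_answer_len are considered.
    Accepts arrays of shape (1, seq_len) or (seq_len,), including TF eager tensors.
    """
    start = np.asarray(start_scores, dtype=np.float64).reshape(-1)
    end = np.asarray(end_scores, dtype=np.float64).reshape(-1)
    pair_scores = start[:, None] + end[None, :]      # pair_scores[i, j] = start[i] + end[j]
    i, j = np.indices(pair_scores.shape)
    valid = (j >= i) & (j - i < max_answer_len)      # end after start, bounded length
    pair_scores = np.where(valid, pair_scores, -np.inf)
    best_start, best_end = np.unravel_index(np.argmax(pair_scores), pair_scores.shape)
    return int(best_start), int(best_end)


def get_correct_span_mask(position, seq_len):
    """Hypothetical helper: float32 one-hot mask of shape (1, seq_len) at `position`."""
    mask = np.zeros((1, seq_len), dtype=np.float32)
    mask[0, position] = 1.0
    return mask


start_logits = np.array([[0.1, 2.0, 0.3, -1.0, 0.5]])
end_logits = np.array([[0.0, -0.5, 0.2, 3.0, 1.0]])
print(get_best_start_end_position(start_logits, end_logits))  # (1, 3)
print(get_correct_span_mask(3, 5))                            # [[0. 0. 0. 1. 0.]]
```

Scoring start/end pairs jointly (rather than taking two independent argmaxes) avoids returning an end position that comes before the start position.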


Explanations such as the gradient method above, together with the model outputs, provide a few insights into BERT-based QA models.


- We see that in cases where BERT does not have an answer (e.g., it outputs only the CLS token), it generally does not have high normalized gradient scores for most of the input tokens. Perhaps explanation scores can be combined with model confidence scores (start/end span softmax) to build a more complete metric of confidence in the span prediction.

- There are some cases where the model appears to be responsive to the right tokens but still fails to return an answer. A larger model (e.g., BERT large) helps in some cases (see the answer screenshot above): BERT base correctly finds answers for 5/8 questions, while BERT large finds answers for 7/8 questions. There is a cost, though: the BERT base model is ~540MB versus ~1.34GB for BERT large, which also takes almost 3x the run time.
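The first idea above could be prototyped along these lines. This is a hypothetical sketch, not a method from the post: span confidence is taken as P(start) * P(end) under independent softmaxes over the start and end logits, and `combined_confidence` is an assumed combination rule (a geometric mean with the average of the max-normalized per-token gradient scores returned by get_gradient), chosen only because low gradient scores were observed when the model had no answer.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def span_confidence(start_logits: Sequence[float], end_logits: Sequence[float],
                    start: int, end: int) -> float:
    """Model confidence for a span: P(start) * P(end) under independent softmaxes."""
    return softmax(start_logits)[start] * softmax(end_logits)[end]


def combined_confidence(span_conf: float, gradients: Sequence[float]) -> float:
    """Hypothetical rule: geometric mean of span confidence and mean gradient score.

    `gradients` are max-normalized per-token scores in [0, 1], as returned by get_gradient.
    """
    mean_grad = sum(gradients) / len(gradients)
    return math.sqrt(span_conf * mean_grad)


# Toy numbers for illustration only (not results from the post).
conf = span_confidence([0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0], start=1, end=2)
print(conf)                                             # 0.0625 (uniform: 0.25 * 0.25)
print(combined_confidence(conf, [1.0, 0.2, 0.1, 0.1]))  # mean gradient score of about 0.35
```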

On the randomly selected question/context pairs above, the smaller, faster DistilBERT (SQuAD2) surprisingly performs better than BERT base and on par with BERT large. The results also illustrate why we should not be using QA models trained on SQuAD1 (hint: the answer spans they provide are quite poor).


In addition to these insights, explanations also enable sensemaking of model results by end users. In this case, sensemaking from the Human Computer Interaction perspective is focused on interface affordances that help the user build intuition on how, why and when these models work.


Conclusions: What's Next?

We have repurposed bar charts to visualize the impact of input tokens on how a BERTQA model selects answer spans. Perhaps an overlaid text approach (similar to textualheatmaps by Andreas Madsen) would be better. I am working on a user interface that ties this together and will explore it in a future post. There are also a few other gradient-based methods that could be used to produce explanations (e.g., Integrated Gradients, GradCam, and SmoothGrad; see [4] for a complete list). It may be interesting to compare the explanations from each method.
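For anyone who wants to try one of those alternatives, here is a minimal sketch of Integrated Gradients on a hypothetical toy function with a hand-written gradient, assuming an all-zeros baseline. For BERT, one common approach is to interpolate the input embeddings between a baseline and the real input and get each gradient with GradientTape, as in get_gradient. Attributions are (x - baseline) times the average gradient along the straight path from baseline to x, approximated here with a midpoint Riemann sum; unlike plain gradients, they should sum to approximately f(x) - f(baseline).

```python
from typing import Callable, List


def toy_model(x: List[float]) -> float:
    """Hypothetical toy model with an interaction term between the two inputs."""
    return x[0] ** 2 + 2.0 * x[0] * x[1] + 0.5 * x[1]


def toy_model_grad(x: List[float]) -> List[float]:
    """Analytic gradient of toy_model."""
    return [2.0 * x[0] + 2.0 * x[1], 2.0 * x[0] + 0.5]


def integrated_gradients(grad_fn: Callable[[List[float]], List[float]],
                         x: List[float], baseline: List[float],
                         steps: int = 50) -> List[float]:
    """Midpoint Riemann approximation of Integrated Gradients along baseline -> x."""
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(grad_fn(point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


x, baseline = [1.0, 2.0], [0.0, 0.0]
attributions = integrated_gradients(toy_model_grad, x, baseline)
print(attributions)                                            # approximately [3.0, 3.0]
print(sum(attributions), toy_model(x) - toy_model(baseline))   # both approximately 6.0
```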


[1] Clark, Kevin, et al. “What does BERT look at? An analysis of BERT’s attention.” arXiv preprint arXiv:1906.04341 (2019).

[2] Jain, Sarthak, and Byron C. Wallace. “Attention is not explanation.” arXiv preprint arXiv:1902.10186 (2019).

[3] Brunner, Gino, et al. “On identifiability in transformers.” International Conference on Learning Representations (2019).

[4] Adebayo, Julius, et al. “Sanity checks for saliency maps.” Advances in Neural Information Processing Systems (2018).

[5] Bahdanau, Dzmitry, Kyunghyun Cho, and Yoshua Bengio. “Neural machine translation by jointly learning to align and translate.” arXiv preprint arXiv:1409.0473 (2014).


Source: https://towardsdatascience.com/explainbertqa-687abe3b2fcc
