One-Stop Setup of Kubernetes and Kubeflow on Ubuntu

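Preface

Server OS: Ubuntu 16.04

Server IP address: 192.168.51.6

Kubernetes version: 1.16.3

Docker version: 18.09.8

Kubeflow version: 1.0.2

Note: The Kubernetes cluster can be single-node or multi-node. The master node and the worker nodes are deployed slightly differently, as detailed below. This guide aims to help you deploy quickly and avoid common pitfalls.

I. Requirements

Hardware requirements are not covered in detail here; the more capable the machine, the better.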

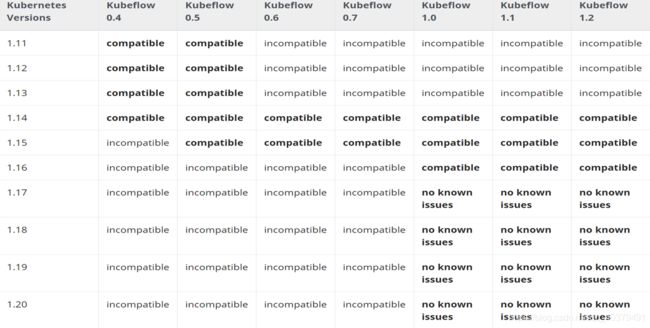

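Older Kubernetes versions may be incompatible with the latest Kubeflow release. The table shown here summarizes compatibility between Kubeflow and Kubernetes versions; you can also check it at: https://www.kubeflow.org/docs/started/k8s/overview/#minimum-system-requirements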

II. Installing Kubernetes

1. Disable the firewall, SELinux, and swap (run on both master and worker nodes)

a. Disable the firewall

Command (example):

sudo ufw disable

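b. Disable SELinux (optional; a stock Ubuntu install uses AppArmor rather than SELinux, so skip this step if setenforce is not installed)

Temporarily:

sudo setenforce 0 # temporary, until reboot

Permanently:

sudo sed -i 's/enforcing/disabled/' /etc/selinux/config # permanent

c. Disable swap

sudo sed -i 's/^.*swap/#&/g' /etc/fstab # permanent: comment out the swap entries

sudo swapoff -a # temporary, until reboot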
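
To confirm that swap is really off before continuing, a small check like the following may help. It is a minimal sketch: the function name swap_is_active is made up for this example, and it assumes the kernel exposes the usual /proc/swaps table, whose first line is a header.

```shell
#!/bin/bash
# Return 0 (true) if the swaps table lists at least one active swap device.
# The table defaults to /proc/swaps; its first line is a header and is skipped.
swap_is_active() {
  local table="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$table" 2>/dev/null | grep -c .)" -gt 0 ]
}

if swap_is_active; then
  echo "swap is still enabled; run: sudo swapoff -a"
else
  echo "swap is off"
fi
```

The kubelet refuses to start by default while swap is enabled, so it is worth running this check again after a reboot.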

2. Pass bridged IPv4 traffic to iptables (run on both master and worker nodes)

Commands:

sudo tee /etc/sysctl.d/k8s.conf <<-'EOF'

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

Apply the settings:

sudo sysctl --system
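
The two net.bridge keys only exist while the br_netfilter kernel module is loaded, so sysctl may report them as unknown on a fresh machine. The sketch below shows how the module could be loaded (in comments, since it needs root) and how the file written above could be checked. The helper name missing_bridge_keys is hypothetical.

```shell
#!/bin/bash
# Load the module now and on every boot (run manually, requires root):
#   sudo modprobe br_netfilter
#   echo br_netfilter | sudo tee /etc/modules-load.d/k8s.conf

# Print each required key that is NOT set to 1 in the given sysctl file.
missing_bridge_keys() {
  local conf="$1" key
  for key in net.bridge.bridge-nf-call-ip6tables net.bridge.bridge-nf-call-iptables; do
    grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$conf" || echo "$key"
  done
}

conf=/etc/sysctl.d/k8s.conf
if [ -f "$conf" ]; then
  missing_bridge_keys "$conf"
fi
```

No output means both keys are present in the file.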

3. Synchronize the clock (run on both master and worker nodes)

sudo apt install ntpdate -y

sudo ntpdate time.windows.com

4. Install Docker (run on both master and worker nodes)

Remove old versions:

sudo apt-get remove docker docker-engine docker-ce docker.io # remove old versions

Update sources.list (note: back up your existing sources.list before overwriting it):

sudo tee /etc/apt/sources.list <<-'EOF'

deb http://mirrors.aliyun.com/ubuntu/ xenial main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial main

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates main

deb http://mirrors.aliyun.com/ubuntu/ xenial universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-security main

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security main

deb http://mirrors.aliyun.com/ubuntu/ xenial-security universe

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security universe

EOF

Install Docker (run the commands in order):

sudo apt-get update

sudo apt-get install -y apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

apt-cache madison docker-ce # list the available Docker versions and pick the one you need (18.09.8 here)

sudo apt install docker-ce=5:18.09.8~3-0~ubuntu-xenial

Add your user to the docker group:

sudo groupadd docker

sudo gpasswd -a $USER docker

newgrp docker

Create /etc/docker/daemon.json with the following content:

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://docker.mirrors.ustc.edu.cn/"]

}

EOF
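
Docker will not start if daemon.json is malformed, so it can be worth validating the file before restarting the service. This is a minimal sketch that assumes python3 is installed (it relies on python3's json.tool module); the function name json_is_valid is made up for this example.

```shell
#!/bin/bash
# Return 0 if the file parses as JSON, non-zero otherwise.
# Assumption: python3 is available on the machine.
json_is_valid() {
  python3 -m json.tool "$1" >/dev/null 2>&1
}

f=/etc/docker/daemon.json
if [ -f "$f" ]; then
  if json_is_valid "$f"; then
    echo "$f: valid JSON"
  else
    echo "$f: invalid JSON"
  fi
fi
```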

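Restart Docker and enable it at boot:

sudo systemctl daemon-reload

sudo systemctl restart docker

sudo systemctl enable docker

Disable NetworkManager's dnsmasq by commenting out the dns line:

sudo gedit /etc/NetworkManager/NetworkManager.conf # open the config file

[main]

plugins=ifupdown,keyfile,ofono

#dns=dnsmasq # comment out this line

Save and close the file, then restart the service: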

sudo systemctl restart network-manager

5. Install kubeadm, kubelet, and kubectl (run on both master and worker nodes)

sudo apt-get update && sudo apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

Create /etc/apt/sources.list.d/kubernetes.list with the following content:

sudo tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

sudo apt-get update

sudo apt-get install -y kubelet=1.16.3-00 kubeadm=1.16.3-00 kubectl=1.16.3-00

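Note: all steps up to this point must be run on both the master node and the worker nodes. The steps that follow differ slightly between the two.

Create and initialize the cluster (master node only):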

sudo kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.16.3 --apiserver-advertise-address=192.168.51.6 --pod-network-cidr=10.244.0.0/16

When the installation finishes, set up kubectl as instructed in the output, for example:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

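The following command, printed when the master node is initialized, is used to join worker nodes to the cluster. Save the one generated on your own machine, for example: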

kubeadm join 192.168.8.42:6443 --token ppch60.xst0udineu69v0ht \

--discovery-token-ca-cert-hash sha256:aa956312810107c67a72d350961f200a41f08ff3aeda4f1e41daaddbccd1ed62
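
If the join command was not saved, or the token has expired (kubeadm tokens are valid for 24 hours by default), it can be recreated on the master node. The sketch below recomputes the value for --discovery-token-ca-cert-hash with openssl; the function name ca_cert_hash is made up for this example, and it assumes the default kubeadm CA path and an RSA CA key.

```shell
#!/bin/bash
# Print the value expected by --discovery-token-ca-cert-hash for a CA certificate.
# Assumption: the CA uses an RSA key, as kubeadm generates by default.
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  printf 'sha256:%s\n' "$(openssl x509 -pubkey -noout -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
}

# On the master node (needs read access to the CA certificate):
#   ca_cert_hash
# To print a complete, fresh join command instead:
#   sudo kubeadm token create --print-join-command
```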

If initialization fails, reset and run it again until it succeeds:

sudo kubeadm reset

sudo kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.16.3 --apiserver-advertise-address=192.168.51.6 --pod-network-cidr=10.244.0.0/16

Enable kubelet at boot:

sudo systemctl enable kubelet

Start the kubelet service:

sudo systemctl start kubelet

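Install the flannel network plugin:

kubectl apply -f kube-flannel.yml

kube-flannel.yml can be downloaded from:

Link: https://pan.baidu.com/s/1WOaNkB-FG08LYvbuoTWRmA

Extraction code: jbe4

If the download does not work, the content of kube-flannel.yml is reproduced below: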

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
    - min: 0
      max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
  - kind: ServiceAccount
    name: flannel
    namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.12.0-amd64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.12.0-amd64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.12.0-arm64
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.12.0-arm64
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.12.0-arm
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.12.0-arm
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.12.0-ppc64le
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.12.0-ppc64le
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - s390x
      hostNetwork: true
      tolerations:
        - operator: Exists
          effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
        - name: install-cni
          image: quay.io/coreos/flannel:v0.12.0-s390x
          command:
            - cp
          args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
          volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      containers:
        - name: kube-flannel
          image: quay.io/coreos/flannel:v0.12.0-s390x
          command:
            - /opt/bin/flanneld
          args:
            - --ip-masq
            - --kube-subnet-mgr
          resources:
            requests:
              cpu: "100m"
              memory: "50Mi"
            limits:
              cpu: "100m"
              memory: "50Mi"
          securityContext:
            privileged: false
            capabilities:
              add: ["NET_ADMIN"]
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

Check the status:

kubectl get pod -n kube-system

Sometimes the two CoreDNS pods fail to start. In that case, run sudo gedit /run/flannel/subnet.env and add the following content:

FLANNEL_NETWORK=10.244.0.0/16

FLANNEL_SUBNET=10.244.0.1/24

FLANNEL_MTU=1450

FLANNEL_IPMASQ=true
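
On a server without a desktop, gedit is not available, so the same file can be written from the shell. This is a minimal sketch with a made-up helper name (subnet_env); it only prints the four lines shown above, and the commented commands show one way to write them as root.

```shell
#!/bin/bash
# Print the flannel subnet settings used in this guide.
subnet_env() {
  cat <<'EOF'
FLANNEL_NETWORK=10.244.0.0/16
FLANNEL_SUBNET=10.244.0.1/24
FLANNEL_MTU=1450
FLANNEL_IPMASQ=true
EOF
}

# Write the file as root (run manually):
#   sudo mkdir -p /run/flannel
#   subnet_env | sudo tee /run/flannel/subnet.env
subnet_env
```

Note that /run is a temporary filesystem on Ubuntu, so a file created by hand there does not survive a reboot.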

Allow the master node to schedule workloads as well:

kubectl taint nodes --all node-role.kubernetes.io/master-

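flannel is used here because, in earlier tests with the Calico network plugin, several pods never started after Kubeflow was installed, possibly because of networking problems. If you still need Calico, it can be installed as follows.

Install the Calico network plugin: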

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Check the status:

kubectl get pod -n kube-system

If the plugin pods do not start, reboot the machine and then run the following commands in order:

sudo ufw disable

setenforce 0

sudo swapoff -a

sudo systemctl restart docker

sudo systemctl restart kubelet.service

Adding worker nodes

Configure each worker node as follows.

Copy /etc/kubernetes/admin.conf from the master node to the same directory on the worker node with a remote copy:

sudo scp /etc/kubernetes/admin.conf wk@192.168.51.7:/etc/kubernetes

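Note: run this command on the master node. wk@192.168.51.7 is the user and IP address of the worker node; replace it with your own.

Then run the following on the worker node, in order:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config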

kubeadm join 192.168.8.42:6443 --token ppch60.xst0udineu69v0ht \

--discovery-token-ca-cert-hash sha256:aa956312810107c67a72d350961f200a41f08ff3aeda4f1e41daaddbccd1ed62

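Note: remember to disable swap.

Install the network plugin. On worker nodes it is installed the same way as on the master node.

The Kubernetes cluster deployment is now complete.

Installing Kubeflow

This part of the tutorial aims to help you deploy the environment quickly and avoid pitfalls.

Installation steps

Download the kfctl binary for v1.0.2 from https://github.com/kubeflow/kfctl/releases/, extract the archive, and copy kfctl to a directory on your PATH: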

tar -xvf kfctl_v1.0.2-0-ga476281_linux.tar.gz

sudo cp kfctl /usr/bin

Switch to the root user and run the following in /root:

wget https://github.com/kubeflow/manifests/archive/v1.0.2.tar.gz

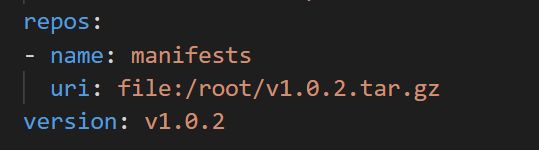

Copy the YAML file from https://github.com/kubeflow/manifests/blob/v1.0-branch/kfdef/kfctl_k8s_istio.v1.0.2.yaml into /root and edit the end of the file as follows:

Build the configuration files in /root:

kfctl build -V -f "/root/kfctl_k8s_istio.v1.0.2.yaml"

Note: to use kubectl as root, switch to the root user and run the following in order:

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

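The build generates a kustomize folder. Copy this folder to a local directory and edit the configuration files in VS Code: search for the files that contain 'imagepull' and change every image pull policy to "IfNotPresent".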

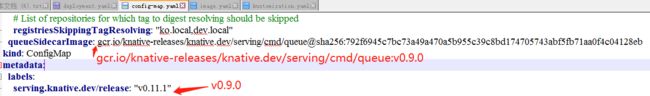
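
The same change can also be made from the shell instead of an editor. The following is a minimal sketch, assuming GNU grep and sed (as shipped with Ubuntu) and a made-up function name, set_pull_policy_if_not_present. It rewrites every imagePullPolicy value under a directory and prints the files it touched.

```shell
#!/bin/bash
# Rewrite every "imagePullPolicy: <value>" under a directory to IfNotPresent.
# Prints the path of each file that contained the field.
set_pull_policy_if_not_present() {
  local dir="$1" f
  grep -rl 'imagePullPolicy:' "$dir" | while IFS= read -r f; do
    sed -i -E 's/(imagePullPolicy:[[:space:]]*).*/\1IfNotPresent/' "$f"
    echo "$f"
  done
}

# Example, run from the directory that contains the generated folder:
#   set_pull_policy_if_not_present kustomize
```

Running `grep -rn 'imagePullPolicy:' kustomize` afterwards is a quick way to review the result.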

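Search for the files that contain 'gcr.io/knative-releases', open each one, change every occurrence of v0.11.0 to v0.9.0, and change the image addresses to the form shown in the following figure:

The changes to kustomization.yaml are as follows; apply the same change to every entry:

Edit kustomize/bootstrap/base/stateful-set.yaml and add the image pull policy: imagePullPolicy: IfNotPresent

Create the PV resources: create four PV files in /root with the following contents: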

kubeflow-pv1.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kubeflow-pv1
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data1/kubeflow-pv1"

kubeflow-pv2.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kubeflow-pv2
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data1/kubeflow-pv2"

kubeflow-pv3.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kubeflow-pv3
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data1/kubeflow-pv3"

kubeflow-pv4.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: kubeflow-pv4
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data1/kubeflow-pv4"
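
Since the four files differ only in their index, they could also be generated with a short loop. This is a sketch under that assumption; the helper name write_pv is made up, and the demo at the end writes into a temporary directory rather than /root so it is safe to try.

```shell
#!/bin/bash
# Write kubeflow-pv<N>.yaml into the target directory (default: current directory).
write_pv() {
  local n="$1" dir="${2:-.}"
  cat > "${dir}/kubeflow-pv${n}.yaml" <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: kubeflow-pv${n}
spec:
  capacity:
    storage: 20Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/data1/kubeflow-pv${n}"
EOF
}

# Demo: generate all four files in a scratch directory and list them.
out=$(mktemp -d)
for n in 1 2 3 4; do
  write_pv "$n" "$out"
done
ls "$out"
```

To use it for real, pass /root as the second argument and then apply the generated files with kubectl.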

In /root, run the following commands in order:

kubectl apply -f kubeflow-pv1.yaml

kubectl apply -f kubeflow-pv2.yaml

kubectl apply -f kubeflow-pv3.yaml

kubectl apply -f kubeflow-pv4.yaml

List the created PVs:

kubectl get pv

Pull the images by running the image-pull.sh script, whose content is:

#!/bin/bash

# pull istio images

docker pull istio/sidecar_injector:1.1.6

docker pull istio/proxyv2:1.1.6

docker pull istio/proxy_init:1.1.6

docker pull istio/pilot:1.1.6

docker pull istio/mixer:1.1.6

docker pull istio/galley:1.1.6

docker pull istio/citadel:1.1.6

# pull ml-pipeline images

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/viewer-crd-controller:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-api-server:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/frontend:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/visualization-server:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/scheduledworkflow:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-persistenceagent:0.2.5

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/envoy:metadata-grpc

# pull kubeflow-images-public images

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/profile-controller-v20190619-v0-219-gbd3daa8c-dirty-1ced0e:v20190619-v0-219-gbd3daa8c-dirty-1ced0e

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/notebook-controller-v20190614-v0-160-g386f2749-e3b0c4:v20190614-v0-160-g386f2749-e3b0c4

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238

docker pull registry.cn-hangzhou.aliyuncs.com/gfyulx/jupyter-web-app:v0.5.0

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/tf_operator:v1.0.0-g92389064

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/tf_operator-kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c:kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/pytorch-operator-v0.6.0-18-g5e36a57:v0.6.0-18-g5e36a57

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-ui:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-controller:v0.8.0

# docker pull registry.cn-hangzhou.aliyuncs.com/yuanminglei/katib-db-manager:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/katib/katib-db-manager:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/kfam:v1.0.0-gf3e09203

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/kfam-v20190612-v0-170-ga06cdb79-dirty-a33ee4:v20190612-v0-170-ga06cdb79-dirty-a33ee4

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3

docker pull registry.cn-hangzhou.aliyuncs.com/open-open/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c

# docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata:v0.1.11

docker pull registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-metadata:v0.1.11

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata-frontend:v0.1.8

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/application:1.0-beta

docker pull registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-ingress-setup:latest

# pull knative-releases images

docker pull jimmysong/knative-serving-cmd-activator:0.9

docker pull jimmysong/knative-serving-cmd-webhook:0.9

docker pull jimmysong/knative-serving-cmd-controller:0.9

docker pull jimmysong/knative-serving-cmd-networking-istio:0.9

docker pull jimmysong/knative-serving-cmd-autoscaler-hpa:0.9

docker pull jimmysong/knative-serving-cmd-autoscaler:0.9

docker pull jimmysong/knative-serving-cmd-queue:0.9

# pull extra images

docker pull registry.cn-hangzhou.aliyuncs.com/wenxinax/kfserving-kfserving-controller:0.2.2

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow_0/tfx-oss-public-ml_metadata_store_server:v0.21.1

docker pull registry.cn-hangzhou.aliyuncs.com/pigeonw/spark-operator:v1beta2-1.0.0-2.4.4

docker pull registry.cn-hangzhou.aliyuncs.com/kubebuilder/kube-rbac-proxy:v0.4.0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/spartakus-amd64:v1.1.0

# pull argoproj images

docker pull argoproj/workflow-controller:v2.3.0

docker pull argoproj/argoui:v2.3.0

docker pull tensorflow/tensorflow:1.8.0

docker pull mysql:5.6

docker pull minio/minio:RELEASE.2018-02-09T22-40-05Z

docker pull mysql:8.0.3

docker pull mysql:8

# new add

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/file-metrics-collector:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/hellobike-public/tfevent-metrics-collector:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/suggestion-hyperopt:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-chocolate:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-skopt:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-nasrl:v0.8.0

docker pull registry.cn-hangzhou.aliyuncs.com/therenoedge/viewer-crd-controller-0.1.31:0.1.31

docker pull metacontroller/metacontroller:v0.3.0
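
With this many images, a single failed pull is easy to miss in the output. As a sketch of one way to surface failures, the hypothetical helper below (pull_images, not part of the original script) reads image names from standard input and reports the ones that could not be pulled. The DOCKER variable is an assumption added so the command can be swapped out.

```shell
#!/bin/bash
# Pull every image named on stdin (one per line; blank lines and # comments are skipped).
# Prints "FAILED: <image>" for each failed pull and returns non-zero if any failed.
# DOCKER may be set to another command name to replace the docker binary.
pull_images() {
  local docker="${DOCKER:-docker}" image failed=0
  while IFS= read -r image; do
    case "$image" in ''|'#'*) continue ;; esac
    if ! "$docker" pull "$image" >/dev/null </dev/null; then
      echo "FAILED: $image"
      failed=1
    fi
  done
  return "$failed"
}

# Example: feed it the image names from the script above.
#   grep '^docker pull' image-pull.sh | awk '{print $3}' | pull_images
```

Any image listed as FAILED can simply be pulled again before moving on to the retagging step.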

Retag the images by running the image-rename.sh script, whose content is:

#!/bin/bash

# retag ml-pipeline images

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/viewer-crd-controller:0.2.5 gcr.io/ml-pipeline/viewer-crd-controller:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/viewer-crd-controller:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-api-server:0.2.5 gcr.io/ml-pipeline/api-server:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-api-server:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/frontend:0.2.5 gcr.io/ml-pipeline/frontend:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/frontend:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/visualization-server:0.2.5 gcr.io/ml-pipeline/visualization-server:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/visualization-server:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/scheduledworkflow:0.2.5 gcr.io/ml-pipeline/scheduledworkflow:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/scheduledworkflow:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-persistenceagent:0.2.5 gcr.io/ml-pipeline/persistenceagent:0.2.5

docker rmi registry.cn-hangzhou.aliyuncs.com/wenxinax/ml-pipeline-persistenceagent:0.2.5

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/envoy:metadata-grpc gcr.io/ml-pipeline/envoy:metadata-grpc

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/envoy:metadata-grpc

# retag kubeflow-images-public images

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531 gcr.io/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/profile-controller:v1.0.0-ge50a8531

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/profile-controller-v20190619-v0-219-gbd3daa8c-dirty-1ced0e:v20190619-v0-219-gbd3daa8c-dirty-1ced0e gcr.io/kubeflow-images-public/profile-controller:v20190619-v0-219-gbd3daa8c-dirty-1ced0e

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/profile-controller-v20190619-v0-219-gbd3daa8c-dirty-1ced0e:v20190619-v0-219-gbd3daa8c-dirty-1ced0e

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25 gcr.io/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/notebook-controller:v1.0.0-gcd65ce25

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/notebook-controller-v20190614-v0-160-g386f2749-e3b0c4:v20190614-v0-160-g386f2749-e3b0c4 gcr.io/kubeflow-images-public/notebook-controller:v20190614-v0-160-g386f2749-e3b0c4

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/notebook-controller-v20190614-v0-160-g386f2749-e3b0c4:v20190614-v0-160-g386f2749-e3b0c4

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-ui:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/katib-ui:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-ui:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-controller:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/katib-controller:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/katib-controller:v0.8.0

# docker tag registry.cn-hangzhou.aliyuncs.com/yuanminglei/katib-db-manager:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/katib-db-manager:v0.8.0

# docker rmi registry.cn-hangzhou.aliyuncs.com/yuanminglei/katib-db-manager:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/katib/katib-db-manager:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/katib-db-manager:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/katib/katib-db-manager:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238 gcr.io/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/jupyter-web-app:v1.0.0-g2bd63238

docker tag registry.cn-hangzhou.aliyuncs.com/gfyulx/jupyter-web-app:v0.5.0 gcr.io/kubeflow-images-public/jupyter-web-app:v0.5.0

docker rmi registry.cn-hangzhou.aliyuncs.com/gfyulx/jupyter-web-app:v0.5.0

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71 gcr.io/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/centraldashboard:v1.0.0-g3ec0de71

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/tf_operator:v1.0.0-g92389064 gcr.io/kubeflow-images-public/tf_operator:v1.0.0-g92389064

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/tf_operator:v1.0.0-g92389064

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/tf_operator-kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c:kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c gcr.io/kubeflow-images-public/tf_operator:kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/tf_operator-kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c:kubeflow-tf-operator-postsubmit-v1-5adee6f-6109-a25c

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f gcr.io/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/pytorch-operator:v1.0.0-g047cf0f

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/pytorch-operator-v0.6.0-18-g5e36a57:v0.6.0-18-g5e36a57 gcr.io/kubeflow-images-public/pytorch-operator:v0.6.0-18-g5e36a57

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/pytorch-operator-v0.6.0-18-g5e36a57:v0.6.0-18-g5e36a57

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/kfam:v1.0.0-gf3e09203 gcr.io/kubeflow-images-public/kfam:v1.0.0-gf3e09203

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/kfam:v1.0.0-gf3e09203

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/kfam-v20190612-v0-170-ga06cdb79-dirty-a33ee4:v20190612-v0-170-ga06cdb79-dirty-a33ee4 gcr.io/kubeflow-images-public/kfam:v20190612-v0-170-ga06cdb79-dirty-a33ee4

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/kfam-v20190612-v0-170-ga06cdb79-dirty-a33ee4:v20190612-v0-170-ga06cdb79-dirty-a33ee4

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3 gcr.io/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/admission-webhook:v1.0.0-gaf96e4e3

docker tag registry.cn-hangzhou.aliyuncs.com/open-open/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c gcr.io/kubeflow-images-public/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c

docker rmi registry.cn-hangzhou.aliyuncs.com/open-open/admission-webhook:v20190520-v0-139-gcee39dbc-dirty-0d8f4c

# docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata:v0.1.11 gcr.io/kubeflow-images-public/metadata:v0.1.11

# docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata:v0.1.11

docker tag registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-metadata:v0.1.11 gcr.io/kubeflow-images-public/metadata:v0.1.11

docker rmi registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-metadata:v0.1.11

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata-frontend:v0.1.8 gcr.io/kubeflow-images-public/metadata-frontend:v0.1.8

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/metadata-frontend:v0.1.8

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/application:1.0-beta gcr.io/kubeflow-images-public/kubernetes-sigs/application:1.0-beta

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-images-public/application:1.0-beta

docker tag registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-ingress-setup:latest gcr.io/kubeflow-images-public/ingress-setup:latest

docker rmi registry.cn-hangzhou.aliyuncs.com/wenxinax/kubeflow-images-public-ingress-setup:latest

# retag knative-releases images

docker tag jimmysong/knative-serving-cmd-activator:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/activator:v0.9.0

docker rmi jimmysong/knative-serving-cmd-activator:0.9

docker tag jimmysong/knative-serving-cmd-webhook:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/webhook:v0.9.0

docker rmi jimmysong/knative-serving-cmd-webhook:0.9

docker tag jimmysong/knative-serving-cmd-controller:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/controller:v0.9.0

docker rmi jimmysong/knative-serving-cmd-controller:0.9

docker tag jimmysong/knative-serving-cmd-networking-istio:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/networking/istio:v0.9.0

docker rmi jimmysong/knative-serving-cmd-networking-istio:0.9

docker tag jimmysong/knative-serving-cmd-autoscaler-hpa:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler-hpa:v0.9.0

docker rmi jimmysong/knative-serving-cmd-autoscaler-hpa:0.9

docker tag jimmysong/knative-serving-cmd-autoscaler:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/autoscaler:v0.9.0

docker rmi jimmysong/knative-serving-cmd-autoscaler:0.9

docker tag jimmysong/knative-serving-cmd-queue:0.9 gcr.io/knative-releases/knative.dev/serving/cmd/queue:v0.9.0

docker rmi jimmysong/knative-serving-cmd-queue:0.9

# retag extra images

docker tag registry.cn-hangzhou.aliyuncs.com/wenxinax/kfserving-kfserving-controller:0.2.2 gcr.io/kfserving/kfserving-controller:0.2.2

docker rmi registry.cn-hangzhou.aliyuncs.com/wenxinax/kfserving-kfserving-controller:0.2.2

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow_0/tfx-oss-public-ml_metadata_store_server:v0.21.1 gcr.io/tfx-oss-public/ml_metadata_store_server:v0.21.1

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow_0/tfx-oss-public-ml_metadata_store_server:v0.21.1

docker tag registry.cn-hangzhou.aliyuncs.com/pigeonw/spark-operator:v1beta2-1.0.0-2.4.4 gcr.io/spark-operator/spark-operator:v1beta2-1.0.0-2.4.4

docker rmi registry.cn-hangzhou.aliyuncs.com/pigeonw/spark-operator:v1beta2-1.0.0-2.4.4

docker tag registry.cn-hangzhou.aliyuncs.com/kubebuilder/kube-rbac-proxy:v0.4.0 gcr.io/kubebuilder/kube-rbac-proxy:v0.4.0

docker rmi registry.cn-hangzhou.aliyuncs.com/kubebuilder/kube-rbac-proxy:v0.4.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/spartakus-amd64:v1.1.0 gcr.io/google_containers/spartakus-amd64:v1.1.0

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/spartakus-amd64:v1.1.0

# newly added images

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/file-metrics-collector:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/file-metrics-collector:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/file-metrics-collector:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/hellobike-public/tfevent-metrics-collector:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/tfevent-metrics-collector:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/hellobike-public/tfevent-metrics-collector:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/suggestion-hyperopt:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/suggestion-hyperopt:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/kubeflow-katib/suggestion-hyperopt:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-chocolate:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/suggestion-chocolate:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-chocolate:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-skopt:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/suggestion-skopt:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-skopt:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-nasrl:v0.8.0 gcr.io/kubeflow-images-public/katib/v1alpha3/suggestion-nasrl:v0.8.0

docker rmi registry.cn-hangzhou.aliyuncs.com/morningsong/suggestion-nasrl:v0.8.0

docker tag registry.cn-hangzhou.aliyuncs.com/therenoedge/viewer-crd-controller-0.1.31:0.1.31 gcr.io/ml-pipeline/viewer-crd-controller:0.1.31

docker rmi registry.cn-hangzhou.aliyuncs.com/therenoedge/viewer-crd-controller-0.1.31:0.1.31
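Every pair of commands above follows the same pattern: tag the mirrored image with its upstream gcr.io name, then remove the mirror tag. A minimal sketch of that pattern as a reusable function follows. The name `retag` and the `DOCKER` override variable are hypothetical and not part of the original script; setting `DOCKER=echo` gives a dry run that only prints the commands.

```shell
# Hypothetical helper (not from the original script): retag a mirrored
# image under its upstream name, then drop the mirror tag.
# Set DOCKER=echo for a dry run that only prints the commands.
DOCKER="${DOCKER:-docker}"

retag() {
    local mirror="$1" upstream="$2"
    # Tag first; remove the mirror tag only if tagging succeeded.
    $DOCKER tag "$mirror" "$upstream" && $DOCKER rmi "$mirror"
}

# Example using one pair from the list above:
# retag registry.cn-hangzhou.aliyuncs.com/kubebuilder/kube-rbac-proxy:v0.4.0 gcr.io/kubebuilder/kube-rbac-proxy:v0.4.0
```

Chaining the two commands with `&&` keeps the mirror copy in place when tagging fails, so an image is never left without any tag.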

Install Kubeflow by running the following from the /root directory:

kfctl apply -V -f "/root/kfctl_k8s_istio.v1.0.2.yaml"

Wait for the installation to finish. If it fails, rerun the command above.

Check the pod status (namespace names are lowercase, so the namespace is kubeflow):

kubectl get pod -n kubeflow
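With dozens of pods in the namespace, it helps to list only the ones that still need attention. The sketch below assumes the default `kubectl get pod` column layout (NAME, READY, STATUS, ...); the function name `unhealthy_pods` is hypothetical.

```shell
# Hypothetical filter: reads `kubectl get pod` output on stdin and prints
# the name and status of every pod that is neither Running nor Completed.
unhealthy_pods() {
    # NR > 1 skips the header row; $3 is the STATUS column.
    awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { print $1, $3 }'
}

# Usage (requires a working cluster):
# kubectl get pod -n kubeflow | unhealthy_pods
```

An empty result means every pod is either Running or Completed.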

Note: with the Calico network plugin, some pods failed to start on this Kubernetes cluster, so this guide uses the Flannel network plugin instead.

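**Key point:** if the minio pod fails, it also keeps other pods from starting. The cause is a PV that could not be created. Run kubectl describe pod xxxxxx -n kubeflow to see the exact error, then go to the /data1 directory and create the folder kubeflow-pv2 (create whichever folders the error message actually names) to resolve it.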
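The directory fix can be sketched as a small helper. The function name `make_pv_dirs` is hypothetical; pass it the base path and the folder names that `kubectl describe pod` reports as missing.

```shell
# Hypothetical helper: create the directories that the PVs expect.
# Usage: make_pv_dirs BASE_DIR NAME...
make_pv_dirs() {
    local base="$1"
    shift
    local name
    for name in "$@"; do
        # -p creates missing parents and is a no-op if the directory exists.
        mkdir -p "$base/$name"
    done
}

# Example for the case described above (run as root, or with sudo):
# make_pv_dirs /data1 kubeflow-pv2
```

Once the directory exists, the pending pod should get scheduled on a later retry; recheck it with kubectl describe pod.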

Start the Kubeflow UI by forwarding the Istio ingress gateway to port 8088 on all interfaces of the host:

nohup kubectl port-forward -n istio-system svc/istio-ingressgateway 8088:80 --address=0.0.0.0 &

That completes the Kubeflow deployment. Likes, bookmarks, and comments are welcome ^^