动手学深度学习之经典的卷积神经网络之AlexNet

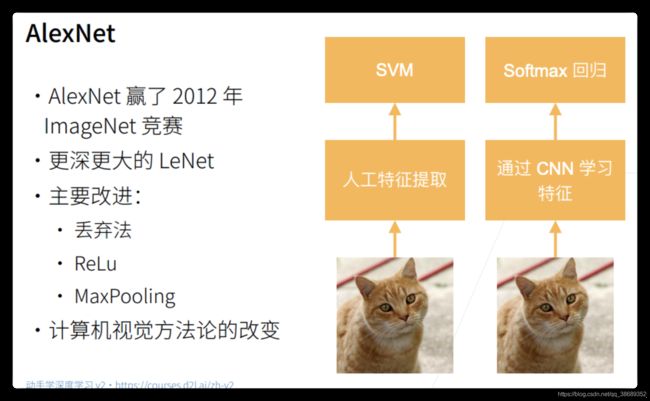

AlexNet

- AlexNet本质是是一个更深更大的LeNet,本质上并没有什么区别

- 主要的改进

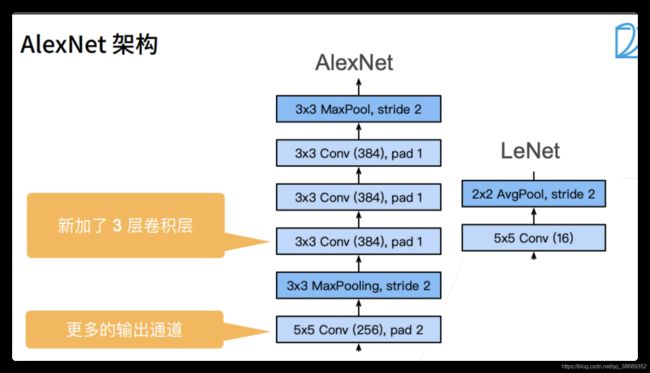

AlexNet架构

-

AlexNet的输入是一个224 * 224的矩阵,它的通道数为3,因为它是一个RGB的图片。第一个卷积层:它的卷积核的大小是11 * 11,通道数为96,stride=4。第一个池化层:使用的是最大池化,池化窗口的大小为3 * 3,stride=2。卷积层:卷积核的大小为5 * 5,通道数为256,padding=2,这样就可以识别更多的模式了。池化层:池化窗口为:3 * 3,stride=2。三个一样的卷积层:卷积核为:3 * 3, 通道数为384,padding=1。池化层:池化窗口为3 * 3, stride=2。两个隐藏层:隐藏层的大小是4096.

-

更多细节

- 激活函数从Simoid变到了ReLu(减缓梯度消失)

- 隐藏全连接层之后加入了丢弃层

- 数据增强

复杂度对比

总结

代码实现

import torch

from torch import nn

class Reshape(nn.Module):

def forward(self, X):

return X.view(-1, 3, 224, 224)

# AlexNet网络架构

AlexNet = nn.Sequential(

nn.Conv2d(1, 96, kernel_size=11, stride=4, padding=1), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(),

nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(),

nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),nn.Flatten(),

nn.Linear(6400, 4096), nn.ReLU(), nn.Dropout(p=0.5),

nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(p=0.5),

nn.Linear(4096, 10)

)

# 看看每一层是怎么变化的

X = torch.randn(1, 1, 224, 224)

for layer in AlexNet:

X = layer(X)

print(layer.__class__.__name__, "Output shape:\t", X.shape)

Conv2d Output shape: torch.Size([1, 96, 54, 54])

ReLU Output shape: torch.Size([1, 96, 54, 54])

MaxPool2d Output shape: torch.Size([1, 96, 26, 26])

Conv2d Output shape: torch.Size([1, 256, 26, 26])

ReLU Output shape: torch.Size([1, 256, 26, 26])

MaxPool2d Output shape: torch.Size([1, 256, 12, 12])

Conv2d Output shape: torch.Size([1, 384, 12, 12])

ReLU Output shape: torch.Size([1, 384, 12, 12])

Conv2d Output shape: torch.Size([1, 384, 12, 12])

ReLU Output shape: torch.Size([1, 384, 12, 12])

Conv2d Output shape: torch.Size([1, 256, 12, 12])

ReLU Output shape: torch.Size([1, 256, 12, 12])

MaxPool2d Output shape: torch.Size([1, 256, 5, 5])

Flatten Output shape: torch.Size([1, 6400])

Linear Output shape: torch.Size([1, 4096])

ReLU Output shape: torch.Size([1, 4096])

Dropout Output shape: torch.Size([1, 4096])

Linear Output shape: torch.Size([1, 4096])

ReLU Output shape: torch.Size([1, 4096])

Dropout Output shape: torch.Size([1, 4096])

Linear Output shape: torch.Size([1, 10])

/Users/tiger/opt/anaconda3/envs/d2l-zh/lib/python3.8/site-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at ../c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

下面是使用Google Colab的GPU训练的结果

import torch

from d2l import torch as d2l

from torch import nn

class Reshape(nn.Module):

def forward(self, X):

return X.view(-1, 3, 224, 224)

# AlexNet网络架构

AlexNet = nn.Sequential(

nn.Conv2d(1, 96, kernel_size=11, stride=4, padding=1), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),

nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(),

nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(),

nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),

nn.MaxPool2d(kernel_size=3, stride=2),nn.Flatten(),

nn.Linear(6400, 4096), nn.ReLU(), nn.Dropout(p=0.5),

nn.Linear(4096, 4096), nn.ReLU(), nn.Dropout(p=0.5),

nn.Linear(4096, 10)

)

batch_size = 128

train_iter, test_iter = d2l.load_data_fashion_mnist(batch_size, resize=224)

lr, num_epochs = 0.01, 10

d2l.train_ch6(AlexNet, train_iter, test_iter, num_epochs, lr, d2l.try_gpu())

loss 0.329, train acc 0.881, test acc 0.884

1469.2 examples/sec on cuda:0

!nvidia-smi

Wed Aug 18 04:38:12 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 470.57.02 Driver Version: 460.32.03 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 48C P8 10W / 70W | 0MiB / 15109MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+