OpenCV: Matching Across Different Resolutions

- 项目要求

-

- OpenCV模板匹配

-

- 模板匹配的工作方式

- 模板匹配的匹配方式

- 模板匹配存在的问题

- 解决方法

-

- 方法1:直方图+自适应模板匹配

-

- 结果

- 方法二:SIFT

-

- 效果

- 方法三:灰度匹配+模板匹配

-

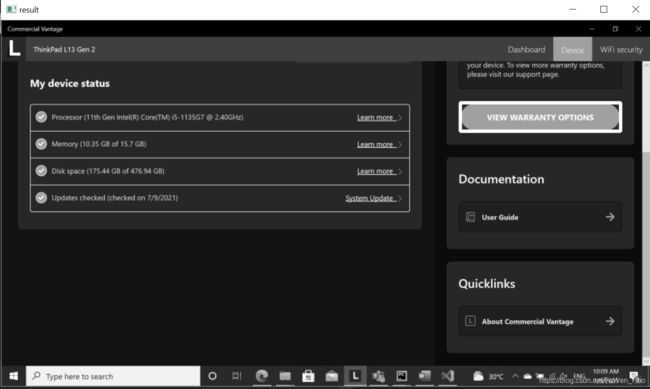

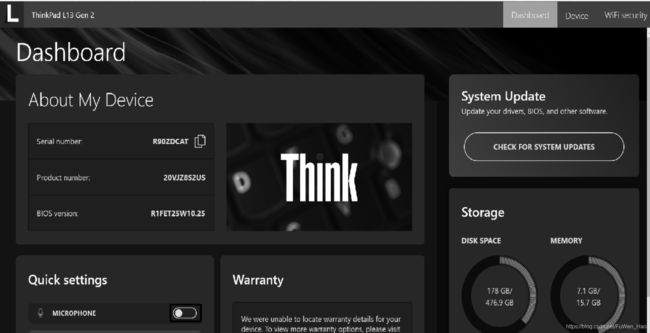

- 结果和结论

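Project requirements

In UI automation we need to find where an element is located inside an app. Normally this is done with an Automation ID or an XPath, but some controls and elements cannot be located that way. So we abstract the problem as follows: given an image, draw a box around the position of the target element.

OpenCV template matching

How template matching works

Template matching works in much the same way as histogram back projection. Roughly, a window the size of the template is slid across the input image, and at each position the image patch under the window is compared with the template.

Suppose we have a 100x100 input image and a 10x10 template image. The search goes like this:

(1) Starting at the top-left corner (0,0) of the input image, cut out a temporary patch spanning (0,0) to (10,10);

(2) Compare the temporary patch with the template image and call the comparison result c;

(3) The comparison result c becomes the pixel value at (0,0) of the result image;

(4) Cut out the temporary patch spanning (0,1) to (10,11) of the input image, compare it, and record the result in the result image;

(5) Repeat steps (1)~(4) until the bottom-right corner of the input image is reached. The result image has one pixel per window position, so it is 91x91 in this example.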
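The steps above can be sketched in a few lines of NumPy. This is only an illustration of the sliding-window idea, not how OpenCV implements it internally; the function name `match_template_sqdiff` is made up for this example, and the sum of squared differences is assumed as the comparison result c.

```python
import numpy as np

def match_template_sqdiff(image, template):
    """Slide `template` over `image` and return the map of squared differences."""
    H, W = image.shape
    h, w = template.shape
    # One result pixel per valid top-left position of the window.
    result = np.empty((H - h + 1, W - w + 1), dtype=np.float64)
    tmpl = template.astype(np.float64)
    for y in range(result.shape[0]):
        for x in range(result.shape[1]):
            patch = image[y:y + h, x:x + w].astype(np.float64)  # steps (1) and (4)
            result[y, x] = np.sum((patch - tmpl) ** 2)          # steps (2) and (3)
    return result

# Synthetic 100x100 input; the 10x10 template is cut out at x=25, y=40.
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(100, 100), dtype=np.uint8)
template = image[40:50, 25:35].copy()

result = match_template_sqdiff(image, template)
y, x = np.unravel_index(np.argmin(result), result.shape)
print(result.shape, (int(x), int(y)))  # (91, 91) (25, 40)
```

With squared differences the best match is the minimum of the result image, which is why `argmin` is used here.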

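As you can see, histogram back projection compares histograms, whereas template matching compares the pixel values of the image. Template matching is somewhat faster than histogram back projection, but I personally think back projection is more robust.

Template matching methods

OpenCV and Emgu CV support the following six comparison methods (recent OpenCV versions drop the CV_ prefix, for example cv2.TM_SQDIFF in Python):

CV_TM_SQDIFF (squared difference): matches using the squared difference. The best match value is 0; the worse the match, the larger the value.

CV_TM_CCORR (cross-correlation): matches using multiplication. The larger the value, the better the match.

CV_TM_CCOEFF (correlation coefficient): correlates the template and the image patch after subtracting their means. The larger the value, the better the match.

CV_TM_SQDIFF_NORMED (normalized squared difference)

CV_TM_CCORR_NORMED (normalized cross-correlation)

CV_TM_CCOEFF_NORMED (normalized correlation coefficient): 1 is a perfect match and -1 is the worst match.

Judging from my own tests, the computation times of these methods are quite close (which differs from what the book Learning OpenCV says), so we can simply pick whichever method suits the scenario.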
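To make the six scores concrete, here is a minimal NumPy sketch that computes them for a single window position. It follows the formulas in the OpenCV documentation as I understand them; the helper name `match_scores` and the dictionary keys are chosen for this example only, and a constant (zero-variance) patch would need extra handling to avoid division by zero.

```python
import numpy as np

def match_scores(patch, template):
    """Return the six template-matching scores for one window position."""
    I = patch.astype(np.float64)
    T = template.astype(np.float64)
    Ic, Tc = I - I.mean(), T - T.mean()                    # mean-subtracted versions
    norm = np.sqrt(np.sum(I ** 2) * np.sum(T ** 2))
    norm_c = np.sqrt(np.sum(Ic ** 2) * np.sum(Tc ** 2))
    return {
        "SQDIFF": np.sum((T - I) ** 2),
        "SQDIFF_NORMED": np.sum((T - I) ** 2) / norm,
        "CCORR": np.sum(T * I),
        "CCORR_NORMED": np.sum(T * I) / norm,
        "CCOEFF": np.sum(Tc * Ic),
        "CCOEFF_NORMED": np.sum(Tc * Ic) / norm_c,
    }

rng = np.random.default_rng(0)
template = rng.integers(0, 256, size=(10, 10), dtype=np.uint8)
same = match_scores(template, template)             # window identical to the template
inverted = match_scores(255 - template, template)   # brightness-inverted window
for name in same:
    print(f"{name:14s} identical={same[name]:.3f} inverted={inverted[name]:.3f}")
```

For the identical window the squared difference is 0 and both normalized correlation scores are 1. For the inverted window the normalized correlation coefficient drops to -1, which is the "worst match" end of its range.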

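Problems with template matching

1. Images at a different resolution cannot be matched correctly. In real tasks, the resolution of our template image may change.

2. Images with slight deformation cannot be matched correctly either.

3. Screenshot content cannot be matched.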

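On fitting the display to the screen:

#include

To display the image in full, however, repeated trials showed that only with the flag parameter set to WINDOW_NORMAL is the complete image shown while the window is resized by hand.

Solutions

Method 1: histogram + adaptive template matching

#include

histogram.h

// Histogram comparison

#include

General idea:

The given template is already at a fairly low resolution, so the only thing we can change is the screenshot. We scale the screenshot step by step and store the result of each step in a map. The key of the map is the histogram similarity, and the value is a Point and a Rect (the coordinates of the match and the region that was compared). Because a map keeps its entries ordered by key, the results are sorted automatically.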

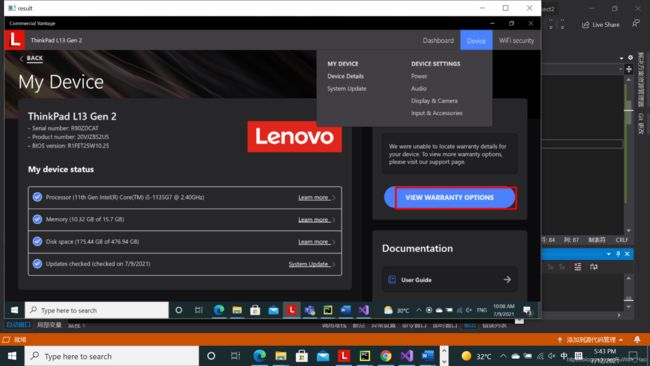

Results

Method 2: SIFT

    #!/usr/bin/env python
    # Python 2/3 compatibility
    from __future__ import print_function

    import numpy as np
    import cv2


    def init_feature():
        # SIFT detector, keeping at most 700 features
        detector = cv2.SIFT_create(700)
        # Alternative detector: cv2.BRISK_create()
        # Distance norm used to compare descriptors (L2 suits SIFT)
        norm = cv2.NORM_L2
        # For binary descriptors such as BRISK, use cv2.NORM_HAMMING instead
        # Brute-force matcher over all descriptors
        matcher = cv2.BFMatcher(norm)
        return detector, matcher


    def filter_matches(kp1, kp2, matches, ratio=0.75):
        # Ratio test: keep a match only if it is clearly better than the runner-up
        mkp1, mkp2 = [], []
        for m in matches:
            if len(m) == 2 and m[0].distance < m[1].distance * ratio:
                m = m[0]
                mkp1.append(kp1[m.queryIdx])
                mkp2.append(kp2[m.trainIdx])
        p1 = np.float32([kp.pt for kp in mkp1])
        p2 = np.float32([kp.pt for kp in mkp2])
        kp_pairs = list(zip(mkp1, mkp2))  # a list, so it can be reused on Python 3
        return p1, p2, kp_pairs


    def explore_match(win, img1, img2, kp_pairs, status=None, H=None):
        # Put both grayscale images side by side on one canvas
        h1, w1 = img1.shape[:2]
        h2, w2 = img2.shape[:2]
        vis = np.zeros((max(h1, h2), w1 + w2), np.uint8)
        vis[:h1, :w1] = img1
        vis[:h2, w1:w1 + w2] = img2
        vis = cv2.cvtColor(vis, cv2.COLOR_GRAY2BGR)

        if H is not None:
            # Project the corners of img1 into img2 and outline the region in red
            corners = np.float32([[0, 0], [w1, 0], [w1, h1], [0, h1]])
            corners = np.int32(cv2.perspectiveTransform(corners.reshape(1, -1, 2), H).reshape(-1, 2) + (w1, 0))
            cv2.polylines(vis, [corners], True, (0, 0, 255))

        cv2.imshow(win, vis)
        return vis


    if __name__ == '__main__':
        img1 = cv2.imread('button.png', 0)   # template, read as grayscale
        img2 = cv2.imread('test1.png', 0)    # screenshot, read as grayscale
        if img1 is None or img2 is None:
            raise IOError('could not read button.png or test1.png')

        # Shrink both images by the same factor before detecting features
        smaller_img1 = cv2.resize(img1, None, fx=0.5, fy=0.5, interpolation=cv2.INTER_AREA)
        smaller_img2 = cv2.resize(img2, None, fx=0.5, fy=0.5, interpolation=cv2.INTER_AREA)

        detector, matcher = init_feature()
        kp1, desc1 = detector.detectAndCompute(smaller_img1, None)
        kp2, desc2 = detector.detectAndCompute(smaller_img2, None)
        raw_matches = matcher.knnMatch(desc1, trainDescriptors=desc2, k=2)
        p1, p2, kp_pairs = filter_matches(kp1, kp2, raw_matches)

        if len(p1) >= 4:
            H, status = cv2.findHomography(p1, p2, cv2.RANSAC, 5.0)
            if status is not None:
                print('%d / %d inliers/matched' % (np.sum(status), len(status)))
            vis = explore_match('find_obj', smaller_img1, smaller_img2, kp_pairs, status, H)
            cv2.waitKey()
            cv2.destroyAllWindows()
        else:
            print('%d matches found, not enough for homography estimation' % len(p1))

This method combines scaling with SIFT. In practice, its results are sometimes poor.
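One detail worth noting: the script estimates the homography on images that were both shrunk by 0.5, so the outline it draws is in half-resolution coordinates. To obtain the element's position in the original screenshot, the homography can be rescaled. The NumPy sketch below shows one way to do this, assuming both images were resized by the same factor; `rescale_homography` and `project_box` are hypothetical helpers, not part of OpenCV.

```python
import numpy as np

def rescale_homography(H_small, scale):
    """Convert a homography estimated on images resized by `scale`
    into one that acts on full-resolution pixel coordinates."""
    S = np.diag([scale, scale, 1.0])   # maps full-resolution to resized coordinates
    return np.linalg.inv(S) @ H_small @ S

def project_box(H, width, height):
    """Map the four corners of a width x height template through H (4x2 result)."""
    corners = np.array([[0, 0], [width, 0], [width, height], [0, height]], dtype=np.float64)
    homog = np.hstack([corners, np.ones((4, 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Example: at half resolution the template was found shifted by (50, 20) pixels.
H_small = np.array([[1.0, 0.0, 50.0],
                    [0.0, 1.0, 20.0],
                    [0.0, 0.0, 1.0]])
H_full = rescale_homography(H_small, 0.5)
box = project_box(H_full, 60, 30)   # full-resolution template of 60x30 pixels
print(box)
```

Here the full-resolution box has its top-left corner at (100, 40) and its bottom-right corner at (160, 70), that is, twice the half-resolution offsets.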

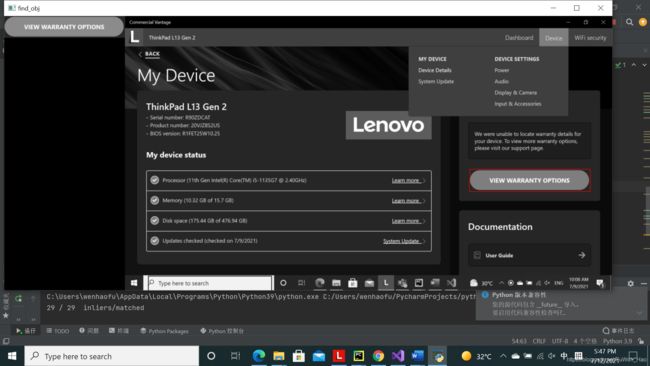

Results

Method 3: grayscale matching + template matching

GrayMatching.h

#include