- PyTorch & TensorFlow速成复习:从基础语法到模型部署实战(附FPGA移植衔接)

阿牛的药铺

算法移植部署pytorchtensorflowfpga开发

PyTorch&TensorFlow速成复习:从基础语法到模型部署实战(附FPGA移植衔接)引言:为什么算法移植工程师必须掌握框架基础?针对光学类产品算法FPGA移植岗位需求(如可见光/红外图像处理),深度学习框架是算法落地的"桥梁"——既要用PyTorch/TensorFlow验证算法可行性,又要将训练好的模型(如CNN、目标检测)转换为FPGA可部署的格式(ONNX、TFLite)。本文采用"

- 深度学习模型表征提取全解析

ZhangJiQun&MXP

教学2024大模型以及算力2021AIpython深度学习人工智能pythonembedding语言模型

模型内部进行表征提取的方法在自然语言处理(NLP)中,“表征(Representation)”指将文本(词、短语、句子、文档等)转化为计算机可理解的数值形式(如向量、矩阵),核心目标是捕捉语言的语义、语法、上下文依赖等信息。自然语言表征技术可按“静态/动态”“有无上下文”“是否融入知识”等维度划分一、传统静态表征(无上下文,词级为主)这类方法为每个词分配固定向量,不考虑其在具体语境中的含义(无法解

- 【Qualcomm】高通SNPE框架简介、下载与使用

Jackilina_Stone

人工智能QualcommSNPE

目录一高通SNPE框架1SNPE简介2QNN与SNPE3Capabilities4工作流程二SNPE的安装与使用1下载2Setup3SNPE的使用概述一高通SNPE框架1SNPE简介SNPE(SnapdragonNeuralProcessingEngine),是高通公司推出的面向移动端和物联网设备的深度学习推理框架。SNPE提供了一套完整的深度学习推理框架,能够支持多种深度学习模型,包括Pytor

- vllm本地部署bge-reranker-v2-m3模型API服务实战教程

雷 电法王

大模型部署linuxpythonvscodelanguagemodel

文章目录一、说明二、配置环境2.1安装虚拟环境2.2安装vllm2.3对应版本的pytorch安装2.4安装flash_attn2.5下载模型三、运行代码3.1启动服务3.2调用代码验证一、说明本文主要介绍vllm本地部署BAAI/bge-reranker-v2-m3模型API服务实战教程本文是在Ubuntu24.04+CUDA12.8+Python3.12环境下复现成功的二、配置环境2.1安装虚

- Qualcomm Hexagon DSP 与 AI Engine 架构深度分析:从微架构原理到 Android 部署实战

观熵

国产NPU×Android推理优化人工智能架构android

QualcommHexagonDSP与AIEngine架构深度分析:从微架构原理到Android部署实战关键词QualcommHexagon、AIEngine、HTA、HVX、HMX、Snapdragon、DSP推理加速、AIC、QNNSDK、Tensor编排、AndroidNNAPI、异构调度摘要HexagonDSP架构是QualcommSnapdragonSoC平台中长期演进的异构计算核心之一

- 深度学习篇---昇腾NPU&CANN 工具包

Atticus-Orion

上位机知识篇图像处理篇深度学习篇深度学习人工智能NPU昇腾CANN

介绍昇腾NPU是华为推出的神经网络处理器,具有强大的AI计算能力,而CANN工具包则是面向AI场景的异构计算架构,用于发挥昇腾NPU的性能优势。以下是详细介绍:昇腾NPU架构设计:采用达芬奇架构,是一个片上系统,主要由特制的计算单元、大容量的存储单元和相应的控制单元组成。集成了多个CPU核心,包括控制CPU和AICPU,前者用于控制处理器整体运行,后者承担非矩阵类复杂计算。此外,还拥有AICore

- 深度学习图像分类数据集—桃子识别分类

AI街潜水的八角

深度学习图像数据集深度学习分类人工智能

该数据集为图像分类数据集,适用于ResNet、VGG等卷积神经网络,SENet、CBAM等注意力机制相关算法,VisionTransformer等Transformer相关算法。数据集信息介绍:桃子识别分类:['B1','M2','R0','S3']训练数据集总共有6637张图片,每个文件夹单独放一种数据各子文件夹图片统计:·B1:1601张图片·M2:1800张图片·R0:1601张图片·S3:

- NumPy-@运算符详解

GG不是gg

numpynumpy

NumPy-@运算符详解一、@运算符的起源与设计目标1.从数学到代码:符号的统一2.设计目标二、@运算符的核心语法与运算规则1.基础用法:二维矩阵乘法2.一维向量的矩阵语义3.高维数组:批次矩阵运算4.广播机制:灵活的形状匹配三、@运算符与其他乘法方式的核心区别1.对比`np.dot()`2.对比元素级乘法`*`3.对比`np.matrix`的`*`运算符四、典型应用场景:从基础到高阶1.深度学习

- NLP_知识图谱_大模型——个人学习记录

macken9999

自然语言处理知识图谱大模型自然语言处理知识图谱学习

1.自然语言处理、知识图谱、对话系统三大技术研究与应用https://github.com/lihanghang/NLP-Knowledge-Graph深度学习-自然语言处理(NLP)-知识图谱:知识图谱构建流程【本体构建、知识抽取(实体抽取、关系抽取、属性抽取)、知识表示、知识融合、知识存储】-元気森林-博客园https://www.cnblogs.com/-402/p/16529422.htm

- 解决 Python 包安装失败问题:以 accelerate 为例

在使用Python开发项目时,我们经常会遇到依赖包安装失败的问题。今天,我们就以accelerate包为例,详细探讨一下可能的原因以及解决方法。通过这篇文章,你将了解到Python包安装失败的常见原因、如何切换镜像源、如何手动安装包,以及一些实用的注意事项。一、问题背景在开发一个深度学习项目时,我需要安装accelerate包来优化模型的训练过程。然而,当我运行以下命令时:bash复制pipins

- 从RNN循环神经网络到Transformer注意力机制:解析神经网络架构的华丽蜕变

熊猫钓鱼>_>

神经网络rnntransformer

1.引言在自然语言处理和序列建模领域,神经网络架构经历了显著的演变。从早期的循环神经网络(RNN)到现代的Transformer架构,这一演变代表了深度学习方法在处理序列数据方面的重大进步。本文将深入比较这两种架构,分析它们的工作原理、优缺点,并通过实验结果展示它们在实际应用中的性能差异。2.循环神经网络(RNN)2.1基本原理循环神经网络是专门为处理序列数据而设计的神经网络架构。RNN的核心思想

- pycharm无法识别conda环境(已解决)

Reborker

pycharmcondaide

文章目录前言研究过程解决办法前言好久不用pycharm了,打开后提示更新,更新到了2023.1版本。安装conda后在新建了一个虚拟环境pytorch,但是无论是基础环境还是虚拟环境,pycharm都识别不出conda里的python.exe(如图)。如果不想看啰嗦直接看后面的解决办法,比较闲的话可以看看我的研究过程。研究过程看了很多博客,尝试了以下解决办法:加载conda.bat文件,虽然出现了

- jetson agx orin 刷机、cuda、pytorch配置指南【亲测有效】

jetsonagxorin刷机指南注意事项刷机具体指南cuda环境配置指南Anconda、Pytorch配置注意事项1.使用设备自带usbtoc的传输线时,注意c口插到orin左侧的口,右侧的口不支持数据传输;2.刷机时需准备ubuntu系统,可以是虚拟机,注意安装SDKManager刷机时,JetPack版本要选对,JetPack6.0的对应ubuntu22,cuda12版本,对应pytorch

- 如何使用Python实现交通工具识别

如何使用Python实现交通工具识别文章目录技术架构功能流程识别逻辑用户界面增强特性依赖项主要类别内容展示该系统是一个基于深度学习的交通工具识别工具,具备以下核心功能与特点:技术架构使用预训练的ResNet50卷积神经网络模型(来自ImageNet数据集)集成图像增强预处理技术(随机裁剪、旋转、翻转等)采用多数投票机制提升预测稳定性基于置信度评分的结果筛选策略功能流程用户通过GUI界面选择待识别图

- Yolov5-obb(旋转目标poly_nms_cuda.cu编译bug记录及解决方案)

关于在执行pythonsetup.pydevelop#or"pipinstall-v-e."时poly_nms_cuda.cu报错问题。前面步骤严格按照install.md环境1.pytorch版本较低时(我的是1.10):poly_nms_cuda.cu文件添加”#defineeps1e-8“,删除“constdoubleeps=1E-8;”这句2.pytorch版本较高时(我用的是1.27)h

- Python OpenCV教程从入门到精通的全面指南【文末送书】

一键难忘

pythonopencv开发语言

文章目录PythonOpenCV从入门到精通1.安装OpenCV2.基本操作2.1读取和显示图像2.2图像基本操作3.图像处理3.1图像转换3.2图像阈值处理3.3图像平滑4.边缘检测和轮廓4.1Canny边缘检测4.2轮廓检测5.高级操作5.1特征检测5.2目标跟踪5.3深度学习与OpenCVPythonOpenCV从入门到精通【文末送书】PythonOpenCV从入门到精通OpenCV(Ope

- 第八周 tensorflow实现猫狗识别

降花绘

365天深度学习tensorflow系列tensorflow深度学习人工智能

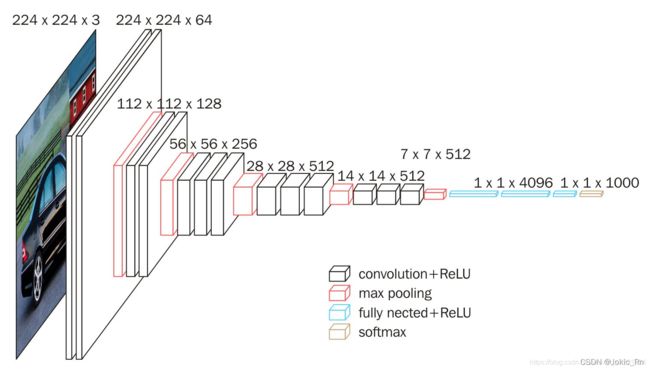

本文为365天深度学习训练营内部限免文章(版权归K同学啊所有)**参考文章地址:[TensorFlow入门实战|365天深度学习训练营-第8周:猫狗识别(训练营内部成员可读)]**作者:K同学啊文章目录一、本周学习内容:1、自己搭建VGG16网络2、了解model.train_on_batch()3、了解tqdm,并使用tqdm实现可视化进度条二、前言三、电脑环境四、前期准备1、导入相关依赖项2、

- 深度学习实战-使用TensorFlow与Keras构建智能模型

程序员Gloria

Python超入门TensorFlowpython

深度学习实战-使用TensorFlow与Keras构建智能模型深度学习已经成为现代人工智能的重要组成部分,而Python则是实现深度学习的主要编程语言之一。本文将探讨如何使用TensorFlow和Keras构建深度学习模型,包括必要的代码实例和详细的解析。1.深度学习简介深度学习是机器学习的一个分支,使用多层神经网络来学习和表示数据中的复杂模式。其广泛应用于图像识别、自然语言处理、推荐系统等领域。

- AI在垂直领域的深度应用:医疗、金融与自动驾驶的革新之路

AI在垂直领域的深度应用:医疗、金融与自动驾驶的革新之路一、医疗领域:AI驱动的精准诊疗与效率提升1.医学影像诊断AI算法通过深度学习技术,已实现对X光、CT、MRI等影像的快速分析,辅助医生检测癌症、骨折等疾病。例如,GoogleDeepMind的AI系统在乳腺癌筛查中,误检率比人类专家低9.4%;中国的推想医疗AI系统可在20秒内完成肺部CT扫描分析,为急诊救治争取黄金时间。2.药物研发传统药

- 专题:2025云计算与AI技术研究趋势报告|附200+份报告PDF、原数据表汇总下载

原文链接:https://tecdat.cn/?p=42935关键词:2025,云计算,AI技术,市场趋势,深度学习,公有云,研究报告云计算和AI技术正以肉眼可见的速度重塑商业世界。过去十年,全球云服务收入激增8倍,中国云计算市场规模突破6000亿元,而深度学习算法的应用量更是暴涨400倍。这些数字背后,是企业从“自建机房”到“云原生开发”的转型,是AI从“实验室”走向“产业级应用”的跨越。本报告

- 【深度学习解惑】在实践中如何发现和修正RNN训练过程中的数值不稳定?

云博士的AI课堂

大模型技术开发与实践哈佛博后带你玩转机器学习深度学习深度学习rnn人工智能tensorflowpytorch神经网络机器学习

在实践中发现和修正RNN训练过程中的数值不稳定目录引言与背景介绍原理解释代码说明与实现应用场景与案例分析实验设计与结果分析性能分析与技术对比常见问题与解决方案创新性与差异性说明局限性与挑战未来建议和进一步研究扩展阅读与资源推荐图示与交互性内容语言风格与通俗化表达互动交流1.引言与背景介绍循环神经网络(RNN)在处理序列数据时表现出色,但训练过程中常面临梯度消失和梯度爆炸问题,导致数值不稳定。当网络

- 【深度学习实战】当前三个最佳图像分类模型的代码详解

云博士的AI课堂

大模型技术开发与实践哈佛博后带你玩转机器学习深度学习深度学习人工智能分类模型机器学习TransformerEfficientNetConvNeXt

下面给出三个在当前图像分类任务中精度表现突出的模型示例,分别基于SwinTransformer、EfficientNet与ConvNeXt。每个模型均包含:训练代码(使用PyTorch)从预训练权重开始微调(也可注释掉预训练选项,从头训练)数据集目录结构:└──dataset_root├──buy#第一类图像└──nobuy#第二类图像随机拆分:80%训练,20%验证每个Epoch输出一次loss

- 第35周—————糖尿病预测模型优化探索

目录目录前言1.检查GPU2.查看数据编辑3.划分数据集4.创建模型与编译训练5.编译及训练模型6.结果可视化7.总结前言本文为365天深度学习训练营中的学习记录博客原作者:K同学啊1.检查GPUimporttorch.nnasnnimporttorch.nn.functionalasFimporttorchvision,torch#设置硬件设备,如果有GPU则使用,没有则使用cpudevice=

- 深度学习预备知识

AmazingMQ

深度学习人工智能

1.Tensor张量定义:张量(tensor)表示一个由数值组成的数组,这个数组可能有多个维度(轴)。具有一个轴的张量对应数学上的向量,具有两个轴的张量对应数学上的矩阵,具有两个以上轴的张量目前没有特定的数学名称。importtorch#arange创建一个行向量x,这个行向量包含以0开始的前12个整数。x=torch.arange(12)print("x=",x)#x=tensor([0,1,2

- 根茎式装配体(RA)作为下一代协同智能范式的理论、架构与应用

由数入道

人工智能思维框架软件工程智能体

一、引言——范式危机与新大陆的召唤1.1表征主义的黄昏:当前AI协同范式的认知天花板自艾伦·图灵在《计算机器与智能》中播下思想的种子以来,人工智能的漫长征途始终被一个强大而内隐的哲学范式所笼罩——我们称之为“表征主义”(Representationism)。这一范式,无论其外在形态如何演变,从早期的符号逻辑、专家系统,到如今风靡全球的深度学习神经网络,其核心信念从未动摇:智能的核心,在于构建一个关

- Manus AI与多语言手写识别

ManusAI与多语言手写识别背景与概述手写识别技术的发展现状与挑战ManusAI的核心技术与应用场景多语言手写识别的市场需求与难点ManusAI的技术架构深度学习在手写识别中的应用多语言支持的模型设计数据预处理与特征提取方法多语言手写识别的关键挑战不同语言字符的多样性处理上下文语义与书写风格适应性低资源语言的训练数据获取解决方案与优化策略迁移学习在多语言任务中的应用端到端模型的优化与轻量化用户反

- 基于LIDC-IDRI肺结节肺癌数据集的人工智能深度学习分类良性和恶性肺癌(Python 全代码)全流程解析(二)

基于LIDC-IDRI肺结节肺癌数据集的人工智能深度学习分类良性和恶性肺癌(Python全代码)全流程解析(二)1环境配置和数据集预处理1.1环境配置1.1数据集预处理2深度学习模型训练和评估2.1深度学习模型训练2.1深度学习模型评估笑话一则开心一下喽完整代码如下:模型文件如下深度学习模型讲解---待续第一部分内容的传送门第三部分传送门1环境配置和数据集预处理1.1环境配置环境配置建议使用ana

- 深度学习交互式图像分割技术演进与突破

wang1776866571

深度学习交互式分割深度学习人工智能交互式分割

说明本文为作者读研期间基于交互式图像分割领域公开文献的系统梳理与个人理解总结,所有内容均为原创撰写(ai辅助创作),未直接复制或抄袭他人成果。文中涉及的算法、模型及实验结论均参考自领域内公开发表的学术论文(具体文献见文末参考文献列表)。本文旨在为交互式图像分割领域的学习者提供一份结构化的综述参考,内容涵盖技术演进、核心方法、关键技术优化及应用前景,希望能为相关研究提供启发。摘要:本文系统综述了基于

- 美团辟谣「30万本科生送外卖」;微软裁员再引争议,员工未归属股票被全部回收;传OpenAI“开放权重模型”最快下周上线|极客头条

极客日报

microsoft

「极客头条」——技术人员的新闻圈!CSDN的读者朋友们好,「极客头条」来啦,快来看今天都有哪些值得我们技术人关注的重要新闻吧。(投稿或寻求报道:

[email protected])整理|苏宓出品|CSDN(ID:CSDNnews)一分钟速览新闻点!美团辟谣「30万本科生送外卖」传字节跳动旗下沐瞳科技已收购杭州心光流美2025福布斯中国最佳CEO榜单揭晓:马化腾、雷军、王传福排名前三武汉大学集成电路学

- Text2Reward学习笔记

1.提示词请问,“glew”是一个RL工程师常用的工具库吗?请问,thiscodebase主要是做什么用的呀?1.1解释代码是否可以请您根据thiscodebase的主要功能,参考PyTorch的文档格式和文档风格,使用Markdown格式为选中的代码行编写一段相应的文档说明呢?2.项目环境配置2.1新建环境[official]2.1.1Featurizecondacreate-p~/work/d

- xml解析

小猪猪08

xml

1、DOM解析的步奏

准备工作:

1.创建DocumentBuilderFactory的对象

2.创建DocumentBuilder对象

3.通过DocumentBuilder对象的parse(String fileName)方法解析xml文件

4.通过Document的getElem

- 每个开发人员都需要了解的一个SQL技巧

brotherlamp

linuxlinux视频linux教程linux自学linux资料

对于数据过滤而言CHECK约束已经算是相当不错了。然而它仍存在一些缺陷,比如说它们是应用到表上面的,但有的时候你可能希望指定一条约束,而它只在特定条件下才生效。

使用SQL标准的WITH CHECK OPTION子句就能完成这点,至少Oracle和SQL Server都实现了这个功能。下面是实现方式:

CREATE TABLE books (

id &

- Quartz——CronTrigger触发器

eksliang

quartzCronTrigger

转载请出自出处:http://eksliang.iteye.com/blog/2208295 一.概述

CronTrigger 能够提供比 SimpleTrigger 更有具体实际意义的调度方案,调度规则基于 Cron 表达式,CronTrigger 支持日历相关的重复时间间隔(比如每月第一个周一执行),而不是简单的周期时间间隔。 二.Cron表达式介绍 1)Cron表达式规则表

Quartz

- Informatica基础

18289753290

InformaticaMonitormanagerworkflowDesigner

1.

1)PowerCenter Designer:设计开发环境,定义源及目标数据结构;设计转换规则,生成ETL映射。

2)Workflow Manager:合理地实现复杂的ETL工作流,基于时间,事件的作业调度

3)Workflow Monitor:监控Workflow和Session运行情况,生成日志和报告

4)Repository Manager:

- linux下为程序创建启动和关闭的的sh文件,scrapyd为例

酷的飞上天空

scrapy

对于一些未提供service管理的程序 每次启动和关闭都要加上全部路径,想到可以做一个简单的启动和关闭控制的文件

下面以scrapy启动server为例,文件名为run.sh:

#端口号,根据此端口号确定PID

PORT=6800

#启动命令所在目录

HOME='/home/jmscra/scrapy/'

#查询出监听了PORT端口

- 人--自私与无私

永夜-极光

今天上毛概课,老师提出一个问题--人是自私的还是无私的,根源是什么?

从客观的角度来看,人有自私的行为,也有无私的

- Ubuntu安装NS-3 环境脚本

随便小屋

ubuntu

将附件下载下来之后解压,将解压后的文件ns3environment.sh复制到下载目录下(其实放在哪里都可以,就是为了和我下面的命令相统一)。输入命令:

sudo ./ns3environment.sh >>result

这样系统就自动安装ns3的环境,运行的结果在result文件中,如果提示

com

- 创业的简单感受

aijuans

创业的简单感受

2009年11月9日我进入a公司实习,2012年4月26日,我离开a公司,开始自己的创业之旅。

今天是2012年5月30日,我忽然很想谈谈自己创业一个月的感受。

当初离开边锋时,我就对自己说:“自己选择的路,就是跪着也要把他走完”,我也做好了心理准备,准备迎接一次次的困难。我这次走出来,不管成败

- 如何经营自己的独立人脉

aoyouzi

如何经营自己的独立人脉

独立人脉不是父母、亲戚的人脉,而是自己主动投入构造的人脉圈。“放长线,钓大鱼”,先行投入才能产生后续产出。 现在几乎做所有的事情都需要人脉。以银行柜员为例,需要拉储户,而其本质就是社会人脉,就是社交!很多人都说,人脉我不行,因为我爸不行、我妈不行、我姨不行、我舅不行……我谁谁谁都不行,怎么能建立人脉?我这里说的人脉,是你的独立人脉。 以一个普通的银行柜员

- JSP基础

百合不是茶

jsp注释隐式对象

1,JSP语句的声明

<%! 声明 %> 声明:这个就是提供java代码声明变量、方法等的场所。

表达式 <%= 表达式 %> 这个相当于赋值,可以在页面上显示表达式的结果,

程序代码段/小型指令 <% 程序代码片段 %>

2,JSP的注释

<!-- -->

- web.xml之session-config、mime-mapping

bijian1013

javaweb.xmlservletsession-configmime-mapping

session-config

1.定义:

<session-config>

<session-timeout>20</session-timeout>

</session-config>

2.作用:用于定义整个WEB站点session的有效期限,单位是分钟。

mime-mapping

1.定义:

<mime-m

- 互联网开放平台(1)

Bill_chen

互联网qq新浪微博百度腾讯

现在各互联网公司都推出了自己的开放平台供用户创造自己的应用,互联网的开放技术欣欣向荣,自己总结如下:

1.淘宝开放平台(TOP)

网址:http://open.taobao.com/

依赖淘宝强大的电子商务数据,将淘宝内部业务数据作为API开放出去,同时将外部ISV的应用引入进来。

目前TOP的三条主线:

TOP访问网站:open.taobao.com

ISV后台:my.open.ta

- 【MongoDB学习笔记九】MongoDB索引

bit1129

mongodb

索引

可以在任意列上建立索引

索引的构造和使用与传统关系型数据库几乎一样,适用于Oracle的索引优化技巧也适用于Mongodb

使用索引可以加快查询,但同时会降低修改,插入等的性能

内嵌文档照样可以建立使用索引

测试数据

var p1 = {

"name":"Jack",

"age&q

- JDBC常用API之外的总结

白糖_

jdbc

做JAVA的人玩JDBC肯定已经很熟练了,像DriverManager、Connection、ResultSet、Statement这些基本类大家肯定很常用啦,我不赘述那些诸如注册JDBC驱动、创建连接、获取数据集的API了,在这我介绍一些写框架时常用的API,大家共同学习吧。

ResultSetMetaData获取ResultSet对象的元数据信息

- apache VelocityEngine使用记录

bozch

VelocityEngine

VelocityEngine是一个模板引擎,能够基于模板生成指定的文件代码。

使用方法如下:

VelocityEngine engine = new VelocityEngine();// 定义模板引擎

Properties properties = new Properties();// 模板引擎属

- 编程之美-快速找出故障机器

bylijinnan

编程之美

package beautyOfCoding;

import java.util.Arrays;

public class TheLostID {

/*编程之美

假设一个机器仅存储一个标号为ID的记录,假设机器总量在10亿以下且ID是小于10亿的整数,假设每份数据保存两个备份,这样就有两个机器存储了同样的数据。

1.假设在某个时间得到一个数据文件ID的列表,是

- 关于Java中redirect与forward的区别

chenbowen00

javaservlet

在Servlet中两种实现:

forward方式:request.getRequestDispatcher(“/somePage.jsp”).forward(request, response);

redirect方式:response.sendRedirect(“/somePage.jsp”);

forward是服务器内部重定向,程序收到请求后重新定向到另一个程序,客户机并不知

- [信号与系统]人体最关键的两个信号节点

comsci

系统

如果把人体看做是一个带生物磁场的导体,那么这个导体有两个很重要的节点,第一个在头部,中医的名称叫做 百汇穴, 另外一个节点在腰部,中医的名称叫做 命门

如果要保护自己的脑部磁场不受到外界有害信号的攻击,最简单的

- oracle 存储过程执行权限

daizj

oracle存储过程权限执行者调用者

在数据库系统中存储过程是必不可少的利器,存储过程是预先编译好的为实现一个复杂功能的一段Sql语句集合。它的优点我就不多说了,说一下我碰到的问题吧。我在项目开发的过程中需要用存储过程来实现一个功能,其中涉及到判断一张表是否已经建立,没有建立就由存储过程来建立这张表。

CREATE OR REPLACE PROCEDURE TestProc

IS

fla

- 为mysql数据库建立索引

dengkane

mysql性能索引

前些时候,一位颇高级的程序员居然问我什么叫做索引,令我感到十分的惊奇,我想这绝不会是沧海一粟,因为有成千上万的开发者(可能大部分是使用MySQL的)都没有受过有关数据库的正规培训,尽管他们都为客户做过一些开发,但却对如何为数据库建立适当的索引所知较少,因此我起了写一篇相关文章的念头。 最普通的情况,是为出现在where子句的字段建一个索引。为方便讲述,我们先建立一个如下的表。

- 学习C语言常见误区 如何看懂一个程序 如何掌握一个程序以及几个小题目示例

dcj3sjt126com

c算法

如果看懂一个程序,分三步

1、流程

2、每个语句的功能

3、试数

如何学习一些小算法的程序

尝试自己去编程解决它,大部分人都自己无法解决

如果解决不了就看答案

关键是把答案看懂,这个是要花很大的精力,也是我们学习的重点

看懂之后尝试自己去修改程序,并且知道修改之后程序的不同输出结果的含义

照着答案去敲

调试错误

- centos6.3安装php5.4报错

dcj3sjt126com

centos6

报错内容如下:

Resolving Dependencies

--> Running transaction check

---> Package php54w.x86_64 0:5.4.38-1.w6 will be installed

--> Processing Dependency: php54w-common(x86-64) = 5.4.38-1.w6 for

- JSONP请求

flyer0126

jsonp

使用jsonp不能发起POST请求。

It is not possible to make a JSONP POST request.

JSONP works by creating a <script> tag that executes Javascript from a different domain; it is not pos

- Spring Security(03)——核心类简介

234390216

Authentication

核心类简介

目录

1.1 Authentication

1.2 SecurityContextHolder

1.3 AuthenticationManager和AuthenticationProvider

1.3.1 &nb

- 在CentOS上部署JAVA服务

java--hhf

javajdkcentosJava服务

本文将介绍如何在CentOS上运行Java Web服务,其中将包括如何搭建JAVA运行环境、如何开启端口号、如何使得服务在命令执行窗口关闭后依旧运行

第一步:卸载旧Linux自带的JDK

①查看本机JDK版本

java -version

结果如下

java version "1.6.0"

- oracle、sqlserver、mysql常用函数对比[to_char、to_number、to_date]

ldzyz007

oraclemysqlSQL Server

oracle &n

- 记Protocol Oriented Programming in Swift of WWDC 2015

ningandjin

protocolWWDC 2015Swift2.0

其实最先朋友让我就这个题目写篇文章的时候,我是拒绝的,因为觉得苹果就是在炒冷饭, 把已经流行了数十年的OOP中的“面向接口编程”还拿来讲,看完整个Session之后呢,虽然还是觉得在炒冷饭,但是毕竟还是加了蛋的,有些东西还是值得说说的。

通常谈到面向接口编程,其主要作用是把系统��设计和具体实现分离开,让系统的每个部分都可以在不影响别的部分的情况下,改变自身的具体实现。接口的设计就反映了系统

- 搭建 CentOS 6 服务器(15) - Keepalived、HAProxy、LVS

rensanning

keepalived

(一)Keepalived

(1)安装

# cd /usr/local/src

# wget http://www.keepalived.org/software/keepalived-1.2.15.tar.gz

# tar zxvf keepalived-1.2.15.tar.gz

# cd keepalived-1.2.15

# ./configure

# make &a

- ORACLE数据库SCN和时间的互相转换

tomcat_oracle

oraclesql

SCN(System Change Number 简称 SCN)是当Oracle数据库更新后,由DBMS自动维护去累积递增的一个数字,可以理解成ORACLE数据库的时间戳,从ORACLE 10G开始,提供了函数可以实现SCN和时间进行相互转换;

用途:在进行数据库的还原和利用数据库的闪回功能时,进行SCN和时间的转换就变的非常必要了;

操作方法: 1、通过dbms_f

- Spring MVC 方法注解拦截器

xp9802

spring mvc

应用场景,在方法级别对本次调用进行鉴权,如api接口中有个用户唯一标示accessToken,对于有accessToken的每次请求可以在方法加一个拦截器,获得本次请求的用户,存放到request或者session域。

python中,之前在python flask中可以使用装饰器来对方法进行预处理,进行权限处理

先看一个实例,使用@access_required拦截:

?