Deep Learning Day 1 (PyTorch Study Notes)

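Contents

- Getting started (2020.4.29)
- Setting up the torch environment
- Code from a competition
  - Loading the PyTorch dependencies
  - Loading the dataset and splitting it into training and test sets
  - Training
- Closing remarks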

Getting started (2020.4.29)

I like deep learning, and I like Python.

torch looks pretty good.

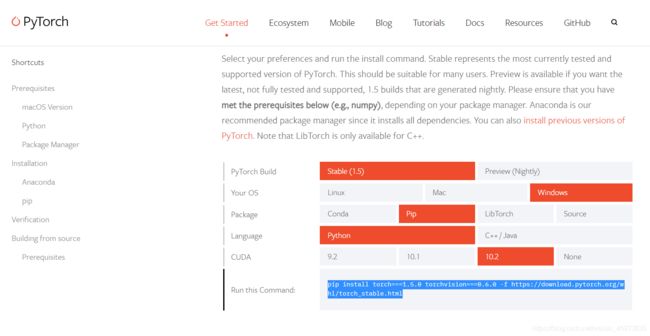

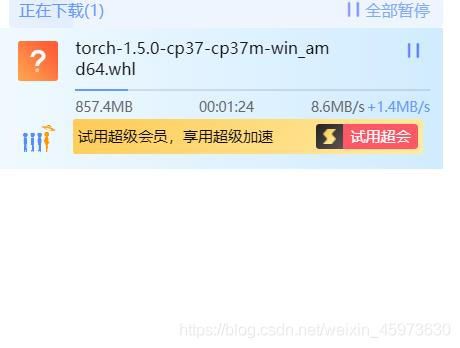

Setting up the torch environment

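```python
import torch

torch.cuda.is_available()
```

This should return True. (It only does so when an NVIDIA GPU and a CUDA-enabled build of PyTorch are installed; a CPU-only install returns False.)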
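A slightly fuller check, as a sketch: it prints the installed version and picks a `torch.device` that later code could pass to `.to(device)`. The variable name `device` is my own choice; the competition code below uses a `use_gpu` flag and `.cuda()` instead.

```python
import torch

# Pick the GPU if PyTorch can see one, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print("torch version:", torch.__version__)
print("using device:", device)

if device.type == "cuda":
    # Name of the first visible GPU, to confirm which card is being used
    print("GPU:", torch.cuda.get_device_name(0))

# Tensors (and models) are moved with .to(device)
x = torch.ones(2, 3).to(device)
print(x.device)
```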

Code from a competition

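If something doesn't make sense, just run it as ordinary code. I'm still a complete beginner myself; the code is copied, and it is the baseline code from a competition.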

Loading the PyTorch dependencies

```python
from __future__ import print_function, division
import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
from torch.autograd import Variable
import torchvision
from torchvision import datasets, models, transforms
import time
import os
```

Loading the dataset and splitting it into training and test sets

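```python
dataTrans = transforms.Compose([
    transforms.Resize(256),        # scale the shorter side to 256 px, keeping the aspect ratio
    transforms.CenterCrop(224),    # cut out the central 224x224 patch (ResNet input size)
    transforms.ToTensor(),         # PIL image -> float tensor in [0, 1], shape C x H x W
    transforms.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5])  # (x - 0.5) / 0.5 per channel
])
```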
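`ToTensor()` scales 8-bit pixel values to [0, 1], and `Normalize(mean, std)` then computes `(x - mean) / std` for each channel, so with mean 0.5 and std 0.5 every channel ends up in [-1, 1]. The arithmetic for a single channel value, sketched in plain Python (`normalize` is just an illustrative helper):

```python
def normalize(x, mean=0.5, std=0.5):
    """Same per-channel formula as transforms.Normalize: (x - mean) / std."""
    return (x - mean) / std

# ToTensor() maps 8-bit pixels 0..255 to 0.0..1.0
for pixel in (0, 128, 255):
    x = pixel / 255
    print(pixel, round(x, 3), round(normalize(x), 3))
```

The torchvision pretrained models were trained with the ImageNet statistics (mean `[0.485, 0.456, 0.406]`, std `[0.229, 0.224, 0.225]`). The baseline's 0.5/0.5 is a simpler choice; the ImageNet values are the usual pairing with `pretrained=True` and may be worth trying.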

```python
# ImageFolder expects one sub-folder per class, e.g. ./images/<class_name>/xxx.jpg
data_dir = './images'
all_image_datasets = datasets.ImageFolder(data_dir, dataTrans)

# 80% of the images for training, the remaining 20% for validation
trainsize = int(0.8 * len(all_image_datasets))
testsize = len(all_image_datasets) - trainsize
train_dataset, test_dataset = torch.utils.data.random_split(all_image_datasets, [trainsize, testsize])

image_datasets = {'train': train_dataset, 'val': test_dataset}
dataloders = {x: torch.utils.data.DataLoader(image_datasets[x],
                                             batch_size=16,
                                             shuffle=True,
                                             num_workers=4)
              for x in ['train', 'val']}
dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}

# use gpu or not
use_gpu = torch.cuda.is_available()
```
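`random_split` assigns every sample to exactly one subset at random. The idea can be sketched in plain Python: shuffle the indices, then cut the list at `trainsize`. This is an illustration with a hypothetical helper `split_indices`, not PyTorch's actual implementation. Newer PyTorch versions also let you pass `generator=torch.Generator().manual_seed(0)` to `random_split` so that the split is the same on every run.

```python
import random

def split_indices(n, train_frac=0.8, seed=0):
    """Hypothetical helper: shuffle 0..n-1 and cut it into train/val index lists."""
    trainsize = int(train_frac * n)        # same rounding as the code above
    indices = list(range(n))
    random.Random(seed).shuffle(indices)   # seeded, so the split is reproducible
    return indices[:trainsize], indices[trainsize:]

train_idx, val_idx = split_indices(1001)
print(len(train_idx), len(val_idx))
```

Because `int()` truncates, the validation set absorbs the rounding remainder (1001 samples give 800 for training and 201 for validation).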

Training

```python
import copy

def train_model(model, lossfunc, optimizer, scheduler, num_epochs=5):
    start_time = time.time()
    # state_dict() returns references to the live weights, so keep a real copy
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'val']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluate mode

            running_loss = 0.0
            running_corrects = 0

            # Iterate over data.
            for inputs, labels in dataloders[phase]:
                # Variable is no longer needed since PyTorch 0.4; plain tensors work
                if use_gpu:
                    inputs = inputs.cuda()
                    labels = labels.cuda()

                # zero the parameter gradients
                optimizer.zero_grad()

                # forward; track gradients only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    _, preds = torch.max(outputs, 1)
                    loss = lossfunc(outputs, labels)

                    # backward + optimize only if in training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # statistics: loss is the batch mean, so weight it by the batch size
                running_loss += loss.item() * inputs.size(0)
                running_corrects += torch.sum(preds == labels).item()

            if phase == 'train':
                # Since PyTorch 1.1, scheduler.step() must come after optimizer.step()
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            epoch_acc = running_corrects / dataset_sizes[phase]

            print('{} Loss: {:.4f} Acc: {:.4f}'.format(
                phase, epoch_loss, epoch_acc))

            # deep copy the model
            if phase == 'val' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

    elapsed_time = time.time() - start_time
    print('Training complete in {:.0f}m {:.0f}s'.format(
        elapsed_time // 60, elapsed_time % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # load best model weights
    model.load_state_dict(best_model_wts)
    return model
```
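`nn.CrossEntropyLoss` averages over the batch by default (`reduction='mean'`), so each `loss` is a per-batch mean. To report a per-sample average for a whole epoch, each batch mean has to be weighted by its batch size before dividing by the number of samples; summing the raw batch means and dividing by the dataset size gives a value roughly `batch_size` times too small. A plain-Python sketch with made-up batch losses (`epoch_mean_loss` is an illustrative helper):

```python
def epoch_mean_loss(batch_means, batch_sizes):
    """Per-sample average over an epoch, built from per-batch mean losses."""
    total = sum(m * n for m, n in zip(batch_means, batch_sizes))
    return total / sum(batch_sizes)

# Made-up numbers: three batches of 16 and a last, smaller batch of 4
batch_means = [0.50, 0.40, 0.30, 0.20]
batch_sizes = [16, 16, 16, 4]

weighted = epoch_mean_loss(batch_means, batch_sizes)
unweighted = sum(batch_means) / sum(batch_sizes)  # batch means summed, divided by #samples
print(round(weighted, 4), round(unweighted, 4))
```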

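```python
# get model and replace the original fc layer with your fc layer
# (torchvision >= 0.13 deprecates pretrained=True; the equivalent is
#  weights=models.ResNet50_Weights.IMAGENET1K_V1)
model_ft = models.resnet50(pretrained=True)
num_ftrs = model_ft.fc.in_features
# new classifier head with 10 outputs (one per class)
model_ft.fc = nn.Linear(num_ftrs, 10)

if use_gpu:
    model_ft = model_ft.cuda()
```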
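The baseline passes only `model_ft.fc.parameters()` to the optimizer, so only the new layer is updated, but autograd still computes gradients for the whole ResNet. Setting `requires_grad = False` on the pretrained layers skips that work. The sketch below uses a tiny `nn.Sequential` as a hypothetical stand-in so it runs without downloading ResNet-50; with the real model you would loop over `model_ft.parameters()` and set `requires_grad = False` before the line that replaces `model_ft.fc`.

```python
import torch
import torch.nn as nn

# Tiny stand-in for a pretrained network (hypothetical, for illustration only)
model = nn.Sequential(nn.Linear(8, 4), nn.ReLU())
for p in model.parameters():
    p.requires_grad = False  # freeze everything that already exists

# Layers created afterwards are trainable by default (requires_grad=True)
model.add_module("fc", nn.Linear(4, 10))

trainable = [p for p in model.parameters() if p.requires_grad]
print(sum(p.numel() for p in trainable))  # only the new head's weights and bias

# One backward pass: only the new head receives gradients
out = model(torch.randn(2, 8))
out.sum().backward()
print(model[0].weight.grad is None, model.fc.weight.grad is not None)
```

Note that BatchNorm layers still update their running statistics in `model.train()` mode even when their parameters are frozen.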

```python
# define loss function
lossfunc = nn.CrossEntropyLoss()
```
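For one sample, cross-entropy combines log-softmax and negative log-likelihood: the loss is `-log(softmax(logits)[target])`. A plain-Python version for a single sample (`cross_entropy` is an illustrative helper; `nn.CrossEntropyLoss` works on batches and averages them):

```python
import math

def cross_entropy(logits, target):
    """-log softmax(logits)[target], computed stably via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

print(cross_entropy([2.0, 1.0, 0.1], 0))  # smaller: class 0 has the largest logit
print(cross_entropy([2.0, 1.0, 0.1], 2))  # larger: class 2 has the smallest logit
```

With 10 classes, a model whose logits are all equal gets a loss of log(10) ≈ 2.30, a useful reference point when reading a training log.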

```python
# setting optimizer and trainable parameters
# params = model_ft.parameters()
# list(model_ft.fc.parameters())+list(model_ft.layer4.parameters())
#params = list(model_ft.fc.parameters())+list( model_ft.parameters())
params = list(model_ft.fc.parameters())
optimizer_ft = optim.SGD(params, lr=0.001, momentum=0.9)
```
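`optim.SGD` with `momentum=0.9` keeps a velocity buffer per parameter. With the default `dampening=0` and no weight decay or Nesterov, PyTorch's update is `v = momentum * v + grad` followed by `p = p - lr * v`. A scalar sketch of that rule (`sgd_momentum` is a hypothetical helper, fed a constant gradient of 1.0):

```python
def sgd_momentum(p, grads, lr=0.001, momentum=0.9):
    """Scalar version of PyTorch's SGD momentum rule (dampening=0, no weight decay)."""
    v = 0.0
    history = []
    for g in grads:
        v = momentum * v + g  # velocity accumulates past gradients
        p = p - lr * v
        history.append(p)
    return history

# With a constant gradient the step size grows towards lr / (1 - momentum) = 10 * lr
steps = sgd_momentum(1.0, [1.0] * 3, lr=0.1)
print(steps)
```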

```python
# Decay LR by a factor of 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
```
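When `scheduler.step()` is called once per epoch, `StepLR(step_size=7, gamma=0.1)` gives `lr = base_lr * gamma ** (epoch // step_size)`. Since the training call that follows uses `num_epochs=5`, the decay never takes effect in this run. A plain-Python sketch of the schedule (`step_lr` is an illustrative helper):

```python
def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate StepLR gives at a given epoch when stepped once per epoch."""
    return base_lr * gamma ** (epoch // step_size)

for epoch in range(16):
    print(epoch, step_lr(0.001, epoch))
```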

```python
model_ft = train_model(model=model_ft,
                       lossfunc=lossfunc,
                       optimizer=optimizer_ft,
                       scheduler=exp_lr_scheduler,
                       num_epochs=5)
```

```text
Epoch 0/4
----------
C:\Users\16413\anaconda3\lib\site-packages\torch\optim\lr_scheduler.py:123: UserWarning: Detected call of `lr_scheduler.step()` before `optimizer.step()`. In PyTorch 1.1.0 and later, you should call them in the opposite order: `optimizer.step()` before `lr_scheduler.step()`. Failure to do this will result in PyTorch skipping the first value of the learning rate schedule. See more details at https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate
"https://pytorch.org/docs/stable/optim.html#how-to-adjust-learning-rate", UserWarning)
train Loss: 0.0750 Acc: 0.6700
val Loss: 0.0436 Acc: 0.8200
Epoch 1/4
----------
train Loss: 0.0399 Acc: 0.8250
val Loss: 0.0345 Acc: 0.8470
Epoch 2/4
----------
train Loss: 0.0330 Acc: 0.8473
val Loss: 0.0303 Acc: 0.8610
Epoch 3/4
----------
train Loss: 0.0300 Acc: 0.8575
val Loss: 0.0293 Acc: 0.8650
Epoch 4/4
----------
train Loss: 0.0288 Acc: 0.8643
val Loss: 0.0281 Acc: 0.8750
Training complete in 6m 31s
Best val Acc: 0.875000
```

```python
torch.save(model_ft.state_dict(), './model.pth')
print("done")
```

```text
done
```

Closing remarks

Loving what you do is a good thing.

Readers can look up deep learning, neural networks, ResNet and the rest of these concepts on Baidu or Zhihu.

Tomorrow: tackling the official PyTorch documentation.

This is my first blog post, so please go easy on me.