365-Day Deep Learning Training Camp, Week P6: Hollywood Celebrity Recognition

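Contents

I. Preface

II. My Environment

III. Code Implementation

1. Set Up the GPU

2. Import the Data

3. Split the Dataset

4. Call the VGG16 Model

5. Training Function

6. Test Function

7. Set a Dynamic Learning Rate

8. Start Training

9. Data Visualization

10. Model Evaluation

IV. Going Further

1. Build the VGG-16 Model by Hand

2. Train with a Simple CNN

3. Train with MobileNetV2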

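I. Preface

>- **This post is a learning log for the [365-Day Deep Learning Training Camp](https://mp.weixin.qq.com/s/xLjALoOD8HPZcH563En8bQ)**

>- **Reference article: 365-Day Deep Learning Training Camp, Week P6: Hollywood Celebrity Recognition (readable by camp members only)**

>- **Original author: [K同学啊 | tutoring and custom projects available](https://mtyjkh.blog.csdn.net/)**

● Difficulty: solidifying the fundamentals ⭐⭐

● Language: Python3, PyTorch

● Dates: December 23 to December 28

Requirements:

Build the VGG-16 network yourself

Call the official VGG-16 network

Learn how to inspect a model's parameter count and related metrics

Stretch goals (optional):

Reach 60% accuracy on the test set (fairly hard, but the process teaches a lot)

Build the VGG-16 network by hand

II. My Environment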

Language: Python 3.7

Editor: Jupyter Notebook

Deep learning framework: PyTorch

III. Code Implementation

1. Set Up the GPU

    import os, PIL, pathlib

    import torch
    import torch.nn as nn
    import torchvision
    from torchvision import transforms, datasets

    # Use the GPU when one is available, otherwise fall back to the CPU
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    device

2. Import the Data

    # Import the data
    import os, PIL, random, pathlib

    data_dir = './6-data/'
    data_dir = pathlib.Path(data_dir)

    # Each sub-folder of data_dir holds the images of one class
    data_paths = sorted(data_dir.glob('*'))
    # path.name works on every OS, unlike splitting the path string on a backslash
    classeNames = [path.name for path in data_paths]
    classeNames

    # More on transforms.Compose: https://blog.csdn.net/qq_38251616/article/details/124878863
    train_transforms = transforms.Compose([
        transforms.Resize([224, 224]),  # resize every input image to the same size
        # transforms.RandomHorizontalFlip(),  # random horizontal flip
        transforms.ToTensor(),  # convert a PIL Image or numpy.ndarray to a tensor scaled to [0, 1]
        transforms.Normalize(  # standardize each channel so the model converges more easily
            mean=[0.485, 0.456, 0.406],
            std=[0.229, 0.224, 0.225])  # per-channel mean and std commonly used with ImageNet-pretrained models
    ])

    total_data = datasets.ImageFolder("./6-data/", transform=train_transforms)
    total_data

    total_data.class_to_idx

3. Split the Dataset

    # Use 80% of the images for training and the rest for testing
    train_size = int(0.8 * len(total_data))
    test_size = len(total_data) - train_size
    train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
    train_dataset, test_dataset

    batch_size = 32

    train_dl = torch.utils.data.DataLoader(train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True,
                                           num_workers=1)
    test_dl = torch.utils.data.DataLoader(test_dataset,
                                          batch_size=batch_size,
                                          shuffle=True,
                                          num_workers=1)

    # Check the shape of a single batch
    for X, y in test_dl:
        print("Shape of X [N, C, H, W]: ", X.shape)
        print("Shape of y: ", y.shape, y.dtype)
        break
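As a quick sanity check on the split above, the subset sizes and batch counts can be worked out by hand. The sketch below assumes a hypothetical dataset of 1,800 images (the real number comes from `len(total_data)`); `split_sizes` and `num_batches` are illustrative helper names, not part of the original code.

```python
import math

def split_sizes(total, train_fraction=0.8):
    """Mirror the int(0.8 * len(total_data)) split: the remainder goes to the test set."""
    train_size = int(train_fraction * total)
    return train_size, total - train_size

def num_batches(num_samples, batch_size=32):
    """A DataLoader with the default drop_last=False yields ceil(n / batch_size) batches."""
    return math.ceil(num_samples / batch_size)

total = 1800  # hypothetical dataset size, for illustration only
train_size, test_size = split_sizes(total)
print("train/test sizes:", train_size, test_size)
print("train/test batches:", num_batches(train_size), num_batches(test_size))
```

The last batch of a subset is smaller than `batch_size` whenever the subset size is not a multiple of 32, which is why the per-epoch accuracy later divides by the dataset size rather than by `num_batches * batch_size`.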

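4. Call the VGG16 Model

    from torchvision.models import vgg16

    device = "cuda" if torch.cuda.is_available() else "cpu"
    print("Using {} device".format(device))

    # Load the pretrained model and fine-tune it
    # (newer torchvision releases replace pretrained=True with the weights= argument)
    model = vgg16(pretrained=True).to(device)  # load VGG16 with pretrained weights

    for param in model.parameters():
        param.requires_grad = False  # freeze the pretrained parameters so that only the new last layer is trained

    # Replace layer 6 of the classifier module, which is originally
    # (6): Linear(in_features=4096, out_features=1000, bias=True).
    # Compare with the model printed below.
    model.classifier[6] = nn.Linear(4096, len(classeNames))  # new final fully connected layer, one output per class

    model.to(device)
    model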
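One of the requirements in the preface is to know how to inspect a model's parameter count. On a real model, `sum(p.numel() for p in model.parameters())` gives the total, and filtering on `p.requires_grad` gives the trainable part. The sketch below reproduces those numbers for VGG-16 with plain arithmetic, so it runs without PyTorch; the helper names are illustrative, and 17 is the class count used later in this post.

```python
# (in_channels, out_channels) of the 13 convolutional layers in VGG-16, all 3x3
CONV_CHANNELS = [(3, 64), (64, 64),
                 (64, 128), (128, 128),
                 (128, 256), (256, 256), (256, 256),
                 (256, 512), (512, 512), (512, 512),
                 (512, 512), (512, 512), (512, 512)]

def conv_params(c_in, c_out, k=3):
    """Weights plus biases of a k x k convolution."""
    return k * k * c_in * c_out + c_out

def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def vgg16_param_counts(num_classes):
    features = sum(conv_params(i, o) for i, o in CONV_CHANNELS)
    head = linear_params(4096, num_classes)  # the only layer left trainable after freezing
    classifier = linear_params(512 * 7 * 7, 4096) + linear_params(4096, 4096) + head
    return {"total": features + classifier, "trainable": head}

print("ImageNet head (1000 classes):", vgg16_param_counts(1000))
print("Fine-tuning head (17 classes):", vgg16_param_counts(17))
```

With the backbone frozen, only the replaced last layer contributes trainable parameters, a tiny fraction of the whole network.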

5. Training Function

    # Training loop for one epoch
    def train(dataloader, model, loss_fn, optimizer):
        size = len(dataloader.dataset)  # number of training samples
        num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)

        train_loss, train_acc = 0, 0  # running loss and running count of correct predictions

        for X, y in dataloader:  # fetch a batch of images and labels
            X, y = X.to(device), y.to(device)

            # Compute the prediction error
            pred = model(X)          # network output
            loss = loss_fn(pred, y)  # loss between the network output and the true labels y

            # Backpropagation
            optimizer.zero_grad()  # reset the gradients
            loss.backward()        # backpropagate
            optimizer.step()       # update the parameters

            # Accumulate accuracy and loss
            train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()

        train_acc /= size
        train_loss /= num_batches

        return train_acc, train_loss

6. Test Function

    # Test function
    def test(dataloader, model, loss_fn):
        size = len(dataloader.dataset)  # number of test samples
        num_batches = len(dataloader)   # number of batches (size / batch_size, rounded up)
        test_loss, test_acc = 0, 0

        # No training happens here, so disable gradient tracking to save memory
        with torch.no_grad():
            for imgs, target in dataloader:
                imgs, target = imgs.to(device), target.to(device)

                # Compute the loss
                target_pred = model(imgs)
                loss = loss_fn(target_pred, target)

                test_loss += loss.item()
                test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

        test_acc /= size
        test_loss /= num_batches

        return test_acc, test_loss

7. Set a Dynamic Learning Rate

# 设置动态学习率

def adjust_learning_rate(optimizer, epoch, start_lr):

# 每 2 个epoch衰减到原来的 0.98

lr = start_lr * (0.92 ** (epoch // 2))

for param_group in optimizer.param_groups:

param_group['lr'] = lr

learn_rate = 1e-4 # 初始学习率

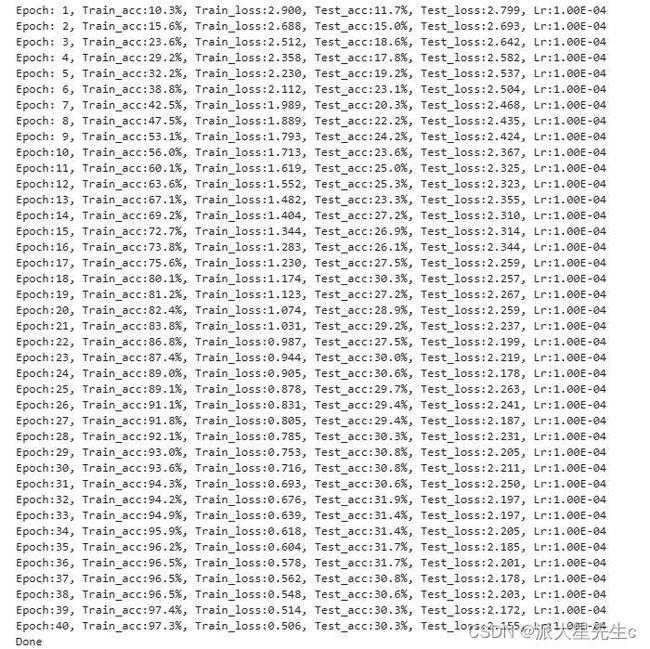

optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)8、开始训练

    # Start training
    import copy

    loss_fn = nn.CrossEntropyLoss()  # loss function
    epochs = 40

    train_loss = []
    train_acc = []
    test_loss = []
    test_acc = []

    best_acc = -1.0  # best test accuracy seen so far

    for epoch in range(epochs):
        # Update the learning rate (when using the custom schedule)
        adjust_learning_rate(optimizer, epoch, learn_rate)

        model.train()
        epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
        # scheduler.step()  # update the learning rate (when using an official PyTorch scheduler instead)

        model.eval()
        epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

        # Keep a copy of the model with the best test accuracy so far
        if epoch_test_acc > best_acc:
            best_acc = epoch_test_acc
            best_model = copy.deepcopy(model)

        train_acc.append(epoch_train_acc)
        train_loss.append(epoch_train_loss)
        test_acc.append(epoch_test_acc)
        test_loss.append(epoch_test_loss)

        # Read back the current learning rate
        lr = optimizer.state_dict()['param_groups'][0]['lr']

        template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}')
        print(template.format(epoch + 1, epoch_train_acc * 100, epoch_train_loss,
                              epoch_test_acc * 100, epoch_test_loss, lr))

    # Save the best model to a file
    PATH = './best_model.pth'  # file name for the saved parameters
    torch.save(best_model.state_dict(), PATH)

    print('Done')

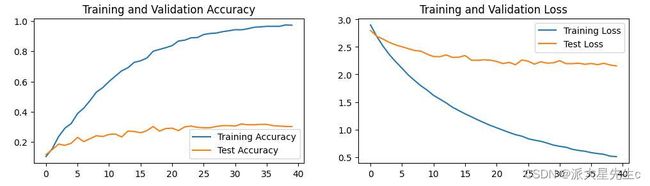
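The custom schedule used in the loop multiplies the initial learning rate by 0.92 once every two epochs. A minimal sketch of that rule as a standalone function is given here; `decay_factor` is an illustrative name, not part of the original code. A function of this shape (epoch in, multiplier out) is also what `torch.optim.lr_scheduler.LambdaLR` accepts as `lr_lambda`, which is one way the commented-out `scheduler.step()` call could be backed.

```python
def decay_factor(epoch, gamma=0.92, step=2):
    """Multiplier applied to the initial learning rate at a given (0-based) epoch."""
    return gamma ** (epoch // step)

start_lr = 1e-4  # same initial learning rate as in the post

# Print the learning rate at a few epochs of the 40-epoch run
for epoch in (0, 1, 2, 3, 10, 39):
    print(f"epoch {epoch:2d}: lr = {start_lr * decay_factor(epoch):.2E}")
```

Epochs 0 and 1 share the initial rate, epochs 2 and 3 share the first decayed rate, and so on; by the last epoch the rate has been multiplied by 0.92 nineteen times.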

9. Data Visualization

    # Data visualization
    import matplotlib.pyplot as plt
    # Hide warnings
    import warnings
    warnings.filterwarnings("ignore")  # ignore warning messages

    plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese labels
    plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly
    plt.rcParams['figure.dpi'] = 100              # figure resolution

    epochs_range = range(epochs)

    plt.figure(figsize=(12, 3))
    plt.subplot(1, 2, 1)

    plt.plot(epochs_range, train_acc, label='Training Accuracy')
    plt.plot(epochs_range, test_acc, label='Test Accuracy')
    plt.legend(loc='lower right')
    plt.title('Training and Test Accuracy')

    plt.subplot(1, 2, 2)
    plt.plot(epochs_range, train_loss, label='Training Loss')
    plt.plot(epochs_range, test_loss, label='Test Loss')
    plt.legend(loc='upper right')
    plt.title('Training and Test Loss')
    plt.show()

10. Model Evaluation

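    # Model evaluation: reload the saved weights and evaluate them on the test set
    model.load_state_dict(torch.load(PATH, map_location=device))
    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)
    epoch_test_acc, epoch_test_loss

IV. Going Further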

1. Build the VGG-16 Model by Hand

    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            # Feature extractor; shape comments are channels*height*width for a 224x224 input
            self.sequ1 = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1),     # 64*224*224
                nn.ReLU(inplace=True),
                nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1),    # 64*224*224
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=2, stride=2),                    # 64*112*112

                nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1),   # 128*112*112
                nn.ReLU(inplace=True),
                nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1),  # 128*112*112
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=2, stride=2),                    # 128*56*56

                nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1),  # 256*56*56
                nn.ReLU(inplace=True),
                nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # 256*56*56
                nn.ReLU(inplace=True),
                nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1),  # 256*56*56
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=2, stride=2),                    # 256*28*28

                nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1),  # 512*28*28
                nn.ReLU(inplace=True),
                nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # 512*28*28
                nn.ReLU(inplace=True),
                nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # 512*28*28
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=2, stride=2),                    # 512*14*14

                nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # 512*14*14
                nn.ReLU(inplace=True),
                nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # 512*14*14
                nn.ReLU(inplace=True),
                nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1),  # 512*14*14
                nn.ReLU(inplace=True),
                nn.MaxPool2d(kernel_size=2, stride=2)                     # 512*7*7
            )
            self.pool2 = nn.AdaptiveAvgPool2d(output_size=(7, 7))         # 512*7*7
            # Classifier; 25088 = 512*7*7, and 17 is the number of classes
            self.sequ3 = nn.Sequential(
                nn.Linear(in_features=25088, out_features=4096, bias=True),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5, inplace=False),
                nn.Linear(in_features=4096, out_features=4096, bias=True),
                nn.ReLU(inplace=True),
                nn.Dropout(p=0.5, inplace=False),
                nn.Linear(in_features=4096, out_features=17, bias=True)
            )

        def forward(self, x):
            x = self.sequ1(x)
            x = self.pool2(x)
            x = torch.flatten(x, 1)  # [N, 512, 7, 7] -> [N, 25088], required by the first Linear layer
            x = self.sequ3(x)
            return x
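The shape comments in the hand-built model follow from the standard output-size formula, floor((n + 2p - k) / s) + 1. The sketch below traces a 224x224 input through the five stages with plain arithmetic, assuming the layer settings listed above; the helper names are illustrative. It shows where the 25088 input features of the first `nn.Linear` come from, and why the feature map has to be flattened into a vector of that length before the classifier.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output width/height of a convolution: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel=2, stride=2):
    """Output width/height of an unpadded max pooling layer."""
    return (size - kernel) // stride + 1

# (number of 3x3 convolutions, output channels) for each of the five VGG-16 stages
STAGES = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def trace_vgg16(size=224):
    """Return the (channels, height, width) shape after each stage's pooling layer."""
    shapes = []
    for n_convs, channels in STAGES:
        for _ in range(n_convs):
            size = conv_out(size)   # 3x3, stride 1, padding 1 keeps the size
        size = pool_out(size)       # 2x2, stride 2 halves the size
        shapes.append((channels, size, size))
    return shapes

shapes = trace_vgg16()
print(shapes)
channels, height, width = shapes[-1]
print("flattened features:", channels * height * width)
```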

2. Train with a Simple CNN


3. Train with MobileNetV2

    import torch
    import torch.nn as nn
    import torchvision

    # Number of classes
    num_class = 17

    # Depthwise (DW) convolution when groups == in_channels
    def Conv3x3BNReLU(in_channels, out_channels, stride, groups):
        return nn.Sequential(
            # stride=2 halves width and height, stride=1 keeps them unchanged
            nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=3, stride=stride, padding=1, groups=groups),
            nn.BatchNorm2d(out_channels),
            nn.ReLU6(inplace=True)
        )

    # Pointwise (PW) convolution
    def Conv1x1BNReLU(in_channels, out_channels):
        return nn.Sequential(
            nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1),
            nn.BatchNorm2d(out_channels),
            nn.ReLU6(inplace=True)
        )

    # Pointwise (PW) convolution, linear variant: no activation function
    def Conv1x1BN(in_channels, out_channels):
        return nn.Sequential(
            nn.Conv2d(in_channels=in_channels, out_channels=out_channels, kernel_size=1, stride=1),
            nn.BatchNorm2d(out_channels)
        )

    class InvertedResidual(nn.Module):
        # t = expansion_factor; the paper uses 6
        def __init__(self, in_channels, out_channels, expansion_factor, stride):
            super(InvertedResidual, self).__init__()
            self.stride = stride
            self.in_channels = in_channels
            self.out_channels = out_channels
            mid_channels = (in_channels * expansion_factor)
            # print("expansion_factor:", expansion_factor)
            # print("mid_channels:", mid_channels)

            # Expand with a 1x1 convolution, filter with a 3x3 depthwise convolution,
            # then project back down with a linear 1x1 convolution
            self.bottleneck = nn.Sequential(
                # Expansion: widen to in_channels * expansion_factor (6x)
                Conv1x1BNReLU(in_channels, mid_channels),
                # Depthwise convolution, which keeps the parameter count low
                Conv3x3BNReLU(mid_channels, mid_channels, stride, groups=mid_channels),
                # Projection: reduce in_channels * expansion_factor (6x) to out_channels
                Conv1x1BN(mid_channels, out_channels)
            )

            # Option 1: a shortcut whenever stride == 1; the 1x1 convolution makes mismatched channel counts equal
            if self.stride == 1:
                self.shortcut = Conv1x1BN(in_channels, out_channels)

            # Option 2: a shortcut only when stride == 1 and in_channels == out_channels
            # if self.stride == 1 and in_channels == out_channels:
            #     self.shortcut = ()

        def forward(self, x):
            out = self.bottleneck(x)
            # Option 1:
            out = (out + self.shortcut(x)) if self.stride == 1 else out
            # Option 2:
            # out = (out + x) if self.stride == 1 and self.in_channels == self.out_channels else out
            return out

    class MobileNetV2(nn.Module):
        # num_classes is the number of classes, t is the expansion factor
        def __init__(self, num_classes=num_class, t=6):
            super(MobileNetV2, self).__init__()

            # 3 -> 32, groups=1: an ordinary convolution, not a grouped one
            self.first_conv = Conv3x3BNReLU(3, 32, 2, groups=1)

            # 32 -> 16, stride=1: width and height unchanged
            self.layer1 = self.make_layer(in_channels=32, out_channels=16, stride=1, factor=1, block_num=1)
            # 16 -> 24, stride=2: width and height halved
            self.layer2 = self.make_layer(in_channels=16, out_channels=24, stride=2, factor=t, block_num=2)
            # 24 -> 32, stride=2: width and height halved
            self.layer3 = self.make_layer(in_channels=24, out_channels=32, stride=2, factor=t, block_num=3)
            # 32 -> 64, stride=2: width and height halved
            self.layer4 = self.make_layer(in_channels=32, out_channels=64, stride=2, factor=t, block_num=4)
            # 64 -> 96, stride=1: width and height unchanged
            self.layer5 = self.make_layer(in_channels=64, out_channels=96, stride=1, factor=t, block_num=3)
            # 96 -> 160, stride=2: width and height halved
            self.layer6 = self.make_layer(in_channels=96, out_channels=160, stride=2, factor=t, block_num=3)
            # 160 -> 320, stride=1: width and height unchanged
            self.layer7 = self.make_layer(in_channels=160, out_channels=320, stride=1, factor=t, block_num=1)

            # 320 -> 1280: a plain channel expansion
            self.last_conv = Conv1x1BNReLU(320, 1280)
            self.avgpool = nn.AvgPool2d(kernel_size=7, stride=1)
            self.dropout = nn.Dropout(p=0.2)
            self.linear = nn.Linear(in_features=1280, out_features=num_classes)
            self.init_params()

        def make_layer(self, in_channels, out_channels, stride, factor, block_num):
            layers = []
            # As in ResNet, the first bottleneck of a layer takes the given stride; all others use stride 1
            layers.append(InvertedResidual(in_channels, out_channels, factor, stride))
            # The remaining block_num - 1 blocks use stride 1 and in_channels == out_channels
            for i in range(1, block_num):
                layers.append(InvertedResidual(out_channels, out_channels, factor, 1))
            return nn.Sequential(*layers)

        # Weight initialization
        def init_params(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight)
                    nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.BatchNorm2d):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)
                elif isinstance(m, nn.Linear):
                    # Small random weights; setting them all to 1 would give every class the same initial output
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.constant_(m.bias, 0)

        def forward(self, x):
            x = self.first_conv(x)     # torch.Size([1, 32, 112, 112])
            x = self.layer1(x)         # torch.Size([1, 16, 112, 112])
            x = self.layer2(x)         # torch.Size([1, 24, 56, 56])
            x = self.layer3(x)         # torch.Size([1, 32, 28, 28])
            x = self.layer4(x)         # torch.Size([1, 64, 14, 14])
            x = self.layer5(x)         # torch.Size([1, 96, 14, 14])
            x = self.layer6(x)         # torch.Size([1, 160, 7, 7])
            x = self.layer7(x)         # torch.Size([1, 320, 7, 7])
            x = self.last_conv(x)      # torch.Size([1, 1280, 7, 7])
            x = self.avgpool(x)        # torch.Size([1, 1280, 1, 1])
            x = x.view(x.size(0), -1)  # torch.Size([1, 1280])
            x = self.dropout(x)
            x = self.linear(x)         # torch.Size([1, num_classes])
            return x

    device = "cuda" if torch.cuda.is_available() else "cpu"
    print("Using {} device".format(device))

    model = MobileNetV2().to(device)
    model

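Although MobileNetV2 is the newer architecture, it did not improve accuracy in my runs, so treat this section as a reference only.

MobileNetV2 was proposed by a Google team in 2018. Compared with MobileNetV1, it is more accurate and smaller.

Key ideas of the network:

- Inverted Residuals (the inverted residual structure)

- Linear Bottlenecks (the last layer of each block is linear, with no activation function)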
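Part of the reason MobileNet models are small is the depthwise (DW) plus pointwise (PW) pair used in the code above in place of a standard convolution. The sketch below compares weight counts for the two designs, ignoring biases and BatchNorm; the channel counts are hypothetical values chosen for illustration, and the function names are not from the original code.

```python
def standard_conv_weights(c_in, c_out, k=3):
    """Weights of an ordinary k x k convolution: every output channel sees every input channel."""
    return k * k * c_in * c_out

def depthwise_separable_weights(c_in, c_out, k=3):
    """Weights of a depthwise k x k convolution followed by a pointwise 1x1 convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel (groups == c_in)
    pointwise = c_in * c_out   # 1x1 convolution that mixes the channels
    return depthwise + pointwise

c_in, c_out = 192, 192  # hypothetical channel counts, for illustration only
std = standard_conv_weights(c_in, c_out)
dws = depthwise_separable_weights(c_in, c_out)
print("standard:", std)
print("depthwise separable:", dws)
print("reduction factor:", round(std / dws, 2))
```

The saving grows with the number of output channels, since the ratio works out to roughly 1 / (1/c_out + 1/k²).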