OpenCV from Beginner to Expert: Corner and Feature Point Extraction and Matching Algorithms

Harris corners

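- A corner can be the point where two edges meet.

- A corner is a feature point whose neighborhood has two dominant directions.

- A corner is usually defined as the intersection of two edges. More strictly, the local neighborhood of a corner should contain the boundaries of two different regions running in different directions. Put another way, a corner is the intersection of several contour lines.

- Around a corner, the pixels change sharply in both gradient direction and gradient magnitude.

- A corner is a pixel at a local maximum of the first derivative (the gradient of the gray level).

- A corner is the intersection of two or more edges.

- A corner is a point where the gradient magnitude and the gradient direction both change rapidly.

- At a corner the first derivative is at its maximum and the second derivative is zero, which marks the direction in which the object's edge changes discontinuously.

- Shifting a corner slightly in any direction causes a large change in both the direction and the magnitude of the gradient map in that region.

- Harris corners are rotation invariant, but not scale invariant.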

Algorithm steps

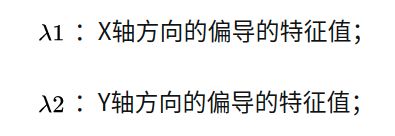

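- Compute the image gradients in the x and y directions and build the matrix M from them.

- Compute the eigenvalues, determinant, and trace of M.

- Classify each pixel from the relationship between the eigenvalues, using a threshold: two large eigenvalues indicate a corner, one large and one small eigenvalue an edge, and two small eigenvalues a flat region.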
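The steps above can be sketched in plain NumPy. This is only an illustration, not what `cv2.cornerHarris` does internally: it assumes central-difference gradients (`np.gradient`) in place of Sobel, an unweighted square window, and the usual response R = det(M) - k * trace(M)^2. The helper names `box_sum` and `harris_response` are made up for this example.

```python
import numpy as np

def box_sum(a, size):
    """Sum every size x size neighbourhood of a (zero padded, same shape as a)."""
    pad = size // 2
    padded = np.pad(a, ((pad, size - 1 - pad), (pad, size - 1 - pad)))
    out = np.zeros_like(a)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def harris_response(gray, block_size=2, k=0.04):
    gray = gray.astype(np.float64)
    # Step 1: gradients along y and x, then the entries of M summed over the window
    iy, ix = np.gradient(gray)
    sxx = box_sum(ix * ix, block_size)
    syy = box_sum(iy * iy, block_size)
    sxy = box_sum(ix * iy, block_size)
    # Step 2: determinant and trace of M = [[sxx, sxy], [sxy, syy]]
    det = sxx * syy - sxy * sxy
    trace = sxx + syy
    # Step 3: response; large positive = corner, negative = edge, near zero = flat
    return det - k * trace * trace

# Synthetic test image: a bright square whose only corner sits at (row 8, col 8)
img = np.zeros((20, 20))
img[8:, 8:] = 255.0
response = harris_response(img, block_size=3, k=0.04)
peak = np.unravel_index(np.argmax(response), response.shape)
print("strongest response at", peak)
```

On this image the response is positive at the corner, negative along the two straight edges, and zero in the flat background, which is the pattern the thresholding step relies on.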

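Function API

CV_EXPORTS_W void cornerHarris( InputArray src, OutputArray dst, int blockSize, int ksize, double k, int borderType = BORDER_DEFAULT );

src: input image, single channel, 8-bit or floating point

dst: output of the Harris detector (the response map), of type CV_32FC1 and the same size as src

blockSize: size of the neighborhood

ksize: aperture size of the Sobel operator

k: free parameter of the Harris detector

borderType: pixel extrapolation method at the image border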
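To get a feel for what k does, here is a small sketch that evaluates the Harris response directly from a pair of eigenvalues, assuming the usual form R = λ1·λ2 - k·(λ1 + λ2)². The function name and the eigenvalue pairs are invented for illustration.

```python
def harris_score(l1, l2, k=0.04):
    """Harris response written in terms of the two eigenvalues of M."""
    return l1 * l2 - k * (l1 + l2) ** 2

corner = harris_score(10.0, 8.0)        # both eigenvalues large
edge = harris_score(10.0, 0.1)          # one large, one small
strict = harris_score(10.0, 8.0, k=0.2) # same corner, larger k

print(corner, edge, strict)
```

The corner scores positive and the edge negative. Raising k lowers every score, so fewer points survive the same threshold.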

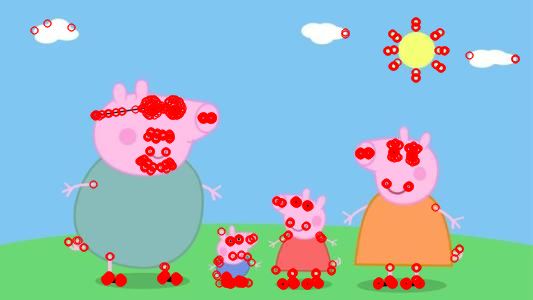

import cv2

import numpy as np

img = cv2.imread("./images/32.jpg")

img2gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

img2gray = np.float32(img2gray)

dst = cv2.cornerHarris(img2gray,blockSize=2,ksize=3,k=0.02)

dst = cv2.dilate(dst,cv2.getStructuringElement(cv2.MORPH_RECT,ksize=(8,8)))

img[dst>0.01*dst.max()] = [0,0,255]

cv2.imshow("dst",img)

cv2.waitKey(0)

Shi-Tomasi algorithm

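Shi and Tomasi observed that the stability of a corner depends on the smaller eigenvalue of the matrix M, so they use that smaller eigenvalue directly as the score. This removes the need to tune k. The Shi-Tomasi score therefore becomes:

$$R = \min(\lambda_1, \lambda_2)$$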
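As a small illustration of scoring by the smaller eigenvalue, the sketch below computes both eigenvalues of a symmetric 2x2 matrix M = [[a, b], [b, c]] in closed form and returns the smaller one. The function names and the sample matrices are assumptions made for this example.

```python
import math

def eigenvalues_2x2(a, b, c):
    """Eigenvalues of the symmetric matrix [[a, b], [b, c]], largest first."""
    mean = (a + c) / 2.0
    radius = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return mean + radius, mean - radius

def shi_tomasi_score(a, b, c):
    """Shi-Tomasi score: the smaller of the two eigenvalues."""
    return eigenvalues_2x2(a, b, c)[1]

corner_score = shi_tomasi_score(4.0, 1.0, 4.0)  # eigenvalues 5 and 3
edge_score = shi_tomasi_score(6.0, 0.0, 0.0)    # eigenvalues 6 and 0
print(corner_score, edge_score)
```

An edge has one eigenvalue near zero, so its score collapses regardless of how strong the other direction is, and no k is involved.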

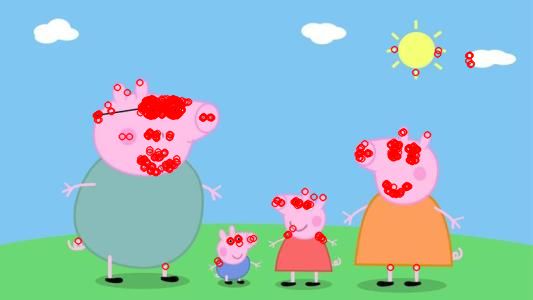

import cv2

import numpy as np

img = cv2.imread("./images/32.jpg")

img2gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

img2gray = np.float32(img2gray)

# Keep at most 100 corners scoring >= 0.01 * the best score, at least 10 px apart

corners = cv2.goodFeaturesToTrack(img2gray, maxCorners=100, qualityLevel=0.01, minDistance=10)

# np.int0 was removed in NumPy 2.0; convert to plain integers instead

corners = corners.astype(int)

for corner in corners:

    x, y = corner.ravel()

    # color=255 is a scalar, which OpenCV reads as BGR (255, 0, 0), i.e. blue

    cv2.circle(img, (int(x), int(y)), radius=3, color=255, thickness=3)

cv2.imshow("dst",img)

cv2.waitKey(0)

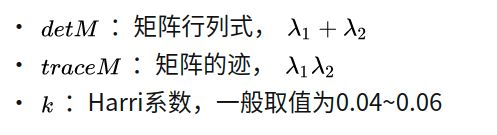

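FAST algorithm

- Each pixel is compared with the pixels on a circle around it; it is reported as a corner when enough contiguous circle pixels are brighter or darker than it by more than a threshold.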
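The comparison can be sketched in pure Python. This is a simplified version of the segment test, assuming the common 16-pixel circle of radius 3 and a run of 9 contiguous pixels; it skips the speed-ups and the non-maximum suppression of a real detector, and `is_fast_corner` is a name made up for this example.

```python
# (dx, dy) offsets of the 16 pixels on a radius-3 circle, in clockwise order
CIRCLE = [(0, -3), (1, -3), (2, -2), (3, -1), (3, 0), (3, 1), (2, 2), (1, 3),
          (0, 3), (-1, 3), (-2, 2), (-3, 1), (-3, 0), (-3, -1), (-2, -2), (-1, -3)]

def is_fast_corner(img, x, y, threshold=35, n=9):
    """img is a list of rows of gray values; (x, y) must be at least 3 px from the border."""
    center = img[y][x]
    ring = [img[y + dy][x + dx] for dx, dy in CIRCLE]
    for sign in (1, -1):  # first look for a brighter run, then for a darker one
        flags = [sign * (p - center) > threshold for p in ring]
        run = 0
        for flag in flags + flags:  # doubled so a run may wrap around the circle
            run = run + 1 if flag else 0
            if run >= n:
                return True
    return False

# Bright quadrant: (4, 4) is its corner. Bright half-plane: (4, 4) lies on a straight edge.
corner_img = [[200 if (x >= 4 and y >= 4) else 0 for x in range(9)] for y in range(9)]
edge_img = [[200 if x >= 4 else 0 for x in range(9)] for y in range(9)]
flat_img = [[100] * 9 for _ in range(9)]

print(is_fast_corner(corner_img, 4, 4), is_fast_corner(edge_img, 4, 4), is_fast_corner(flat_img, 4, 4))
```

At the corner 11 contiguous circle pixels are darker than the center, while on the straight edge only 7 are, so only the first passes the 9-pixel test.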

import cv2

src = cv2.imread("33.jpg")

grayImg = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)

fast = cv2.FastFeatureDetector_create(threshold=35)

# fast.setNonmaxSuppression(False)

kp = fast.detect(grayImg, None)

img2 = cv2.drawKeypoints(src, kp, None, (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

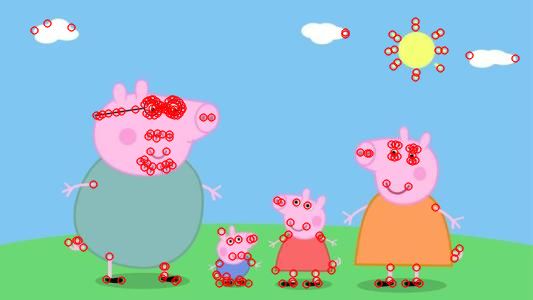

print('Threshold: ', fast.getThreshold())

print('nonmaxSuppression: ', fast.getNonmaxSuppression())

print('neighborhood: ', fast.getType())

print('Total Keypoints with nonmaxSuppression: ', len(kp))

cv2.imshow('fast_true', img2)

# fast.setNonmaxSuppression(False)

# kp = fast.detect(grayImg, None)

# print('Total Keypoints without nonmaxSuppression: ', len(kp))

# img3 = cv2.drawKeypoints(src, kp, None, (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

# cv2.imshow('fast_false', img3)

cv2.waitKey()

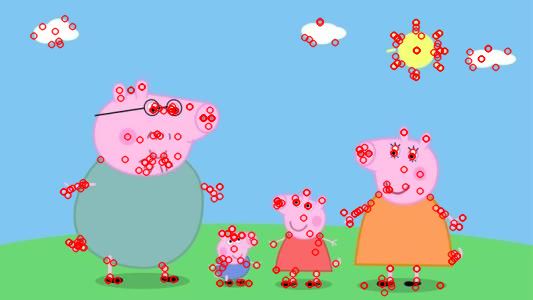

import cv2

src = cv2.imread("./images/33.jpg")

grayImg = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)

fast = cv2.FastFeatureDetector_create(threshold=35)

# fast.setNonmaxSuppression(False)

kp = fast.detect(grayImg, None)

img2 = cv2.drawKeypoints(src, kp, None, (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

print('Threshold: ', fast.getThreshold())

print('nonmaxSuppression: ', fast.getNonmaxSuppression())

print('neighborhood: ', fast.getType())

print('Total Keypoints with nonmaxSuppression: ', len(kp))

cv2.imshow('fast_true', img2)

# Detect again with non-maximum suppression switched off, for comparison

fast.setNonmaxSuppression(False)

kp = fast.detect(grayImg, None)

print('Total Keypoints without nonmaxSuppression: ', len(kp))

img3 = cv2.drawKeypoints(src, kp, None, (0, 0, 255), cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

cv2.imshow('fast_false', img3)

cv2.waitKey()

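ORB algorithm

- Widely used. Somewhat less accurate than SIFT, but fast.

- FAST is faster still, but it only detects keypoints; ORB pairs FAST detection with a BRIEF-based binary descriptor.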

import cv2

import numpy as np

img = cv2.imread("./images/33.jpg")

img2gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

orb = cv2.ORB_create()

kp = orb.detect(img2gray,None)

kp,des = orb.compute(img2gray,kp)

img2 = cv2.drawKeypoints(img,kp,None,color=(0,0,255),flags=0)

cv2.imshow("dst",img2)

cv2.waitKey(0)

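SIFT algorithm

- Adds matching across scales, which gives it scale invariance.

- SIFT was a patented algorithm, so use it with care on older OpenCV builds. The patent expired in March 2020, and OpenCV 4.4.0 and later ship it in the main module as cv2.SIFT_create().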

import cv2

src = cv2.imread("./images/33.jpg")

grayImg = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)

sift = cv2.SIFT_create()

kp = sift.detect(grayImg,None)

img = cv2.drawKeypoints(src,kp,None,color=(0,0,255))

cv2.imshow("img",img)

cv2.waitKey()

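SURF algorithm

- Faster than SIFT.

- Still under patent protection. To use it you need to rebuild OpenCV with the opencv_contrib modules and the non-free algorithms enabled, or switch to an OpenCV version that still includes it.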
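Because SURF is missing from many builds, it can help to probe for it before using it. The sketch below is one possible guard, not an official recipe: `create_surf_or_none` is a hypothetical helper, the Hessian threshold of 400 is an arbitrary illustrative value, and the code assumes that an unavailable SURF shows up either as a missing attribute or as a `cv2.error`.

```python
try:
    import cv2
except ImportError:  # OpenCV is not installed at all
    cv2 = None

def create_surf_or_none(hessian_threshold=400):
    """Return a SURF detector, or None when this OpenCV build does not provide one."""
    if cv2 is None or not hasattr(cv2, "xfeatures2d"):
        return None  # no opencv_contrib modules in this build
    try:
        return cv2.xfeatures2d.SURF_create(hessian_threshold)
    except (AttributeError, cv2.error):
        return None  # contrib is present, but the non-free algorithms are disabled

surf = create_surf_or_none()
print("SURF available:", surf is not None)
```

When the helper returns None, a patent-free detector such as ORB is a reasonable substitute.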

import cv2

src = cv2.imread("./images/33.jpg")

grayImg = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)

surf= cv2.xfeatures2d.SURF_create()

kp = surf.detect(grayImg,None)

img = cv2.drawKeypoints(src,kp,None,color=(0,0,255))

cv2.imshow("img",img)

cv2.waitKey()

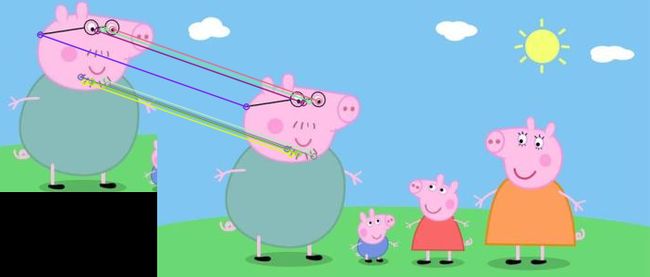

Matching algorithms

import cv2

img1 = cv2.imread("./images/34.jpg")

grayImage1= cv2.cvtColor(img1,cv2.COLOR_BGR2GRAY)

img2 = cv2.imread("./images/33.jpg")

grayImage2= cv2.cvtColor(img2,cv2.COLOR_BGR2GRAY)

orb = cv2.ORB_create()

kp1, des1 = orb.detectAndCompute(grayImage1, None)

kp2, des2 = orb.detectAndCompute(grayImage2, None)

bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

matches = bf.match(des1, des2)

matches = sorted(matches, key=lambda x: x.distance)

img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:10], None, flags=2)

cv2.imshow("img",img3)

cv2.waitKey(0)

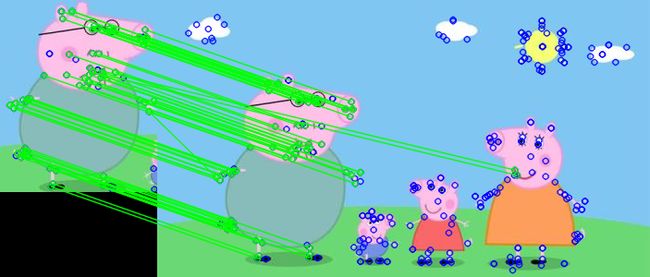
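What the brute-force matcher does with `cv2.NORM_HAMMING` and `crossCheck=True` can be sketched in pure Python. This is a toy version under stated assumptions: descriptors are short byte strings instead of ORB's 32-byte rows, and `hamming` and `cross_check_match` are names invented for this example.

```python
def hamming(a, b):
    """Number of differing bits between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

def cross_check_match(des1, des2):
    """Keep (i, j, distance) only when i and j are each other's nearest neighbour."""
    best12 = [min(range(len(des2)), key=lambda j: hamming(d, des2[j])) for d in des1]
    best21 = [min(range(len(des1)), key=lambda i: hamming(d, des1[i])) for d in des2]
    matches = [(i, j, hamming(des1[i], des2[j]))
               for i, j in enumerate(best12) if best21[j] == i]
    return sorted(matches, key=lambda m: m[2])  # best (smallest distance) first

# Made-up 16-bit descriptors for two images
des1 = [b"\x0f\x00", b"\xff\xff", b"\xf0\x0f"]
des2 = [b"\xff\xfe", b"\x0f\x01", b"\x00\x00"]
matches = cross_check_match(des1, des2)
print(matches)
```

The third descriptor of `des1` prefers `des2[2]`, but `des2[2]` is closer to `des1[0]`, so the cross-check drops that pair and only the two mutual matches remain.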

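import cv2

# Assumed to be the same image pair as in the ORB matching example above

img1 = cv2.imread('./images/34.jpg')

grayImg1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)

img2 = cv2.imread('./images/33.jpg')

grayImg2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)

# OpenCV >= 4.4 has SIFT in the main module; older contrib builds use cv2.xfeatures2d.SIFT_create()

detector = cv2.SIFT_create()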

kp1, des1 = detector.detectAndCompute(grayImg1, None)

kp2, des2 = detector.detectAndCompute(grayImg2, None)

matcher = cv2.DescriptorMatcher_create(cv2.DescriptorMatcher_FLANNBASED)

matches = matcher.knnMatch(des1, des2, k=2)

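# One mask entry per knn pair; [1, 0] draws only the best of the two candidates

matchesMask = [[0, 0] for _ in range(len(matches))]

for i, (m, n) in enumerate(matches):

    # Ratio test: keep a match only if it is clearly better than the runner-up

    if m.distance < 0.7 * n.distance:

        matchesMask[i] = [1, 0]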

draw_params = dict(matchColor=(0, 255, 0), singlePointColor=(255, 0, 0), matchesMask=matchesMask, flags=0)

img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)

cv2.imshow("img", img3)

cv2.waitKey(0)
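The filtering rule used in the loop above, `m.distance < 0.7 * n.distance`, can be tried on plain numbers without any images. In this sketch the distance pairs are made up and `ratio_test` is a hypothetical helper name.

```python
def ratio_test(knn_distances, ratio=0.7):
    """knn_distances holds (best, second_best) per query; return the indices that pass."""
    return [i for i, (best, second) in enumerate(knn_distances) if best < ratio * second]

# Made-up (best, second best) descriptor distances for three query keypoints
pairs = [(10.0, 50.0), (30.0, 35.0), (5.0, 8.0)]
kept = ratio_test(pairs)
print(kept)
```

The second pair is rejected because its best match is barely better than the runner-up, which usually signals an ambiguous, repetitive feature.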