Model Deployment: Configuring the NVIDIA AGX Xavier and Running Inference with Torch, ONNX, and TensorRT

Contents

1. Configuring CUDA and cuDNN

2. Configuring Torch

3. Configuring ONNX

4. Configuring TensorRT

5. Performance comparison

6. Miscellaneous

7. References

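1. Configuring CUDA and cuDNN

Following the approach in the referenced article, download the pre-packaged CUDA, cuDNN, Python 3.6, and TensorRT from the Baidu Netdisk share.

- The CUDA version installed from that share is 10.2, which is sufficient for most cases.

- The installed TensorRT is built for Python 3.6; other Python versions will not be able to find it.

- While installing cuDNN you will see several similar errors of the form "/sbin/ldconfig.real: /usr/local/cuda-10.2/targets/aarch64-linux/lib/libcudnn_adv_infer.so.8 is not a symbolic link". For each library that is reported, run the following two commands (shown here for adv_infer):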

    sudo ln -sf /usr/local/cuda-10.2/targets/aarch64-linux/lib/libcudnn_adv_infer.so.8.0.0 /usr/local/cuda-10.2/targets/aarch64-linux/lib/libcudnn_adv_infer.so.8
    sudo ln -sf /usr/local/cuda-10.2/targets/aarch64-linux/lib/libcudnn_adv_infer.so.8 /usr/local/cuda-10.2/targets/aarch64-linux/lib/libcudnn_adv_infer.so

- After installing CUDA, remember to add the environment variables:

    export LD_LIBRARY_PATH=/usr/local/cuda/lib
    export PATH=$PATH:/usr/local/cuda/bin

- Check whether cuDNN was installed successfully:

    cat /usr/include/cudnn_version.h | grep CUDNN_MAJOR -A 2

- archiconda is a conda distribution for the aarch64 architecture, but it is not really needed: if you need Python 3 to communicate with ROS, conda does not solve the problem and you end up using Docker anyway. For completeness, a tutorial on setting it up is available: Configuring archiconda.
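Because the two `ln -sf` commands shown earlier in this section have to be repeated for every cuDNN library that ldconfig complains about, it can help to generate them in a loop. The following is a minimal sketch and not part of the original setup: the function name `cudnn_symlink_commands`, the example library list, and the version string `8.0.0` are assumptions that should be adjusted to the files actually present in the lib directory.

```python
import shlex

# Directory used in the ln commands of this section.
CUDNN_LIB_DIR = "/usr/local/cuda-10.2/targets/aarch64-linux/lib"


def cudnn_symlink_commands(lib_names, full_version="8.0.0", lib_dir=CUDNN_LIB_DIR):
    """Build the two `ln -sf` commands per library, mirroring the adv_infer example.

    lib_names: names as they appear in the ldconfig errors, e.g. "libcudnn_adv_infer".
    full_version: version suffix of the real file (assumed to be 8.0.0 here).
    """
    major = full_version.split(".")[0]
    commands = []
    for name in lib_names:
        real = f"{lib_dir}/{name}.so.{full_version}"
        soname = f"{lib_dir}/{name}.so.{major}"
        plain = f"{lib_dir}/{name}.so"
        commands.append(f"sudo ln -sf {shlex.quote(real)} {shlex.quote(soname)}")
        commands.append(f"sudo ln -sf {shlex.quote(soname)} {shlex.quote(plain)}")
    return commands


if __name__ == "__main__":
    # Hypothetical list: replace with the libraries named in your own error output.
    for cmd in cudnn_symlink_commands(["libcudnn_adv_infer", "libcudnn_cnn_infer"]):
        print(cmd)
```

The script only prints the commands, so they can be reviewed before being pasted into a shell.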

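2. Configuring Torch

First, install the dependencies by following this tutorial: Install PyTorch on Jetson Nano - Q-engineering

Next, download torch and torchvision builds for the aarch64 architecture. The Baidu Netdisk share mentioned in Section 1 includes torch and torchvision for Python 3.6. Alternatively, pick an aarch64 offline torch wheel from https://download.pytorch.org/whl/torch/ , or download from https://torch.kmtea.eu/whl/stable-cn.html , which provides self-compiled aarch64 .whl files for many commonly used pip packages and is very convenient.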

With CUDA and torch installed, you can follow the tutorial "Testing CUDA and torch with yolov3" to check whether torch can use the GPU.
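Before running a full yolov3 test, a quicker check is to ask torch directly whether it sees a CUDA device. The snippet below is a minimal sketch; `torch_gpu_report` is a hypothetical helper name, and the import is guarded so that the script reports a missing torch installation instead of crashing.

```python
import importlib.util


def torch_gpu_report():
    """Return a small dict describing whether torch is importable and sees a CUDA device."""
    report = {
        "torch_installed": False,
        "torch_version": None,
        "cuda_available": False,
        "device_name": None,
    }
    if importlib.util.find_spec("torch") is None:
        return report
    import torch

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    report["cuda_available"] = bool(torch.cuda.is_available())
    if report["cuda_available"]:
        # Name of the first visible GPU.
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    print(torch_gpu_report())
```

If `cuda_available` is False even though CUDA is installed, the wheel was most likely built without CUDA support or for a different CUDA version.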

If you need OpenBLAS, you can build it from source by following this tutorial: Building OpenBLAS from source

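3. Configuring ONNX

A plain pip install onnxruntime only fetches the CPU build of onnxruntime. A GPU build for the aarch64 architecture has to be downloaded from the official site, and the wheel must match both your Python version and your CUDA version. If you get the error "the ort build has [''] enabled, you are required to explicitly set the providers parameter when instantiating inferencesession.", the CUDA version does not match; the compatibility table between onnxruntime and CUDA versions is on the page NVIDIA - CUDA | onnxruntime.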
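Independently of the version mismatch, the providers argument mentioned in that error can be passed explicitly when a session is created. The sketch below uses a hypothetical helper, `pick_providers`, to fall back to the CPU when the CUDA provider is not offered by the installed build; `get_available_providers()` is the onnxruntime call that lists what the build supports, and the import is guarded so the snippet also runs where onnxruntime is missing.

```python
import importlib.util


def pick_providers(available, prefer_gpu=True):
    """Return an ordered providers list, falling back to CPU when CUDA is absent."""
    providers = []
    if prefer_gpu and "CUDAExecutionProvider" in available:
        providers.append("CUDAExecutionProvider")
    providers.append("CPUExecutionProvider")
    return providers


if __name__ == "__main__":
    if importlib.util.find_spec("onnxruntime") is not None:
        import onnxruntime as ort

        available = ort.get_available_providers()
        print("available:", available)
        print("chosen:", pick_providers(available))
        # A session would then be created as:
        # ort.InferenceSession("mymodel.onnx", providers=pick_providers(available))
    else:
        print("onnxruntime is not installed")
```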

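After installation, you can call onnxruntime from Python to check whether it can use the GPU. Because onnxruntime ships as separate CPU and GPU builds, get_device() reports which one is installed:

    >>> import onnxruntime as rt
    >>> rt.get_device()
    'GPU'

- Converting torch to ONNX: the following code can serve as a reference: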

    import torch

    # Net, pt_model_path and output_onnx are defined by your own project
    model = Net()
    model_recover = torch.load(pt_model_path, map_location='cuda:0')
    model.load_state_dict(model_recover)
    model.eval()

    # Input to the model
    x = torch.randn(1, 2, 3, 256, 341)

    # Export the model
    torch.onnx.export(model,                      # model being run
                      x,                          # model input
                      output_onnx,                # where to save the model
                      verbose=True,
                      export_params=True,         # store the trained parameter weights inside the model file
                      opset_version=10,           # the ONNX opset version to export with
                      do_constant_folding=True,   # whether to execute constant folding for optimization
                      input_names=['input'],      # the model's input names
                      output_names=['output'],    # the model's output names
                      # dynamic_axes={'input': {0: 'batch_size'},  # variable batch size
                      #               'output_0': {0: 'batch_size'},
                      #               'output_1': {0: 'batch_size'}}
                      )

The exported ONNX file can be opened in Netron to inspect the model structure it represents. Netron is also available online: Netron
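The commented-out dynamic_axes argument in the export call refers to output_0 and output_1, while output_names only declares output. torch.onnx.export expects the keys of dynamic_axes to be names listed in input_names or output_names. A small sketch of building a consistent mapping for a variable batch dimension follows; `batch_dynamic_axes` is a hypothetical helper, not part of torch.

```python
def batch_dynamic_axes(input_names, output_names, axis=0, label="batch_size"):
    """Map every declared input/output name to {axis: label} for torch.onnx.export."""
    return {name: {axis: label} for name in list(input_names) + list(output_names)}


if __name__ == "__main__":
    axes = batch_dynamic_axes(["input"], ["output"])
    print(axes)  # {'input': {0: 'batch_size'}, 'output': {0: 'batch_size'}}
    # Passed to the exporter as:
    # torch.onnx.export(..., input_names=['input'], output_names=['output'],
    #                   dynamic_axes=axes)
```

Generating the mapping from the same name lists that are passed to the exporter keeps the two from drifting apart.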

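- ONNX inference:

    import time
    import onnxruntime as ort

    def to_numpy(tensor):
        # detach first if the tensor is tracked by autograd
        return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()

    model_recover_path = ''  # path to the exported .onnx file
    device = 'cuda'          # set to 'cpu' to run on the CPU
    provider = ['CUDAExecutionProvider'] if device == 'cuda' else ['CPUExecutionProvider']
    ort_session = ort.InferenceSession(model_recover_path, providers=provider)

    # inputs: a torch tensor with the same shape as the one used for export
    ort_inputs = {ort_session.get_inputs()[0].name: to_numpy(inputs)}
    ort_outs = ort_session.run(None, ort_inputs)  # warm up
    st = time.time()
    ort_outs = ort_session.run(None, ort_inputs)
    et = time.time()
    print('onnx inference time:', et - st, ' use:', ort.get_device())

- You can also compare the outputs of the torch model and the ONNX model. If the check produces no output, the results agree within the relative tolerance rtol and the absolute tolerance atol.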

    import numpy as np

    # load torch model and set model to .eval() first
    torch_out = torch_model(inputs)
    ort_session = ort.InferenceSession(onnx_model_path, providers=['CUDAExecutionProvider'])
    ort_inputs = {ort_session.get_inputs()[0].name: to_numpy(inputs)}
    ort_outs = ort_session.run(None, ort_inputs)
    # raises AssertionError if the outputs differ by more than the tolerances
    np.testing.assert_allclose(to_numpy(torch_out), ort_outs[0], rtol=1e-03, atol=1e-05)

4. Configuring TensorRT

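- pycuda has to be installed first, but the package fetched by pip install pycuda does not work on the aarch64 architecture. Search for pycuda on PyPI, download the source, and build it yourself. The commands below are taken from the tutorial "Installing pycuda on Jetson Nano".

    # download the pycuda source from the PyPI website first
    tar zxvf pycuda-2019.1.2.tar.gz
    cd pycuda-2019.1.2/
    python3 configure.py --cuda-root=/usr/local/cuda-10.2
    sudo python3 setup.py install

- Converting ONNX to TensorRT:

Use the trtexec tool that ships with TensorRT to convert the model:

    /usr/src/tensorrt/bin/trtexec --onnx=mymodel.onnx --saveEngine=mymodel.trt --workspace=4000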

Error 1: onnx2trt_utils.cpp:220: Your ONNX model has been generated with INT64 weights, while TensorRT does not natively support INT64. Attempting to cast down to INT32.

Fix: this does not affect execution and can be ignored. To get rid of it, simplify the ONNX file once more with onnx-simplifier.

Error 2: Some tactics do not have sufficient workspace memory to run. Increasing workspace size may increase performance, please check verbose output.

Fix: set a larger workspace on the command line, for example --workspace=4000

Error 3: "illegal instruction (core dumped)" when running Python

Fix: add the following line to ~/.bashrc: export OPENBLAS_CORETYPE=ARMV8
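The trtexec call and the workspace fix for error 2 can be wrapped in a small script so the same options are reused for every model. This is a sketch under assumptions: `build_trtexec_command` is a hypothetical helper, the binary path and flags are the ones used in the command above, and the optional `--fp16` switch is a trtexec flag that the original workflow did not use.

```python
import subprocess

TRTEXEC = "/usr/src/tensorrt/bin/trtexec"  # path used in the trtexec command of this section


def build_trtexec_command(onnx_path, engine_path, workspace_mb=4000, fp16=False):
    """Return the trtexec argument list for converting an ONNX file to an engine."""
    cmd = [
        TRTEXEC,
        f"--onnx={onnx_path}",
        f"--saveEngine={engine_path}",
        f"--workspace={workspace_mb}",  # workspace size in MiB
    ]
    if fp16:
        cmd.append("--fp16")  # not used in the original setup
    return cmd


if __name__ == "__main__":
    cmd = build_trtexec_command("mymodel.onnx", "mymodel.trt")
    print(" ".join(cmd))
    # Uncomment on the Xavier to actually run the conversion:
    # subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when model paths contain spaces.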

- TensorRT inference:

    import time
    import numpy as np
    import tensorrt as trt
    import pycuda.driver as cuda
    import pycuda.autoinit  # creates a CUDA context on import

    def do_tensorrt_inference(inputs, trt_model_path):
        BATCH_SIZE = 1
        USE_FP16 = False
        output_dim = 4
        target_dtype = np.float16 if USE_FP16 else np.float32

        # deserialize the engine produced by trtexec
        with open(trt_model_path, "rb") as f:
            runtime = trt.Runtime(trt.Logger(trt.Logger.WARNING))
            engine = runtime.deserialize_cuda_engine(f.read())
        context = engine.create_execution_context()

        # to_numpy is the helper defined in the ONNX inference code
        inputs_np = np.ascontiguousarray(to_numpy(inputs))
        # input_batch = np.random.randn(*inputs_shape).astype(target_dtype)
        output = np.empty([BATCH_SIZE, output_dim], dtype=target_dtype)

        # allocate device buffers; nbytes already covers the whole batch
        d_input = cuda.mem_alloc(inputs_np.nbytes)
        d_output = cuda.mem_alloc(output.nbytes)
        bindings = [int(d_input), int(d_output)]
        stream = cuda.Stream()

        def _predict(batch):  # result gets copied into output
            # transfer input data to device
            cuda.memcpy_htod_async(d_input, batch, stream)
            # execute model asynchronously; for synchronous inference use context.execute_v2(bindings)
            context.execute_async_v2(bindings, stream.handle, None)
            # transfer predictions back
            cuda.memcpy_dtoh_async(output, d_output, stream)
            # synchronize the stream
            stream.synchronize()
            return output

        output = _predict(inputs_np)  # warm up
        st = time.time()
        output = _predict(inputs_np)
        et = time.time()
        print('tensorrt inference time:', et - st)
        print('tensorrt pred:', output)

5. Performance comparison

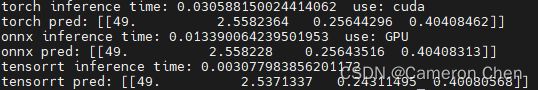

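In my experience, ONNX output differs from the torch output by at most about 1e-5, so there is almost no loss, and inference typically takes 0.1 to 0.3 times as long as torch. TensorRT is very fast, taking as little as one tenth of the ONNX time, but it loses more accuracy: its predictions differ from the torch results at the 0.01 level. These numbers were obtained without the fp16 mixed-precision mode; with fp16 the loss should be larger. So if accuracy matters, ONNX is good enough.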
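The timings behind this comparison come from one warm-up call followed by a single timed call. Averaging over several runs usually gives steadier numbers, and the accuracy gap can be summarised as the largest absolute difference from the torch output. The helpers below (`time_inference`, `max_abs_diff`) are hypothetical and use only the standard library; the lambda and the numbers in the demo are made up and stand in for a real call such as ort_session.run and for real model outputs.

```python
import time


def time_inference(fn, warmup=1, runs=10):
    """Call fn() `warmup` times untimed, then return the mean seconds over `runs` calls."""
    for _ in range(warmup):
        fn()
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs


def max_abs_diff(reference, candidate):
    """Largest absolute element-wise difference between two flat sequences of floats."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same length")
    return max(abs(r - c) for r, c in zip(reference, candidate))


if __name__ == "__main__":
    mean_s = time_inference(lambda: sum(range(10000)))
    print(f"mean time per call: {mean_s:.6f} s")
    torch_out = [0.12, 0.50, 0.33, 0.05]  # made-up numbers for illustration
    trt_out = [0.13, 0.49, 0.33, 0.05]
    print("max abs diff:", max_abs_diff(torch_out, trt_out))
```

When timing torch on the GPU, the callable should call torch.cuda.synchronize() before returning; otherwise only the kernel launch is measured rather than the full inference.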

Here is an inference comparison figure to give a feel for the difference:

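6. Miscellaneous

Using the tf and cv_bridge ROS packages from Python 3:

tf and cv_bridge originally work only under Python 2, because ROS 1 (Melodic and earlier) is built against Python 2. To use them from Python 3 you would have to download the source and compile it yourself. A simpler option is to download the .whl packages from https://rospypi.github.io/simple/ and install the ROS packages with pip. The packages there support both Python 2 and Python 3, which is very convenient, and many of them no longer need to be installed through ROS.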

7. References

jetson agx xavier:从亮机到yolov5下tensorrt加速_Eva20150932的博客-CSDN博客_jetpack4.6 安装onnx-simplifier

xavier nx 安裝cuda, cudnn, tensorrt, opencv, 中文輸入法 - wangaolin - 博客园

Jetson Xavier NX手动安装cuda和cudnn_All will be well的博客-CSDN博客_jetson cuda安装

英伟达Jetson xavier agx的GPU部署yolov5 - stacso - 博客园

TensorRT/onnx_to_tensorrt.py at master · NVIDIA/TensorRT · GitHub

ONNX简易部署教程_51CTO博客_lilishop部署教程

tensorRT踩坑日常之engine推理_静待有缘人的博客-CSDN博客

ubuntu18.04 安装OpenBLAS_Mountain Q的博客-CSDN博客_linux安装openblas

Jetson Nano安装pycuda(踩坑传)_doubleZ0108的博客-CSDN博客_nano pycuda