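[14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lecture 7: Visual Odometry I] [1 ORB features with OpenCV] [2 Hand-written ORB features] [3 Solving camera motion with the epipolar constraint] [4 Triangulation] [5 Solving PnP] [3D-3D: ICP]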


- Preface
- 1 ORB features with OpenCV
- 1.1 Code files and required images
- 1.2 orb_cv.cpp
- 1.3 CMakeLists.txt
- 1.4 Output
- 2 Hand-written ORB features | Hamming-based brute-force matching
- 2.1 Project package and required images
- 2.2 orb_self.cpp
- 2.3 CMakeLists.txt
- 2.4 Problems encountered
- 2.4.1 Error when using _mm_popcnt_u32
- 2.5 Output
- 3 Solving camera motion with the epipolar constraint
- 3.1 Project package and required images
- 3.2 pose_estimation_2d2d.cpp
- 3.3 CMakeLists.txt
- 3.4 Output
- 4 Triangulation
- 4.1 Preface
- 4.2 triangulation.cpp
- 4.3 CMakeLists.txt
- 4.4 Output
- 5 Solving PnP
- 5.1 Preface
- 5.2 pose_estimation_3d2d.cpp
- 5.3 CMakeLists.txt
- 5.4 Run results
- 5.5 Problems encountered (starting from the first-edition code)
- 5.6 Takeaways
- 6 3D-3D: ICP
- 6.1 Preface
- 6.2 pose_estimation_3d3d.cpp
- 6.3 CMakeLists.txt
- 6.4 Output
- 6.5 Errors
- 6.6 Takeaways
- 6.6.1 Learned how to convert an Eigen matrix to a cv::Mat
- 6.6.2 How to convert a cv::Mat to an Eigen matrix

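[14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lectures 3-4: Rigid-body motion, Lie groups and Lie algebras] [Installing eigen3.3.4 and pangolin; installing and using Sophus and fim] [Plotting trajectories] [Computing trajectory error]

[14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lecture 5: Cameras and images] [OpenCV, image undistortion, stereo and RGB-D, 14 ways to traverse image pixels]

[14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lecture 6: Nonlinear optimization] [Installing the matching versions of ceres2.0.0 and g2o] [Hand-written Gauss-Newton, curve fitting with ceres2.0.0 and g2o, and fixes for build errors]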

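Preface

I have to complain a little: my progress is too slow, and so is my efficiency.

For installing the required dependencies, see my earlier post: [14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lecture 6: Nonlinear optimization] [Installing the matching versions of ceres2.0.0 and g2o] [Hand-written Gauss-Newton, curve fitting with ceres2.0.0 and g2o, and fixes for build errors]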

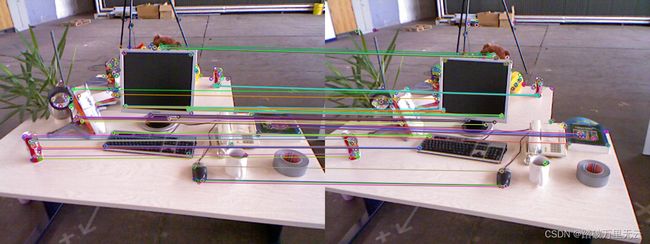

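1 ORB features with OpenCV

1.1 Code files and required images

My complete project is attached, including the two images it needs. Help yourself if you need it. Link: https://pan.baidu.com/s/1obCQ0Yb2hGOTK9f0Whvwfw

Extraction code: kfs1

1.2 orb_cv.cpp

[Listing not preserved in this copy: only a leading #include survives.]

1.3 CMakeLists.txt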

cmake_minimum_required(VERSION 2.8)

project(orb_cv)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_BUILD_TYPE "Release")

find_package(OpenCV 3 REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(orb_cv src/orb_cv.cpp)

target_link_libraries(orb_cv ${OpenCV_LIBRARIES})

1.4 Output

/home/bupo/my_study/slam14/slam14_my/cap7/orb_cv/cmake-build-debug/orb_cv

[ INFO:0] Initialize OpenCL runtime...

extract ORB cost = 0.0135794 seconds.

match ORB cost = 0.000664683 seconds.

--Min dist : 7

--Max dist : 95

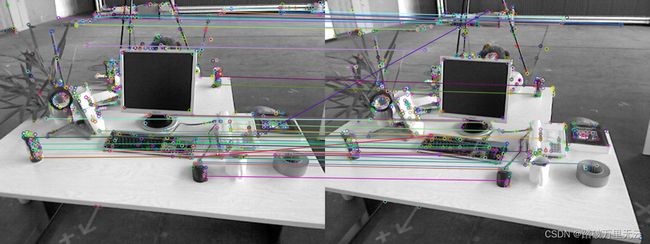

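2 Hand-written ORB features | Hamming-based brute-force matching

2.1 Project package and required images

Link: https://pan.baidu.com/s/1npVLlLt3WBjAAKhOAZ3t1w

Extraction code: dy1p

2.2 orb_self.cpp

[Listing not preserved in this copy: only a leading #include survives.]

2.3 CMakeLists.txt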

cmake_minimum_required(VERSION 2.8)

project(orb_self)

set(CMAKE_CXX_FLAGS "-std=c++14 -mfma")

find_package(OpenCV REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(orb_self src/orb_self.cpp)

target_link_libraries(orb_self ${OpenCV_LIBRARIES})

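2.4 Problems encountered

2.4.1 Error when using _mm_popcnt_u32

- First, make sure your CPU supports SSE 4.2. You can check with the command

grep -q sse4_2 /proc/cpuinfo && echo "SSE 4.2 supported" || echo "SSE 4.2 not supported"

(reference article: "SSE 4.2 instruction set not supported by the CPU under virtualization").

- The error I hit at first:

/usr/lib/gcc/x86_64-linux-gnu/7/include/popcntintrin.h:35:1: error: inlining failed in call to always_inline ‘int _mm_popcnt_u32(unsigned int)’: target specific option mismatch _mm_popcnt_u32 (unsigned int __X) ^~~~~~~~~~~~~~

This puzzled me, because the error does not come from a mistake in the code. If your CPU supports SSE, the problem is in CMakeLists.txt. My first, overly optimistic attempt

wrongly used set(CMAKE_CXX_FLAGS "-std=c++11 -O2 ${SSE_FLAGS} -msse4")

when it should have been set(CMAKE_CXX_FLAGS "-std=c++14 -mfma")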
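If the POPCNT intrinsic cannot be enabled at all, the Hamming distance it accelerates can also be computed portably. The following is a minimal sketch and not the orb_self.cpp listing: it assumes a 256-bit descriptor stored as eight 32-bit words, and the names `DescType`, `hammingDistance`, `bruteForceMatch` and the default threshold `d_max = 40` are illustrative assumptions.

```cpp
#include <array>
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumed layout: a 256-bit binary descriptor stored as 8 x 32-bit words.
using DescType = std::array<std::uint32_t, 8>;

// Hamming distance = number of differing bits. std::bitset::count() is a
// portable stand-in for the _mm_popcnt_u32 intrinsic.
int hammingDistance(const DescType& a, const DescType& b) {
    int distance = 0;
    for (std::size_t k = 0; k < a.size(); ++k) {
        distance += static_cast<int>(std::bitset<32>(a[k] ^ b[k]).count());
    }
    return distance;
}

// Brute-force matching: for every descriptor in desc1, scan all of desc2 and
// keep the closest one. The result holds the index into desc2, or -1 when no
// candidate is closer than d_max (an assumed threshold).
std::vector<int> bruteForceMatch(const std::vector<DescType>& desc1,
                                 const std::vector<DescType>& desc2,
                                 int d_max = 40) {
    assert(d_max > 0);
    std::vector<int> best(desc1.size(), -1);
    for (std::size_t i = 0; i < desc1.size(); ++i) {
        int best_dist = d_max;
        for (std::size_t j = 0; j < desc2.size(); ++j) {
            const int d = hammingDistance(desc1[i], desc2[j]);
            if (d < best_dist) {
                best_dist = d;
                best[i] = static_cast<int>(j);
            }
        }
    }
    return best;
}
```

This version trades speed for portability: it needs no `-m...` compiler flag, so it can serve as a fallback or as a reference to check an intrinsic-based implementation against.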

2.5 Output

/home/bupo/my_study/slam14/slam14_my/cap7/orb_self/cmake-build-debug/orb_self

bad/total: 43/638

bad/total: 7/595

extract ORB cost = 0.016009 seconds.

match ORB cost = 0.0256946 seconds.

matches: 51

done

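3 Solving camera motion with the epipolar constraint

3.1 Project package and required images

The project and the required images are all at this link: https://pan.baidu.com/s/1oENApqlZ6i5Oz7Copexswg

Extraction code: c1cu

Running it is simple. If you do not have CLion, create a build folder under the project path, run cmake .. and then make, and then run the executable.

3.2 pose_estimation_2d2d.cpp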

// [Listing truncated in this copy: the #include lines, main(), and the opening of find_feature_matches() are missing.]
    // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );
    // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect the Oriented FAST corner positions
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<cv::DMatch> match;
    matcher->match(descriptors_1,descriptors_2,match);

    //-- Step 4: filter the matched pairs
    double min_dist = 10000, max_dist = 0;

    // Find the minimum and maximum distance over all matches,
    // i.e. the distances of the most similar and the least similar pairs
    for (int i = 0; i < descriptors_1.rows; i++)
    {
        double dist = match[i].distance;
        if(dist < min_dist) min_dist = dist;
        if(dist > max_dist) max_dist = dist;
    }

    printf("-- Max dist : %f\n", max_dist);
    printf("-- Min dist : %f\n", min_dist);

    // A match is treated as wrong when its descriptor distance exceeds twice the minimum distance.
    // The minimum can be very small, so the empirical value 30 is used as a lower bound.
    for(int i = 0; i < descriptors_1.rows; i++)
    {
        if(match[i].distance <= max(2*min_dist, 30.0))
        {
            matches.push_back(match[i]);
        }
    }
}

// Convert pixel coordinates to normalized camera coordinates
cv::Point2d pixel2cam (const cv::Point2d& p, const cv::Mat& K)
{
    return cv::Point2d( // K is the intrinsic matrix; at<double>(r, c) reads its entries
        (p.x - K.at<double> (0,2)) / K.at<double>(0,0),
        (p.y - K.at<double> (1,2)) / K.at<double>(1,1)
    );
}

void pose_estimation_2d2d(std::vector<cv::KeyPoint>& keypoints_1, std::vector<cv::KeyPoint>& keypoints_2,
                          std::vector<cv::DMatch>& matches,
                          cv::Mat& R, cv::Mat& t)
{
    // Camera intrinsics, TUM Freiburg2
    cv::Mat K = (cv::Mat_<double>(3,3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

    //-- Convert the matched keypoints into vector<Point2f>
    vector<cv::Point2f> points1;
    vector<cv::Point2f> points2;

    for(int i = 0; i < (int) matches.size(); i++)
    {
        points1.push_back(keypoints_1[matches[i].queryIdx].pt); // point of the pair in the first image
        points2.push_back(keypoints_2[matches[i].trainIdx].pt); // point of the pair in the second image
    }

    //-- Compute the fundamental matrix
    cv::Mat fundamental_matrix;
    fundamental_matrix = cv::findFundamentalMat(points1, points2,CV_FM_8POINT); // eight-point algorithm on the given correspondences
    cout << "fundamental_matrix is" << endl << fundamental_matrix << endl;

    //-- Compute the essential matrix
    cv::Point2d principal_point (325.1, 249.7); // principal point, TUM dataset calibration
    double focal_length = 521; // focal length, TUM dataset calibration
    cv::Mat essential_matrix;
    essential_matrix = cv::findEssentialMat(points1,points2,focal_length,principal_point);
    cout<<"essential_matrix is "<<endl<< essential_matrix<<endl;

    //-- Compute the homography matrix
    cv::Mat homography_matrix;
    homography_matrix = cv::findHomography(points1, points2, cv::RANSAC, 3);
    cout<<"homography_matrix is "<<endl<<homography_matrix<<endl;

    //-- Recover rotation and translation from the essential matrix
    cv::recoverPose(essential_matrix, points1, points2, R,t,
                    focal_length,principal_point);
    cout<<"R is "<<endl<<R<<endl;
    cout<<"t is "<<endl<<t<<endl;
}
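The match-filtering rule in find_feature_matches() (keep a match only if its distance is at most max(2 * min_dist, 30)) can be tried in isolation. The sketch below is an illustration using only the standard library; `selectGoodMatches` and `floor_dist` are hypothetical names, and the input is a plain list of distances rather than cv::DMatch objects.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Returns the indices of the matches that pass the filter
//   distance <= max(2 * min_dist, floor_dist)
// where min_dist is the smallest distance in the input.
std::vector<std::size_t> selectGoodMatches(const std::vector<double>& distances,
                                           double floor_dist = 30.0) {
    assert(floor_dist >= 0.0);
    std::vector<std::size_t> kept;
    if (distances.empty()) {
        return kept;
    }
    const double min_dist = *std::min_element(distances.begin(), distances.end());
    // The floor keeps the threshold from collapsing when min_dist is tiny.
    const double threshold = std::max(2.0 * min_dist, floor_dist);
    for (std::size_t i = 0; i < distances.size(); ++i) {
        if (distances[i] <= threshold) {
            kept.push_back(i);
        }
    }
    return kept;
}
```

With a minimum distance of 7, as in the output of this section, twice the minimum is only 14, so the floor of 30 is what actually sets the threshold.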

3.3 CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(pose_estimation_2d2d)

set(CMAKE_BUILD_TYPE "Release")

set( CMAKE_CXX_STANDARD 14)

# Add the cmake modules needed to use g2o

#list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

find_package(OpenCV 3.1 REQUIRED)

# find_package( OpenCV REQUIRED ) # use this if in OpenCV2

include_directories("/usr/include/eigen3")

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(pose_estimation_2d2d src/pose_estimation_2d2d.cpp)

target_link_libraries(pose_estimation_2d2d ${OpenCV_LIBRARIES})

3.4 Output

/home/bupo/my_study/slam14/slam14_my/cap7/pose_estimation_2d2d/cmake-build-debug/pose_estimation_2d2d

读取src文件下的图片

[ INFO:0] Initialize OpenCL runtime...

-- Max dist : 95.000000

-- Min dist : 7.000000

一共找到了81组匹配点

fundamental_matrix is

[5.435453065936294e-06, 0.0001366043242989641, -0.02140890086948122;

-0.0001321142229824704, 2.339475702778057e-05, -0.006332906454396256;

0.02107630352202776, -0.00366683395295285, 1]

essential_matrix is

[0.01724015832721737, 0.3280543357941316, 0.04737477831442286;

-0.3243229585962945, 0.03292958445202431, -0.6262554366073029;

-0.005885857752317864, 0.6253830041920339, 0.01531678649092664]

homography_matrix is

[0.91317517918067, -0.1092435315821776, 29.95860009981271;

0.02223560352310949, 0.9826008005061946, 6.50891083956826;

-0.0001001560381023939, 0.0001037779436396116, 1]

R is

[0.9985534106102478, -0.05339308467584829, 0.006345444621109364;

0.05321959721496342, 0.9982715997131746, 0.02492965459802013;

-0.007665548311698023, -0.02455588961730218, 0.9996690690694515]

t is

[-0.8829934995085557;

-0.05539655431450295;

0.4661048182498381]

t^R =

[-0.02438126572381069, -0.4639388908753586, -0.06699805400667588;

0.4582372816724479, -0.04792977660828776, 0.9410371538411662;

-0.8831734264150997, 0.04247755931090476, 0.1844386941457087]

epipolar constraint = [-0.07304359960899821]

epipolar constraint = [0.2707830639560381]

epipolar constraint = [0.7394658183384351]

... (the epipolar-constraint checks for the remaining valid matches are omitted here; there should be 81 in total, one per matched pair)

epipolar constraint = [0.1199788747639846]

epipolar constraint = [0.2506340185166976]

进程已结束,退出代码0
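The `epipolar constraint = ...` lines above presumably evaluate the residual x2^T t^ R x1 for each match, where x1 and x2 are normalized camera coordinates and t^ is the skew-symmetric matrix of t. The verification code itself is not part of the surviving listing, so the following is only a sketch under that assumption, written with plain arrays instead of cv::Mat; all helper names are made up for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

// Pixel (u, v) to homogeneous normalized camera coordinates (x, y, 1).
Vec3 pixel2cam(double u, double v, double fx, double fy, double cx, double cy) {
    assert(fx != 0.0 && fy != 0.0);
    return {(u - cx) / fx, (v - cy) / fy, 1.0};
}

// Skew-symmetric matrix t^ such that t^ * v equals the cross product t x v.
Mat3 skew(const Vec3& t) {
    Mat3 m{};
    m[0] = {0.0, -t[2], t[1]};
    m[1] = {t[2], 0.0, -t[0]};
    m[2] = {-t[1], t[0], 0.0};
    return m;
}

Vec3 multiply(const Mat3& m, const Vec3& v) {
    Vec3 out{};
    for (int r = 0; r < 3; ++r) {
        out[r] = m[r][0] * v[0] + m[r][1] * v[1] + m[r][2] * v[2];
    }
    return out;
}

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Epipolar residual x2^T * t^ * R * x1. It is zero for a perfect match that
// is consistent with the motion (R, t).
double epipolarResidual(const Vec3& x1, const Vec3& x2, const Mat3& R, const Vec3& t) {
    return dot(x2, multiply(skew(t), multiply(R, x1)));
}
```

For a noise-free correspondence the residual vanishes up to rounding, so the size of the printed values gives a rough indication of how well each match agrees with the estimated R and t.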

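4 Triangulation

4.1 Preface

- Get the code here: link: https://pan.baidu.com/s/1IVHnjF_KhvjuyMpkPQSpLg Extraction code: 0fg8

- Or just grab the images: link: https://pan.baidu.com/s/1XxodUt9AOBpytMwsab4slA Extraction code: 5b14

- This is simply the epipolar-constraint camera-motion example with a triangulation step added as a demonstration.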

4.2 triangulation.cpp

// [Listing truncated in this copy: the #include lines, main(), and the opening of find_feature_matches() are missing.]
    // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );
    // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect the Oriented FAST corner positions
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<cv::DMatch> match;
    matcher->match(descriptors_1,descriptors_2,match);

    //-- Step 4: filter the matched pairs
    double min_dist = 10000, max_dist = 0;

    // Find the minimum and maximum distance over all matches,
    // i.e. the distances of the most similar and the least similar pairs
    for (int i = 0; i < descriptors_1.rows; i++)
    {
        double dist = match[i].distance;
        if(dist < min_dist) min_dist = dist;
        if(dist > max_dist) max_dist = dist;
    }

    printf("-- Max dist : %f\n", max_dist);
    printf("-- Min dist : %f\n", min_dist);

    // A match is treated as wrong when its descriptor distance exceeds twice the minimum distance.
    // The minimum can be very small, so the empirical value 30 is used as a lower bound.
    for(int i = 0; i < descriptors_1.rows; i++)
    {
        if(match[i].distance <= max(2*min_dist, 30.0))
        {
            matches.push_back(match[i]);
        }
    }
}

// Convert pixel coordinates to normalized camera coordinates
cv::Point2d pixel2cam (const cv::Point2d& p, const cv::Mat& K)
{
    return cv::Point2d( // K is the intrinsic matrix; at<double>(r, c) reads its entries
        (p.x - K.at<double> (0,2)) / K.at<double>(0,0),
        (p.y - K.at<double> (1,2)) / K.at<double>(1,1)
    );
}

void pose_estimation_2d2d(std::vector<cv::KeyPoint>& keypoints_1, std::vector<cv::KeyPoint>& keypoints_2,
                          std::vector<cv::DMatch>& matches,
                          cv::Mat& R, cv::Mat& t)
{
    // Camera intrinsics, TUM Freiburg2
    cv::Mat K = (cv::Mat_<double>(3,3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);

    //-- Convert the matched keypoints into vector<Point2f>
    vector<cv::Point2f> points1;
    vector<cv::Point2f> points2;

    for(int i = 0; i < (int) matches.size(); i++)
    {
        points1.push_back(keypoints_1[matches[i].queryIdx].pt); // point of the pair in the first image
        points2.push_back(keypoints_2[matches[i].trainIdx].pt); // point of the pair in the second image
    }

    //-- Compute the fundamental matrix
    cv::Mat fundamental_matrix;
    fundamental_matrix = cv::findFundamentalMat(points1, points2,CV_FM_8POINT); // eight-point algorithm on the given correspondences
    cout << "fundamental_matrix is" << endl << fundamental_matrix << endl;

    //-- Compute the essential matrix
    cv::Point2d principal_point (325.1, 249.7); // principal point, TUM dataset calibration
    double focal_length = 521; // focal length, TUM dataset calibration
    cv::Mat essential_matrix;
    essential_matrix = cv::findEssentialMat(points1,points2,focal_length,principal_point);
    cout<<"essential_matrix is "<<endl<< essential_matrix<<endl;

    //-- Compute the homography matrix
    cv::Mat homography_matrix;
    homography_matrix = cv::findHomography(points1, points2, cv::RANSAC, 3);
    cout<<"homography_matrix is "<<endl<<homography_matrix<<endl;

    //-- Recover rotation and translation from the essential matrix
    cv::recoverPose(essential_matrix, points1, points2, R,t,
                    focal_length,principal_point);
    cout<<"R is "<<endl<<R<<endl;
    cout<<"t is "<<endl<<t<<endl;
}

// The triangulation part added in this example
void triangulation(const vector<cv::KeyPoint>& keypoint_1, const vector<cv::KeyPoint>& keypoint_2,
                   const std::vector<cv::DMatch>& matches, const cv::Mat& R, const cv::Mat& t,
                   vector<cv::Point3d>& points)
{
    cv::Mat T1 = (cv::Mat_<float> (3,4) <<
        1,0,0,0,
        0,1,0,0,
        0,0,1,0);
    cv::Mat T2 = (cv::Mat_<float> (3,4) <<
        R.at<double>(0,0), R.at<double>(0,1), R.at<double>(0,2), t.at<double>(0,0),
        R.at<double>(1,0), R.at<double>(1,1), R.at<double>(1,2), t.at<double>(1,0),
        R.at<double>(2,0), R.at<double>(2,1), R.at<double>(2,2), t.at<double>(2,0));

    cv::Mat K = (cv::Mat_<double> (3,3) << 520.9, 0, 325.1, 0, 521.0, 249.7, 0, 0, 1);
    vector<cv::Point2f> pts_1,pts_2;
    for(cv::DMatch m : matches)
    {
        // Convert pixel coordinates to normalized camera coordinates
        pts_1.push_back(pixel2cam(keypoint_1[m.queryIdx].pt, K));
        pts_2.push_back(pixel2cam(keypoint_2[m.trainIdx].pt, K));
    }

    cv::Mat pts_4d;
    // T1: 3x4 projection matrix of the first camera
    // T2: 3x4 projection matrix of the second camera
    cv::triangulatePoints(T1,T2,pts_1,pts_2,pts_4d);

    // Convert to non-homogeneous coordinates
    for(int i = 0; i < pts_4d.cols; i++)
    {
        cv::Mat x = pts_4d.col(i);
        x /= x.at<float>(3,0); // normalize by the homogeneous coordinate
        cv::Point3d p(x.at<float>(0,0), x.at<float>(1,0), x.at<float>(2,0));
        points.push_back(p);
    }
}
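To see what triangulation recovers for a single pair, the depths can also be solved directly from the relation s2 * x2 = s1 * R * x1 + t, where x1 and x2 are normalized coordinates. The sketch below is a swapped-in technique, not what cv::triangulatePoints does internally: it solves the two unknown depths by least squares on the 3x2 system [R*x1, -x2] * (s1, s2)^T = -t. The names `Depths` and `solveDepths` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

struct Depths {
    double s1;  // depth of the point in the first camera frame
    double s2;  // depth of the point in the second camera frame
};

Vec3 multiply(const Mat3& m, const Vec3& v) {
    Vec3 out{};
    for (int r = 0; r < 3; ++r) {
        out[r] = m[r][0] * v[0] + m[r][1] * v[1] + m[r][2] * v[2];
    }
    return out;
}

double dot(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Least-squares solution of s1 * (R * x1) - s2 * x2 = -t via the 2x2 normal
// equations. x1 and x2 are homogeneous normalized coordinates (x, y, 1).
Depths solveDepths(const Vec3& x1, const Vec3& x2, const Mat3& R, const Vec3& t) {
    const Vec3 a = multiply(R, x1);   // first column of A; second column is -x2
    const double aa = dot(a, a);
    const double ab = -dot(a, x2);
    const double bb = dot(x2, x2);
    const double ra = -dot(a, t);     // A^T * (-t), first entry
    const double rb = dot(x2, t);     // A^T * (-t), second entry
    const double det = aa * bb - ab * ab;
    // det is zero when the two rays are parallel, i.e. there is no parallax.
    assert(std::fabs(det) > 1e-12);
    return {(ra * bb - ab * rb) / det, (aa * rb - ab * ra) / det};
}
```

The 3D point in the first camera frame is then s1 * x1. Because t from the essential matrix is only known up to scale, depths obtained this way share that unknown scale.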

4.3 CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(triangulation)

set(CMAKE_BUILD_TYPE "Release")

set( CMAKE_CXX_STANDARD 14)

# Add the cmake modules needed to use g2o

#list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

find_package(OpenCV 3.1 REQUIRED)

# find_package( OpenCV REQUIRED ) # use this if in OpenCV2

include_directories("/usr/include/eigen3")

include_directories(${OpenCV_INCLUDE_DIRS})

add_executable(triangulation src/triangulation.cpp)

target_link_libraries(triangulation ${OpenCV_LIBRARIES})

4.4 Output

/home/bupo/my_study/slam14/slam14_my/cap7/triangulation/cmake-build-debug/triangulation

读取src文件下的图片

[ INFO:0] Initialize OpenCL runtime...

-- Max dist : 95.000000

-- Min dist : 7.000000

一共找到了81组匹配点

fundamental_matrix is

[5.435453065936294e-06, 0.0001366043242989641, -0.02140890086948122;

-0.0001321142229824704, 2.339475702778057e-05, -0.006332906454396256;

0.02107630352202776, -0.00366683395295285, 1]

essential_matrix is

[0.01724015832721737, 0.3280543357941316, 0.04737477831442286;

-0.3243229585962945, 0.03292958445202431, -0.6262554366073029;

-0.005885857752317864, 0.6253830041920339, 0.01531678649092664]

homography_matrix is

[0.91317517918067, -0.1092435315821776, 29.95860009981271;

0.02223560352310949, 0.9826008005061946, 6.50891083956826;

-0.0001001560381023939, 0.0001037779436396116, 1]

R is

[0.9985534106102478, -0.05339308467584829, 0.006345444621109364;

0.05321959721496342, 0.9982715997131746, 0.02492965459802013;

-0.007665548311698023, -0.02455588961730218, 0.9996690690694515]

t is

[-0.8829934995085557;

-0.05539655431450295;

0.4661048182498381]

point in the first camera frame: [-0.0136303, -0.302687]

point projected from 3D [-0.0133075, -0.300258], d=66.0186

point in the second camera frame: [-0.00403148, -0.270058]

point reprojected from second frame: [-0.004225950933477142, -0.2724864380718128, 1]

point in the first camera frame: [-0.153772, -0.0742802]

point projected from 3D [-0.153775, -0.0744559], d=21.0728

point in the second camera frame: [-0.180649, -0.0589251]

point reprojected from second frame: [-0.1806548929627975, -0.05875349311330996, 1]

... (again there should be 81 outputs here; they are omitted and only three are kept for reference)

point in the first camera frame: [0.111923, 0.146583]

point projected from 3D [0.111961, 0.146161], d=13.3843

point in the second camera frame: [0.0431343, 0.167215]

point reprojected from second frame: [0.04307433511399977, 0.1676208349311905, 1]

进程已结束,退出代码0

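5 Solving PnP

5.1 Preface

The required files and my project are available here: link: https://pan.baidu.com/s/1NJ-hd2O2Jo8RhcH90iXv-A

Extraction code: xwc3

The comments follow the article "14 Lectures on SLAM, ch7 (2): Pose estimation (with annotated code for 2D-2D, 3D-2D, 3D-3D, and triangulation)".

5.2 pose_estimation_3d2d.cpp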

// Created by nnz on 2022/4/5
// [Listing truncated in this copy: the #include lines, main(), and the opening of find_feature_matches() are missing.]
    // Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );
    // Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );
    cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");

    //-- Step 1: detect the Oriented FAST corner positions
    detector->detect(img_1, keypoints_1);
    detector->detect(img_2, keypoints_2);

    //-- Step 2: compute BRIEF descriptors at the corner positions
    descriptor->compute(img_1, keypoints_1, descriptors_1);
    descriptor->compute(img_2, keypoints_2, descriptors_2);

    //-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance
    vector<cv::DMatch> match;
    // BFMatcher matcher ( NORM_HAMMING );
    matcher->match(descriptors_1, descriptors_2, match);

    //-- Step 4: filter the matched pairs
    double min_dist = 10000, max_dist = 0;

    // Find the minimum and maximum distance over all matches,
    // i.e. the distances of the most similar and the least similar pairs
    for (int i = 0; i < descriptors_1.rows;i++)
    {
        double dist = match[i].distance;
        if(dist > max_dist) max_dist = dist;
        if(dist < min_dist) min_dist = dist;
    }

    printf("-- Max dist : %f \n", max_dist);
    printf("-- Min dist : %f \n", min_dist);

    // A match is treated as wrong when its descriptor distance exceeds twice the minimum distance.
    // The minimum can be very small, so the empirical value 30 is used as a lower bound.
    for ( int i = 0; i < descriptors_1.rows; i++ )
    {
        if ( match[i].distance <= max ( 2*min_dist, 30.0 ))
        {
            matches.push_back ( match[i] );
        }
    }
}

// Convert pixel coordinates to camera coordinates (the result is a 2D point holding only x and y in the camera frame)
cv::Point2d pixel2cam(const cv::Point2d& p, const cv::Mat& K)
{
    return cv::Point2d(
        ((p.x - K.at<double>(0,2))/K.at<double>(0,0)),
        ((p.y - K.at<double>(1,2))/K.at<double>(1,1))
    );
}

// BA by Gauss-Newton: hand-written Gauss-Newton pose optimization
void bundleAdjustmentGaussNewton(const VecVector3d& points_3d,
                                 const VecVector2d& points_2d,
                                 const cv::Mat & K,
                                 Sophus::SE3d& pose)
{
    typedef Eigen::Matrix<double, 6, 1> Vector6d;
    const int iters = 10; // number of iterations
    double cost = 0, lastcost = 0; // cost (objective) function

    // Extract the intrinsics
    double fx = K.at<double>(0,0);
    double fy = K.at<double>(1,1);
    double cx = K.at<double>(0,2);
    double cy = K.at<double>(1,2);

    // Iterate
    for (int iter = 0; iter < iters; iter++)
    {
        Eigen::Matrix<double,6,6> H = Eigen::Matrix<double,6,6>::Zero(); // initialize H
        Vector6d b = Vector6d::Zero(); // initialize b

        cost = 0;
        // Loop over all feature points and accumulate the cost
        for(size_t i=0;i<points_3d.size();i++)
        {
            Eigen::Vector3d pc=pose*points_3d[i]; // 3D point in the frame of camera 2 under the current pose estimate
            double inv_z=1.0/pc[2]; // 1/z of that point
            double inv_z2 = inv_z * inv_z; // (1/z)^2

            // Projection
            Eigen::Vector2d proj(fx * pc[0] / pc[2] + cx, fy * pc[1] / pc[2] + cy);

            // Reprojection error
            Eigen::Vector2d e=points_2d[i]-proj;
            cost += e.squaredNorm(); // cost += e^T * e

            // Jacobian J of the error with respect to the pose perturbation
            Eigen::Matrix<double, 2, 6> J;
            J << -fx * inv_z,
                 0,
                 fx * pc[0] * inv_z2,
                 fx * pc[0] * pc[1] * inv_z2,
                 -fx - fx * pc[0] * pc[0] * inv_z2,
                 fx * pc[1] * inv_z,
                 0,
                 -fy * inv_z,
                 fy * pc[1] * inv_z2,
                 fy + fy * pc[1] * pc[1] * inv_z2,
                 -fy * pc[0] * pc[1] * inv_z2,
                 -fy * pc[0] * inv_z;

            H += J.transpose() * J;
            b += -J.transpose() * e;
        }

        // The inner loop has accumulated H and b for this iteration; now solve for the update
        Vector6d dx; // incremental equation H * dx = b (book p. 129, eq. 6.33)
        dx = H.ldlt().solve(b); // solve for the increment dx

        // Check that dx is a valid number
        if(isnan(dx[0]))
        {
            cout << "result is nan!" << endl;
            break;
        }

        // After the first iteration, cost >= lastcost means the previous update made things worse, so stop
        if(iter > 0 && cost >= lastcost)
        {
            // cost increase, update is not good
            cout << "cost: " << cost << ", last cost: " << lastcost << endl;
            break;
        }

        // Update the pose with dx
        pose = Sophus::SE3d::exp(dx) * pose; // dx is a Lie algebra element; exp maps it to the Lie group
        lastcost=cost;

        // std::setprecision(12) prints floating-point values with 12 significant digits
        cout << "iteration " << iter << " cost="<< std::setprecision(12) << cost << endl;

        // Stop as well once the update is tiny
        if (dx.norm() < 1e-6)
        {
            // converge
            break;
        }
    }

    cout<<"pose by g-n \n"<<pose.matrix()<<endl;
}
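The structure of the loop above (accumulate H = sum of J^T J and b = -sum of J^T e, solve H * dx = b, apply the update, stop when dx is tiny) can be exercised without Eigen or Sophus on a reduced problem. The sketch below is a simplification and not the author's function: it assumes the rotation is known to be the identity and refines only the translation, so J is the 2x3 block formed by the first three columns of the Jacobian above. The names `Intrinsics`, `solve3` and `refineTranslation` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec2 = std::array<double, 2>;
using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

struct Intrinsics { double fx, fy, cx, cy; };

// Pinhole projection of a point given in the camera frame.
Vec2 project(const Vec3& pc, const Intrinsics& K) {
    return {K.fx * pc[0] / pc[2] + K.cx, K.fy * pc[1] / pc[2] + K.cy};
}

double det3(const Mat3& m) {
    return m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
         - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
         + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]);
}

// Solve the 3x3 system H * dx = b with Cramer's rule.
Vec3 solve3(const Mat3& H, const Vec3& b) {
    const double d = det3(H);
    assert(std::fabs(d) > 1e-12);
    Vec3 dx{};
    for (int c = 0; c < 3; ++c) {
        Mat3 Hc = H;
        for (int r = 0; r < 3; ++r) Hc[r][c] = b[r];
        dx[c] = det3(Hc) / d;
    }
    return dx;
}

// Gauss-Newton refinement of a pure translation t (rotation assumed identity).
Vec3 refineTranslation(const std::vector<Vec3>& pts3d, const std::vector<Vec2>& pts2d,
                       const Intrinsics& K, Vec3 t, int iters = 10) {
    assert(pts3d.size() == pts2d.size());
    for (int iter = 0; iter < iters; ++iter) {
        Mat3 H{};
        Vec3 b{};
        for (std::size_t i = 0; i < pts3d.size(); ++i) {
            const Vec3 pc = {pts3d[i][0] + t[0], pts3d[i][1] + t[1], pts3d[i][2] + t[2]};
            const double inv_z = 1.0 / pc[2];
            const double inv_z2 = inv_z * inv_z;
            const Vec2 proj = project(pc, K);
            const Vec2 e = {pts2d[i][0] - proj[0], pts2d[i][1] - proj[1]};
            // Derivative of the error with respect to the translation.
            const double J[2][3] = {{-K.fx * inv_z, 0.0, K.fx * pc[0] * inv_z2},
                                    {0.0, -K.fy * inv_z, K.fy * pc[1] * inv_z2}};
            for (int r = 0; r < 3; ++r) {
                for (int c = 0; c < 3; ++c) H[r][c] += J[0][r] * J[0][c] + J[1][r] * J[1][c];
                b[r] -= J[0][r] * e[0] + J[1][r] * e[1];
            }
        }
        const Vec3 dx = solve3(H, b);
        for (int k = 0; k < 3; ++k) t[k] += dx[k];
        if (std::sqrt(dx[0] * dx[0] + dx[1] * dx[1] + dx[2] * dx[2]) < 1e-10) break;
    }
    return t;
}
```

Extending this to the full 6-DoF case means adding the three rotation columns of J and replacing the additive update with the exp(dx) * pose update used in the function above.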

// Optimize the pose with g2o
void bundleAdjustmentG2O(const VecVector3d& points_3d,
                         const VecVector2d& points_2d,
                         const cv::Mat & K,
                         Sophus::SE3d &pose)
{
    // Build the graph optimization problem; set up g2o first
    typedef g2o::BlockSolver<g2o::BlockSolverTraits<6,3>> Block; // pose dimension 6, landmark dimension 3
    Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver
    Block* solver_ptr = new Block (unique_ptr<Block::LinearSolverType>(linearSolver)); // block solver

    // Optimization algorithm: choose among GN, LM, DogLeg
    g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(unique_ptr<Block>(solver_ptr));
    g2o::SparseOptimizer optimizer; // graph model
    optimizer.setAlgorithm(solver); // set the solver (Levenberg-Marquardt here)
    optimizer.setVerbose(false); // disable debug output

    // Add the vertex
    Vertexpose *v=new Vertexpose();
    v->setEstimate(Sophus::SE3d());
    v->setId(0);
    optimizer.addVertex(v);

    // K
    Eigen::Matrix3d K_eigen;
    K_eigen <<
        K.at<double>(0, 0), K.at<double>(0, 1), K.at<double>(0, 2),
        K.at<double>(1, 0), K.at<double>(1, 1), K.at<double>(1, 2),
        K.at<double>(2, 0), K.at<double>(2, 1), K.at<double>(2, 2);

    // Add the edges
    int index=1;
    for(size_t i=0;i<points_2d.size();++i)
    {
        // Take out the 3D point and its pixel observation
        auto p2d = points_2d[i];
        auto p3d = points_3d[i];
        EdgeProjection *edge = new EdgeProjection(p3d, K_eigen); // parameterized constructor
        edge->setId(index);
        edge->setVertex(0,v);
        edge->setMeasurement(p2d); // measurement: pixel position of the matched feature in image 2
        edge->setInformation(Eigen::Matrix2d::Identity()); // 2x2 information matrix, because the error is 2D
        optimizer.addEdge(edge); // add the edge
        index++; // next edge id
    }

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    optimizer.setVerbose(false);
    optimizer.initializeOptimization(); // initialize
    optimizer.optimize(10); // number of iterations
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);
    cout << "optimization costs time: " << time_used.count() << " seconds." << endl;
    cout << "pose estimated by g2o =\n" << v->estimate().matrix() << endl;
    pose = v->estimate();
}

void bundleAdjustment(const vector<cv::Point3f> points_3d,
                      const vector<cv::Point2f> points_2d,
                      const cv::Mat& K,
                      cv::Mat& R, cv::Mat& t)
{
    // Initialize g2o
    typedef g2o::BlockSolver<g2o::BlockSolverTraits<6,3>> Block; // pose dimension 6, landmark dimension 3
    Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver
    Block* solver_ptr = new Block (unique_ptr<Block::LinearSolverType>(linearSolver)); // block solver
    g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(unique_ptr<Block>(solver_ptr));
    g2o::SparseOptimizer optimizer; // graph model
    optimizer.setAlgorithm( solver ); // set the solver

    // Vertices: one for the camera pose, plus one per landmark position
    g2o::VertexSE3Expmap* pose = new g2o::VertexSE3Expmap(); // camera pose
    Eigen::Matrix3d R_mat;
    R_mat <<
        R.at<double> ( 0,0 ), R.at<double> ( 0,1 ), R.at<double> ( 0,2 ),
        R.at<double> ( 1,0 ), R.at<double> ( 1,1 ), R.at<double> ( 1,2 ),
        R.at<double> ( 2,0 ), R.at<double> ( 2,1 ), R.at<double> ( 2,2 );
    pose->setId(0);
    pose->setEstimate(g2o::SE3Quat(R_mat, Eigen::Vector3d(t.at<double>(0,0), t.at<double>(1,0), t.at<double>(2,0))));
    optimizer.addVertex(pose);

    int index = 1;
    for (const cv::Point3f p:points_3d) // landmarks
    {
        g2o::VertexPointXYZ* point = new g2o::VertexPointXYZ();
        point->setId(index++);
        point->setEstimate(Eigen::Vector3d(p.x, p.y, p.z));
        point->setMarginalized(true); // g2o requires marginalization to be set here; see Lecture 10
        optimizer.addVertex(point);
    }

    // parameter: camera intrinsics
    g2o::CameraParameters* camera = new g2o::CameraParameters (
        K.at<double> ( 0,0 ), Eigen::Vector2d ( K.at<double> ( 0,2 ), K.at<double> ( 1,2 ) ), 0
    );
    camera->setId ( 0 );
    optimizer.addParameter ( camera );

    // edges
    index = 1;
    for ( const cv::Point2f p:points_2d )
    {
        g2o::EdgeProjectXYZ2UV* edge = new g2o::EdgeProjectXYZ2UV();
        edge->setId ( index );
        edge->setVertex ( 0, dynamic_cast<g2o::VertexPointXYZ*> ( optimizer.vertex ( index ) ) );
        edge->setVertex ( 1, pose );
        edge->setMeasurement ( Eigen::Vector2d ( p.x, p.y ) );
        edge->setParameterId ( 0,0 );
        // Information matrix: the inverse of the covariance matrix; identity means every edge has weight 1
        edge->setInformation ( Eigen::Matrix2d::Identity() ); // for background on the information matrix see http://www.cnblogs.com/gaoxiang12/p/5244828.html
        optimizer.addEdge ( edge );
        index++;
    }

    chrono::steady_clock::time_point t1 = chrono::steady_clock::now();
    optimizer.setVerbose ( false ); // debug output (per-iteration information) is switched off; pass true to enable it
    optimizer.initializeOptimization();
    optimizer.optimize ( 100 );
    chrono::steady_clock::time_point t2 = chrono::steady_clock::now();
    chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>> ( t2-t1 );
    cout<<"optimization costs time: "<<time_used.count() <<" seconds."<<endl;

    cout<<endl<<"after optimization:"<<endl;
    cout<<"T="<<endl<<Eigen::Isometry3d ( pose->estimate() ).matrix() <<endl;
}

5.3 CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(pose_estimation_3d2d)

set( CMAKE_CXX_STANDARD 14)

#SET(G2O_LIBS g2o_cli g2o_ext_freeglut_minimal g2o_simulator g2o_solver_slam2d_linear g2o_types_icp g2o_types_slam2d g2o_core g2o_interface g2o_solver_csparse g2o_solver_structure_only g2o_types_sba g2o_types_slam3d g2o_csparse_extension g2o_opengl_helper g2o_solver_dense g2o_stuff g2o_types_sclam2d g2o_parser g2o_solver_pcg g2o_types_data g2o_types_sim3 cxsparse )

set( CMAKE_BUILD_TYPE Release )

# Add the cmake modules needed to use g2o

list( APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules )

find_package(OpenCV 3.1 REQUIRED)

include_directories(${OpenCV_INCLUDE_DIRS})

include_directories("/usr/include/eigen3")

find_package(G2O REQUIRED)

include_directories(${G2O_INCLUDE_DIRECTORIES})

find_package(CSparse REQUIRED)

include_directories(${CSPARSE_INCLUDE_DIR})

find_package(Sophus REQUIRED)

include_directories(${Sophus_INCLUDE_DIRS})

add_executable(pose_estimation_3d2d src/pose_estimation_3d2d.cpp)

target_link_libraries( pose_estimation_3d2d

${OpenCV_LIBS}

g2o_core g2o_stuff g2o_types_sba g2o_csparse_extension

g2o_types_slam3d

#${G2O_LIBS}

${CSPARSE_LIBRARY}

${Sophus_LIBRARIES} fmt

)

5.4 Run results

/home/bupo/shenlan/zuoye/cap7/pose_estimation_3d2d/cmake-build-debug/pose_estimation_3d2d 1.png 2.png 1_depth.png 2_depth.png

[ INFO:0] Initialize OpenCL runtime...

-- Max dist : 95.000000

-- Min dist : 7.000000

一共找到了81组匹配点

3d-2d pairs: 77

使用cv求解 位姿

***********************************opencv***********************************

solve pnp in opencv cost time: 0.00274987 seconds.

R=

[0.9979193252225089, -0.05138618904650331, 0.03894200717386666;

0.05033852907733834, 0.9983556574295412, 0.02742286944793203;

-0.04028712992734059, -0.02540552801469367, 0.9988651091656532]

t=

[-0.1255867099750398;

-0.007363525258777434;

0.06099926588678889]

calling bundle adjustment

optimization costs time: 0.0012403 seconds.

after optimization:

T=

0.997841 -0.0518393 0.0403291 -0.127516

0.0507013 0.9983 0.028745 -0.00947167

-0.0417507 -0.0266382 0.998773 0.0595037

0 0 0 1

***********************************opencv***********************************

手写高斯牛顿优化位姿

***********************************手写高斯牛顿***********************************

iteration 0 cost=44765.3537799

iteration 1 cost=431.695366816

iteration 2 cost=319.560037493

iteration 3 cost=319.55886789

pose by g-n

0.997919325221 -0.0513861890122 0.0389420072614 -0.125586710123

0.0503385290405 0.998355657431 0.0274228694545 -0.00736352527141

-0.0402871300142 -0.0254055280183 0.998865109162 0.0609992659219

0 0 0 1

solve pnp by gauss newton cost time: 0.000125385 seconds.

R =

0.997919325221 -0.0513861890122 0.0389420072614

0.0503385290405 0.998355657431 0.0274228694545

-0.0402871300142 -0.0254055280183 0.998865109162

t = -0.125586710123 -0.00736352527141 0.0609992659219

***********************************手写高斯牛顿***********************************

g2o优化位姿

***********************************g2o***********************************

optimization costs time: 0.000207278 seconds.

pose estimated by g2o =

0.997919325223 -0.0513861890471 0.0389420071732 -0.125586709974

0.0503385290779 0.998355657429 0.0274228694493 -0.007363525261

-0.0402871299268 -0.0254055280161 0.998865109166 0.0609992658862

0 0 0 1

solve pnp by g2o cost time: 0.000303667 seconds.

***********************************g2o***********************************

进程已结束,退出代码0

5.5 Problems encountered (starting from the first-edition code)

- First, changes to CMakeLists.txt

- Most of the code needs to move to C++14, so change

set( CMAKE_CXX_FLAGS "-std=c++11 -O3" )

to:

set( CMAKE_CXX_STANDARD 14)

Next comes a g2o linking problem, which produces the error FAILED: pose_estimation_3d2d /usr/bin/ld: CMakeFiles/pose_estimation_3d2d.dir/src/pose_estimation_3d2d.cpp.o: undefined reference to symbol '_ZTVN3g2o14VertexPointXYZE' //usr/local/lib/libg2o_types_slam3d.so: 无法添加符号: DSO missing from command line collect2: error: ld returned 1 exit status ninja: build stopped: subcommand failed.

"DSO missing" here basically means that a library was not linked, so the symbol could not be resolved. The safest approach is to define all the g2o libraries as G2O_LIBS near the top of the file:

SET(G2O_LIBS g2o_cli g2o_ext_freeglut_minimal g2o_simulator g2o_solver_slam2d_linear g2o_types_icp g2o_types_slam2d g2o_core g2o_interface g2o_solver_csparse g2o_solver_structure_only g2o_types_sba g2o_types_slam3d g2o_csparse_extension g2o_opengl_helper g2o_solver_dense g2o_stuff g2o_types_sclam2d g2o_parser g2o_solver_pcg g2o_types_data g2o_types_sim3 cxsparse )

and then add ${G2O_LIBS} to the corresponding link command:

target_link_libraries( pose_estimation_3d2d

${OpenCV_LIBS}

g2o_core g2o_stuff g2o_types_sba g2o_csparse_extension ${G2O_LIBS}

${CSPARSE_LIBRARY}

)

You do not actually need to link all of them, and doing so also slows the build. Skipping the SET and adding only g2o_types_slam3d is enough; the build prints warnings, but the program runs:

target_link_libraries( pose_estimation_3d2d

${OpenCV_LIBS}

g2o_core g2o_stuff g2o_types_sba g2o_csparse_extension g2o_types_slam3d#${G2O_LIBS}

${CSPARSE_LIBRARY}

)

- Second, the .cpp file

- This is a problem I had met before: the g2o version. Newer versions require smart pointers. Section 3.1 of my earlier post ([14 Lectures on Visual SLAM, 2nd Edition] [Textbook example code] [Lecture 6: Nonlinear optimization]) covers the fix. In the

bundleAdjustment function, change

// Initialize g2o

typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose dimension 6, landmark dimension 3

Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver

Block* solver_ptr = new Block ( linearSolver ); // block solver

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( solver_ptr );

to:

// Initialize g2o

typedef g2o::BlockSolver< g2o::BlockSolverTraits<6,3> > Block; // pose dimension 6, landmark dimension 3

Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver

Block* solver_ptr = new Block ( unique_ptr<Block::LinearSolverType>(linearSolver) ); // block solver

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg ( unique_ptr<Block>(solver_ptr) );

- In addition, newer g2o versions replace

g2o::VertexSBAPointXYZ with g2o::VertexPointXYZ (used above together with g2o::EdgeProjectXYZ2UV) - Fix reference: "Adjusting the ch7 code of 14 Lectures on SLAM (undefined reference to symbol)"

- After writing the function that runs BA with g2o (that is, the

bundleAdjustmentG2O() function), the following error appeared:

FAILED: pose_estimation_3d2d

: && /usr/bin/c++ -g -rdynamic CMakeFiles/pose_estimation_3d2d.dir/src/pose_estimation_3d2d.cpp.o -o pose_estimation_3d2d -Wl,-rpath,/usr/local/lib /usr/local/lib/libopencv_dnn.so.3.4.1 /usr/local/lib/libopencv_ml.so.3.4.1 /usr/local/lib/libopencv_objdetect.so.3.4.1 /usr/local/lib/libopencv_shape.so.3.4.1 /usr/local/lib/libopencv_stitching.so.3.4.1 /usr/local/lib/libopencv_superres.so.3.4.1 /usr/local/lib/libopencv_videostab.so.3.4.1 /usr/local/lib/libopencv_viz.so.3.4.1 -lg2o_core -lg2o_stuff -lg2o_types_sba -lg2o_csparse_extension -lg2o_cli -lg2o_ext_freeglut_minimal -lg2o_simulator -lg2o_solver_slam2d_linear -lg2o_types_icp -lg2o_types_slam2d -lg2o_core -lg2o_interface -lg2o_solver_csparse -lg2o_solver_structure_only -lg2o_types_sba -lg2o_types_slam3d -lg2o_csparse_extension -lg2o_opengl_helper -lg2o_solver_dense -lg2o_stuff -lg2o_types_sclam2d -lg2o_parser -lg2o_solver_pcg -lg2o_types_data -lg2o_types_sim3 -lcxsparse -lcxsparse -lfmt /usr/local/lib/libopencv_calib3d.so.3.4.1 /usr/local/lib/libopencv_features2d.so.3.4.1 /usr/local/lib/libopencv_flann.so.3.4.1 /usr/local/lib/libopencv_highgui.so.3.4.1 /usr/local/lib/libopencv_photo.so.3.4.1 /usr/local/lib/libopencv_video.so.3.4.1 /usr/local/lib/libopencv_videoio.so.3.4.1 /usr/local/lib/libopencv_imgcodecs.so.3.4.1 /usr/local/lib/libopencv_imgproc.so.3.4.1 /usr/local/lib/libopencv_core.so.3.4.1 -lg2o_core -lg2o_types_sba -lg2o_csparse_extension -lg2o_cli -lg2o_ext_freeglut_minimal -lg2o_simulator -lg2o_solver_slam2d_linear -lg2o_types_icp -lg2o_types_slam2d -lg2o_interface -lg2o_solver_csparse -lg2o_solver_structure_only -lg2o_types_slam3d -lg2o_opengl_helper -lg2o_solver_dense -lg2o_types_sclam2d -lg2o_parser -lg2o_solver_pcg -lg2o_types_data -lg2o_types_sim3 -lcxsparse -lcxsparse -lfmt && :

CMakeFiles/pose_estimation_3d2d.dir/src/pose_estimation_3d2d.cpp.o: In function `ceres::internal::FixedArray<double, 6ul, std::allocator<double> >::operator[](unsigned long)':

/usr/local/include/ceres/internal/fixed_array.h:215: undefined reference to `google::LogMessageFatal::LogMessageFatal(char const*, int, google::CheckOpString const&)'

/usr/local/include/ceres/internal/fixed_array.h:215: undefined reference to `google::LogMessage::stream()'

/usr/local/include/ceres/internal/fixed_array.h:215: undefined reference to `google::LogMessageFatal::~LogMessageFatal()'

/usr/local/include/ceres/internal/fixed_array.h:215: undefined reference to `google::LogMessageFatal::~LogMessageFatal()'

CMakeFiles/pose_estimation_3d2d.dir/src/pose_estimation_3d2d.cpp.o: In function `std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >* google::MakeCheckOpString<unsigned long, unsigned long>(unsigned long const&, unsigned long const&, char const*)':

/usr/include/glog/logging.h:692: undefined reference to `google::base::CheckOpMessageBuilder::CheckOpMessageBuilder(char const*)'

/usr/include/glog/logging.h:694: undefined reference to `google::base::CheckOpMessageBuilder::ForVar2()'

/usr/include/glog/logging.h:695: undefined reference to `google::base::CheckOpMessageBuilder::NewString[abi:cxx11]()'

/usr/include/glog/logging.h:692: undefined reference to `google::base::CheckOpMessageBuilder::~CheckOpMessageBuilder()'

/usr/include/glog/logging.h:692: undefined reference to `google::base::CheckOpMessageBuilder::~CheckOpMessageBuilder()'

collect2: error: ld returned 1 exit status

ninja: build stopped: subcommand failed.

- Setting the build type to Release instead of Debug in CMakeLists.txt resolves this link error:

set( CMAKE_BUILD_TYPE Release )

- Reference for this fix: the post "对‘google::LogMessage::stream()’未定义的引用" (undefined reference to `google::LogMessage::stream()').

- Next, a problem that showed up at run time

Error: ./pose_estimation_3d2d: error while loading shared libraries: libg2o_opengl_helper.so: cannot open shared object file: No such file or directory

Fix: open the dynamic linker configuration file

sudo gedit /etc/ld.so.conf

Add the following line so that the loader searches the directory where g2o was installed:

/usr/local/lib

Then refresh the linker cache:

sudo ldconfig

Reference:

error while loading shared libraries: libg2o_core.so: cannot open shared object file: No such file o
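Returning to the link error at the start of this subsection: a plausible explanation for why Release helps (my assumption, the referenced post does not spell it out) is that the failing line in Ceres' fixed_array.h is a debug-only glog check. CMake's Release flags define NDEBUG, and such checks are then compiled out, so no glog symbols are needed. The sketch below imitates that pattern with a hypothetical SKETCH_DCHECK_LT macro; it is not glog's implementation, only an illustration of the mechanism. If this explanation is right, linking glog explicitly should also fix the Debug build.

```cpp
#include <cassert>
#include <cstddef>

// Counts how many debug checks actually executed (illustration only).
static int g_checks_run = 0;

// Hypothetical stand-in for a debug-only check macro. It is NOT glog's DCHECK;
// it only mimics the "removed when NDEBUG is defined" behaviour.
#ifdef NDEBUG
#define SKETCH_DCHECK_LT(a, b) ((void)0)
#else
#define SKETCH_DCHECK_LT(a, b) \
  do {                         \
    ++g_checks_run;            \
    assert((a) < (b));         \
  } while (0)
#endif

// Element access in the style of a fixed-size array wrapper.
double element_at(const double* data, std::size_t size, std::size_t i) {
  (void)size;                 // silences "unused" warnings when the check is removed
  SKETCH_DCHECK_LT(i, size);  // present in Debug builds, gone in Release builds
  return data[i];
}

// Number of checks one access is expected to execute in the current build.
int expected_checks_per_access() {
#ifdef NDEBUG
  return 0;
#else
  return 1;
#endif
}
```

In a Debug build each call to element_at runs the check (and would need whatever library implements it); in a Release build the call reduces to a plain array read.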

5.6 Takeaways

- Using g2o here requires getting not only the G2O library right but also the CSparse library.

6 3D-3D:ICP

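6.1 Preface

- My complete project, free to download: link: https://pan.baidu.com/s/1TWpopyomxm1Oev44alHOIQ extraction code: hi2r

- The cmake_modules folder that the project requires: link: https://pan.baidu.com/s/1e0KAzr5Fz7B7ksAkSYle8A extraction code: fisg

- This section draws on the post: SLAM十四讲-ch7(2)-位姿估计(包含2d-2d、3d-2d、3d-3d、以及三角化实现代码的注释) (pose estimation covering 2D-2D, 3D-2D, 3D-3D, and triangulation, with annotated code)

- The two RGB images and their corresponding depth maps: link: https://pan.baidu.com/s/1Pi0c0IynCU5bpG2QJGEGcg extraction code: ana1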

6.2 pose_estimation_3d3d.cpp

//

// Created by czy on 2022/4/6.

//

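// Headers used by the code in this listing (main() is not shown here)

#include <iostream>

#include <cstdio>

#include <algorithm>

#include <chrono>

#include <vector>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <Eigen/Core>

#include <Eigen/Dense>

#include <Eigen/SVD>

#include <g2o/core/base_vertex.h>

#include <g2o/core/base_unary_edge.h>

#include <g2o/core/block_solver.h>

#include <g2o/core/sparse_optimizer.h>

#include <g2o/core/optimization_algorithm_gauss_newton.h>

#include <g2o/solvers/csparse/linear_solver_csparse.h>

#include <sophus/se3.hpp>

using namespace std;

// Detect ORB features in both images and return the filtered matches

void find_feature_matches(const cv::Mat &img_1, const cv::Mat &img_2,

vector<cv::KeyPoint> &keypoints_1,

vector<cv::KeyPoint> &keypoints_2,

vector<cv::DMatch> &matches) {

cv::Mat descriptors_1, descriptors_2;

// OpenCV 3 API

cv::Ptr<cv::FeatureDetector> detector = cv::ORB::create();

cv::Ptr<cv::DescriptorExtractor> descriptor = cv::ORB::create();

// OpenCV 2 API

// Ptr<FeatureDetector> detector = FeatureDetector::create ( "ORB" );

// Ptr<DescriptorExtractor> descriptor = DescriptorExtractor::create ( "ORB" );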

cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming");

//-- Step 1: detect Oriented FAST keypoints

detector->detect(img_1, keypoints_1);

detector->detect(img_2, keypoints_2);

//-- Step 2: compute BRIEF descriptors at the keypoint locations

descriptor->compute(img_1, keypoints_1, descriptors_1);

descriptor->compute(img_2, keypoints_2, descriptors_2);

//-- Step 3: match the BRIEF descriptors of the two images using the Hamming distance

vector<cv::DMatch> match;

// BFMatcher matcher ( NORM_HAMMING );

matcher->match(descriptors_1, descriptors_2, match);

//-- Step 4: filter the matches

double min_dist = 10000, max_dist = 0;

// Find the minimum and maximum distance over all matches, i.e. the distances of the most similar and the least similar pairs

for (int i = 0; i < descriptors_1.rows; i++) {

double dist = match[i].distance;

if (dist < min_dist) min_dist = dist;

if (dist > max_dist) max_dist = dist;

}

printf("-- Max dist : %f \n", max_dist);

printf("-- Min dist : %f \n", min_dist);

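// A match is rejected when its descriptor distance exceeds twice the minimum distance. Because the minimum can be very small, the empirical value 30 serves as a lower bound on the threshold.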

for (int i = 0; i < descriptors_1.rows; i++) {

if (match[i].distance <= max(2 * min_dist, 30.0)) {

matches.push_back(match[i]);

}

}

}

// Convert pixel coordinates to normalized camera coordinates (the result is a 2D point holding only x and y in the camera frame)

cv::Point2d pixel2cam(const cv::Point2d &p, const cv::Mat &K) {

return cv::Point2d

(

(p.x - K.at<double>(0, 2)) / K.at<double>(0, 0),

(p.y - K.at<double>(1, 2)) / K.at<double>(1, 1)

);

}

void pose_estimation_3d3d(const vector<cv::Point3f> &pts1,

const vector<cv::Point3f> &pts2,

cv::Mat &R, cv::Mat &t)

{

int N = pts1.size(); // number of matched 3D point pairs

cv::Point3f p1, p2; // centroids (accumulated as sums first)

for (int i = 0; i < N; i++)

{

p1+=pts1[i];

p2+=pts2[i]; // index with i: a fixed index such as pts2[2] would bias the centroid p2 and therefore t

}

p1 = cv::Point3f(cv::Vec3f(p1)/N); // divide the sums by N to get the centroids

p2 = cv::Point3f(cv::Vec3f(p2)/N);

vector<cv::Point3f> q1(N), q2(N);

for(int i = 0; i < N; i++)

{

// subtract the centroids

q1[i] = pts1[i] - p1;

q2[i] = pts2[i] - p2;

}

// Accumulate W = sum over i of q1_i * q2_i^T

Eigen::Matrix3d W = Eigen::Matrix3d::Zero(); // start from the zero matrix

for(int i = 0; i < N; i++)

{

W += Eigen::Vector3d(q1[i].x,q1[i].y,q1[i].z) * Eigen::Vector3d(q2[i].x,q2[i].y,q2[i].z).transpose();

}

cout << "W = " << endl << W << endl;

// SVD of W: W = U * Sigma * V^T

// Eigen::ComputeFullU: tells JacobiSVD to compute the full square matrix U

// Eigen::ComputeFullV: tells JacobiSVD to compute the full square matrix V

Eigen::JacobiSVD<Eigen::Matrix3d> svd(W,Eigen::ComputeFullU | Eigen::ComputeFullV);

Eigen::Matrix3d U = svd.matrixU(); // the U matrix

Eigen::Matrix3d V = svd.matrixV(); // the V matrix

cout << "U=" << U << endl;

cout << "V=" << V << endl;

Eigen::Matrix3d R_ = U * (V.transpose());

if(R_.determinant() < 0) // if det(R_) < 0, flip the sign of R_

{

R_ = -R_;

}

// translation vector: t = p1 - R * p2, using the two centroids

Eigen::Vector3d t_ = Eigen::Vector3d (p1.x,p1.y,p1.z) - R_ * Eigen::Vector3d(p2.x,p2.y,p2.z);

// Convert the Eigen R_ and t_ to cv::Mat

R = (cv::Mat_<double>(3,3) <<

R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2)

);

t = (cv::Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}

// To optimize with g2o, first define the vertex and edge types

// Vertex: the pose to be optimized, updated through a 6-dimensional Lie-algebra increment

class Vertexpose : public g2o::BaseVertex<6, Sophus::SE3d>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW; // gives heap-allocated instances the alignment that fixed-size Eigen members require

// Override setToOriginImpl: reset the estimate to the identity pose

virtual void setToOriginImpl() override

{

_estimate = Sophus::SE3d();

}

// Override oplusImpl: apply an increment to the pose (the variable being optimized)

virtual void oplusImpl(const double *update) override

{

Eigen::Matrix<double, 6,1> update_eigen; // the increment dx

update_eigen << update[0], update[1], update[2], update[3], update[4], update[5];

_estimate = Sophus::SE3d::exp(update_eigen) * _estimate; // update the pose: map the Lie-algebra increment to the Lie group with exp, then left-multiply

}

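// Disk I/O is not needed here; the stubs return false because nothing is read or written

virtual bool read(istream &in) override { return false; }

virtual bool write(ostream &out) const override { return false; }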

};

// Edge: a unary edge with a 3D error, attached to the pose vertex

class EdgeProjectXYZRGBD : public g2o::BaseUnaryEdge<3, Eigen::Vector3d, Vertexpose>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeProjectXYZRGBD(const Eigen::Vector3d &point) : _point(point) {} // stores the 3D point expressed in the frame of image 1

// compute the error

virtual void computeError() override

{

const Vertexpose *v = static_cast<const Vertexpose*>(_vertices[0]); // the pose vertex

_error = _measurement - v->estimate() * _point;

}

// compute the Jacobian

virtual void linearizeOplus() override

{

const Vertexpose *v = static_cast<const Vertexpose *>(_vertices[0]); // the pose vertex

Sophus::SE3d T = v->estimate(); // current pose estimate

Eigen::Vector3d xyz_trans = T * _point; // the point transformed into the frame of image 2

// see the textbook (2nd edition), p. 86 and p. 199

_jacobianOplusXi.block<3,3>(0,0) = -Eigen::Matrix3d::Identity();

_jacobianOplusXi.block<3,3>(0,3) = Sophus::SO3d::hat(xyz_trans);

}

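// Disk I/O is not needed here; the stubs return false because nothing is read or written

virtual bool read(istream &in) override { return false; }

virtual bool write(ostream &out) const override { return false; }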

protected:

Eigen::Vector3d _point;

};

// Estimate the pose with g2o

void bundleAdjustment(const vector<cv::Point3f> &pts1,

const vector<cv::Point3f> &pts2,

cv::Mat &R, cv::Mat &t)

{

// Build the graph optimization problem; set up g2o first

typedef g2o::BlockSolver<g2o::BlockSolverTraits<6,3>> Block; // pose dimension is 6, landmark dimension is 3

Block::LinearSolverType* linearSolver = new g2o::LinearSolverCSparse<Block::PoseMatrixType>(); // linear solver

Block* solver_ptr = new Block (unique_ptr<Block::LinearSolverType>(linearSolver)); // block solver

g2o::OptimizationAlgorithmGaussNewton* solver = new g2o::OptimizationAlgorithmGaussNewton(unique_ptr<Block>(solver_ptr));

g2o::SparseOptimizer optimizer; // graph model

optimizer.setAlgorithm(solver); // set the solver

optimizer.setVerbose(false); // no debug output

// add the vertex

Vertexpose *v = new Vertexpose();

v->setEstimate(Sophus::SE3d());

v->setId(0);

optimizer.addVertex(v);

// add the edges

int index = 1;

for (size_t i = 0; i < pts1.size();i++)

{

EdgeProjectXYZRGBD *edge = new EdgeProjectXYZRGBD(Eigen::Vector3d(pts1[i].x,pts1[i].y,pts1[i].z));

edge->setId(index); // edge id

edge->setVertex(0,v); // attach the pose vertex at slot 0

edge->setMeasurement(Eigen::Vector3d(pts2[i].x,pts2[i].y,pts2[i].z));

edge->setInformation(Eigen::Matrix3d::Identity()); // identity information matrix; it is 3x3 because the error is 3-dimensional

optimizer.addEdge(edge); // add the edge

index++; // next edge id

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

optimizer.setVerbose(false);

optimizer.initializeOptimization(); // initialize

optimizer.optimize(10); // maximum number of iterations

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast<chrono::duration<double>>(t2 - t1);

cout << "optimization costs time: " << time_used.count() << " seconds." << endl;

cout << endl << "after optimization:" << endl;

cout << "T=\n" << v->estimate().matrix() << endl;

// convert the pose to cv::Mat

Eigen::Matrix3d R_ = v->estimate().rotationMatrix();

Eigen::Vector3d t_ = v->estimate().translation();

R = (cv::Mat_<double>(3,3) << R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2));

t = (cv::Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

}
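The centroid and translation steps of pose_estimation_3d3d can be checked in isolation, without OpenCV or Eigen. The sketch below is a minimal illustration using plain std::array types; the names Vec3, Mat3, centroid, mul, and translation_from_centroids are mine, not from the post. It assumes the rotation R is already known (for example from the SVD step) and that the point sets are non-empty, and it recovers t = p1 - R * p2 from the two centroids.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

// Arithmetic mean of a non-empty point set; every pts[i] contributes exactly once.
Vec3 centroid(const std::vector<Vec3>& pts) {
  Vec3 c{0.0, 0.0, 0.0};
  for (std::size_t i = 0; i < pts.size(); ++i)
    for (int k = 0; k < 3; ++k) c[k] += pts[i][k];
  for (int k = 0; k < 3; ++k) c[k] /= static_cast<double>(pts.size());
  return c;
}

// Matrix-vector product R * v.
Vec3 mul(const Mat3& R, const Vec3& v) {
  Vec3 out{0.0, 0.0, 0.0};
  for (int r = 0; r < 3; ++r)
    for (int c = 0; c < 3; ++c) out[r] += R[r][c] * v[c];
  return out;
}

// t = centroid(pts1) - R * centroid(pts2), so that pts1[i] is approximately R * pts2[i] + t.
Vec3 translation_from_centroids(const std::vector<Vec3>& pts1,
                                const std::vector<Vec3>& pts2,
                                const Mat3& R) {
  const Vec3 p1 = centroid(pts1);
  const Vec3 Rp2 = mul(R, centroid(pts2));
  return Vec3{p1[0] - Rp2[0], p1[1] - Rp2[1], p1[2] - Rp2[2]};
}
```

Generating pts1 from pts2 with a known R and t and feeding both sets to translation_from_centroids should return the same t, which makes this a handy unit test for the centroid loop.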

6.3 CMakeLists.txt

cmake_minimum_required(VERSION 2.8)

project(pose_estimation_3d3d)

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_BUILD_TYPE Release )

list(APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules)

# eigen3

include_directories("/usr/include/eigen3")

# OpenCV 3

find_package(OpenCV 3.1 REQUIRED)

#g2o

find_package(G2O REQUIRED)

find_package(CSparse REQUIRED)

#Sophus

find_package(Sophus REQUIRED)

include_directories(

${OpenCV_INCLUDE_DIRS}

${G2O_INCLUDE_DIRS}

${CSPARSE_INCLUDE_DIR}

${Sophus_INCLUDE_DIRS}

)

add_executable(pose_estimation_3d3d src/pose_estimation_3d3d.cpp)

target_link_libraries(pose_estimation_3d3d

${OpenCV_LIBRARIES}

g2o_core g2o_stuff g2o_types_sba

g2o_csparse_extension

g2o_types_slam3d

${CSPARSE_LIBRARY}

${Sophus_LIBRARIES} fmt

)

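6.4 Output

- As p. 200 of the textbook explains, the R and t obtained here transform from the second image to the first, whereas the R and t in the earlier sections all transform from the first image to the second.

- I believe this direction can be changed in how the SVD solution is set up. I will try modifying it when I have time and then confirm.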
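Switching between the two directions is a matter of inverting the rigid transform: if x' = R x + t, then x = R^T x' - R^T t. The sketch below shows this with plain std::array types (RigidTransform and inverse are illustrative names, not from the post). Judging by the numbers, the values the log labels R^T and t^T match R^T and -R^T t of the SVD result.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

// Rigid transform x' = R * x + t (illustrative type, not from the post).
struct RigidTransform {
  Mat3 R;
  Vec3 t;
};

// Inverse of x' = R * x + t, namely x = R^T * x' - R^T * t.
RigidTransform inverse(const RigidTransform& T) {
  RigidTransform inv{};
  for (int r = 0; r < 3; ++r)
    for (int c = 0; c < 3; ++c) inv.R[r][c] = T.R[c][r];  // transpose of R
  for (int r = 0; r < 3; ++r) {
    inv.t[r] = 0.0;
    for (int c = 0; c < 3; ++c) inv.t[r] -= inv.R[r][c] * T.t[c];  // -R^T * t
  }
  return inv;
}
```

Applying inverse to the frame-2-to-frame-1 estimate from the SVD step yields a frame-1-to-frame-2 transform that can be compared directly with the g2o result.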

/home/bupo/shenlan/zuoye/cap7/pose_estimation_3d3d/cmake-build-release/pose_estimation_3d3d ./src/1.png ./src/2.png ./src/1_depth.png ./src/2_depth.png

[ INFO:0] Initialize OpenCL runtime...

-- Max dist : 95.000000

-- Min dist : 7.000000

Found 81 matched point pairs in total

3d-3d pairs: 57

************************************ICP 3d-3d by SVD****************************************

W =

10.4543 -0.546814 6.16332

-1.64047 3.245 1.42499

3.16335 -4.96179 -1.15376

U= 0.961894 0.268057 -0.0539041

-0.109947 0.559704 0.821367

0.250343 -0.784141 0.567848

V= 0.8824 -0.0914481 -0.461527

-0.17002 0.852669 -0.494013

0.438707 0.514386 0.736847

ICP via SVD results:

R = [0.8491402669281291, 0.09165187733023789, 0.5201545351749511;

-0.5272840804832376, 0.09016969161333116, 0.8448910729693515;

0.03053367894919912, -0.9917002370152983, 0.1248932918680087]

t = [-0.2341765323736505;

-2.435024883544938;

1.678413644815799]

R^T = [0.8491402669281291, -0.5272840804832376, 0.03053367894919912;

0.09165187733023789, 0.09016969161333116, -0.9917002370152983;

0.5201545351749511, 0.8448910729693515, 0.1248932918680087]

t^T = [-1.136349276840491;

1.905511371012304;

1.969516166693823]

************************************ICP 3d-3d by SVD****************************************

************************************ICP 3d-3d by g2o****************************************

optimization costs time: 0.000511787 seconds.

after optimization:

T=

0.849513 -0.526723 0.0298586 -0.142711

0.0919136 0.0920349 -0.991505 1.65126

0.5195 0.84504 0.126598 1.84464

0 0 0 1

R=

[0.8495126322540705, -0.5267226536295997, 0.02985856316290605;

0.09191361179951504, 0.09203490651701673, -0.9915046464582867;

0.5194999283991564, 0.8450401304883673, 0.1265977972062496]

t = [-0.1427112070493184, 1.651257756926735, 1.844644951727316]

Verify p2 = R*p1 + t

p1 = [-0.0374123, -0.830816, 2.7448]

p2 = [-0.00596015, -0.399253, 1.4784]

(R*p1+t) = [0.3450717715204448;

-1.15012703155679;

1.470622311290227]

p1 = [-0.243698, -0.117719, 1.5848]

p2 = [-0.280475, -0.0914872, 1.5526]

(R*p1+t) = [-0.2404107766432324;

0.0466877051455481;

1.819198314809955]

p1 = [-0.627753, 0.160186, 1.3396]

p2 = [-1.03421, 0.231768, 2.0712]

(R*p1+t) = [-0.7203702645515666;

0.2800818852018891;

1.823481775685739]

p1 = [-0.627221, 0.101454, 1.3116]

p2 = [-0.984101, 0.138962, 1.941]

(R*p1+t) = [-0.6898191354798927;

0.3024874237171442;

1.770581847254317]

p1 = [0.402045, -0.341821, 2.2068]

p2 = [0.376626, -0.261343, 2.2068]

(R*p1+t) = [0.4447679999908566;

-0.5313007260737734;

2.044030900480374]

************************************ICP 3d-3d by g2o****************************************

Process finished with exit code 0
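The verification at the end of the log prints p2 next to R*p1+t for a handful of pairs. A single number per pair, the distance between the two, is easier to scan. The following is a minimal sketch with plain std::array types (transform_point and residual_norm are illustrative names, not from the post), assuming R and t map frame 1 to frame 2 as in the g2o result.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<Vec3, 3>;  // row-major 3x3 matrix

// Apply a rigid transform: returns R * p + t.
Vec3 transform_point(const Mat3& R, const Vec3& t, const Vec3& p) {
  Vec3 out = t;
  for (int r = 0; r < 3; ++r)
    for (int c = 0; c < 3; ++c) out[r] += R[r][c] * p[c];
  return out;
}

// Euclidean distance between the measured p2 and the prediction R * p1 + t.
double residual_norm(const Mat3& R, const Vec3& t, const Vec3& p1, const Vec3& p2) {
  const Vec3 pred = transform_point(R, t, p1);
  double sum = 0.0;
  for (int k = 0; k < 3; ++k) sum += (p2[k] - pred[k]) * (p2[k] - pred[k]);
  return std::sqrt(sum);
}
```

For the second pair in the log, with the g2o R and t, this comes out at roughly 0.30 in the units of the point coordinates, most of it along z.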

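6.5 Errors

Thanks to the groundwork in the earlier sections, this project ran into few errors. For the CSparse warning, see my post 【解决问题】【SLAM十四讲第7讲】【关于自己创建工程,遇到的CSparse警告的解决方案】 (a fix for the CSparse warning that appears when creating your own project for Lecture 7).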

6.6 Takeaways

6.6.1 How to convert an Eigen matrix to a cv::Mat

Excerpt from 6.2:

//cv::Mat &R, cv::Mat &t

// convert the pose to cv::Mat

Eigen::Matrix3d R_ = v->estimate().rotationMatrix();

Eigen::Vector3d t_ = v->estimate().translation();

R = (cv::Mat_<double>(3,3) << R_(0, 0), R_(0, 1), R_(0, 2),

R_(1, 0), R_(1, 1), R_(1, 2),

R_(2, 0), R_(2, 1), R_(2, 2));

t = (cv::Mat_<double>(3, 1) << t_(0, 0), t_(1, 0), t_(2, 0));

6.6.2 How to convert a cv::Mat to an Eigen matrix

Excerpt from 5.2:

// const cv::Mat &K

Eigen::Matrix3d K_eigen;

K_eigen <<

K.at<double>(0, 0), K.at<double>(0, 1), K.at<double>(0, 2),

K.at<double>(1, 0), K.at<double>(1, 1), K.at<double>(1, 2),

K.at<double>(2, 0), K.at<double>(2, 1), K.at<double>(2, 2);

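- I also learned how to control whether g2o prints debug information:

optimizer.setVerbose(false); Passing true prints the debug information and false suppresses it. The output comes from the library itself, so you do not write it yourself; it includes per-iteration details such as timing.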