Implementing the InceptionV4 Model in PyTorch

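- Model overview
- The split-transform-merge model
- Basic InceptionV4 modules and source code
  - Convolution modules
  - InceptionA module
  - InceptionB module
  - InceptionC module
  - ReductionA module
  - ReductionB module
  - Stem module
- The InceptionV4 model and source code
- Complete source code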

Model overview

Paper: https://arxiv.org/pdf/1602.07261.pdf

Year proposed: 2016

split-transform-merge模型

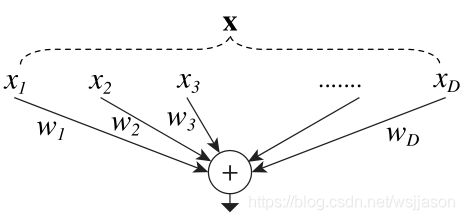

split-transform-merge模型即“分离-变换-合并”模型,顾名思义,该模型包括分离、变换和合并三步。

神经网络中的单个神经元(perceptron)就是最简单的split-transform-merge模型,其工作过程包括:将输入矢量x=[x1,x2,…,xD]分离为D个数值(split),各维矢量值与权值相乘(transform),累加求和(merge)。
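As a minimal sketch of the neuron described above (bias and activation are left out for brevity, and the input and weight values are purely illustrative), the three steps can be written in plain Python:

```python
def neuron(x, w):
    """A single neuron viewed as split-transform-merge (no bias, no activation)."""
    # split: walk over the D scalar components of x
    # transform: multiply each component by its weight
    products = [xi * wi for xi, wi in zip(x, w)]
    # merge: sum the weighted components
    return sum(products)


x = [1.0, 2.0, 3.0]   # illustrative input vector
w = [0.5, -1.0, 2.0]  # illustrative weights
y = neuron(x, w)
print(y)  # 0.5 - 2.0 + 6.0 = 4.5
```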

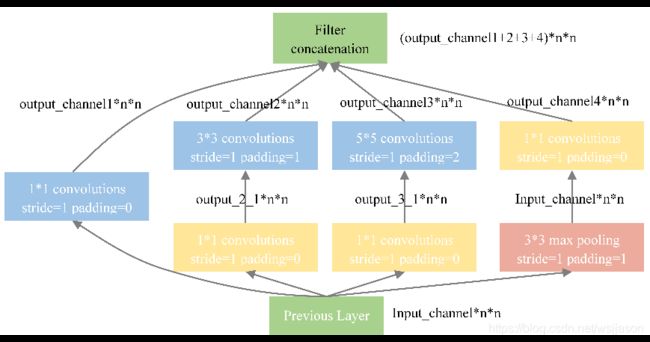

Inception系列的模型均属于split-transform-merge模型,其工作过程包括:将输入图片分为多个通道进行处理(split),各通道内的卷积、池化变换(transform),各通道的输出叠加得到最终输出(merge)。
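The same pattern can be sketched for feature maps. The block below is a hypothetical two-branch module (the class name and channel counts are made up for illustration and it is not one of the InceptionV4 modules); it only shows that both branches read the same input and that their outputs are joined with `torch.cat` along the channel dimension:

```python
import torch
import torch.nn as nn


class TinySplitTransformMerge(nn.Module):
    """Hypothetical two-branch block, for illustration only."""

    def __init__(self, in_channels):
        super().__init__()
        # branch 1: a padded 3x3 convolution (spatial size unchanged)
        self.branch_conv = nn.Conv2d(in_channels, 8, kernel_size=3, padding=1)
        # branch 2: average pooling followed by a 1x1 convolution
        self.branch_pool = nn.Sequential(
            nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
            nn.Conv2d(in_channels, 4, kernel_size=1),
        )

    def forward(self, x):
        a = self.branch_conv(x)   # split + transform
        b = self.branch_pool(x)   # split + transform
        return torch.cat([a, b], dim=1)  # merge along channels


x = torch.randn(2, 16, 10, 10)
out = TinySplitTransformMerge(16)(x)
print(out.shape)  # 8 + 4 = 12 output channels, spatial size unchanged
```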

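Basic InceptionV4 modules and source code

Convolution modules

Every convolution module in InceptionV4 consists of three parts: convolution, batch normalization, and activation. The model uses three types of convolution. The first has stride=2 and padding=0 and reduces the spatial size of the feature map. The second has stride=1 and padding=⌊kernel_size/2⌋ and extracts features without changing the spatial size (for odd kernel sizes). The third is an asymmetric convolution with kernel_size=(x, y), stride=1, and padding=(⌊x/2⌋, ⌊y/2⌋), which also extracts features without changing the spatial size. In the figures that follow, the three types are marked in red, green, and blue respectively. The source code is as follows: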

    import torch
    import torch.nn as nn

    # Conv type 1: stride=2, padding=0 (reduces the spatial size)
    def Conv1(input_channel, output_channel, kernel_size):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size, stride=2, padding=0),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )

    # Conv type 2: symmetric kernel, stride=1, padding=kernel_size//2
    def Conv2(input_channel, output_channel, kernel_size):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size, stride=1, padding=kernel_size//2),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )

    # Conv type 3: asymmetric kernel, kernel_size=(kernel_size1, kernel_size2),
    # stride=1, padding=(kernel_size1//2, kernel_size2//2)
    def Conv3(input_channel, output_channel, kernel_size1, kernel_size2):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size=(kernel_size1, kernel_size2),
                      stride=1, padding=(kernel_size1//2, kernel_size2//2)),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )
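To check the size claims for the three convolution types, the standard output-size formula for a convolution with dilation 1 can be evaluated by hand. The helper name `conv_out` and the input sizes below are illustrative choices, not part of the original code:

```python
def conv_out(size, kernel, stride, padding):
    """Output length along one spatial axis of a convolution (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# Type 1: stride=2, padding=0 shrinks the feature map
type1 = conv_out(299, kernel=3, stride=2, padding=0)

# Type 2: stride=1, padding=kernel//2 keeps the size for an odd kernel
type2 = conv_out(35, kernel=3, stride=1, padding=3 // 2)

# Type 3: a (1, 7) kernel is padded by (0, 3), so height and width are both kept
type3 = (conv_out(17, 1, 1, 1 // 2), conv_out(17, 7, 1, 7 // 2))

print(type1, type2, type3)  # 149 35 (17, 17)
```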

InceptionA module

The source code of the InceptionA module is as follows:

    # InceptionA
    class InceptionA(nn.Module):
        def __init__(self, input_channel):
            super(InceptionA, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 96, 1)
            )
            self.block2 = Conv2(input_channel, 96, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 64, 1),
                Conv2(64, 96, 3)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 64, 1),
                Conv2(64, 96, 3),
                Conv2(96, 96, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            # merge the four branches along the channel dimension
            x = torch.cat([x1, x2, x3, x4], dim=1)
            return x

InceptionB module

The source code of the InceptionB module is as follows:

    # InceptionB
    class InceptionB(nn.Module):
        def __init__(self, input_channel):
            super(InceptionB, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 128, 1)
            )
            self.block2 = Conv2(input_channel, 384, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv3(192, 224, 1, 7),
                Conv3(224, 256, 1, 7)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv3(192, 192, 1, 7),
                Conv3(192, 224, 7, 1),
                Conv3(224, 224, 1, 7),
                Conv3(224, 256, 7, 1)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            # merge the four branches along the channel dimension
            x = torch.cat([x1, x2, x3, x4], dim=1)
            return x
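A common motivation for the asymmetric 1x7 and 7x1 kernels used here is that a 1x7 convolution followed by a 7x1 convolution covers the same 7x7 window as a single 7x7 convolution with far fewer weights. The sketch below counts weights only (bias and batch normalization are ignored); the helper name and the choice of 192 input and output channels are illustrative assumptions:

```python
def conv_weights(c_in, c_out, kh, kw):
    """Number of convolution weights, ignoring bias and batch norm."""
    return c_in * c_out * kh * kw


# a single 7x7 convolution, 192 -> 192 channels (illustrative sizes)
full = conv_weights(192, 192, 7, 7)

# the factorized pair: 1x7 followed by 7x1, same channel counts
factored = conv_weights(192, 192, 1, 7) + conv_weights(192, 192, 7, 1)

print(full, factored, full / factored)  # 1806336 516096 3.5
```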

InceptionC module

The source code of the InceptionC module is as follows:

    # InceptionC
    class InceptionC(nn.Module):
        def __init__(self, input_channel):
            super(InceptionC, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 256, 1)
            )
            self.block2 = Conv2(input_channel, 256, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 256, 1, 3)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 256, 3, 1)
            )
            self.block5 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 448, 1, 3),
                Conv3(448, 512, 3, 1),
                Conv3(512, 256, 3, 1)
            )
            self.block6 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 448, 1, 3),
                Conv3(448, 512, 3, 1),
                Conv3(512, 256, 1, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            x5 = self.block5(x)
            x6 = self.block6(x)
            # merge the six branches along the channel dimension
            x = torch.cat([x1, x2, x3, x4, x5, x6], dim=1)
            return x
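Because every branch keeps the spatial size and the outputs are concatenated, the output channel count of each Inception module is simply the sum of the last layer's channels in each branch. The short check below reads those numbers off the code above; the totals match the `input_channel` values (384, 1024, 1536) with which the modules are instantiated in the InceptionV4 class, which is what makes it possible to stack several copies of the same module:

```python
# Output channels of the last layer in each branch, read off the module code
branch_channels = {
    "InceptionA": [96, 96, 96, 96],
    "InceptionB": [128, 384, 256, 256],
    "InceptionC": [256, 256, 256, 256, 256, 256],
}

# torch.cat(..., dim=1) adds the channel counts of the branches
totals = {name: sum(channels) for name, channels in branch_channels.items()}
print(totals)  # {'InceptionA': 384, 'InceptionB': 1024, 'InceptionC': 1536}
```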

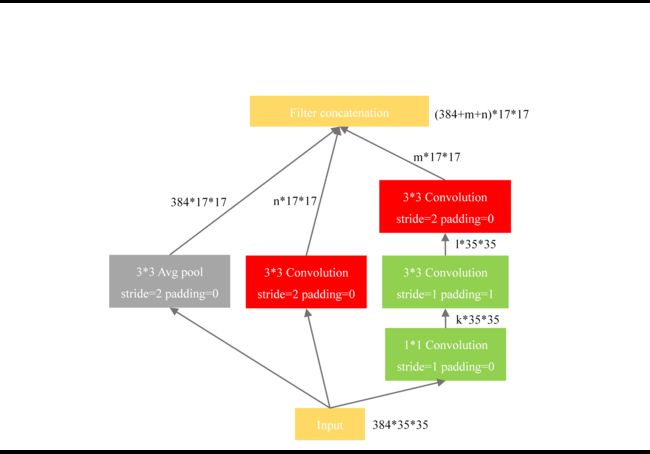

ReductionA module

The source code of the ReductionA module is as follows:

    # ReductionA: reduces the 35x35 feature map to 17x17
    class ReductionA(nn.Module):
        def __init__(self, input_channel, k, l, m, n):
            super(ReductionA, self).__init__()
            self.block1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            self.block2 = Conv1(input_channel, n, 3)
            self.block3 = nn.Sequential(
                Conv2(input_channel, k, 1),
                Conv2(k, l, 3),
                Conv1(l, m, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            # merge the three branches along the channel dimension
            x = torch.cat([x1, x2, x3], dim=1)
            return x

ReductionB module

The source code of the ReductionB module is as follows:

    # ReductionB: reduces the 17x17 feature map to 8x8
    class ReductionB(nn.Module):
        def __init__(self, input_channel):
            super(ReductionB, self).__init__()
            self.block1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            # fixed typo: this attribute was spelled "blocl2", which made forward() fail
            self.block2 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv1(192, 192, 3)
            )
            self.block3 = nn.Sequential(
                Conv2(input_channel, 256, 1),
                Conv3(256, 256, 1, 7),
                Conv3(256, 320, 7, 1),
                Conv1(320, 320, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            # merge the three branches along the channel dimension
            x = torch.cat([x1, x2, x3], dim=1)
            return x
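The sizes quoted in the comments of the two reduction modules can be verified by hand. In both modules every branch ends in a 3x3 operation with stride 2 and no padding, and the max-pooling branch passes its input channels through unchanged. The helper name below is illustrative; the values of k, l, m, n and the input channel counts are the ones used when the modules are instantiated in the InceptionV4 class:

```python
def conv_out(size, kernel, stride, padding):
    """Output length along one spatial axis (dilation 1); also valid for pooling."""
    return (size + 2 * padding - kernel) // stride + 1


# spatial size: 3x3 window, stride 2, no padding
size_a = conv_out(35, 3, 2, 0)  # ReductionA
size_b = conv_out(17, 3, 2, 0)  # ReductionB

# channels: max-pool branch + the last conv of each remaining branch
k, l, m, n = 192, 224, 256, 384
channels_a = 384 + n + m          # pool (384) + block2 (n) + block3 (m)
channels_b = 1024 + 192 + 320     # pool (1024) + block2 (192) + block3 (320)

print(size_a, channels_a)  # 17 1024
print(size_b, channels_b)  # 8 1536
```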

Stem module

The source code of the Stem module is as follows:

    # Stem
    class Stem(nn.Module):
        def __init__(self):
            super(Stem, self).__init__()
            self.block1 = nn.Sequential(
                Conv1(3, 32, 3),
                nn.Conv2d(32, 32, 3, stride=1, padding=0),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                Conv2(32, 64, 3)
            )
            self.block2_1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            self.block2_2 = nn.Sequential(
                Conv1(64, 96, 3)
            )
            self.block3_1 = nn.Sequential(
                Conv2(160, 64, 1),
                # fixed: the input here is the 64-channel output of the 1x1 conv, not 160
                nn.Conv2d(64, 96, 3, stride=1, padding=0),
                nn.BatchNorm2d(96),
                nn.ReLU()
            )
            self.block3_2 = nn.Sequential(
                Conv2(160, 64, 1),
                Conv3(64, 64, 7, 1),
                Conv3(64, 64, 1, 7),
                nn.Conv2d(64, 96, 3, stride=1, padding=0),
                nn.BatchNorm2d(96),
                nn.ReLU()
            )
            self.block4_1 = Conv1(192, 192, 3)
            self.block4_2 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)

        def forward(self, x):
            x = self.block1(x)
            # first split: max-pool and strided conv in parallel (64 + 96 = 160 channels)
            x1 = self.block2_1(x)
            x2 = self.block2_2(x)
            x = torch.cat([x1, x2], dim=1)
            # second split: two conv branches (96 + 96 = 192 channels)
            x1 = self.block3_1(x)
            x2 = self.block3_2(x)
            x = torch.cat([x1, x2], dim=1)
            # third split: strided conv and max-pool in parallel (192 + 192 = 384 channels)
            x1 = self.block4_1(x)
            x2 = self.block4_2(x)
            x = torch.cat([x1, x2], dim=1)
            return x
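The Stem's effect on the tensor shape can be traced with the same output-size formula. The sketch below assumes a 299x299 RGB input, which is the resolution the paper uses but is not stated in the code itself; the helper and the stage descriptions are illustrative. Only the layers that change the spatial size are listed, since the 1x1, 7x1, and 1x7 convolutions in `block3` are padded to preserve it:

```python
def out_size(size, kernel, stride, padding):
    """Output length along one spatial axis (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


# (stage, kernel, stride, padding, channels after the stage)
stages = [
    ("block1: 3x3 conv, stride 2", 3, 2, 0, 32),
    ("block1: 3x3 conv, unpadded", 3, 1, 0, 32),
    ("block1: 3x3 conv, padded", 3, 1, 1, 64),
    ("block2: max-pool || 3x3 conv, stride 2", 3, 2, 0, 64 + 96),
    ("block3: two branches ending in an unpadded 3x3 conv", 3, 1, 0, 96 + 96),
    ("block4: 3x3 conv || max-pool, stride 2", 3, 2, 0, 192 + 192),
]

size = 299  # assumed input resolution
trace = []
for name, kernel, stride, padding, channels in stages:
    size = out_size(size, kernel, stride, padding)
    trace.append((size, channels))
    print(f"{name}: {size}x{size}x{channels}")
```

Under that assumption the Stem ends at 35x35 with 384 channels, which is the input expected by `InceptionA(384)`.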

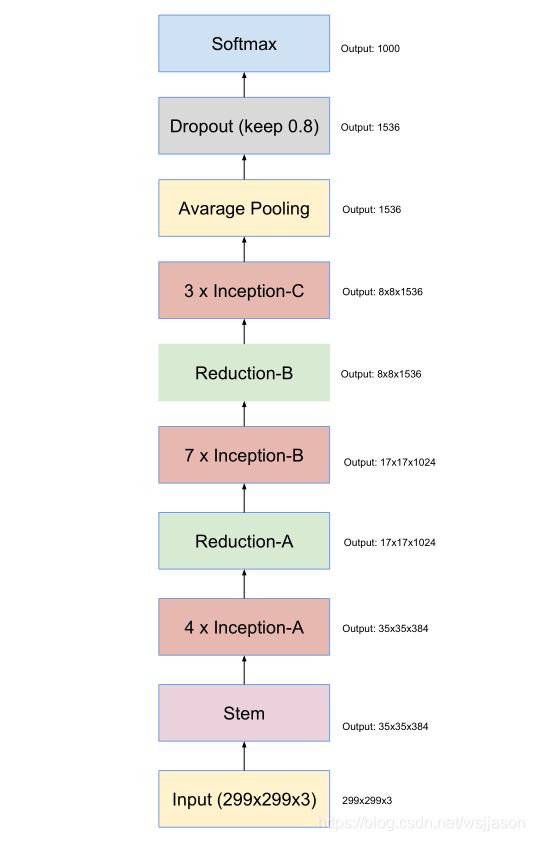

The InceptionV4 model and source code

The InceptionV4 model is assembled from the basic modules described in the previous section; its overall structure is shown in the figure.

The source code of the InceptionV4 model is as follows:

    # InceptionV4 Network
    class InceptionV4(nn.Module):
        def __init__(self):
            super(InceptionV4, self).__init__()
            self.block1 = Stem()
            self.block2 = nn.Sequential(
                InceptionA(384),
                InceptionA(384),
                InceptionA(384),
                InceptionA(384)
            )
            self.block3 = ReductionA(384, 192, 224, 256, 384)
            self.block4 = nn.Sequential(
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024)
            )
            self.block5 = ReductionB(1024)
            self.block6 = nn.Sequential(
                InceptionC(1536),
                InceptionC(1536),
                InceptionC(1536),
            )
            self.block7 = nn.Sequential(
                nn.AvgPool2d(kernel_size=8),
                # the paper specifies a keep probability of 0.8; nn.Dropout takes
                # the drop probability, so p=0.2 (the original code used p=0.8)
                nn.Dropout(p=0.2)
            )
            self.linear = nn.Linear(1536, 1000)

        def forward(self, x):
            x = self.block1(x)
            x = self.block2(x)
            x = self.block3(x)
            x = self.block4(x)
            x = self.block5(x)
            x = self.block6(x)
            x = self.block7(x)
            # flatten (N, 1536, 1, 1) to (N, 1536) before the classifier
            x = x.view(x.size(0), -1)
            x = self.linear(x)
            return x
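The classification head at the end of the network can be exercised on its own. The sketch below feeds a random tensor shaped like the output of the last InceptionC module (8x8 with 1536 channels, assuming a 299x299 input) through the same pooling, dropout, flatten, and linear steps. Note that `nn.Dropout(p)` takes the probability of zeroing an element, so a keep probability of 0.8 corresponds to `p=0.2`; the batch size and variable names are illustrative:

```python
import torch
import torch.nn as nn

# stand-in for the output of the last InceptionC module
features = torch.randn(2, 1536, 8, 8)

pool = nn.AvgPool2d(kernel_size=8)   # 8x8 -> 1x1 global average
drop = nn.Dropout(p=0.2)             # p is the drop probability (keep = 0.8)
linear = nn.Linear(1536, 1000)       # 1000 output classes

x = pool(features)                   # (2, 1536, 1, 1)
x = drop(x)
x = x.view(x.size(0), -1)            # (2, 1536)
logits = linear(x)
print(logits.shape)                  # torch.Size([2, 1000])
```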

Complete source code

    """Inception V4 model"""

    import torch
    import torch.nn as nn

    # Conv type 1: stride=2, padding=0 (reduces the spatial size)
    def Conv1(input_channel, output_channel, kernel_size):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size, stride=2, padding=0),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )

    # Conv type 2: symmetric kernel, stride=1, padding=kernel_size//2
    def Conv2(input_channel, output_channel, kernel_size):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size, stride=1, padding=kernel_size//2),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )

    # Conv type 3: asymmetric kernel, kernel_size=(kernel_size1, kernel_size2),
    # stride=1, padding=(kernel_size1//2, kernel_size2//2)
    def Conv3(input_channel, output_channel, kernel_size1, kernel_size2):
        return nn.Sequential(
            nn.Conv2d(input_channel, output_channel, kernel_size=(kernel_size1, kernel_size2),
                      stride=1, padding=(kernel_size1//2, kernel_size2//2)),
            nn.BatchNorm2d(output_channel),
            nn.ReLU(inplace=True)
        )

    # InceptionA
    class InceptionA(nn.Module):
        def __init__(self, input_channel):
            super(InceptionA, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 96, 1)
            )
            self.block2 = Conv2(input_channel, 96, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 64, 1),
                Conv2(64, 96, 3)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 64, 1),
                Conv2(64, 96, 3),
                Conv2(96, 96, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            x = torch.cat([x1, x2, x3, x4], dim=1)
            return x

    # InceptionB
    class InceptionB(nn.Module):
        def __init__(self, input_channel):
            super(InceptionB, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 128, 1)
            )
            self.block2 = Conv2(input_channel, 384, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv3(192, 224, 1, 7),
                Conv3(224, 256, 1, 7)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv3(192, 192, 1, 7),
                Conv3(192, 224, 7, 1),
                Conv3(224, 224, 1, 7),
                Conv3(224, 256, 7, 1)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            x = torch.cat([x1, x2, x3, x4], dim=1)
            return x

    # InceptionC
    class InceptionC(nn.Module):
        def __init__(self, input_channel):
            super(InceptionC, self).__init__()
            self.block1 = nn.Sequential(
                nn.AvgPool2d(kernel_size=3, stride=1, padding=1),
                Conv2(input_channel, 256, 1)
            )
            self.block2 = Conv2(input_channel, 256, 1)
            self.block3 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 256, 1, 3)
            )
            self.block4 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 256, 3, 1)
            )
            self.block5 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 448, 1, 3),
                Conv3(448, 512, 3, 1),
                Conv3(512, 256, 3, 1)
            )
            self.block6 = nn.Sequential(
                Conv2(input_channel, 384, 1),
                Conv3(384, 448, 1, 3),
                Conv3(448, 512, 3, 1),
                Conv3(512, 256, 1, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x4 = self.block4(x)
            x5 = self.block5(x)
            x6 = self.block6(x)
            x = torch.cat([x1, x2, x3, x4, x5, x6], dim=1)
            return x

    # ReductionA: reduces the 35x35 feature map to 17x17
    class ReductionA(nn.Module):
        def __init__(self, input_channel, k, l, m, n):
            super(ReductionA, self).__init__()
            self.block1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            self.block2 = Conv1(input_channel, n, 3)
            self.block3 = nn.Sequential(
                Conv2(input_channel, k, 1),
                Conv2(k, l, 3),
                Conv1(l, m, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x = torch.cat([x1, x2, x3], dim=1)
            return x

    # ReductionB: reduces the 17x17 feature map to 8x8
    class ReductionB(nn.Module):
        def __init__(self, input_channel):
            super(ReductionB, self).__init__()
            self.block1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            # fixed typo: this attribute was spelled "blocl2", which made forward() fail
            self.block2 = nn.Sequential(
                Conv2(input_channel, 192, 1),
                Conv1(192, 192, 3)
            )
            self.block3 = nn.Sequential(
                Conv2(input_channel, 256, 1),
                Conv3(256, 256, 1, 7),
                Conv3(256, 320, 7, 1),
                Conv1(320, 320, 3)
            )

        def forward(self, x):
            x1 = self.block1(x)
            x2 = self.block2(x)
            x3 = self.block3(x)
            x = torch.cat([x1, x2, x3], dim=1)
            return x

    # Stem
    class Stem(nn.Module):
        def __init__(self):
            super(Stem, self).__init__()
            self.block1 = nn.Sequential(
                Conv1(3, 32, 3),
                nn.Conv2d(32, 32, 3, stride=1, padding=0),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                Conv2(32, 64, 3)
            )
            self.block2_1 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)
            self.block2_2 = nn.Sequential(
                Conv1(64, 96, 3)
            )
            self.block3_1 = nn.Sequential(
                Conv2(160, 64, 1),
                # fixed: the input here is the 64-channel output of the 1x1 conv, not 160
                nn.Conv2d(64, 96, 3, stride=1, padding=0),
                nn.BatchNorm2d(96),
                nn.ReLU()
            )
            self.block3_2 = nn.Sequential(
                Conv2(160, 64, 1),
                Conv3(64, 64, 7, 1),
                Conv3(64, 64, 1, 7),
                nn.Conv2d(64, 96, 3, stride=1, padding=0),
                nn.BatchNorm2d(96),
                nn.ReLU()
            )
            self.block4_1 = Conv1(192, 192, 3)
            self.block4_2 = nn.MaxPool2d(kernel_size=3, stride=2, padding=0)

        def forward(self, x):
            x = self.block1(x)
            x1 = self.block2_1(x)
            x2 = self.block2_2(x)
            x = torch.cat([x1, x2], dim=1)
            x1 = self.block3_1(x)
            x2 = self.block3_2(x)
            x = torch.cat([x1, x2], dim=1)
            x1 = self.block4_1(x)
            x2 = self.block4_2(x)
            x = torch.cat([x1, x2], dim=1)
            return x

    # InceptionV4 Network
    class InceptionV4(nn.Module):
        def __init__(self):
            super(InceptionV4, self).__init__()
            self.block1 = Stem()
            self.block2 = nn.Sequential(
                InceptionA(384),
                InceptionA(384),
                InceptionA(384),
                InceptionA(384)
            )
            self.block3 = ReductionA(384, 192, 224, 256, 384)
            self.block4 = nn.Sequential(
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024),
                InceptionB(1024)
            )
            self.block5 = ReductionB(1024)
            self.block6 = nn.Sequential(
                InceptionC(1536),
                InceptionC(1536),
                InceptionC(1536),
            )
            self.block7 = nn.Sequential(
                nn.AvgPool2d(kernel_size=8),
                # the paper specifies a keep probability of 0.8; nn.Dropout takes
                # the drop probability, so p=0.2 (the original code used p=0.8)
                nn.Dropout(p=0.2)
            )
            self.linear = nn.Linear(1536, 1000)

        def forward(self, x):
            x = self.block1(x)
            x = self.block2(x)
            x = self.block3(x)
            x = self.block4(x)
            x = self.block5(x)
            x = self.block6(x)
            x = self.block7(x)
            # flatten (N, 1536, 1, 1) to (N, 1536) before the classifier
            x = x.view(x.size(0), -1)
            x = self.linear(x)
            return x