PyTorch Basics, Day 5: Convolutional Neural Networks in Practice

Contents

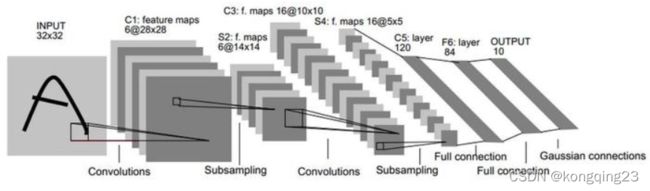

LeNet in Practice

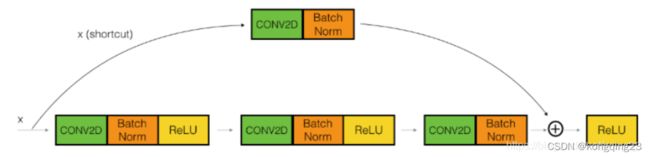

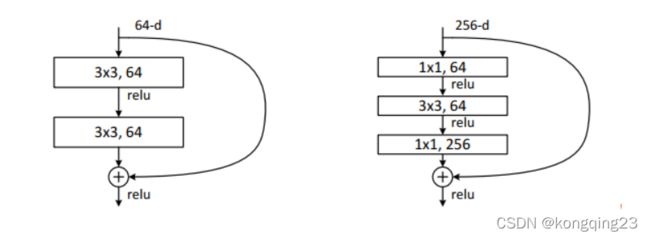

ResNet

Training Function

LeNet in Practice

    import torch
    from torch.utils.data import DataLoader
    from torchvision import datasets
    from torchvision import transforms
    from torch import nn
    from lenet5 import Lenet5
    from torch import optim


    def main():
        batch_size = 32

        cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor()
        ]), download=True)
        cifar_train = DataLoader(cifar_train, batch_size=batch_size, shuffle=True)

        cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor()
        ]), download=True)
        # Wrap the test dataset here (not the training loader);
        # shuffling is not needed for evaluation
        cifar_test = DataLoader(cifar_test, batch_size=batch_size, shuffle=False)

        # Python 3: iterators are advanced with next(), not .next()
        x, label = next(iter(cifar_train))
        print('x:', x.shape, 'label:', label.shape)

        # Use the GPU when one is available, otherwise fall back to the CPU
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        model = Lenet5().to(device)
        # CrossEntropyLoss computes the cross entropy (softmax included) and
        # measures the gap between predictions and true labels in multi-class tasks
        criteon = nn.CrossEntropyLoss().to(device)
        optimizer = optim.Adam(model.parameters(), lr=1e-3)
        print(model)

        for epoch in range(1000):
            # Re-enter training mode every epoch, because the evaluation
            # step below switches the model to eval mode
            model.train()
            for batchidx, (x, label) in enumerate(cifar_train):
                # x: [b, 3, 32, 32]
                # label: [b]
                x, label = x.to(device), label.to(device)

                logits = model(x)
                # logits: [b, 10]
                # label: [b]
                # loss: scalar tensor
                loss = criteon(logits, label)

                # backprop: zero the gradients, backpropagate, then update the weights
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            # Report the loss of the last batch every 10 epochs
            if epoch % 10 == 0:
                print('epoch {}: loss = {}'
                      .format(epoch, loss.item()))

            model.eval()
            # No gradient tracking is needed on the test set
            with torch.no_grad():
                total_correct = 0
                total_num = 0
                for x, label in cifar_test:
                    x, label = x.to(device), label.to(device)

                    logits = model(x)
                    pred = logits.argmax(dim=1)
                    # torch.eq returns a boolean tensor; cast it to float, sum it,
                    # and call item() to extract a plain Python number
                    correct = torch.eq(pred, label).float().sum().item()
                    total_correct += correct
                    total_num += x.size(0)

                acc = total_correct / total_num
                print(epoch, 'test acc:', acc)


    if __name__ == '__main__':
        main()
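
The script above imports `Lenet5` from a `lenet5` module that is not shown in this post. The following is only a minimal sketch of what such a module might look like, assuming a classic LeNet-5 style layout (two 5x5 convolutions with pooling, then three fully connected layers) adapted to 3-channel 32x32 CIFAR-10 images. The layer sizes, the max pooling, and the attribute names `conv_unit` and `fc_unit` are assumptions for illustration; the author's actual `lenet5.py` may differ.

```python
import torch
from torch import nn


class Lenet5(nn.Module):
    """Hypothetical LeNet-5 style classifier for CIFAR-10 (a sketch, not the author's file)."""

    def __init__(self):
        super().__init__()
        # [b, 3, 32, 32] => [b, 6, 28, 28] => [b, 6, 14, 14]
        # => [b, 16, 10, 10] => [b, 16, 5, 5]
        self.conv_unit = nn.Sequential(
            nn.Conv2d(3, 6, kernel_size=5, stride=1, padding=0),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(6, 16, kernel_size=5, stride=1, padding=0),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
        )
        # [b, 16*5*5] => [b, 120] => [b, 84] => [b, 10]
        self.fc_unit = nn.Sequential(
            nn.Linear(16 * 5 * 5, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, 10),
        )

    def forward(self, x):
        x = self.conv_unit(x)
        # Flatten everything except the batch dimension
        x = x.view(x.size(0), -1)
        # Return raw logits; CrossEntropyLoss applies softmax itself
        return self.fc_unit(x)


model = Lenet5()
out = model(torch.randn(2, 3, 32, 32))
print('lenet out:', out.shape)
```

The forward pass returns raw logits rather than probabilities, which is the input that `nn.CrossEntropyLoss` expects.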

ResNet

    import torch
    from torch import nn
    from torch.nn import functional as F


    class ResBlk(nn.Module):
        """
        Residual block.
        """

        def __init__(self, ch_in, ch_out, stride=1):
            super(ResBlk, self).__init__()

            self.conv1 = nn.Conv2d(ch_in, ch_out, kernel_size=3, stride=stride, padding=1)
            self.bn1 = nn.BatchNorm2d(ch_out)
            self.conv2 = nn.Conv2d(ch_out, ch_out, kernel_size=3, stride=1, padding=1)
            self.bn2 = nn.BatchNorm2d(ch_out)

            # Identity shortcut by default
            self.extra = nn.Sequential()
            # Project the shortcut whenever the main path changes the channel count
            # or the spatial size (stride > 1). Checking only ch_out != ch_in would
            # let a 512 -> 512, stride-2 block add mismatched shapes via broadcasting.
            if stride != 1 or ch_out != ch_in:
                # [b, ch_in, h, w] => [b, ch_out, h/stride, w/stride]
                self.extra = nn.Sequential(
                    nn.Conv2d(ch_in, ch_out, kernel_size=1, stride=stride),
                    nn.BatchNorm2d(ch_out)
                )

        def forward(self, x):
            """
            :param x: [b, ch, h, w]
            """
            out = F.relu(self.bn1(self.conv1(x)))
            out = self.bn2(self.conv2(out))
            # Shortcut connection
            # extra module: maps x to the same shape as out so the residual sum is valid
            out = self.extra(x) + out
            out = F.relu(out)

            return out


    class ResNet18(nn.Module):

        def __init__(self):
            super(ResNet18, self).__init__()

            self.conv1 = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=3, stride=3, padding=1),
                nn.BatchNorm2d(64)
            )
            # Followed by 4 residual blocks; each stride-2 block halves h and w (rounded up)
            # [b, 64, h, w] => [b, 128, h/2, w/2]
            self.blk1 = ResBlk(64, 128, stride=2)
            # [b, 128, h, w] => [b, 256, h/2, w/2]
            self.blk2 = ResBlk(128, 256, stride=2)
            # [b, 256, h, w] => [b, 512, h/2, w/2]
            self.blk3 = ResBlk(256, 512, stride=2)
            # [b, 512, h, w] => [b, 512, h/2, w/2]
            self.blk4 = ResBlk(512, 512, stride=2)

            self.outlayer = nn.Linear(512 * 1 * 1, 10)

        def forward(self, x):
            x = F.relu(self.conv1(x))

            # [b, 64, h, w] => [b, 512, h', w'] after the four blocks
            x = self.blk1(x)
            x = self.blk2(x)
            x = self.blk3(x)
            x = self.blk4(x)

            # print('after conv:', x.shape)  # [2, 512, 1, 1]
            # Global average pooling: [b, 512, h', w'] => [b, 512, 1, 1]
            x = F.adaptive_avg_pool2d(x, [1, 1])
            # print('after pool:', x.shape)
            x = x.view(x.size(0), -1)
            x = self.outlayer(x)

            return x


    def main():
        # Shape check for a single block
        blk = ResBlk(64, 128, stride=2)
        tmp = torch.randn(2, 64, 32, 32)
        out = blk(tmp)
        print('block:', out.shape)

        # Shape check for the whole network
        x = torch.randn(2, 3, 32, 32)
        model = ResNet18()
        out = model(x)
        print('resnet:', out.shape)


    if __name__ == '__main__':
        main()
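
To make the shape comments in the ResNet code easier to check, here is a small sketch that traces the spatial size of a 32x32 input along the convolutional path, using the standard convolution output-size formula `(size + 2*padding - kernel) // stride + 1`. The helper name `conv_out` is introduced here only for illustration; the kernel, stride, and padding values are the ones used in `ResNet18` and `ResBlk` above.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


size = 32
sizes = [size]

# Stem: Conv2d(3, 64, kernel_size=3, stride=3, padding=1)
size = conv_out(size, kernel=3, stride=3, padding=1)
sizes.append(size)

# Four residual blocks, each built with stride=2
for _ in range(4):
    # First 3x3 convolution of the block carries the stride
    size = conv_out(size, kernel=3, stride=2, padding=1)
    # Second 3x3 convolution (stride 1, padding 1) keeps the size
    size = conv_out(size, kernel=3, stride=1, padding=1)
    sizes.append(size)

print(sizes)  # [32, 11, 6, 3, 2, 1]
```

A 1x1 convolution with the same stride and no padding gives the same output size as the strided 3x3 convolution with padding 1 (for example, both map 11 to 6), which is what allows the shortcut branch and the main branch to be added element-wise.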

Training Function

    import torch
    from torch.utils.data import DataLoader
    from torchvision import datasets
    from torchvision import transforms
    from torch import nn
    from lenet5 import Lenet5
    from torch import optim
    from resnet import ResNet18


    def main():
        batch_size = 32

        cifar_train = datasets.CIFAR10('cifar', True, transform=transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor(),
            # Per-channel normalization (these are the ImageNet channel statistics)
            transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
        ]), download=True)
        cifar_train = DataLoader(cifar_train, batch_size=batch_size, shuffle=True)

        cifar_test = datasets.CIFAR10('cifar', False, transform=transforms.Compose([
            transforms.Resize((32, 32)),
            transforms.ToTensor(),
            transforms.Normalize(mean=[0.485, 0.456, 0.406],
                                 std=[0.229, 0.224, 0.225])
        ]), download=True)
        # Shuffling is not needed for evaluation
        cifar_test = DataLoader(cifar_test, batch_size=batch_size, shuffle=False)

        # Python 3: iterators are advanced with next(), not .next()
        x, label = next(iter(cifar_train))
        print('x:', x.shape, 'label:', label.shape)

        # Use the GPU when one is available, otherwise fall back to the CPU
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
        # model = Lenet5().to(device)
        model = ResNet18().to(device)
        # CrossEntropyLoss computes the cross entropy (softmax included) and
        # measures the gap between predictions and true labels in multi-class tasks
        criteon = nn.CrossEntropyLoss().to(device)
        optimizer = optim.Adam(model.parameters(), lr=1e-3)
        print(model)

        for epoch in range(1000):
            # Re-enter training mode every epoch; BatchNorm behaves differently
            # in train and eval mode, and evaluation below calls model.eval()
            model.train()
            for batchidx, (x, label) in enumerate(cifar_train):
                # x: [b, 3, 32, 32]
                # label: [b]
                x, label = x.to(device), label.to(device)

                logits = model(x)
                # logits: [b, 10]
                # label: [b]
                # loss: scalar tensor
                loss = criteon(logits, label)

                # backprop: zero the gradients, backpropagate, then update the weights
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()

            # Report the loss of the last batch every 10 epochs
            if epoch % 10 == 0:
                print('epoch {}: loss = {}'
                      .format(epoch, loss.item()))

            model.eval()
            # No gradient tracking is needed on the test set
            with torch.no_grad():
                total_correct = 0
                total_num = 0
                for x, label in cifar_test:
                    x, label = x.to(device), label.to(device)

                    logits = model(x)
                    pred = logits.argmax(dim=1)
                    # torch.eq returns a boolean tensor; cast it to float, sum it,
                    # and call item() to extract a plain Python number
                    correct = torch.eq(pred, label).float().sum().item()
                    total_correct += correct
                    total_num += x.size(0)

                acc = total_correct / total_num
                if epoch % 10 == 0:
                    print('epoch {}: acc = {}'
                          .format(epoch, acc))


    if __name__ == '__main__':
        main()
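
The comments in the training scripts note that `CrossEntropyLoss` already includes the softmax step. As a rough illustration, the sketch below compares the built-in loss with a manual computation (log-softmax followed by the mean negative log-likelihood of the true class) on random logits. The tensor values and the names `loss_builtin` and `loss_manual` are made up for this example.

```python
import torch
from torch.nn import functional as F

torch.manual_seed(0)

logits = torch.randn(4, 10)          # [b, 10] raw class scores, no softmax applied
label = torch.tensor([1, 0, 9, 3])   # [b] true class indices

# Built-in: takes raw logits and applies log-softmax internally
loss_builtin = F.cross_entropy(logits, label)

# Manual: log-softmax over the class dimension, then pick the
# log-probability of the true class for each sample and average
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(logits.size(0)), label].mean()

print('builtin:', loss_builtin.item(), 'manual:', loss_manual.item())

# The predicted class is the index of the largest logit, as in the test loop
pred = logits.argmax(dim=1)
correct = torch.eq(pred, label).float().sum().item()
```

This is why the models in this post return raw logits from `forward` and do not apply softmax themselves before the loss.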