The FSRCNN Neural Network

1 FSRCNN

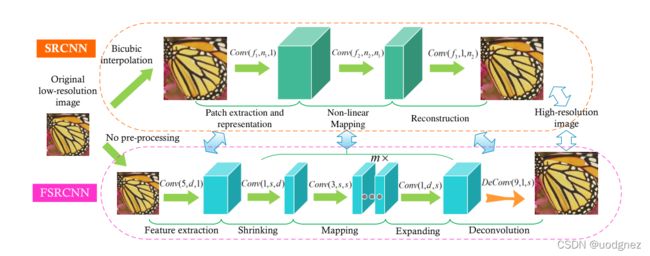

FSRCNN improves on the earlier SRCNN, mainly by running faster and by producing better restoration quality.

The authors divide FSRCNN into five parts:

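- Feature extraction: similar to the first part of SRCNN. However, SRCNN takes an interpolated low-resolution image (the same size as the output image) as input, whereas FSRCNN operates directly on the original low-resolution image. Kernel size: $5\times5$.

- Shrinking: reduces the feature dimension and therefore the number of parameters. Kernel size: $1\times1$.

- Non-linear mapping: chains several medium-sized convolution kernels ($3\times3$) in place of the $5\times5$ kernel in SRCNN.

- Expanding: roughly the inverse of shrinking. If the image were generated directly from the low-dimensional features, the final restoration quality would be poor. Kernel size: $1\times1$.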

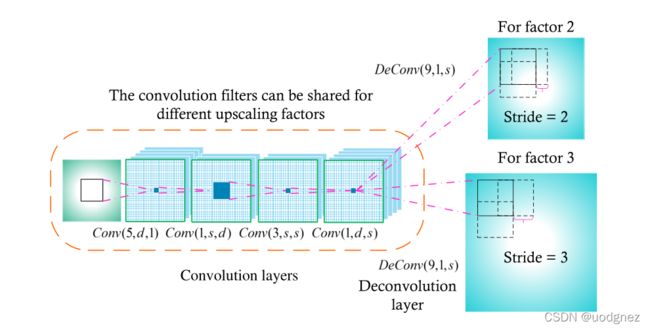

- Deconvolution: upsampling. Kernel size: $9\times9$.
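
To make the five parts more concrete, the sketch below counts the convolution weights in each part. It assumes the configuration used by the code in section 2 (56 feature channels, 12 shrunk channels, four $3\times3$ mapping layers, one input and one output channel). The helper `conv_weights` is a made-up name for illustration, and biases and PReLU parameters are left out.

```python
# Illustrative sketch: weight counts per FSRCNN part (biases and PReLU slopes excluded).
# d, s, m are assumptions read off the layer sizes in the code in section 2.

def conv_weights(in_ch, out_ch, k):
    """Number of weights in a k x k convolution, ignoring the bias."""
    return in_ch * out_ch * k * k

d, s, m = 56, 12, 4  # feature channels, shrunk channels, number of mapping layers

parts = {
    "feature extraction (5x5)": conv_weights(1, d, 5),
    "shrinking (1x1)": conv_weights(d, s, 1),
    "non-linear mapping (3x3, m layers)": m * conv_weights(s, s, 3),
    "expanding (1x1)": conv_weights(s, d, 1),
    "deconvolution (9x9)": conv_weights(d, 1, 9),
}
total = sum(parts.values())

for name, count in parts.items():
    print(f"{name}: {count}")
print("total:", total)
```

Under these assumptions the mapping layers hold 5,184 of the 12,464 weights. Running the same four $3\times3$ layers at the full 56 channels would take 4 × 56 × 56 × 9 = 112,896 weights, which is why the shrinking step matters.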

Activation function: PReLU, chosen mainly to avoid the "dead features" caused by zero gradients in ReLU.
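
The difference between the two activations can be sketched in plain Python. The function names and the slope value `a = 0.25` are assumptions made for illustration only; in the network the slope is a learned parameter.

```python
# Illustrative sketch in plain Python (no framework); all names here are hypothetical.

def relu(x):
    return max(0.0, x)

def prelu(x, a):
    """PReLU: identity for x >= 0, slope `a` for x < 0."""
    return max(0.0, x) + a * min(0.0, x)

def relu_grad(x):
    """Derivative of ReLU with respect to its input."""
    return 1.0 if x > 0 else 0.0

def prelu_grad(x, a):
    """Derivative of PReLU with respect to its input."""
    return 1.0 if x > 0 else a

a = 0.25  # example slope, assumed for illustration
x = -2.0  # a negative pre-activation

print(relu(x), relu_grad(x))          # 0.0 0.0
print(prelu(x, a), prelu_grad(x, a))  # -0.5 0.25
```

For a negative input, ReLU passes back a zero gradient, so a unit that is always negative stops updating. PReLU still passes back the slope `a`.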

Loss function: MSE (mean squared error).
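
As a reminder of what this loss measures, here is a minimal plain-Python sketch that averages the squared per-pixel differences between a reconstructed image and its ground truth. The function name `mse` and the tiny 2 × 2 "images" are invented for illustration.

```python
# Illustrative sketch: MSE between two equally sized single-channel images
# represented as nested lists. Names and data are hypothetical.

def mse(pred, target):
    """Mean of the squared per-pixel differences."""
    diffs = [
        (p - t) ** 2
        for pred_row, target_row in zip(pred, target)
        for p, t in zip(pred_row, target_row)
    ]
    return sum(diffs) / len(diffs)

reconstructed = [[0.0, 1.0], [2.0, 5.0]]
ground_truth = [[0.0, 1.0], [2.0, 3.0]]

print(mse(reconstructed, ground_truth))  # 1.0
```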

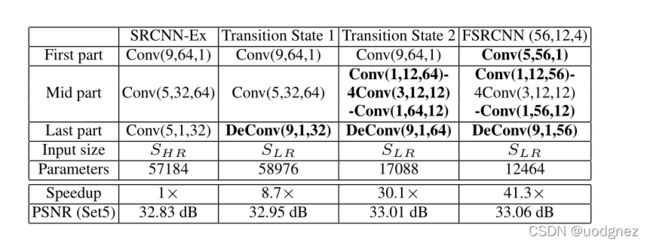

The transitions from SRCNN to FSRCNN:

As the figure shows, this speed-up does not come at the cost of performance.

2 Code

    from torch import nn


    class FSRCNN(nn.Module):
        def __init__(self, scale, inputChannel=1, outputChannel=1):
            super(FSRCNN, self).__init__()
            # Feature extraction: 5x5 conv applied directly to the low-resolution input.
            self.firstPart = nn.Sequential(
                nn.Conv2d(inputChannel, 56, kernel_size=5, padding=5 // 2),
                nn.PReLU(56),
            )
            # Shrinking (1x1), four 3x3 non-linear mapping layers, then expanding (1x1).
            self.midPart = nn.Sequential(
                nn.Conv2d(56, 12, kernel_size=1),
                nn.PReLU(12),
                nn.Conv2d(12, 12, kernel_size=3, padding=3 // 2),
                nn.PReLU(12),
                nn.Conv2d(12, 12, kernel_size=3, padding=3 // 2),
                nn.PReLU(12),
                nn.Conv2d(12, 12, kernel_size=3, padding=3 // 2),
                nn.PReLU(12),
                nn.Conv2d(12, 12, kernel_size=3, padding=3 // 2),
                nn.PReLU(12),
                nn.Conv2d(12, 56, kernel_size=1),
                nn.PReLU(56),
            )
            # Deconvolution: 9x9 transposed conv that upsamples by `scale`.
            self.lastPart = nn.Sequential(
                nn.ConvTranspose2d(56, outputChannel, kernel_size=9, stride=scale,
                                   padding=9 // 2, output_padding=scale - 1),
            )

        def forward(self, x):
            x = self.firstPart(x)
            x = self.midPart(x)
            out = self.lastPart(x)
            return out
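
The `padding` and `output_padding` arguments in `lastPart` are what make the output exactly `scale` times the input size. The sketch below checks this with the output-size formula given in the PyTorch documentation for `ConvTranspose2d` (with dilation 1). The helper name `deconv_out_size` and the input size 32 are assumptions for illustration.

```python
# Illustrative sketch: output size of the transposed convolution configured as in
# lastPart (kernel 9, stride = scale, padding = 9 // 2, output_padding = scale - 1).
# Formula with dilation 1:
#   out = (in - 1) * stride - 2 * padding + (kernel_size - 1) + output_padding + 1

def deconv_out_size(size_in, scale, kernel_size=9):
    padding = kernel_size // 2
    output_padding = scale - 1
    return (size_in - 1) * scale - 2 * padding + (kernel_size - 1) + output_padding + 1

for scale in (2, 3, 4):
    print(scale, deconv_out_size(32, scale))
```

With a kernel size of 9 and a padding of 4, the `- 2 * padding` and `+ (kernel_size - 1)` terms cancel. The expression then reduces to `size_in * scale`, so a 32-pixel side becomes 64, 96, or 128 pixels for scales 2, 3, and 4.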

https://zhuanlan.zhihu.com/p/31664818

https://arxiv.org/abs/1608.00367