BP Neural Network Code Implementation

1. Activation with the sigmoid(x) function:

Its derivative is f'(x) = f(x)(1 - f(x))
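The derivative identity can be checked numerically before it is used in the weight updates. The snippet below is a minimal sketch: `sigmoid_grad_from_output`, the sample points and the step size `h` are illustrative choices, not part of the original program.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_grad_from_output(y):
    # Derivative written in terms of the output y = sigmoid(x): f'(x) = y * (1 - y)
    return y * (1 - y)

x = np.linspace(-5, 5, 11)            # sample points, including x = 0
h = 1e-6                              # step for the central difference
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)
analytic = sigmoid_grad_from_output(sigmoid(x))
max_gap = np.max(np.abs(numeric - analytic))
print("largest difference:", max_gap)
```

This is why the update rules only need `res*(1-res)` and `out_in[i]*(1-out_in[i])`: the derivative is available from the values already computed in the forward pass.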

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

def sigmoid(x):

return 1/(1+np.exp(-x))

def BP(data_tr, data_te, maxiter=1000):

data_tr, data_te = np.array(data_tr), np.array(data_te)

net_in = np.array([0.0, 0, -1])

out_in = np.array([0.0, 0, 0, 0, -1]) # 输出层的输入,即隐层的输出

w_mid = np.random.rand(3, 4) # 隐层神经元的权值&阈值

w_out = np.random.rand(5) # 输出层神经元的权值&阈值

delta_w_out = np.zeros([5]) # 输出层权值&阈值的修正量

delta_w_mid = np.zeros([3,4]) # 中间层权值&阈值的修正量

yita = 1.75 # η: 学习速率

Err = np.zeros([maxiter]) # 记录总体样本每迭代一次的错误率

# 1.样本总体训练的次数

for it in range(maxiter):

# 衡量每一个样本的误差

err = np.zeros([len(data_tr)])

# 2.训练集训练一遍

for j in range(len(data_tr)):

net_in[:2] = data_tr[j, :2] # 存储当前对象前两个属性值

real = data_tr[j, 2]

# 3.当前对象进行训练

for i in range(4):

out_in[i] = sigmoid(sum(net_in*w_mid[:, i])) # 计算输出层输入

res = sigmoid(sum(out_in * w_out)) # 获得训练结果

err[j] = abs(real - res)

# --先调节输出层的权值与阈值

delta_w_out = yita*res*(1-res)*(real-res)*out_in # 权值调整

delta_w_out[4] = -yita*res*(1-res)*(real-res) # 阈值调整

w_out = w_out + delta_w_out

# --隐层权值和阈值的调节

for i in range(4):

# 权值调整

delta_w_mid[:, i] = yita * out_in[i] * (1 - out_in[i]) * w_out[i] * res * (1 - res) * (real - res) * net_in

# 阈值调整

delta_w_mid[2, i] = -yita * out_in[i] * (1 - out_in[i]) * w_out[i] * res * (1 - res) * (real - res)

w_mid = w_mid + delta_w_mid

Err[it] = err.mean()

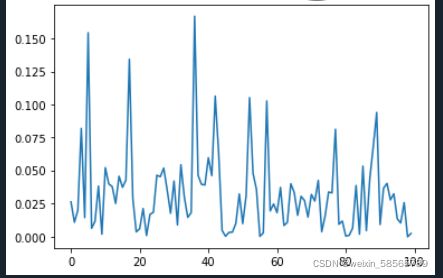

plt.plot(Err)

plt.show()

# 存储预测误差

err_te = np.zeros([ len(data_te) ])

# 预测样本len(data_te)个

for j in range( len(data_te) ):

net_in[:2] = data_te[j, :2] # 存储数据

real = data_te[j, 2] # 真实结果

# net_in和w_mid的相乘过程

for i in range(4):

# 输入层到隐层的传输过程

out_in[i] = sigmoid(sum(net_in*w_mid[:, i]))

res = sigmoid(sum(out_in*w_out)) # 网络预测结果输出

err_te[j] = abs(real-res) # 预测误差

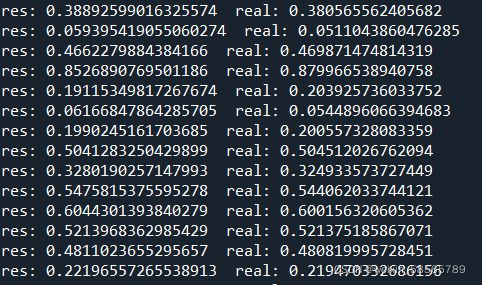

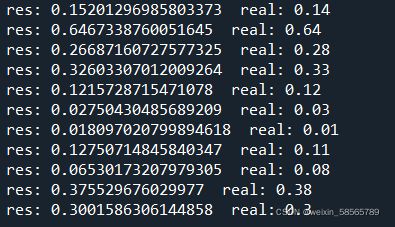

print('res:', res, ' real:', real)

plt.plot(err_te)

plt.show()

if "__main__" == __name__:

# 1.读取样本

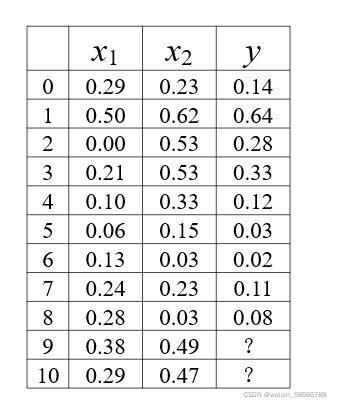

data_tr = pd.read_csv("D:\\人工智能\\3.3 data_tr.txt")

data_te = pd.read_csv("D:\\人工智能\\3.3 data_te.txt")

BP(data_tr, data_te, maxiter=1000)仿造sigmoid(x)函数实现tanh函数和ReLU函数的激活
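Between the three versions only the activation and its derivative change. The sketch below makes that explicit by writing one online update for the same 2-4-1 network with the activation passed in by name. It is an illustration under stated assumptions, not the original program: `ACTIVATIONS` and `train_step` are hypothetical names, the per-neuron loops are replaced by matrix products, and the hidden-layer error term uses the output weights from before the update (the listing above updates `w_out` first).

```python
import numpy as np

# (activation, derivative written in terms of the activation's OUTPUT y)
ACTIVATIONS = {
    "sigmoid": (lambda x: 1 / (1 + np.exp(-x)), lambda y: y * (1 - y)),
    "tanh": (np.tanh, lambda y: 1 - y * y),
    "relu": (lambda x: np.maximum(x, 0.0), lambda y: np.where(y > 0, 1.0, 0.0)),
}

def train_step(x, real, w_mid, w_out, name, yita):
    """One online update for a 2-4-1 network; returns new weights and the output."""
    act, act_d = ACTIVATIONS[name]
    net_in = np.array([x[0], x[1], -1.0])      # inputs plus the fixed -1 threshold input
    hidden = act(net_in @ w_mid)               # hidden-layer outputs, shape (4,)
    out_in = np.append(hidden, -1.0)           # hidden outputs plus the -1 threshold input
    res = act(out_in @ w_out)                  # scalar network output

    g_out = act_d(res) * (real - res)          # output-layer error term
    g_mid = act_d(hidden) * w_out[:4] * g_out  # hidden-layer error terms (old w_out)

    new_w_out = w_out + yita * g_out * out_in
    new_w_mid = w_mid + yita * np.outer(net_in, g_mid)
    return new_w_mid, new_w_out, res

# Usage on one made-up sample: the output should move toward the target.
rng = np.random.default_rng(0)
w_mid, w_out = rng.random((3, 4)), rng.random(5)
x, real = np.array([0.2, 0.7]), 1.0
w_mid2, w_out2, before = train_step(x, real, w_mid, w_out, "sigmoid", 0.5)
_, _, after = train_step(x, real, w_mid2, w_out2, "sigmoid", 0.5)
print("error before:", abs(real - before), "after:", abs(real - after))
```

Because the threshold input is fixed at -1, the threshold correction falls out of the same product as the weight corrections, which is what the separate "threshold adjustment" lines in the listings compute by hand.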

!!!注意:

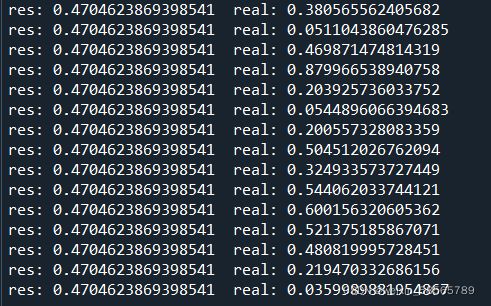

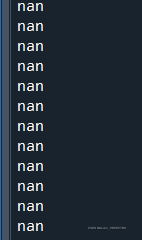

以下两个代码实现中的学习速率不能太大,否者就会出现以下这种情况:

res值一样,明显出错。

于是我在代码中增加了print(sum(net_in*w_mid[:, i]))

运行中出现了:

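Cause:

If the learning rate is too large, training oscillates. The oscillation makes the gradient values very large, so overflow is likely, and the gradient value may become inf or -inf.

The following operations all produce nan, because their results are indeterminate:

np.inf/np.inf

0*np.inf

For details, see the CSDN blog post "nan值的出现" on run_session's blog.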
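The chain from overflow to nan can be reproduced in a few lines. This is a minimal sketch with made-up values; `np.errstate` is used only to silence the overflow and invalid-value warnings that NumPy would otherwise print.

```python
import numpy as np

with np.errstate(over="ignore", invalid="ignore"):
    big = np.exp(np.float64(1000.0))  # too large for float64, overflows to inf
    a = np.inf / np.inf               # indeterminate, gives nan
    b = 0 * np.inf                    # indeterminate, gives nan
    c = np.inf - np.inf               # indeterminate, gives nan
    w = np.array([0.3, big - big])    # one weight has become nan
    s = np.sum(w * np.array([1.0, 1.0]))  # nan spreads through every later sum

print(big, a, b, c, s)
```

Once a single weight is nan, every weighted sum that includes it is nan as well, so the problem does not correct itself in later iterations.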

2. Activation with the tanh function:

Its derivative is f'(x) = 1 - f(x)*f(x)
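The derivative only needs the output, but the function itself deserves some care. A tanh written directly from its exponential definition, as in this section's listing, evaluates inf/inf once |x| is large enough for `np.exp` to overflow, and that is one way nan can enter the network. The sketch below compares it with NumPy's built-in `np.tanh`; `tanh_naive` and the test points are illustrative names and values.

```python
import numpy as np

def tanh_naive(x):
    # Written from the exponential definition of tanh
    return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

x = np.array([-1000.0, -1.0, 0.0, 1.0, 1000.0])
with np.errstate(over="ignore", invalid="ignore"):
    naive = tanh_naive(x)   # inf / inf at both ends, so nan there
stable = np.tanh(x)         # saturates at -1 and 1 instead

print("naive: ", naive)
print("stable:", stable)
```

For moderate inputs the two agree, so swapping in `np.tanh` would not change the results of a run that stays in range; it only changes what happens after the weights have already grown very large.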

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

def tanh(x):

return (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))

def BP(data_tr, data_te, maxiter=500):

data_tr, data_te = np.array(data_tr), np.array(data_te)

net_in = np.array([0.0, 0, -1])

out_in = np.array([0.0, 0, 0, 0, -1]) # 输出层的输入,即隐层的输出

w_mid = np.random.rand(3, 4) # 隐层神经元的权值&阈值

w_out = np.random.rand(5) # 输出层神经元的权值&阈值

delta_w_out = np.zeros([5]) # 输出层权值&阈值的修正量

delta_w_mid = np.zeros([3,4]) # 中间层权值&阈值的修正量

yita = 0.20 # η: 学习速率

Err = np.zeros([maxiter]) # 记录总体样本每迭代一次的错误率

# 1.样本总体训练的次数

for it in range(maxiter):

# 衡量每一个样本的误差

err = np.zeros([len(data_tr)])

# 2.训练集训练一遍

for j in range(len(data_tr)):

net_in[:2] = data_tr[j, :2] # 存储当前对象前两个属性值

real = data_tr[j, 2]

# 3.当前对象进行训练

for i in range(4):

out_in[i] = tanh (sum(net_in*w_mid[:, i])) # 计算输出层输入

res = tanh (sum(out_in * w_out)) # 获得训练结果

err[j] = abs(real - res)

# --先调节输出层的权值与阈值

delta_w_out = yita*(1-res*res)*(real-res)*out_in # 权值调整 f'(x)=1-f(x)*f(x)

delta_w_out[4] = -yita*(1-res*res)*(real-res) # 阈值调整

w_out = w_out + delta_w_out

# --隐层权值和阈值的调节

for i in range(4):

# 权值调整

delta_w_mid[:, i] = yita * (1 - out_in[i] * out_in[i]) * w_out[i] * (1 - res * res) * (real - res) * net_in

# 阈值调整

delta_w_mid[2, i] = -yita * (1 - out_in[i] * out_in[i]) * w_out[i] * (1 - res * res) * (real - res)

w_mid = w_mid + delta_w_mid

Err[it] = err.mean()

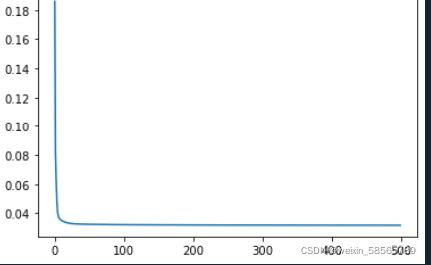

plt.plot(Err)

plt.show()

# 存储预测误差

err_te = np.zeros([ 100 ])

# 预测样本len(data_te)个

for j in range( 100 ):

net_in[:2] = data_te[j, :2] # 存储数据

real = data_te[j, 2] # 真实结果

# net_in和w_mid的相乘过程

for i in range(4):

# 输入层到隐层的传输过程

out_in[i] = tanh(sum(net_in*w_mid[:, i]))

res = tanh(sum(out_in*w_out)) # 网络预测结果输出

err_te[j] = abs(real-res) # 预测误差

print('res:', res, ' real:', real)

plt.plot(err_te)

plt.show()

if "__main__" == __name__:

# 1.读取样本

data_tr = pd.read_csv("D:\\人工智能\\3.3 data_tr.txt")

data_te = pd.read_csv("D:\\人工智能\\3.3 data_te.txt")

BP(data_tr, data_te, maxiter=500)3.用ReLU函数激活:

Its derivative is f'(x) = 1 for x > 0 and f'(x) = 0 for x <= 0

This version needs a very low learning rate; I set it to 0.01.
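The listing in this section calls ReLUd on res, the output of ReLU, rather than on the weighted sum that went into it. That gives the same result, because ReLU(x) is positive exactly when x is positive. The check below is a small sketch with made-up sample values that confirms this.

```python
import numpy as np

def ReLU(x):
    return np.where(x > 0, x, 0)

def ReLUd(x):
    return np.where(x > 0, 1, 0)

x = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])  # weighted sums before activation
y = ReLU(x)                                 # activations
from_input = ReLUd(x)                       # derivative evaluated on the input
from_output = ReLUd(y)                      # derivative evaluated on the output

print(from_input, from_output)
```

Unlike sigmoid and tanh, the ReLU output is not bounded, so the corrections grow with the activations themselves; this is a plausible reason, though not one stated in the original, why a smaller learning rate is needed here.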

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

def ReLU(x):
    return np.where(x > 0, x, 0)

def ReLUd(x):
    return np.where(x > 0, 1, 0)

def BP(data_tr, data_te, maxiter=2000):
    data_tr, data_te = np.array(data_tr), np.array(data_te)
    net_in = np.array([0.0, 0, -1])  # two inputs plus a fixed -1 input for the threshold
    out_in = np.array([0.0, 0, 0, 0, -1])  # input to the output layer, i.e. the hidden-layer output
    w_mid = np.random.rand(3, 4)  # hidden-layer weights & thresholds
    w_out = np.random.rand(5)  # output-layer weights & threshold
    delta_w_out = np.zeros([5])  # corrections to the output-layer weights & threshold
    delta_w_mid = np.zeros([3,4])  # corrections to the hidden-layer weights & thresholds
    yita = 0.01  # η: learning rate
    Err = np.zeros([maxiter])  # mean error over the training set, one entry per iteration
    # 1. number of passes over the whole training set
    for it in range(maxiter):
        # error of each sample
        err = np.zeros([len(data_tr)])
        # 2. one pass over the training set
        for j in range(len(data_tr)):
            net_in[:2] = data_tr[j, :2]  # first two attributes of the current sample
            real = data_tr[j, 2]
            # 3. train on the current sample
            for i in range(4):
                out_in[i] = ReLU(sum(net_in*w_mid[:, i]))  # compute the input to the output layer
            res = ReLU(sum(out_in * w_out))  # network output for this sample
            err[j] = abs(real - res)
            # -- first adjust the output-layer weights and threshold
            delta_w_out = yita*(real-res)*out_in*ReLUd(res)  # weight adjustment
            delta_w_out[4] = -yita*(real-res)*ReLUd(res)  # threshold adjustment
            w_out = w_out + delta_w_out
            # -- then adjust the hidden-layer weights and thresholds
            for i in range(4):
                # ReLUd(out_in[i]) is the hidden unit's own derivative,
                # matching the corresponding factor in the sigmoid and tanh versions
                # weight adjustment
                delta_w_mid[:, i] = yita * ReLUd(out_in[i]) * w_out[i] * ReLUd(res) * (real - res) * net_in
                # threshold adjustment
                delta_w_mid[2, i] = -yita * ReLUd(out_in[i]) * w_out[i] * ReLUd(res) * (real - res)
            w_mid = w_mid + delta_w_mid
        Err[it] = err.mean()
    plt.plot(Err)
    plt.show()
    # prediction errors
    err_te = np.zeros([ len(data_te) ])
    # predict the len(data_te) test samples
    for j in range( len(data_te) ):
        net_in[:2] = data_te[j, :2]  # load the sample
        real = data_te[j, 2]  # true result
        # multiply net_in by w_mid
        for i in range(4):
            # propagate from the input layer to the hidden layer
            out_in[i] = ReLU(sum(net_in*w_mid[:, i]))
        res = ReLU(sum(out_in*w_out))  # network prediction
        err_te[j] = abs(real-res)  # prediction error
        print('res:', res, ' real:', real)
    plt.plot(err_te)
    plt.show()

if "__main__" == __name__:
    # 1. read the samples
    data_tr = pd.read_csv("D:\\人工智能\\3.3 data_tr.txt")
    data_te = pd.read_csv("D:\\人工智能\\3.3 data_te.txt")
    BP(data_tr, data_te, maxiter=2000)

To resolve the nan problem, see the CSDN blog post "解决输出为nan的问题" on Tchunren's blog.
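Independently of that post, two generic guards are easy to add to an update loop like the ones above: limit the size of each correction, and stop as soon as a weight is no longer finite. The helper below is a minimal sketch; `safe_update` and `max_step` are hypothetical names and the limit of 1.0 is an arbitrary assumption, not a value from the original or from the linked post.

```python
import numpy as np

def safe_update(w, delta, max_step=1.0):
    """Clip the correction, apply it, and fail fast if the result is not finite."""
    new_w = w + np.clip(delta, -max_step, max_step)
    if not np.all(np.isfinite(new_w)):
        raise FloatingPointError("weights became inf or nan; try a smaller learning rate")
    return new_w

# Usage with made-up numbers: the first correction is clipped from 10.0 to 1.0.
w = np.array([0.5, -0.5])
out = safe_update(w, np.array([10.0, -0.2]))
print(out)
```

In the listings this would replace lines such as `w_out = w_out + delta_w_out` with `w_out = safe_update(w_out, delta_w_out)`. Clipping changes the training dynamics, so lowering the learning rate remains the first thing to try.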