VINS-Mono学习(四)——回环检测与重定位

目录

1、闭环检测常用方法有哪些?

2、ORB SLAM2中Loop Closing的具体实现流程是怎样的?

3、VINS回环检测与重定位、四自由度位姿图优化

3.1 第一部分:回环检测与重定位

3.1.1 回环检测(只对关键帧)

3.1.2 回环候选帧之间的特征匹配

3.1.3 紧耦合重定位

3.2 第二部分:全局位姿图优化

3.3 代码解析

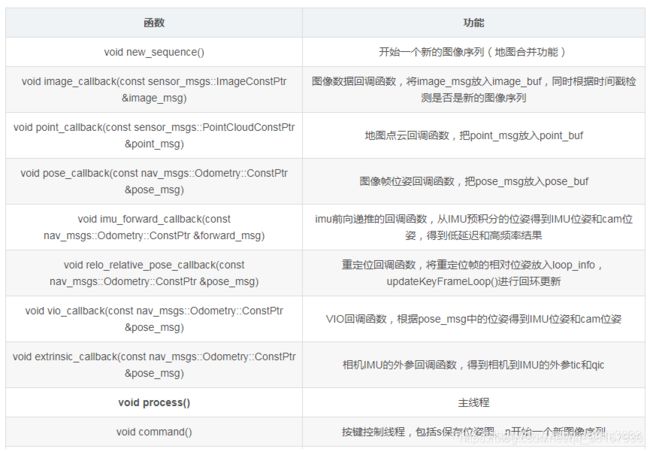

3.3.1 pose_graph_node.cpp

3.3.2 process()函数

3.3.3 pose_graph.h

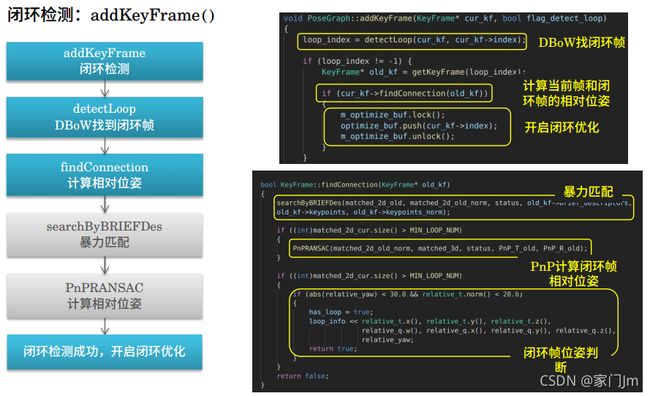

3.3.4 addKeyFrame()添加关键帧,进行回环检测与闭环

3.3.5 detectLoop()回环检测返回候选帧索引

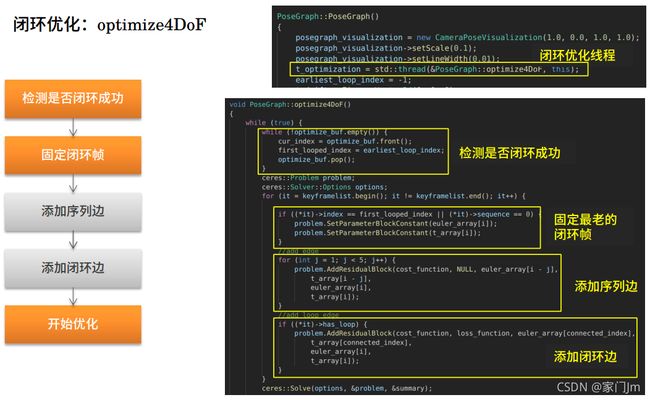

3.3.6 optimize4DoF()位姿图优化

3.3.7 keyframe.h

参考文章:

![]()

在正式开始学习VINS之前,先来看几个SLAM面试中的小问题:

1、闭环检测常用方法有哪些?

1. ORB SLAM中采用的是词袋模型进行闭环检测筛选出候选帧,再通过求解Sim3判断最合适的关键帧。

- Sim3:使用3对匹配点来进行相似变换(similarity transformation)的求解,进而解出两个坐标系之间的旋转矩阵、平移向量和尺度。

2. LSD SLAM中的闭环检测主要是根据视差、关键帧连接关系,找出候选帧,然后对每个候选帧和测试的关键帧之间进行双向Sim3跟踪,如果求解出的两个李代数满足马氏距离在一定范围内,则认为闭环成功。

3. 点云上的回环检测用的是scan-context。

4. RGB-D SLAM 回环检测,回环的本质就是识别曾经到过的地方,最简单的回环检测策略,就是把新来的关键帧与之前所有的关键帧进行比较,不过这样会导致越往后,需要比较的帧越多。所以,稍微快速一点的方法是在过去的帧里随机挑选一些,与当前帧进行比较。更进一步的,也可以用图像处理/模式识别的方法计算图像间的相似性,对相似的图像进行检测。

RGB-D 中的回环检测策略流程概括如下:

- 初始化关键帧序列:F,并将第一帧

放入F。

放入F。 - 对于新来的一帧I,计算F中最后一帧与I的运动,并估计该运动的大小e。有以下几种可能:

- 若e>

,说明运动太大,可能是计算错误,丢弃该帧;

,说明运动太大,可能是计算错误,丢弃该帧; - 若没有匹配上(match太少),说明该帧图像质量不高,丢弃;

- 若e<

,说明离前一个关键帧很近,同样丢弃;

,说明离前一个关键帧很近,同样丢弃; - 剩下的情况,只有是特征匹配成功的,运动估计正确,同时离上一个关键帧有一定距离,则把I作为新的关键帧,进入回环检测程序;

- 若e>

- 近距离回环:匹配I与F末尾m个关键帧,匹配成功时,在图里增加一条边。

- 随机回环:随机在F里取n个帧,与I进行匹配。若匹配上(这里说的匹配是根据两帧内点数量进行判断,内点数量少于阈值则匹配失败;而内点数量inliers计算来自两帧之间cv::solvePnPRansac函数最后一个参数),往图里增加一条边。

- 将I放在F末尾。若有新的数据,则回2;若无,则进行优化与地图拼接。

总结来说的话,就是根据关键帧进行近距离回环检测和随机回环检测,看当前帧能否与历史关键帧进行匹配,匹配成功就使用g2o添加边,然后进行全局BA位姿优化。

6. 如下,ORB-SLAM2中的回环检测。

2、ORB SLAM2中Loop Closing的具体实现流程是怎样的?

①首先是通过回环检测(Bow得分)和共视关系检查从所有关键帧中筛选出一组和当前帧有可能形成闭环的候选帧;

②然后利用相似求解器Sim3Solver求解出候选帧与当前帧之间的相似变换(注意这里是单目相似变换,而双目或者RGBD是刚体变换),利用相似变换找出更多的匹配地图点;

③然后进行闭环位姿优化(对应下面的回答),如果优化结果较好的话就不再判断其他候选帧。

④下一步就是闭环矫正,通过得出来的相似变换对当前帧进行位姿调整并且传播到与当前帧相连的关键帧,回环两侧的关键帧完成对齐,然后利用调整过的位姿更新这些相连关键帧对应的地图点,同时在Covisibility Graph里面增加闭环边,然后进行Essential Graph的优化(即全局位姿优化),当前帧与闭环匹配帧之间的边不进行优化,

⑤最后再来一个全局BA优化即完成了Loop Closing的全部流程。

相关优化方法如下:

闭环位姿优化:当检测到闭环时,闭环连接的两个关键帧的位姿需要通过Sim3/SE3优化(以使得其尺度一致)。优化求解两帧之间的相似变换矩阵,使得二维对应点(feature)的投影误差最小。

![]()

全局位姿优化:这个优化就相当于《视觉SLAM十四讲》中的Pose Graph,值得注意的是如果是单目的话这里优化的是Sim3,如果是双目或者RGBD的话这里优化的SE3,残差定义为:![]() ,ORB SLAM2中优化的对象是Essential Graph,Essential Graph指的是所有的关键帧顶点,但是优化边大大减少,包括spanning tree(生成树)和共视权重θ>100的边,以及闭环连接边,闭环调整CorrectLoop过程中的优化。

,ORB SLAM2中优化的对象是Essential Graph,Essential Graph指的是所有的关键帧顶点,但是优化边大大减少,包括spanning tree(生成树)和共视权重θ>100的边,以及闭环连接边,闭环调整CorrectLoop过程中的优化。

![]()

全局BA优化:用于单目初始化的CreateInitialMapMonocular函数以及闭环优化的RunGlobalBundleAdjustment函数(在闭环结束前新开一个线程,进行全局优化。

![]()

3、VINS回环检测与重定位、四自由度位姿图优化

下面正式开始学习VINS代码中的回环检测部分。

回环检测的关键就是如何有效检测出相机曾经经过同一个地方,这样可以避免较大的累积误差,使得当前帧和之前的某一帧迅速建立约束,形成新的较小的累积误差。 由于回环检测提供了当前数据与所有历史数据的关联,在跟踪算法丢失后,还可以利用重定位。

论文中主要分为两部分:回环检测与重定位、4-DOF的位姿图优化。

第一部分主要是为了通过回环检测找到当前帧和候选帧的联系,并通过简单的紧耦合重定位将局部滑动窗口移动与过去的位姿对齐。

第二部分是为了保证基于重定位结果对过去的所有位姿进行全局优化。

3.1 第一部分:回环检测与重定位

VINS重定位模块主要包含回环检测,回环候选帧之间的特征匹配,紧耦合重定位三个部分。

3.1.1 回环检测(只对关键帧)

1. 采用DBow2词袋位置识别方法进行回环检测。经过时间空间一致性检验后,DBoW2返回回环检测候选帧。

2. 除了用单目VIO的角点特征外,还添加了500个角点并使用BRIEF描述子,描述子用作在视觉词袋在数据库里进行搜索。这些额外的角点能用来实现更好的回环检测。

3. VINS中只保留所有用于特征检索的BRIEF描述子,丢弃原始图像以减小内存。

4. 单目VIO可以观测到滚动和俯仰角,VINS并不需要依赖旋转不变性。

3.1.2 回环候选帧之间的特征匹配

1、检测到回环时,通过BRIEF描述子匹配找到对应关系。但是直接的描述子匹配会导致很多外点。 2、本文提出两步几何剔除法:

1)2D-2D: 使用RANSAC进行F矩阵测试,

2)3D-2D: 使用RANSAC进行PnP,基于已知的滑动窗特征点的3D位置,和回路闭合候选处图像的2D观测(像素坐标)。 当内点超过一定阈值时,我们将该候选帧视为正确的循环检测并执行重定位。

3.1.3 紧耦合重定位

1、重定位过程使单目VIO维持当前滑动窗口与过去的位姿图对齐。

2、将所有回环帧的位姿作为常量,利用所有IMU测量值、局部视觉测量和从回环中提取特征对应值,共同优化滑动窗口。

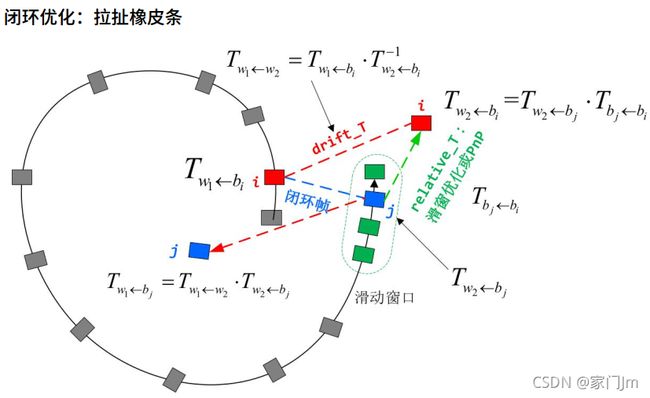

3.2 第二部分:全局位姿图优化

A、位姿图中添加关键帧

B、4自由度位姿图优化

C、位姿图管理

![]()

相关代码都在pose_graph文件中,主要分为三个程序,分别为:

- keyframe.cpp/.h :构建关键帧类、描述子计算、匹配关键帧与回环帧。

- pose_graph.cpp/.h :位姿图的建立与图优化、回环检测与闭环。

- pose_graph_node.cpp :ROS 节点函数、回调函数、主线程。

3.3 代码解析

3.3.1 pose_graph_node.cpp

主要分为7个回调函数以及主线程process()函数。

7个回调函数:包括关键帧的位姿(keyframe_pose)、重定位位姿(relo_relative_pose)、相机到IMU的外参估计(extrinsic)、VIO里程计信息PQV(odometry)、关键帧中的3D点云(keyframe_point)、IMU传播值(imu_propagate)。

1、ROS初始化,设置句柄

2、从launch文件读取参数和参数文件config中的参数

3、如果需要进行回环检测则读取词典和BRIEF描述子的模板文件,同时读取

4、config中的其他参数、设置带回环的结果输出路径。

5、加载先前位姿图 loadPoseGraph()

6、订阅各个topic并执行各自回调函数

7、发布/pose_graph的topic

8、设置主线程 process() 和键盘控制线程 command()

int main(int argc, char **argv)

{

// 1.ROS初始化,设置句柄

ros::init(argc, argv, "pose_graph");

ros::NodeHandle n("~");

posegraph.registerPub(n);

// 2.读取参数

n.getParam("visualization_shift_x", VISUALIZATION_SHIFT_X);

n.getParam("visualization_shift_y", VISUALIZATION_SHIFT_Y);

n.getParam("skip_cnt", SKIP_CNT);

n.getParam("skip_dis", SKIP_DIS);

std::string config_file;

n.getParam("config_file", config_file);

cv::FileStorage fsSettings(config_file, cv::FileStorage::READ);

if(!fsSettings.isOpened())

{

std::cerr << "ERROR: Wrong path to settings" << std::endl;

}

double camera_visual_size = fsSettings["visualize_camera_size"];

cameraposevisual.setScale(camera_visual_size);

cameraposevisual.setLineWidth(camera_visual_size / 10.0);

LOOP_CLOSURE = fsSettings["loop_closure"];

std::string IMAGE_TOPIC;

int LOAD_PREVIOUS_POSE_GRAPH;

//3.如果需要进行回环检测则读取词典和BRIEF描述子的模板文件,同时读取config中的其他参数、设置带回环的结果输出路径。

if (LOOP_CLOSURE)

{

ROW = fsSettings["image_height"];

COL = fsSettings["image_width"];

// 3.1读取字典

std::string pkg_path = ros::package::getPath("pose_graph");

string vocabulary_file = pkg_path + "/../support_files/brief_k10L6.bin";

cout << "vocabulary_file" << vocabulary_file << endl;

posegraph.loadVocabulary(vocabulary_file);

// 3.2读取BRIEF描述子的模板文件

BRIEF_PATTERN_FILE = pkg_path + "/../support_files/brief_pattern.yml";

cout << "BRIEF_PATTERN_FILE" << BRIEF_PATTERN_FILE << endl;

m_camera = camodocal::CameraFactory::instance()->generateCameraFromYamlFile(config_file.c_str());

fsSettings["image_topic"] >> IMAGE_TOPIC;

fsSettings["pose_graph_save_path"] >> POSE_GRAPH_SAVE_PATH;

fsSettings["output_path"] >> VINS_RESULT_PATH;

fsSettings["save_image"] >> DEBUG_IMAGE;

VISUALIZE_IMU_FORWARD = fsSettings["visualize_imu_forward"];

LOAD_PREVIOUS_POSE_GRAPH = fsSettings["load_previous_pose_graph"];

FAST_RELOCALIZATION = fsSettings["fast_relocalization"];

VINS_RESULT_PATH = VINS_RESULT_PATH + "/vins_result_loop.csv";

std::ofstream fout(VINS_RESULT_PATH, std::ios::out);

fout.close();

fsSettings.release();

// 3.3加载先前的位姿图

if (LOAD_PREVIOUS_POSE_GRAPH)

{

printf("load pose graph\n");

m_process.lock();

posegraph.loadPoseGraph();

m_process.unlock();

printf("load pose graph finish\n");

load_flag = 1;

}

else

{

printf("no previous pose graph\n");

load_flag = 1;

}

}

fsSettings.release();

// 4.订阅话题topic并执行各自回调函数

ros::Subscriber sub_imu_forward = n.subscribe("/vins_estimator/imu_propagate", 2000, imu_forward_callback);

ros::Subscriber sub_vio = n.subscribe("/vins_estimator/odometry", 2000, vio_callback);

ros::Subscriber sub_image = n.subscribe(IMAGE_TOPIC, 2000, image_callback);

ros::Subscriber sub_pose = n.subscribe("/vins_estimator/keyframe_pose", 2000, pose_callback);

ros::Subscriber sub_extrinsic = n.subscribe("/vins_estimator/extrinsic", 2000, extrinsic_callback);

ros::Subscriber sub_point = n.subscribe("/vins_estimator/keyframe_point", 2000, point_callback);

ros::Subscriber sub_relo_relative_pose = n.subscribe("/vins_estimator/relo_relative_pose", 2000, relo_relative_pose_callback);

// 5.发布的话题topic

pub_match_img = n.advertise("match_image", 1000);

pub_camera_pose_visual = n.advertise("camera_pose_visual", 1000);

pub_key_odometrys = n.advertise("key_odometrys", 1000);

pub_vio_path = n.advertise("no_loop_path", 1000);

pub_match_points = n.advertise("match_points", 100);

// 6. 创建两个线程,process 和 command

std::thread measurement_process;

std::thread keyboard_command_process;

//pose graph主线程

measurement_process = std::thread(process);

//键盘操作的线程

keyboard_command_process = std::thread(command);

ros::spin();

return 0;

} 3.3.2 process()函数

1、先判断是否需要回环检测

2、得到具有相同时间戳的pose_msg、image_msg、point_msg

3、构建pose_graph中用到的关键帧,然后每隔SKIP_CNT,将将距上一关键帧距离(平移向量的模)超过SKIP_DIS的图像创建为关键帧。其中,最核心的函数是KeyFrame类以及addKeyFrame()函数。

//主线程

void process()

{

// 1.先判断是否需要回环检测

if (!LOOP_CLOSURE)

return;

while (true)// 不断循环

{

// 三个参数图像、点云、VIO位姿

sensor_msgs::ImageConstPtr image_msg = NULL;

sensor_msgs::PointCloudConstPtr point_msg = NULL;

nav_msgs::Odometry::ConstPtr pose_msg = NULL;

// find out the messages with same time stamp

// 2.得到具有相同时间戳的pose_msg、image_msg、point_msg

m_buf.lock();

if(!image_buf.empty() && !point_buf.empty() && !pose_buf.empty())// 首先三个都不为空

{

if (image_buf.front()->header.stamp.toSec() > pose_buf.front()->header.stamp.toSec())

{

pose_buf.pop();

printf("throw pose at beginning\n");

}

else if (image_buf.front()->header.stamp.toSec() > point_buf.front()->header.stamp.toSec())

{

point_buf.pop();

printf("throw point at beginning\n");

}

else if (image_buf.back()->header.stamp.toSec() >= pose_buf.front()->header.stamp.toSec()

&& point_buf.back()->header.stamp.toSec() >= pose_buf.front()->header.stamp.toSec())

{

pose_msg = pose_buf.front();

pose_buf.pop();

while (!pose_buf.empty())

pose_buf.pop();

while (image_buf.front()->header.stamp.toSec() < pose_msg->header.stamp.toSec())

image_buf.pop();

image_msg = image_buf.front();

image_buf.pop();

while (point_buf.front()->header.stamp.toSec() < pose_msg->header.stamp.toSec())

point_buf.pop();

point_msg = point_buf.front();

point_buf.pop();

}

}

m_buf.unlock();

// 3.构建pose_graph中用到的关键帧,然后每隔SKIP_CNT,将将距上一关键帧距离(平移向量的模)超过SKIP_DIS的图像创建为关键帧。

if (pose_msg != NULL)

{

//printf(" pose time %f \n", pose_msg->header.stamp.toSec());

//printf(" point time %f \n", point_msg->header.stamp.toSec());

//printf(" image time %f \n", image_msg->header.stamp.toSec());

// skip first few

// 3.1 剔除最开始的SKIP_FIRST_CNT帧,

if (skip_first_cnt < SKIP_FIRST_CNT)

{

skip_first_cnt++;

continue;

}

// 3.2每隔SKIP_CNT帧进行一次 SKIP_CNT=0

if (skip_cnt < SKIP_CNT)

{

skip_cnt++;

continue;

}

else

{

skip_cnt = 0;

}

// 3.3ROS图像转为Opencv类型

cv_bridge::CvImageConstPtr ptr;

if (image_msg->encoding == "8UC1")

{

sensor_msgs::Image img;

img.header = image_msg->header;

img.height = image_msg->height;

img.width = image_msg->width;

img.is_bigendian = image_msg->is_bigendian;

img.step = image_msg->step;

img.data = image_msg->data;

img.encoding = "mono8";

ptr = cv_bridge::toCvCopy(img, sensor_msgs::image_encodings::MONO8);

}

else

ptr = cv_bridge::toCvCopy(image_msg, sensor_msgs::image_encodings::MONO8);

cv::Mat image = ptr->image;

// build keyframe

Vector3d T = Vector3d(pose_msg->pose.pose.position.x,

pose_msg->pose.pose.position.y,

pose_msg->pose.pose.position.z);

Matrix3d R = Quaterniond(pose_msg->pose.pose.orientation.w,

pose_msg->pose.pose.orientation.x,

pose_msg->pose.pose.orientation.y,

pose_msg->pose.pose.orientation.z).toRotationMatrix();

// 3.4 将距上一关键帧距离(平移向量的模)超过SKIP_DIS的图像创建为关键帧

if((T - last_t).norm() > SKIP_DIS)

{

vector point_3d;

vector point_2d_uv;

vector point_2d_normal;

vector point_id;

for (unsigned int i = 0; i < point_msg->points.size(); i++)

{

cv::Point3f p_3d;

p_3d.x = point_msg->points[i].x;

p_3d.y = point_msg->points[i].y;

p_3d.z = point_msg->points[i].z;

point_3d.push_back(p_3d);

cv::Point2f p_2d_uv, p_2d_normal;

double p_id;

p_2d_normal.x = point_msg->channels[i].values[0];

p_2d_normal.y = point_msg->channels[i].values[1];

p_2d_uv.x = point_msg->channels[i].values[2];

p_2d_uv.y = point_msg->channels[i].values[3];

p_id = point_msg->channels[i].values[4];

point_2d_normal.push_back(p_2d_normal);

point_2d_uv.push_back(p_2d_uv);

point_id.push_back(p_id);

//printf("u %f, v %f \n", p_2d_uv.x, p_2d_uv.y);

}

// 3.5 创建为关键帧类型并加入到posegraph中

KeyFrame* keyframe = new KeyFrame(pose_msg->header.stamp.toSec(), frame_index, T, R, image,

point_3d, point_2d_uv, point_2d_normal, point_id, sequence);

m_process.lock();

start_flag = 1;

//在posegraph中添加关键帧,flag_detect_loop=1回环检测

posegraph.addKeyFrame(keyframe, 1);

m_process.unlock();

frame_index++;

last_t = T;

}

}

// 4.休眠5ms

std::chrono::milliseconds dura(5);

std::this_thread::sleep_for(dura);

}

} 3.3.3 pose_graph.h

接下来将主要讲解两个函数:addKeyFrame()以及detectLoop()。

3.3.4 addKeyFrame()添加关键帧,进行回环检测与闭环

1、建一个新的图像序列

2、getVioPose()获取当前帧的位姿vio_P_cur、vio_R_cur并更新 updateVioPose

3、detectLoop()回环检测,返回回环候选帧的索引loop_index

4、计算当前帧与回环帧的相对位姿,纠正当前帧位姿w_P_cur、w_R_cur;回环得到的位姿和VIO位姿之间的偏移量shift_r、shift_t;如果存在多个图像序列,则将所有图像序列都合并到世界坐标系下

5、获取VIO当前帧的位姿P、R,根据偏移量得到实际位姿并更新 updatePose当前帧的位姿P、R

6、发布path[sequence_cnt]

7、保存闭环轨迹到VINS_RESULT_PATH

void PoseGraph::addKeyFrame(KeyFrame* cur_kf, bool flag_detect_loop)

{

//shift to base frame

Vector3d vio_P_cur;

Matrix3d vio_R_cur;

// 1.建一个新的图像序列

if (sequence_cnt != cur_kf->sequence)

{

sequence_cnt++;

sequence_loop.push_back(0);

w_t_vio = Eigen::Vector3d(0, 0, 0);

w_r_vio = Eigen::Matrix3d::Identity();

m_drift.lock();

t_drift = Eigen::Vector3d(0, 0, 0);

r_drift = Eigen::Matrix3d::Identity();

m_drift.unlock();

}

// 2.getVioPose()获取当前帧的位姿vio_P_cur、vio_R_cur并更新updateVioPose

cur_kf->getVioPose(vio_P_cur, vio_R_cur);

vio_P_cur = w_r_vio * vio_P_cur + w_t_vio;

vio_R_cur = w_r_vio * vio_R_cur;

cur_kf->updateVioPose(vio_P_cur, vio_R_cur);

cur_kf->index = global_index;

global_index++;

int loop_index = -1;

if (flag_detect_loop)

{

TicToc tmp_t;

// 3.detectLoop()回环检测,返回回环候选帧的索引loop_index

loop_index = detectLoop(cur_kf, cur_kf->index);

}

else

{

addKeyFrameIntoVoc(cur_kf);

}

// 4.存在回环候选帧

if (loop_index != -1)

{

//printf(" %d detect loop with %d \n", cur_kf->index, loop_index);

// 4.1 获取回环候选帧

KeyFrame* old_kf = getKeyFrame(loop_index);

// 当前帧与回环候选帧进行描述子匹配,如果成功则确定存在回环

if (cur_kf->findConnection(old_kf))

{

//earliest_loop_index为最早的回环候选帧

if (earliest_loop_index > loop_index || earliest_loop_index == -1)

earliest_loop_index = loop_index;

// 4.2 计算当前帧与回环帧的相对位姿,纠正当前帧位姿w_P_cur、w_R_cur

Vector3d w_P_old, w_P_cur, vio_P_cur;

Matrix3d w_R_old, w_R_cur, vio_R_cur;

old_kf->getVioPose(w_P_old, w_R_old);

cur_kf->getVioPose(vio_P_cur, vio_R_cur);

//获取当前帧与回环帧的相对位姿relative_q、relative_t

Vector3d relative_t;

Quaterniond relative_q;

relative_t = cur_kf->getLoopRelativeT();

relative_q = (cur_kf->getLoopRelativeQ()).toRotationMatrix();

//重新计算当前帧位姿w_P_cur、w_R_cur

w_P_cur = w_R_old * relative_t + w_P_old;

w_R_cur = w_R_old * relative_q;

//回环得到的位姿和VIO位姿之间的偏移量shift_r、shift_t

double shift_yaw;

Matrix3d shift_r;

Vector3d shift_t;

shift_yaw = Utility::R2ypr(w_R_cur).x() - Utility::R2ypr(vio_R_cur).x();

shift_r = Utility::ypr2R(Vector3d(shift_yaw, 0, 0));

shift_t = w_P_cur - w_R_cur * vio_R_cur.transpose() * vio_P_cur;

// shift vio pose of whole sequence to the world frame

// 4.3如果存在多个图像序列,则将所有图像序列都合并到世界坐标系下

if (old_kf->sequence != cur_kf->sequence && sequence_loop[cur_kf->sequence] == 0)

{

w_r_vio = shift_r;

w_t_vio = shift_t;

vio_P_cur = w_r_vio * vio_P_cur + w_t_vio;

vio_R_cur = w_r_vio * vio_R_cur;

cur_kf->updateVioPose(vio_P_cur, vio_R_cur);

list::iterator it = keyframelist.begin();

for (; it != keyframelist.end(); it++)

{

if((*it)->sequence == cur_kf->sequence)

{

Vector3d vio_P_cur;

Matrix3d vio_R_cur;

(*it)->getVioPose(vio_P_cur, vio_R_cur);

vio_P_cur = w_r_vio * vio_P_cur + w_t_vio;

vio_R_cur = w_r_vio * vio_R_cur;

(*it)->updateVioPose(vio_P_cur, vio_R_cur);

}

}

sequence_loop[cur_kf->sequence] = 1;

}

// 4.4 将当前帧放入优化队列中

m_optimize_buf.lock();

optimize_buf.push(cur_kf->index);

m_optimize_buf.unlock();

}

}

m_keyframelist.lock();

Vector3d P;

Matrix3d R;

// 5.获取VIO当前帧的位姿P、R,根据偏移量得到实际位姿

cur_kf->getVioPose(P, R);

P = r_drift * P + t_drift;

R = r_drift * R;

// 更新当前帧的位姿P、R

cur_kf->updatePose(P, R);

// 6.发布path[sequence_cnt]

Quaterniond Q{R};

geometry_msgs::PoseStamped pose_stamped;

pose_stamped.header.stamp = ros::Time(cur_kf->time_stamp);

pose_stamped.header.frame_id = "world";

pose_stamped.pose.position.x = P.x() + VISUALIZATION_SHIFT_X;

pose_stamped.pose.position.y = P.y() + VISUALIZATION_SHIFT_Y;

pose_stamped.pose.position.z = P.z();

pose_stamped.pose.orientation.x = Q.x();

pose_stamped.pose.orientation.y = Q.y();

pose_stamped.pose.orientation.z = Q.z();

pose_stamped.pose.orientation.w = Q.w();

path[sequence_cnt].poses.push_back(pose_stamped);

path[sequence_cnt].header = pose_stamped.header;

// 7.保存闭环轨迹到VINS_RESULT_PATH

if (SAVE_LOOP_PATH)

{

ofstream loop_path_file(VINS_RESULT_PATH, ios::app);

loop_path_file.setf(ios::fixed, ios::floatfield);

loop_path_file.precision(0);

loop_path_file << cur_kf->time_stamp * 1e9 << ",";

loop_path_file.precision(5);

loop_path_file << P.x() << ","

<< P.y() << ","

<< P.z() << ","

<< Q.w() << ","

<< Q.x() << ","

<< Q.y() << ","

<< Q.z() << ","

<< endl;

loop_path_file.close();

}

// 8.draw local connection

if (SHOW_S_EDGE)

{

list::reverse_iterator rit = keyframelist.rbegin();

for (int i = 0; i < 4; i++)

{

if (rit == keyframelist.rend())

break;

Vector3d conncected_P;

Matrix3d connected_R;

if((*rit)->sequence == cur_kf->sequence)

{

(*rit)->getPose(conncected_P, connected_R);

posegraph_visualization->add_edge(P, conncected_P);

}

rit++;

}

}

//当前帧与其回环帧连线

if (SHOW_L_EDGE)

{

if (cur_kf->has_loop)

{

//printf("has loop \n");

KeyFrame* connected_KF = getKeyFrame(cur_kf->loop_index);

Vector3d connected_P,P0;

Matrix3d connected_R,R0;

connected_KF->getPose(connected_P, connected_R);

//cur_kf->getVioPose(P0, R0);

cur_kf->getPose(P0, R0);

if(cur_kf->sequence > 0)

{

//printf("add loop into visual \n");

posegraph_visualization->add_loopedge(P0, connected_P + Vector3d(VISUALIZATION_SHIFT_X, VISUALIZATION_SHIFT_Y, 0));

}

}

}

//posegraph_visualization->add_pose(P + Vector3d(VISUALIZATION_SHIFT_X, VISUALIZATION_SHIFT_Y, 0), Q);

keyframelist.push_back(cur_kf);

publish();

m_keyframelist.unlock();

} 3.3.5 detectLoop()回环检测返回候选帧索引

1、query()查询字典数据库,得到与每一帧的相似度评分ret 2、add()添加当前关键帧到字典数据库中。

3、hconcat()通过相似度评分判断是否存在回环候选帧。

4、对于索引值大于50的关键帧才考虑进行回环,即前50帧不回环,返回评分大于0.015的最早的关键帧索引min_index。

//回环检测返回候选帧索引

int PoseGraph::detectLoop(KeyFrame* keyframe, int frame_index)

{

// put image into image_pool; for visualization

cv::Mat compressed_image;

if (DEBUG_IMAGE)

{

int feature_num = keyframe->keypoints.size();

cv::resize(keyframe->image, compressed_image, cv::Size(376, 240));

putText(compressed_image, "feature_num:" + to_string(feature_num), cv::Point2f(10, 10), CV_FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(255));

image_pool[frame_index] = compressed_image;

}

TicToc tmp_t;

QueryResults ret;

TicToc t_query;

// 1.查询字典数据库,得到与每一帧的相似度评分ret

db.query(keyframe->brief_descriptors, ret, 4, frame_index - 50);

//printf("query time: %f", t_query.toc());

//cout << "Searching for Image " << frame_index << ". " << ret << endl;

// 2.添加当前关键帧到字典数据库中

TicToc t_add;

db.add(keyframe->brief_descriptors);

//printf("add feature time: %f", t_add.toc());

// ret[0] is the nearest neighbour's score. threshold change with neighour score

// 3.通过相似度评分判断是否存在回环候选帧

bool find_loop = false;

cv::Mat loop_result;

if (DEBUG_IMAGE)

{

loop_result = compressed_image.clone();

if (ret.size() > 0)

putText(loop_result, "neighbour score:" + to_string(ret[0].Score), cv::Point2f(10, 50), CV_FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255));

}

// visual loop result

if (DEBUG_IMAGE)

{

for (unsigned int i = 0; i < ret.size(); i++)

{

int tmp_index = ret[i].Id;

auto it = image_pool.find(tmp_index);

cv::Mat tmp_image = (it->second).clone();

putText(tmp_image, "index: " + to_string(tmp_index) + "loop score:" + to_string(ret[i].Score), cv::Point2f(10, 50), CV_FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255));

cv::hconcat(loop_result, tmp_image, loop_result);

}

}

//确保与相邻帧具有好的相似度评分

if (ret.size() >= 1 &&ret[0].Score > 0.05)

for (unsigned int i = 1; i < ret.size(); i++)

{

//if (ret[i].Score > ret[0].Score * 0.3)

//评分大于0.015则认为是回环候选帧

if (ret[i].Score > 0.015)

{

find_loop = true;

int tmp_index = ret[i].Id;

if (DEBUG_IMAGE && 0)

{

auto it = image_pool.find(tmp_index);

cv::Mat tmp_image = (it->second).clone();

putText(tmp_image, "loop score:" + to_string(ret[i].Score), cv::Point2f(10, 50), CV_FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(255));

cv::hconcat(loop_result, tmp_image, loop_result);

}

}

}

/*

if (DEBUG_IMAGE)

{

cv::imshow("loop_result", loop_result);

cv::waitKey(20);

}

*/

// 4.对于索引值大于50的关键帧才考虑进行回环,即前50帧不回环

//返回评分大于0.015的最早的关键帧索引min_index

if (find_loop && frame_index > 50)

{

int min_index = -1;

for (unsigned int i = 0; i < ret.size(); i++)

{

if (min_index == -1 || (ret[i].Id < min_index && ret[i].Score > 0.015))

min_index = ret[i].Id;

}

return min_index;

}

else

return -1;

}3.3.6 optimize4DoF()位姿图优化

//四自由度位姿图优化

void PoseGraph::optimize4DoF()

{

while(true)

{

int cur_index = -1;

int first_looped_index = -1;

//取出最新一个待优化帧作为当前帧

m_optimize_buf.lock();

while(!optimize_buf.empty())

{

cur_index = optimize_buf.front();

first_looped_index = earliest_loop_index;

optimize_buf.pop();

}

m_optimize_buf.unlock();

if (cur_index != -1)

{

printf("optimize pose graph \n");

TicToc tmp_t;

m_keyframelist.lock();

KeyFrame* cur_kf = getKeyFrame(cur_index);

int max_length = cur_index + 1;

// w^t_i w^q_i

double t_array[max_length][3];

Quaterniond q_array[max_length];

double euler_array[max_length][3];

double sequence_array[max_length];

ceres::Problem problem;

ceres::Solver::Options options;

options.linear_solver_type = ceres::SPARSE_NORMAL_CHOLESKY;

//options.minimizer_progress_to_stdout = true;

//options.max_solver_time_in_seconds = SOLVER_TIME * 3;

options.max_num_iterations = 5;

ceres::Solver::Summary summary;

ceres::LossFunction *loss_function;

loss_function = new ceres::HuberLoss(0.1);

//loss_function = new ceres::CauchyLoss(1.0);

ceres::LocalParameterization* angle_local_parameterization =

AngleLocalParameterization::Create();

list::iterator it;

int i = 0;

for (it = keyframelist.begin(); it != keyframelist.end(); it++)

{

//找到第一个回环候选帧,

//回环检测到帧以前的都略过

if ((*it)->index < first_looped_index)

continue;

(*it)->local_index = i;

Quaterniond tmp_q;

Matrix3d tmp_r;

Vector3d tmp_t;

(*it)->getVioPose(tmp_t, tmp_r);

tmp_q = tmp_r;

t_array[i][0] = tmp_t(0);

t_array[i][1] = tmp_t(1);

t_array[i][2] = tmp_t(2);

q_array[i] = tmp_q;

Vector3d euler_angle = Utility::R2ypr(tmp_q.toRotationMatrix());

euler_array[i][0] = euler_angle.x();

euler_array[i][1] = euler_angle.y();

euler_array[i][2] = euler_angle.z();

sequence_array[i] = (*it)->sequence;

problem.AddParameterBlock(euler_array[i], 1, angle_local_parameterization);

problem.AddParameterBlock(t_array[i], 3);

//回环检测到的帧参数设为固定

if ((*it)->index == first_looped_index || (*it)->sequence == 0)

{

problem.SetParameterBlockConstant(euler_array[i]);

problem.SetParameterBlockConstant(t_array[i]);

}

//add edge

//对于每个i, 只计算它之前五个的位置和yaw残差

for (int j = 1; j < 5; j++)

{

if (i - j >= 0 && sequence_array[i] == sequence_array[i-j])

{

Vector3d euler_conncected = Utility::R2ypr(q_array[i-j].toRotationMatrix());

Vector3d relative_t(t_array[i][0] - t_array[i-j][0], t_array[i][1] - t_array[i-j][1], t_array[i][2] - t_array[i-j][2]);

relative_t = q_array[i-j].inverse() * relative_t;

double relative_yaw = euler_array[i][0] - euler_array[i-j][0];

ceres::CostFunction* cost_function = FourDOFError::Create( relative_t.x(), relative_t.y(), relative_t.z(),

relative_yaw, euler_conncected.y(), euler_conncected.z());

problem.AddResidualBlock(cost_function, NULL, euler_array[i-j],

t_array[i-j],

euler_array[i],

t_array[i]);

}

}

//add loop edge

if((*it)->has_loop)// 如果检测到回环

{

//必须回环检测的帧号大于或者等于当前帧的回环检测匹配帧号

assert((*it)->loop_index >= first_looped_index);

int connected_index = getKeyFrame((*it)->loop_index)->local_index;

Vector3d euler_conncected = Utility::R2ypr(q_array[connected_index].toRotationMatrix());

Vector3d relative_t;

relative_t = (*it)->getLoopRelativeT();

double relative_yaw = (*it)->getLoopRelativeYaw();

ceres::CostFunction* cost_function = FourDOFWeightError::Create( relative_t.x(), relative_t.y(), relative_t.z(),

relative_yaw, euler_conncected.y(), euler_conncected.z());

problem.AddResidualBlock(cost_function, loss_function, euler_array[connected_index],

t_array[connected_index],

euler_array[i],

t_array[i]);

}

if ((*it)->index == cur_index)

break;

i++;

}

m_keyframelist.unlock();

ceres::Solve(options, &problem, &summary);

//std::cout << summary.BriefReport() << "\n";

//printf("pose optimization time: %f \n", tmp_t.toc());

/*

for (int j = 0 ; j < i; j++)

{

printf("optimize i: %d p: %f, %f, %f\n", j, t_array[j][0], t_array[j][1], t_array[j][2] );

}

*/

m_keyframelist.lock();

i = 0;

//根据优化后的参数更新参与优化的关键帧的位姿

for (it = keyframelist.begin(); it != keyframelist.end(); it++)

{

if ((*it)->index < first_looped_index)

continue;

Quaterniond tmp_q;

tmp_q = Utility::ypr2R(Vector3d(euler_array[i][0], euler_array[i][1], euler_array[i][2]));

Vector3d tmp_t = Vector3d(t_array[i][0], t_array[i][1], t_array[i][2]);

Matrix3d tmp_r = tmp_q.toRotationMatrix();

(*it)-> updatePose(tmp_t, tmp_r);

if ((*it)->index == cur_index)

break;

i++;

}

//根据当前帧的drift,更新全部关键帧位姿

Vector3d cur_t, vio_t;

Matrix3d cur_r, vio_r;

cur_kf->getPose(cur_t, cur_r);

cur_kf->getVioPose(vio_t, vio_r);

m_drift.lock();

yaw_drift = Utility::R2ypr(cur_r).x() - Utility::R2ypr(vio_r).x();

r_drift = Utility::ypr2R(Vector3d(yaw_drift, 0, 0));

t_drift = cur_t - r_drift * vio_t;

m_drift.unlock();

//cout << "t_drift " << t_drift.transpose() << endl;

//cout << "r_drift " << Utility::R2ypr(r_drift).transpose() << endl;

//cout << "yaw drift " << yaw_drift << endl;

it++;

for (; it != keyframelist.end(); it++)

{

Vector3d P;

Matrix3d R;

(*it)->getVioPose(P, R);

P = r_drift * P + t_drift;

R = r_drift * R;

(*it)->updatePose(P, R);

}

m_keyframelist.unlock();

updatePath();

}

std::chrono::milliseconds dura(2000);

std::this_thread::sleep_for(dura);

}

} 3.3.7 keyframe.h

主要有两个类:BriefExtractor和KeyFrame

第一个类BriefExtractor:通过Brief模板文件,对图像的关键点计算Brief描述子。

class BriefExtractor

{

public:

virtual void operator()(const cv::Mat &im, vector &keys, vector &descriptors) const;

BriefExtractor(const std::string &pattern_file);

DVision::BRIEF m_brief;

};

第二个类KeyFrame:构建关键帧,通过BRIEF描述子匹配关键帧和回环候选帧。

参考文章:

1、https://blog.csdn.net/hltt3838/article/details/105378326/?utm_medium=distribute.pc_relevant.none-task-blog-2~default~baidujs_title~default-0.base&spm=1001.2101.3001.4242 VINS-Mono 代码详细解读——回环检测与重定位、四自由度位姿图优化

2、https://blog.csdn.net/hltt3838/article/details/109454760 VINS-Mono 代码解析四、闭环检测和优化

3、https://blog.csdn.net/qq_41839222/article/details/87878550 VINS-Mono代码解读——回环检测与重定位 pose graph loop closing