OpenCV in Practice: Bank Card Number Recognition with Template Matching

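Series Contents

1. Image Reading, Channels, and Grayscale

2. Image Padding and Image Blending

3. Image Filtering

4. Image Thresholding

5. Erosion and Dilation

6. Image Gradients

7. Edge Detection

8. Contours and Contour Features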


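Preface

In the previous articles we introduced some OpenCV basics. In this article we put that knowledge to work on a practical case and learn how template matching is applied in OpenCV.

The demo video is titled: Card number detection based on OpenCV image processing (with source code).

If any of the techniques used here are unclear, refer to the series articles listed above. The full code is linked at the end.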

案例介绍

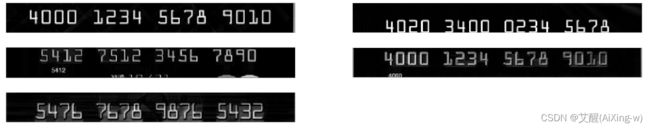

我们有若干个如下图的银行卡图片

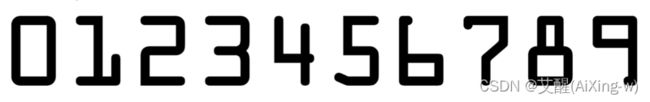

我们的目的是通过一些图像处理操作检测出银行卡中的卡号,除了银行卡图片外我们还有一个模板图片

模板图片是10个数字

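As before, we first define a helper function for displaying images:

import os
import cv2
import numpy as np
import matplotlib.pyplot as plt

def cv_show(img, name):
    # show the image in a window and wait for a key press before closing it
    cv2.imshow(name, img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()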

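To process all images uniformly, we also need a function that resizes an image to a given height while keeping its aspect ratio:

def img_resize(img, height):
    # scale factor that maps the original height to the target height
    h, w = img.shape[0], img.shape[1]
    r = h / height
    width = w / r
    # cv2.resize expects dsize as (width, height)
    img = cv2.resize(img, (int(width), int(height)))
    return img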

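Splitting the Template

1. Approach

- Read the template image

- Convert it to grayscale and binarize it

- Detect edges

- Compute the bounding rectangles

- Crop each digit using its bounding rectangle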

2. Getting the Edges

img = cv2.imread("./template/ocr_a_reference.png")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
# edge detection on the binary image (Canny thresholds 100/200 are an assumed choice)
cy = cv2.Canny(thresh, 100, 200)
# outer contours only, one per digit
contours, hierarchy = cv2.findContours(cy, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

3. Getting the Bounding Rectangles

Display the cropped results:

for i in range(len(contours)):
    x, y, w, h = cv2.boundingRect(contours[i])
    plt.subplot(3, 4, i + 1)
    plt.imshow(thresh[y:y+h, x:x+w], cmap=plt.cm.gray)
    plt.xticks([])
    plt.yticks([])
plt.show()

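import os

# cv2.imwrite does not create missing directories
os.makedirs('cuted_template', exist_ok=True)
# sort left to right so each file name equals its digit (0.jpg ... 9.jpg);
# the order returned by findContours is not guaranteed
for i, cnt in enumerate(sorted(contours, key=lambda c: cv2.boundingRect(c)[0])):
    x, y, w, h = cv2.boundingRect(cnt)
    cv2.imwrite(os.path.join('cuted_template', str(i) + '.jpg'), thresh[y:y+h, x:x+w])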

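Image Preprocessing

Next, we preprocess the bank card images.

Cropping

Only part of the card, the region holding the card number, is relevant to us, so we crop it first: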

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name))
    img = img_resize(img, 200)
    h = img.shape[0]
    # keep the horizontal band between 1/2 and 2/3 of the card height
    img = img[h//2:h//3 * 2]
    plt.subplot(3, 2, i)
    plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()
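The slice h//2:h//3*2 keeps a horizontal band from one half to two thirds of the card height; since // and * have equal precedence and associate left to right, h//3*2 means (h//3)*2. With the height fixed at 200 by img_resize, the band is 32 rows tall, as this small NumPy check shows (the width 316 is arbitrary). The band is where the number sits on the sample cards, so other card layouts may need different fractions.

```python
import numpy as np

# A card already resized to height 200 (width chosen arbitrarily)
card = np.zeros((200, 316, 3), dtype=np.uint8)
h = card.shape[0]
top, bottom = h // 2, h // 3 * 2  # parsed as (h // 3) * 2
band = card[top:bottom]
print(top, bottom, band.shape)  # 100 132 (32, 316, 3)
```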

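Top-Hat Operation

The digits on different cards vary in brightness. The top-hat operation (the image minus its morphological opening) highlights regions that are brighter than their surroundings, i.e. the digits.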

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name), cv2.IMREAD_GRAYSCALE)
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    # top-hat keeps bright details that are smaller than the kernel
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 10))
    tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, rectKernel)
    plt.subplot(3, 2, i)
    plt.imshow(tophat, cmap=plt.cm.gray)
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

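Segmenting Digit Blocks

Looking at the results, some interference remains after the processing above, which makes it hard to separate the digits if we find contours directly.

Imagine drawing bounding rectangles from contours while that interference is present: regions that are not digits would get rectangles too. How do we tell them apart? Area or perimeter come to mind, but because the individual digits are separate, a single digit is not necessarily larger than the noise. So we merge the digits into 4 groups, each called a digit block; a whole block is much larger than the noise.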

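Sobel Operator

As discussed in an earlier article, when the Sobel operator uses the kernel Sx to compute Gx, it responds to intensity changes in the horizontal direction and therefore emphasizes the left and right edges of shapes. After the Sobel-x operation the digits become slightly wider than before. They no longer look like digits, but that does not matter: we only need the digit blocks, not the digits themselves.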

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name), cv2.IMREAD_GRAYSCALE)
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 5))
    tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, rectKernel)
    # x-direction gradient in float64 so negative values are not clipped
    sobelx = cv2.Sobel(tophat, cv2.CV_64F, 1, 0, ksize=3)
    # absolute value, converted to uint8
    sobelx = cv2.convertScaleAbs(sobelx)
    # min-max normalization to the full 0-255 range
    minval, maxval = np.min(sobelx), np.max(sobelx)
    sobelx = (255 * ((sobelx - minval) / (maxval - minval)))
    sobelx = sobelx.astype('uint8')
    plt.subplot(3, 2, i)
    plt.imshow(sobelx, cmap=plt.cm.gray)
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

Dilation and Erosion

So how do we make these widened "digits" stick together? Dilation followed by erosion, of course.

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name), cv2.IMREAD_GRAYSCALE)
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 3))
    tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, rectKernel)
    sobelx = cv2.Sobel(tophat, cv2.CV_64F, 1, 0, ksize=3)
    sobelx = cv2.convertScaleAbs(sobelx)
    minval, maxval = np.min(sobelx), np.max(sobelx)
    sobelx = (255 * ((sobelx - minval) / (maxval - minval)))
    sobelx = sobelx.astype('uint8')
    # the 3rd positional parameter of cv2.dilate/cv2.erode is dst,
    # so the iteration count must be passed by keyword
    dilate = cv2.dilate(sobelx, rectKernel, iterations=1)
    erosion = cv2.erode(dilate, rectKernel, iterations=1)
    plt.subplot(3, 2, i)
    plt.imshow(erosion, cmap=plt.cm.gray)
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

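The rough shape of the digit blocks is already visible, but it is not clean enough yet, so we binarize the result and then apply dilation and erosion once more: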

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name), cv2.IMREAD_GRAYSCALE)
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 3))
    sqKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))
    tophat = cv2.morphologyEx(img, cv2.MORPH_TOPHAT, rectKernel)
    sobelx = cv2.Sobel(tophat, cv2.CV_64F, 1, 0, ksize=3)
    sobelx = cv2.convertScaleAbs(sobelx)
    minval, maxval = np.min(sobelx), np.max(sobelx)
    sobelx = (255 * ((sobelx - minval) / (maxval - minval)))
    sobelx = sobelx.astype('uint8')
    # iterations must be passed by keyword (the 3rd positional parameter is dst)
    dilate = cv2.dilate(sobelx, rectKernel, iterations=1)
    erosion = cv2.erode(dilate, rectKernel, iterations=1)
    # second pass: Otsu binarization, then dilation and erosion with a 5x5 square kernel
    ret, thresh = cv2.threshold(erosion, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
    dilate = cv2.dilate(thresh, sqKernel, iterations=1)
    erosion = cv2.erode(dilate, sqKernel, iterations=1)
    plt.subplot(3, 2, i)
    plt.imshow(erosion, cmap=plt.cm.gray)
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

The result is slightly better than before, but the improvement is small, so we stop the dilation and erosion here.

Bounding Rectangles

Drawing the Contour Regions

i = 1
plt.figure(figsize=(50, 10))
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name))
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 3))
    sqKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))
    tophat = cv2.morphologyEx(gray, cv2.MORPH_TOPHAT, rectKernel)
    sobelx = cv2.Sobel(tophat, cv2.CV_64F, 1, 0, ksize=3)
    sobelx = cv2.convertScaleAbs(sobelx)
    minval, maxval = np.min(sobelx), np.max(sobelx)
    sobelx = (255 * ((sobelx - minval) / (maxval - minval)))
    sobelx = sobelx.astype('uint8')
    # iterations must be passed by keyword (the 3rd positional parameter is dst)
    dilate = cv2.dilate(sobelx, rectKernel, iterations=1)
    erosion = cv2.erode(dilate, rectKernel, iterations=1)
    ret, thresh = cv2.threshold(erosion, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
    dilate = cv2.dilate(thresh, sqKernel, iterations=1)
    erosion = cv2.erode(dilate, sqKernel, iterations=1)
    # outer contours of the digit blocks, drawn in red on a copy of the color crop
    contour, hierarchy = cv2.findContours(erosion, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    res = cv2.drawContours(img.copy(), contour, -1, (0, 0, 255), 3)
    plt.subplot(3, 2, i)
    plt.imshow(cv2.cvtColor(res, cv2.COLOR_BGR2RGB))
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

Drawing the Bounding Rectangles

To make later experiments easier, we save each digit block enclosed by a bounding rectangle to disk:

i = 1
k = 1
plt.figure(figsize=(50, 10))
# cv2.imwrite does not create missing directories
os.makedirs('./cuted_images', exist_ok=True)
for name in os.listdir('./images'):
    img = cv2.imread(os.path.join('./images', name))
    img = img_resize(img, 200)
    h = img.shape[0]
    img = img[h//2:h//3*2]
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    rectKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 3))
    sqKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))
    tophat = cv2.morphologyEx(gray, cv2.MORPH_TOPHAT, rectKernel)
    sobelx = cv2.Sobel(tophat, cv2.CV_64F, 1, 0, ksize=3)
    sobelx = cv2.convertScaleAbs(sobelx)
    minval, maxval = np.min(sobelx), np.max(sobelx)
    sobelx = (255 * ((sobelx - minval) / (maxval - minval)))
    sobelx = sobelx.astype('uint8')
    # iterations must be passed by keyword (the 3rd positional parameter is dst)
    dilate = cv2.dilate(sobelx, rectKernel, iterations=1)
    erosion = cv2.erode(dilate, rectKernel, iterations=1)
    ret, thresh = cv2.threshold(erosion, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
    dilate = cv2.dilate(thresh, sqKernel, iterations=1)
    erosion = cv2.erode(dilate, sqKernel, iterations=1)
    contour, hierarchy = cv2.findContours(erosion, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    res = img.copy()
    for cnt in contour:
        x, y, w, h = cv2.boundingRect(cnt)
        # keep only regions large enough to be digit blocks
        if w * h > 300:
            res = cv2.rectangle(res, (x, y), (x+w, y+h), (0, 0, 255), 1)
            # crop from the untouched img so the saved block has no red rectangle lines
            cv2.imwrite(os.path.join('./cuted_images', '{}.jpg'.format(k)), img[y:y+h, x:x+w])
            k += 1
    plt.subplot(3, 2, i)
    plt.imshow(cv2.cvtColor(res, cv2.COLOR_BGR2RGB))
    plt.xticks([])
    plt.yticks([])
    i += 1
plt.show()

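Template Matching

Segmenting the Digit Blocks

We now have the digit blocks, but each block contains four digits, so we need to split each block into single digits, just as we did with the template: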

img = cv2.imread('./cuted_images/1.jpg')
img = img_resize(img, 200)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
# Otsu binarization picks the threshold automatically
ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
res = img.copy()
for cnt in contours:
    x, y, w, h = cv2.boundingRect(cnt)
    res = cv2.rectangle(res, (x, y), (x+w, y+h), (255, 0, 0), 5)
plt.imshow(cv2.cvtColor(res, cv2.COLOR_BGR2RGB))
plt.show()

Loading the Templates

Here we store the templates in a dictionary keyed by digit:

digits = {}
for i in range(10):
    # resize every template to 100x150 (width x height) to match the digit crops later
    digits[i] = cv2.resize(cv2.imread('./cuted_template/{}.jpg'.format(i)), (100, 150))
for i in range(10):
    plt.subplot(3, 4, i + 1)
    plt.imshow(digits[i])
    plt.title(str(i))
plt.show()

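Matching the Digits

Before matching, we need to introduce two functions: cv2.matchTemplate and cv2.minMaxLoc. cv2.minMaxLoc returns the minimum and maximum values of an array together with their locations, as (minVal, maxVal, minLoc, maxLoc); applied to the output of cv2.matchTemplate, it gives the best matching score and where it occurs.

cv2.matchTemplate

This function performs template matching. The first argument is the image to search, the second is the template, and the third is the matching method. The template must have the same data type as the image and must not be larger than it; for a W x H image and a w x h template, the result is a (W-w+1) x (H-h+1) array holding one score per template position.

The third argument can take the following values: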

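| Value | Criterion | Meaning |
|---|---|---|
| cv2.TM_SQDIFF | the smaller minVal, the better | Sum of squared differences between template and image pixels; the smaller the value, the closer the match, and 0 is a perfect match |
| cv2.TM_SQDIFF_NORMED | the closer minVal is to 0, the better | Normalized cv2.TM_SQDIFF; a perfect match returns 0 |
| cv2.TM_CCORR | the larger maxVal, the better | Cross-correlation (dot product) of template and image; the higher, the better the match |
| cv2.TM_CCORR_NORMED | the closer maxVal is to 1, the better | Normalized cv2.TM_CCORR, between 0 and 1 for non-negative images |
| cv2.TM_CCOEFF | the larger maxVal, the better | Dot product after subtracting each image's own mean from its pixels; a large positive value means a good match, a large negative value means the two differ strongly (anti-correlated), and if the image has no distinct features (pixel values close to the mean) the result is close to 0 |
| cv2.TM_CCOEFF_NORMED | the closer maxVal is to 1, the better | Normalized cv2.TM_CCOEFF, between -1 and 1 |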

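Characteristics of each method

| Method | Characteristics |
|---|---|
| TM_CCORR | Good at distinguishing regions that differ in color |
| TM_SQDIFF | Simple operations and high matching accuracy, but a relatively large amount of computation; very sensitive to noise |
| TM_CCOEFF | Low computational cost and easy to implement, well suited to real-time tracking, but often fails on small or fast-moving targets |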

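img = cv2.imread('./cuted_images/1.jpg')
img = img_resize(img, 200)
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
contours, _ = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
# read the digits from left to right
contours = sorted(contours, key=lambda c: cv2.boundingRect(c)[0])
number = ""
for cnt in contours:
    x, y, w, h = cv2.boundingRect(cnt)
    # crop the digit from the unmodified block and bring it to the template size
    cur_img = img[y:y+h, x:x+w].copy()
    cur_img = cv2.resize(cur_img, (100, 150))
    scores = []
    for i in range(10):
        # image and template have the same size, so the result is a single 1x1 score
        result = cv2.matchTemplate(cur_img, digits[i], cv2.TM_CCOEFF)
        (_, score, _, _) = cv2.minMaxLoc(result)
        scores.append(score)
    # argmin: assuming the templates are dark digits on a light background (THRESH_BINARY)
    # while the card digits are light on dark, the best match has the most negative score
    number += str(np.argmin(scores))
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
plt.title(number)
plt.show()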

Code Download

GitHub: https://github.com/AiXing-w/template-match-banck-card