TensorFlow.js Basics: Underfitting and Overfitting (Part 5)

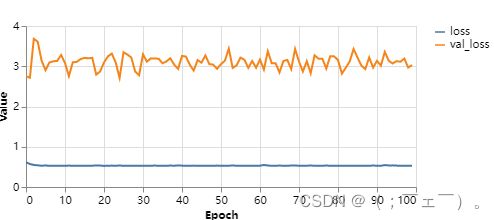

Underfitting: a model that is too simple is used on data that is too complex for it.
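The snippets in this post call `getData(200)` without showing it. As a rough stand-in, the sketch below assumes XOR-style data, where points whose coordinates share a sign get label 1; the name matches the post, but the body, the value range, and the optional `rng` parameter are assumptions, and the real helper may differ. A single sigmoid neuron can only draw one straight decision boundary, so data that no single line separates is a typical way to provoke underfitting.

```javascript
// Hypothetical stand-in for the getData helper used in this post.
// Produces XOR-style points: label 1 when x and y have the same sign, else 0.
function getData(numSamples, rng = Math.random) {
  const points = [];
  for (let i = 0; i < numSamples; i++) {
    // Coordinates drawn uniformly from [-5, 5) (an assumed range)
    const x = rng() * 10 - 5;
    const y = rng() * 10 - 5;
    points.push({ x, y, label: x * y >= 0 ? 1 : 0 });
  }
  return points;
}

const sample = getData(200);
console.log(sample.length, sample.slice(0, 3));
```

Each point has the `{ x, y, label }` shape that the plotting and tensor-conversion code in this post expects.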

$(async () => {

const data = getData(200);

tfvis.render.scatterplot(

{ name: '训练数据' },

{

values: [

data.filter(p => p.label === 1),

data.filter(p => p.label === 0)

]

}

);

//欠拟合,模型简单,处理的数据过于复杂

//设置连续模型

const model = tf.sequential();

//添加层,一个神经元,激活函数,sigmoid,设置张量形状

model.add(tf.layers.dense(

{ units: 1, activation: 'sigmoid', inputShape: [2] }

));

//设置损失函数,对数损失,设置优化器,adam能自动调整学习速率

model.compile(

{ loss: tf.losses.logLoss, optimizer: tf.train.adam(0.1) }

);

//数据格式转换

const inputs = tf.tensor(data.map(p => [p.x, p.y]));

const labels = tf.tensor(data.map(p => p.label));

//训练模型

await model.fit(inputs, labels, {

validationSplit:0.2,

epochs:100,

callbacks:tfvis.show.fitCallbacks(

{ name: '训练效果' },

['loss','val_loss'],

{ callbacks:['onEpochEnd'] }

)

});

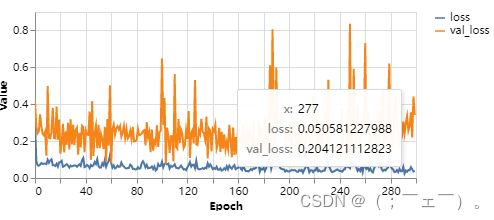

});过拟合:模型过于复杂,去兼容少部分数据,导致预测结果不准确
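The two cases tend to look different in the `loss` / `val_loss` chart: with underfitting both curves stay high, while with overfitting the training loss keeps dropping and the validation loss stalls or climbs. The sketch below is one rough way to encode that reading; `diagnoseFit` and its thresholds are hypothetical and would need tuning for real data.

```javascript
// Rough heuristic for reading loss curves. Thresholds are illustrative assumptions.
function diagnoseFit(loss, valLoss, { highLoss = 0.5, gap = 0.1 } = {}) {
  const finalLoss = loss[loss.length - 1];
  const finalVal = valLoss[valLoss.length - 1];
  const bestVal = Math.min(...valLoss);

  // Both losses stay high: the model cannot even fit the training set
  if (finalLoss > highLoss && finalVal > highLoss) return 'underfitting';

  // Validation loss sits well above training loss and has risen from its best value
  if (finalVal - finalLoss > gap && finalVal > bestVal) return 'overfitting';

  return 'ok';
}

console.log(diagnoseFit([0.70, 0.69, 0.69], [0.70, 0.70, 0.69])); // underfitting
console.log(diagnoseFit([0.6, 0.3, 0.1], [0.6, 0.4, 0.5]));       // overfitting
console.log(diagnoseFit([0.6, 0.3, 0.2], [0.6, 0.35, 0.25]));     // ok
```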

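```javascript
// Run once the page is ready (assuming `$` is jQuery)
$(async () => {
  const data = getData(200);

  // Plot the training data, one series per label
  tfvis.render.scatterplot(
    { name: 'Training data' },
    {
      values: [
        data.filter(p => p.label === 1),
        data.filter(p => p.label === 0)
      ]
    }
  );

  // Overfitting: the model is too complex
  const model = tf.sequential();

  // Hidden layer with 15 ReLU neurons
  model.add(tf.layers.dense({
    units: 15,
    inputShape: [2],
    activation: 'relu'
  }));

  // Output layer: one sigmoid neuron
  model.add(tf.layers.dense({
    units: 1,
    activation: 'sigmoid'
  }));

  model.compile({
    loss: tf.losses.logLoss,
    optimizer: tf.train.adam(0.1)
  });

  // Convert the data to tensors
  const inputs = tf.tensor(data.map(p => [p.x, p.y]));
  const labels = tf.tensor(data.map(p => p.label));

  // Train for 300 epochs, holding out 20% of the data for validation
  await model.fit(inputs, labels, {
    validationSplit: 0.2,
    epochs: 300,
    callbacks: tfvis.show.fitCallbacks(
      { name: 'Training performance' },
      ['loss', 'val_loss'],
      { callbacks: ['onEpochEnd'] }
    )
  });
});
```

Ways to address underfitting: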

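- Increase the model's complexity

Ways to address overfitting:

- Reduce the model's complexity
- Early stopping: train for fewer epochs
- Weight decay: available through the API
- Dropout: available through the API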
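Early stopping is listed above but not demonstrated in this post. TensorFlow.js provides `tf.callbacks.earlyStopping` (with options such as `monitor` and `patience`) for this purpose. The plain-JavaScript sketch below only illustrates the kind of patience rule such a callback applies; `earlyStopEpoch` and its parameters are hypothetical names, not library code.

```javascript
// Returns the index of the epoch at which training would stop, given the
// validation loss recorded after each epoch. Stops once the loss has failed
// to improve by more than minDelta for `patience` consecutive epochs.
function earlyStopEpoch(valLosses, patience = 3, minDelta = 0) {
  let best = Infinity;
  let wait = 0;
  for (let epoch = 0; epoch < valLosses.length; epoch++) {
    if (valLosses[epoch] < best - minDelta) {
      // Improvement: remember it and reset the counter
      best = valLosses[epoch];
      wait = 0;
    } else {
      wait++;
      if (wait >= patience) return epoch;
    }
  }
  // Never triggered: training runs through the last epoch
  return valLosses.length - 1;
}

const valLosses = [0.9, 0.7, 0.6, 0.61, 0.63, 0.66, 0.7];
console.log(earlyStopEpoch(valLosses, 2)); // 4
```

A larger `patience` tolerates noisier validation curves at the cost of training a little longer after the best epoch.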

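The example below adds a dropout layer; the L2 weight-decay line is left commented out.

```javascript
// Run once the page is ready (assuming `$` is jQuery)
$(async () => {
  const data = getData(200);

  // Plot the training data, one series per label
  tfvis.render.scatterplot(
    { name: 'Training dataset' },
    {
      values: [
        data.filter(p => p.label === 1),
        data.filter(p => p.label === 0)
      ]
    }
  );

  const model = tf.sequential();

  model.add(tf.layers.dense({
    units: 15,
    inputShape: [2],
    activation: 'tanh',
    // L2 regularization (weight decay) guards against overfitting by
    // penalizing large weights, which damps the surplus neurons
    // kernelRegularizer: tf.regularizers.l2({ l2: 12 })
  }));

  // Dropout layer: randomly drops 40% of the hidden layer's outputs during training
  model.add(tf.layers.dropout({ rate: 0.4 }));

  // Output layer: one sigmoid neuron
  model.add(tf.layers.dense({
    units: 1,
    activation: 'sigmoid'
  }));

  model.compile({
    loss: tf.losses.logLoss,
    optimizer: tf.train.adam(0.1)
  });

  // Convert the data to tensors
  const inputs = tf.tensor(data.map(p => [p.x, p.y]));
  const labels = tf.tensor(data.map(p => p.label));

  // Train the model, holding out 20% of the data for validation
  await model.fit(inputs, labels, {
    validationSplit: 0.2,
    epochs: 100,
    callbacks: tfvis.show.fitCallbacks(
      { name: 'Training progress' },
      ['loss', 'val_loss'],
      { callbacks: ['onEpochEnd'] }
    )
  });
});
```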
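To make the two API options less opaque, here is a small plain-JavaScript sketch of what they compute in principle: inverted dropout on an array of activations, and an L2 penalty on an array of weights. The function names and the injectable `rng` are assumptions made for illustration; this is not the library's implementation.

```javascript
// Inverted dropout: zero each activation with probability `rate`, and scale the
// survivors by 1 / (1 - rate) so the expected sum stays the same.
function dropout(activations, rate, rng = Math.random) {
  const keepScale = 1 / (1 - rate);
  return activations.map(a => (rng() < rate ? 0 : a * keepScale));
}

// L2 penalty added to the loss: lambda times the sum of squared weights.
function l2Penalty(weights, lambda) {
  return lambda * weights.reduce((sum, w) => sum + w * w, 0);
}

// Fixed "random" sequence so the example is reproducible
const fixed = [0.1, 0.9, 0.3, 0.8];
let i = 0;
const rng = () => fixed[i++ % fixed.length];

console.log(dropout([1, 2, 3, 4], 0.5, rng)); // [ 0, 4, 0, 8 ]
console.log(l2Penalty([1, -2, 3], 0.5));      // 7
```

Dropout applies only while training; at prediction time all neurons are kept. The L2 term grows with the squared weights, so a large coefficient pushes the optimizer toward small weights.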