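Image Generation Paper Reading Notes: CycleGAN

Preface

CycleGAN addresses the image-to-image translation problem and is mainly used for style transfer. It can learn image-to-image translation without paired training data (unpaired translation).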

Paper Contents

Basic Information

Title: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Date: November 5, 2018

Authors: Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros; Berkeley AI Research (BAIR) laboratory, UC Berkeley

Paper: https://arxiv.org/pdf/1703.10593.pdf

Code: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

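Method Overview

The model has two generators, G and F, and two discriminators, D_X and D_Y. G learns the mapping X→Y and F learns the mapping Y→X. D_X distinguishes real images in X from translated images F(Y), and D_Y distinguishes real images in Y from translated images G(X).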

Loss Function Design

(1) Adversarial losses, for matching the distribution of generated images to the data distribution in the target domain.

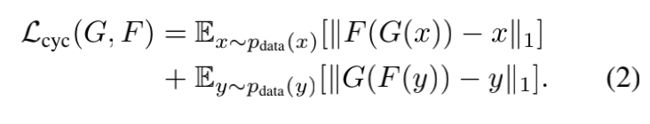
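In text form, the adversarial loss for the mapping G: X→Y and its discriminator D_Y (Equation 1 in the paper) reads as below. G tries to minimize it while D_Y tries to maximize it; F and D_X use the mirrored term $\mathcal{L}_{GAN}(F, D_X, Y, X)$.

```latex
\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{data}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{data}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right]
```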

(2) Cycle consistency losses, to prevent the learned mappings G and F from contradicting each other.
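The cycle consistency loss penalizes the L1 distance between an image and its reconstruction after a round trip through both generators: $\mathbb{E}_x[\lVert F(G(x)) - x \rVert_1] + \mathbb{E}_y[\lVert G(F(y)) - y \rVert_1]$. The sketch below computes this on toy "images" (flat lists of floats) with hypothetical toy generators `brighten` and `darken`; it only illustrates the formula, whereas a real implementation would operate on tensors and backpropagate through G and F.

```python
from typing import Callable, List, Sequence

Image = List[float]                 # toy stand-in for an image: a flat list of pixel values
Mapping = Callable[[Image], Image]  # a generator: image in, image out


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 norm ||a - b||_1 between two flattened images of equal size."""
    return sum(abs(p - q) for p, q in zip(a, b))


def cycle_consistency_loss(G: Mapping, F: Mapping,
                           xs: List[Image], ys: List[Image]) -> float:
    """Empirical L_cyc(G, F): the forward cycle x -> G(x) -> F(G(x)) plus the
    backward cycle y -> F(y) -> G(F(y)), each averaged over its samples."""
    forward = sum(l1_distance(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1_distance(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


if __name__ == "__main__":
    def brighten(img: Image) -> Image:  # hypothetical toy G: X -> Y
        return [v + 1.0 for v in img]

    def darken(img: Image) -> Image:    # hypothetical toy F: Y -> X, not an exact inverse of G
        return [v - 0.5 for v in img]

    print(cycle_consistency_loss(brighten, darken, [[0.0, 0.0]], [[1.0, 1.0]]))  # prints 2.0
```

If F were the exact inverse of G (subtracting 1.0 instead of 0.5), both cycles would reconstruct their inputs and the loss would be 0.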

Full objective:

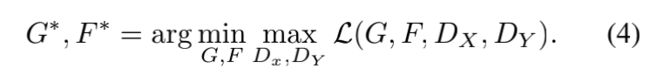
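Written out, the full objective (Equation 3 in the paper) sums the two adversarial terms and the cycle consistency term weighted by λ, where the cycle term uses the L1 norm:

```latex
\begin{aligned}
\mathcal{L}_{cyc}(G, F) &= \mathbb{E}_{x \sim p_{data}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{data}(y)}\left[\lVert G(F(y)) - y \rVert_1\right] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \, \mathcal{L}_{cyc}(G, F)
\end{aligned}
```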

The generators are obtained by solving the two-player minimax game:

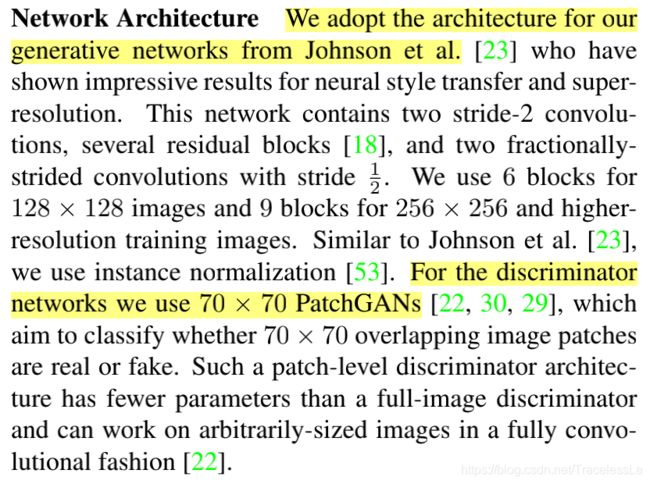
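In text form (Equation 4 in the paper), the generators minimize the full objective while the discriminators maximize it:

```latex
G^{*}, F^{*} = \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)
```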

Network Architecture

Training Details

(1) For the adversarial loss $\mathcal{L}_{GAN}$ (Equation 1), we replace the negative log-likelihood objective with a least-squares loss. This loss is more stable during training and generates higher-quality results.
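With the least-squares loss, the discriminator D is trained to minimize $\mathbb{E}_y[(D(y) - 1)^2] + \mathbb{E}_x[D(G(x))^2]$ and the generator G to minimize $\mathbb{E}_x[(D(G(x)) - 1)^2]$. A minimal sketch, assuming the discriminator's raw scores for a batch are already available as plain lists of floats (the function names are hypothetical):

```python
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def lsgan_d_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Discriminator objective: E_y[(D(y) - 1)^2] + E_x[D(G(x))^2].
    Scores of real samples are pushed toward 1, scores of generated samples toward 0."""
    return _mean([(s - 1.0) ** 2 for s in d_real]) + _mean([s ** 2 for s in d_fake])


def lsgan_g_loss(d_fake: Sequence[float]) -> float:
    """Generator objective: E_x[(D(G(x)) - 1)^2], i.e. make D score fakes as real (1)."""
    return _mean([(s - 1.0) ** 2 for s in d_fake])
```

The scores are raw discriminator outputs with no sigmoid, because the least-squares targets are the values 1 and 0 themselves.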

(2) To reduce model oscillation, update the discriminators using a history of generated images rather than the ones produced by the latest generators. We keep an image buffer that stores the 50 previously created images.
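The history buffer can be sketched as below. Only the capacity of 50 comes from the paper; the replacement policy (while filling, store and return the new image; once full, with probability 0.5 return a random stored image and put the new one in its slot, otherwise return the new image) is an assumption modeled on the ImagePool utility in the official repository, and the class name `ImageHistoryBuffer` is hypothetical. Images are generic objects here; in practice they would be tensors.

```python
import random
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class ImageHistoryBuffer(Generic[T]):
    """Keeps up to `capacity` previously generated images for discriminator updates."""

    def __init__(self, capacity: int = 50, seed: Optional[int] = None) -> None:
        self.capacity = capacity
        self.images: List[T] = []
        self.rng = random.Random(seed)

    def query(self, image: T) -> T:
        """Return the generated image to show the discriminator at this step."""
        if self.capacity == 0:
            return image  # buffer disabled: always use the latest fake
        if len(self.images) < self.capacity:
            self.images.append(image)  # still filling: store it and use it directly
            return image
        if self.rng.random() < 0.5:
            # Swap: hand back an older fake and keep the new one in its place.
            idx = self.rng.randrange(self.capacity)
            old = self.images[idx]
            self.images[idx] = image
            return old
        return image  # otherwise use the latest fake unchanged
```

In a training loop, each fake from G would pass through `query` before being scored by D_Y (and likewise for F and D_X), so the discriminator also sees fakes from earlier generator versions.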

(3) Set λ = 10 in Equation 3. Use the Adam solver with a batch size of 1. All networks were trained from scratch with a learning rate of 0.0002. We keep the same learning rate for the first 100 epochs and linearly decay the rate to zero over the next 100 epochs.
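The learning-rate schedule described above can be written as a small function of the (0-indexed) epoch. This is one straightforward way to express it; the function name and keyword parameters are hypothetical, and the official code may compute the decay with slightly different off-by-one conventions.

```python
def cyclegan_lr(epoch: int, base_lr: float = 0.0002,
                n_constant: int = 100, n_decay: int = 100) -> float:
    """Learning rate for a 0-indexed epoch: constant for `n_constant` epochs,
    then decayed linearly to zero over the next `n_decay` epochs."""
    if epoch < n_constant:
        return base_lr
    progress = min(epoch - n_constant, n_decay) / n_decay  # 0.0 -> 1.0 over the decay phase
    return base_lr * (1.0 - progress)
```

In PyTorch, `lambda e: cyclegan_lr(e) / 0.0002` could serve as the `lr_lambda` of `torch.optim.lr_scheduler.LambdaLR`, stepped once per epoch, since `LambdaLR` multiplies the initial learning rate by that factor.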

Evaluation Metrics

(1) AMT perceptual studies: on the map↔aerial photo task, we run "real vs fake" perceptual studies on Amazon Mechanical Turk (AMT) to assess the realism of our outputs.

(2) FCN score: used to evaluate the Cityscapes labels→photo task. The FCN metric evaluates how interpretable the generated photos are according to an off-the-shelf semantic segmentation algorithm (the fully-convolutional network, FCN).

(3) Semantic segmentation metrics: to evaluate performance on photo→labels, we use the standard metrics from the Cityscapes benchmark: per-pixel accuracy, per-class accuracy, and mean class Intersection-over-Union (Class IOU).
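All three segmentation metrics can be derived from a confusion matrix of ground-truth versus predicted class per pixel. A minimal sketch under stated assumptions: labels are flattened lists of class indices, and classes that never appear in the ground truth are skipped when averaging (the official Cityscapes tooling may handle ignored labels and absent classes differently). Function names are hypothetical.

```python
from typing import Dict, List, Sequence


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], n_classes: int) -> List[List[int]]:
    """cm[i][j] counts pixels whose ground-truth class is i and predicted class is j."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for g, p in zip(gt, pred):
        cm[g][p] += 1
    return cm


def segmentation_scores(cm: List[List[int]]) -> Dict[str, float]:
    """Per-pixel accuracy, mean per-class accuracy and mean class IoU."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    class_acc: List[float] = []
    class_iou: List[float] = []
    for i in range(n):
        true_i = sum(cm[i])                       # pixels whose true class is i
        pred_i = sum(cm[r][i] for r in range(n))  # pixels predicted as class i
        if true_i == 0:
            continue  # assumption: classes absent from the ground truth are skipped
        class_acc.append(cm[i][i] / true_i)
        class_iou.append(cm[i][i] / (true_i + pred_i - cm[i][i]))  # TP / (TP + FP + FN)
    return {
        "per_pixel_acc": correct / total,
        "per_class_acc": sum(class_acc) / len(class_acc),
        "class_iou": sum(class_iou) / len(class_iou),
    }
```

For example, ground truth `[0, 0, 1, 1]` and prediction `[0, 1, 1, 1]` give a per-pixel accuracy of 0.75, a mean per-class accuracy of 0.75 (0.5 and 1.0), and a mean class IoU of about 0.583 (0.5 and 2/3).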

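(4) Baselines used for comparison: CoGAN, SimGAN, Feature loss + GAN, BiGAN/ALI, pix2pix.

Highlights

(1) Training with paired data is relatively simple, but paired data are hard to obtain. This paper instead builds its training sets from unpaired data: two domains, where images in one domain have no corresponding images in the other.

(2) A cycle consistency loss is added and G and F are trained simultaneously, encouraging F(G(x)) ≈ x and G(F(y)) ≈ y.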

Applications

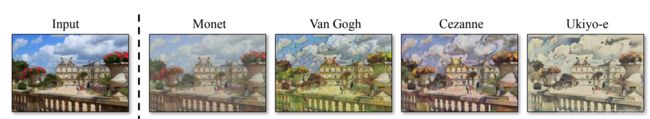

(1) Collection style transfer

(2) Object transfiguration

(3) Season transfer

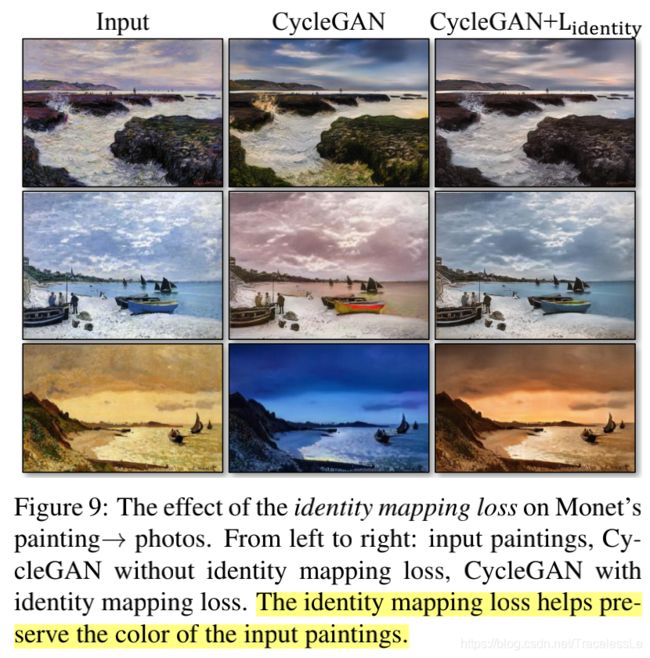

(4) Photo generation from paintings

Note that this application introduces an additional identity loss, which helps preserve the colors of the input.
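The identity loss regularizes each generator to act as an identity mapping when it is given a real image from its own target domain: $\mathbb{E}_y[\lVert G(y) - y \rVert_1] + \mathbb{E}_x[\lVert F(x) - x \rVert_1]$. A minimal sketch on toy flattened images; the function name is hypothetical, and the weight with which this term is added to the full objective is left out here.

```python
from typing import Callable, List, Sequence

Image = List[float]                 # toy stand-in for an image: a flat list of pixel values
Mapping = Callable[[Image], Image]  # a generator: image in, image out


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 norm ||a - b||_1 between two flattened images of equal size."""
    return sum(abs(p - q) for p, q in zip(a, b))


def identity_loss(G: Mapping, F: Mapping, xs: List[Image], ys: List[Image]) -> float:
    """L_identity(G, F) = E_y[||G(y) - y||_1] + E_x[||F(x) - x||_1].
    G: X -> Y is fed real Y images, F: Y -> X is fed real X images;
    both should return their input (nearly) unchanged."""
    g_term = sum(l1_distance(G(y), y) for y in ys) / len(ys)
    f_term = sum(l1_distance(F(x), x) for x in xs) / len(xs)
    return g_term + f_term
```

Because a generator that shifts colors is penalized even when the input already looks like the target domain, this term discourages the tint changes between input and output that the note above refers to.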

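Limitations

The generator architecture used in this paper works well for appearance changes, but it does not handle more complex changes, such as geometric transformations, well. The failure cases shown at the end of the paper illustrate this: translating between cats and dogs does not work well. In addition, if the test data distribution differs from the training data distribution, the model can fail in ways such as turning a person into a zebra as well.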
