Deep Learning Week P2: Color Image Recognition

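- This post belongs to [365天深度学习训练营] (the 365-Day Deep Learning Training Camp)

- Reference article: [深度学习100例-卷积神经网络(CNN)彩色图片分类 | 第2天] (100 Deep Learning Examples: CNN Color Image Classification, Day 2)

- Author: [K同学啊]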

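Table of contents

- Deep Learning Week P2: Color Image Recognition
- Preface
- I. Import the required packages
- II. Working with the dataset
  - 1. Load the dataset
  - 2. Inspect the dataset
- III. Building the CNN
  - 1. torch.nn.Conv2d()
  - 2. torch.nn.MaxPool2d()
  - 3. torch.nn.Linear()
  - 4. Computing convolution and pooling output sizes
  - 5. The CNN
    - a. Shape changes through the network
    - b. Building the CNN with nn
    - c. Building the CNN with nn.Sequential
    - d. A helper for printing progress logs
- IV. Writing the training and test functions
  - 1. Hyperparameters and device selection
  - 2. train()
  - 3. test()
- V. Training loop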

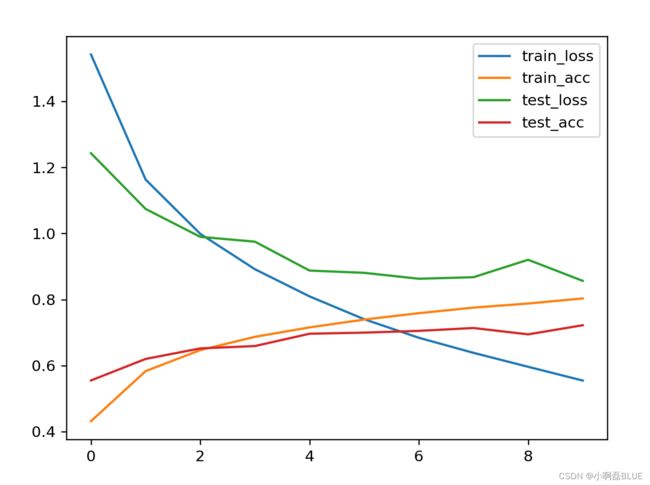

- VI. Visualizing the results
- VII. Complete code
- VIII. Summary

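Preface

Deep Learning Week P2: color image recognition

Requirements:

1. Learn how to write a complete deep learning program

2. Derive the output sizes of convolution and pooling layers by hand

The main goal this week is to learn how to build a simple CNN.

Environment: python3.8, pycharm, torch

Takeaways from writing this color image recognition program:

1. Know how to load and work with a built-in dataset, understand what happens when iterating over it to view images, and know how torch stores images.

2. Understand the basic role and principle of convolution, pooling, and fully connected layers, and be able to derive their output sizes.

3. Be able to build a simple CNN and understand its forward pass.

4. Understand how training and testing work.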

I. Import the required packages

import torch
from torch import nn
import numpy as np
import pandas as pd
import datetime
import matplotlib.pyplot as plt
import torch.nn.functional as F
from torch.utils.data import DataLoader
import torchvision
from torchvision.transforms import ToTensor

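II. Working with the dataset

1. Load the dataset

This post uses the CIFAR-10 dataset built into torchvision. It can be loaded directly through torchvision.datasets.CIFAR10 and then wrapped in a DataLoader.

The code is as follows (example):

train_dataset = torchvision.datasets.CIFAR10(root='./cifar10',
                                             transform=ToTensor(),
                                             train=True,
                                             download=True)
test_dataset = torchvision.datasets.CIFAR10(root='./cifar10',
                                            transform=ToTensor(),
                                            train=False,
                                            download=True)
train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)
test_dataloader = DataLoader(test_dataset, batch_size=32, shuffle=False)

Notes: root is the directory where the data is stored. transform=ToTensor() converts each image to a tensor (and scales pixel values to [0, 1]), which is required before the image can be fed to the network. train selects the training split (True) or the test split (False), and download controls whether the data is downloaded when it is missing. Each batch holds 32 images. The training set is shuffled because shuffle=True is passed (DataLoader does not shuffle by default); the test set is normally left in order.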
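To see how DataLoader batches and shuffles without downloading anything, here is a minimal sketch. It assumes a synthetic TensorDataset of random tensors as a stand-in for CIFAR-10; the names fake_images, fake_labels, and fake_dataset are hypothetical and not part of the original program.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for CIFAR-10: 100 random "images" of shape [3, 32, 32]
# with integer labels in [0, 10). Nothing is downloaded in this sketch.
fake_images = torch.rand(100, 3, 32, 32)
fake_labels = torch.randint(0, 10, (100,))
fake_dataset = TensorDataset(fake_images, fake_labels)

loader = DataLoader(fake_dataset, batch_size=32, shuffle=True)

batch_shapes = [tuple(x.shape) for x, _ in loader]
print(len(loader))       # 4 batches, since ceil(100 / 32) = 4
print(batch_shapes[0])   # (32, 3, 32, 32)
print(batch_shapes[-1])  # (4, 3, 32, 32): the last batch holds the remainder
```

The last batch is smaller because drop_last defaults to False, which is also why the later accuracy computation divides by the dataset size rather than by batch_size times the number of batches.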

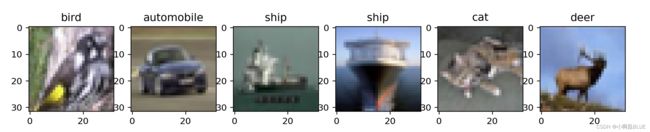

2. Inspect the dataset

The code is as follows (example):

img_batch, label_batch = next(iter(train_dataloader))
print(img_batch.shape)  # torch.Size([32, 3, 32, 32])
classes = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']  # class names of the dataset
plt.figure(figsize=(12, 8))  # set the figure size
for i, (img, label) in enumerate(zip(img_batch[:6], label_batch[:6])):
    img = img.permute(1, 2, 0).numpy()  # CHW -> HWC, then convert to a NumPy array
    plt.subplot(1, 6, i + 1)  # draw the images in 1 row and 6 columns
    plt.title(classes[label.item()])  # title each image with its class name
    plt.imshow(img)  # RGB image, so no colormap is needed
plt.show()

Notes: torch stores a batch of images as [batch_size, channels, height, width]; the shape printed here is torch.Size([32, 3, 32, 32]).
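The permute(1, 2, 0) call in the plotting loop is what turns torch's channels-first layout into the channels-last layout that matplotlib expects. A small sketch on a random tensor (an assumed stand-in for a real image) shows that only the axis order changes:

```python
import torch

# A random tensor standing in for one CIFAR-10 image in torch's CHW layout.
img_chw = torch.rand(3, 32, 32)

# permute reorders the axes: (C, H, W) -> (H, W, C)
img_hwc = img_chw.permute(1, 2, 0)

print(tuple(img_chw.shape))  # (3, 32, 32)
print(tuple(img_hwc.shape))  # (32, 32, 3)

# The pixel values are unchanged; only the indexing order differs.
same_pixel = bool(img_chw[2, 5, 7] == img_hwc[5, 7, 2])
print(same_pixel)  # True
```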

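III. Building the CNN

1. torch.nn.Conv2d()

torch.nn.Conv2d(in_channels, out_channels, kernel_size, stride=1, padding=0, dilation=1, groups=1,
                bias=True, padding_mode='zeros', device=None, dtype=None)

in_channels: number of input channels

out_channels: number of output channels

kernel_size: size of the convolution kernel

stride=1: stride of the convolution kernel, 1 by default

padding=0: padding added to each side of the input, 0 by default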
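As a quick check of how kernel_size and padding affect the output, the sketch below runs one dummy image through two convolutions. The variable names are illustrative only.

```python
import torch
from torch import nn

x = torch.rand(1, 3, 32, 32)  # one dummy RGB image, laid out as [N, C, H, W]

# Default stride=1 and padding=0: a 3x3 kernel trims one pixel from each border.
conv_no_pad = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3)
# padding=1 compensates for the 3x3 kernel, so height and width are preserved.
conv_same = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, padding=1)

shape_no_pad = tuple(conv_no_pad(x).shape)
shape_same = tuple(conv_same(x).shape)
print(shape_no_pad)  # (1, 64, 30, 30)
print(shape_same)    # (1, 64, 32, 32)
```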

2. torch.nn.MaxPool2d()

nn.MaxPool2d(kernel_size, stride=None, padding=0, dilation=1, return_indices=False, ceil_mode=False)

kernel_size: size of the pooling window

stride: stride of the pooling window; defaults to kernel_size when omitted
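Pooling an odd-sized feature map is where rounding matters. The sketch below pools a 13x13 map, the size that appears after the second convolution in this post's network, with and without ceil_mode:

```python
import torch
from torch import nn

x = torch.rand(1, 64, 13, 13)  # dummy feature map with an odd spatial size

pool_floor = nn.MaxPool2d(kernel_size=2)                  # stride defaults to kernel_size
pool_ceil = nn.MaxPool2d(kernel_size=2, ceil_mode=True)   # keeps the partial last window

shape_floor = tuple(pool_floor(x).shape)
shape_ceil = tuple(pool_ceil(x).shape)
print(shape_floor)  # (1, 64, 6, 6): the leftover row and column are dropped
print(shape_ceil)   # (1, 64, 7, 7)
```

The network in this post keeps the default ceil_mode=False, which is why 13x13 becomes 6x6.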

3. torch.nn.Linear()

nn.Linear(in_features, out_features, bias=True, device=None, dtype=None)

in_features: size of each input sample

out_features: size of each output sample
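A fully connected layer works on flat vectors, so feature maps must be flattened first. The sketch below uses a dummy batch with the same 128x2x2 shape that the last pooling layer of this post's network produces:

```python
import torch
from torch import nn

features = torch.rand(32, 128, 2, 2)         # dummy batch of pooled feature maps
flat = features.view(features.size(0), -1)   # flatten each sample to 128*2*2 = 512 values

fc = nn.Linear(in_features=512, out_features=256)
out = fc(flat)

print(tuple(flat.shape))       # (32, 512)
print(tuple(out.shape))        # (32, 256)
print(tuple(fc.weight.shape))  # (256, 512): one row of weights per output feature
```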

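4. Computing convolution and pooling output sizes

Input image: W * W

Kernel (or pooling window) size: F

Stride: S

Padding: P

Output image: N * N

N = (W - F + 2P) / S + 1 (rounded down when the division is not exact)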
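Written with an explicit floor, the formula covers the case where the stride does not divide evenly, which is PyTorch's default behavior. Two worked instances, using layer settings from the network in this post:

```latex
N = \left\lfloor \frac{W - F + 2P}{S} \right\rfloor + 1

% Convolution with W = 32, F = 3, P = 0, S = 1:
N = \left\lfloor \frac{32 - 3 + 0}{1} \right\rfloor + 1 = 29 + 1 = 30

% Pooling with W = 13, F = 2, P = 0, S = 2:
N = \left\lfloor \frac{13 - 2 + 0}{2} \right\rfloor + 1 = 5 + 1 = 6
```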

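5. The CNN

a. Shape changes through the network

3,32,32 (input) -> 64,30,30 (conv 1: F=3, S=1, P=0) -> 64,15,15 (pool 1: F=2, S=2) -> 64,13,13 (conv 2: F=3, S=1, P=0) -> 64,6,6 (pool 2: F=2, S=2) -> 128,4,4 (conv 3: F=3, S=1, P=0) -> 128,2,2 (pool 3: F=2, S=2) -> 512 (flatten) -> 256 (fully connected 1) -> 10 (fully connected 2)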
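The chain above can be reproduced in plain Python by applying the output-size formula layer by layer. This is a sketch; out_size and the layers table are hypothetical helpers written for this illustration, not part of the original program.

```python
def out_size(w, f, s=1, p=0):
    """Spatial output size of a conv/pool layer: floor((w - f + 2p) / s) + 1."""
    return (w - f + 2 * p) // s + 1

# (name, out_channels or None to keep the channel count, kernel size, stride)
layers = [
    ("conv1", 64, 3, 1),
    ("pool1", None, 2, 2),
    ("conv2", 64, 3, 1),
    ("pool2", None, 2, 2),
    ("conv3", 128, 3, 1),
    ("pool3", None, 2, 2),
]

channels, size = 3, 32
trace = [(channels, size, size)]
for name, out_channels, kernel, stride in layers:
    if out_channels is not None:
        channels = out_channels
    size = out_size(size, kernel, stride)
    trace.append((channels, size, size))
    print(f"{name}: {channels} x {size} x {size}")

flattened = channels * size * size
print("flattened features:", flattened)  # 512
```

The final value, 512, is the in_features of the first fully connected layer.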

b. Building the CNN with nn

class Cnn_net_1(nn.Module):
    def __init__(self):
        super(Cnn_net_1, self).__init__()
        self.c1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1)
        self.s1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.c2 = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1)
        self.s2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.c3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1)
        self.s3 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.l1 = nn.Linear(512, 256)
        self.l2 = nn.Linear(256, 10)

    def forward(self, x):
        x = F.relu(self.c1(x))
        x = self.s1(x)
        x = F.relu(self.c2(x))
        x = self.s2(x)
        x = F.relu(self.c3(x))
        x = self.s3(x)
        x = x.view(-1, 128*2*2)  # flatten [N, 128, 2, 2] to [N, 512]
        x = F.relu(self.l1(x))
        x = self.l2(x)  # raw logits; CrossEntropyLoss applies softmax internally
        return x

c. Building the CNN with nn.Sequential

class Cnn_net_2(nn.Module):
    def __init__(self):
        super(Cnn_net_2, self).__init__()
        # feature extractor: three conv + ReLU + max-pool stages
        self.c1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        # classifier: two fully connected layers
        self.l1 = nn.Sequential(
            nn.Linear(512, 256),
            nn.ReLU(),
            nn.Linear(256, 10)
        )

    def forward(self, x):
        x = self.c1(x)
        x = x.view(-1, 512)  # flatten [N, 128, 2, 2] to [N, 512]
        x = self.l1(x)
        return x
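Before training, it is worth pushing a dummy batch through the network to confirm that the layer sizes line up. The sketch below uses a compact stand-in with the same layer sizes; it substitutes nn.Flatten for the manual x.view(-1, 512), which is a choice made for this illustration rather than the structure used above.

```python
import torch
from torch import nn

# Compact stand-in with the same layer sizes as the network in this post.
# nn.Flatten replaces the manual view call (an assumption of this sketch).
sketch_net = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(64, 64, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(64, 128, kernel_size=3), nn.ReLU(), nn.MaxPool2d(2),
    nn.Flatten(),
    nn.Linear(512, 256), nn.ReLU(),
    nn.Linear(256, 10),
)

dummy_batch = torch.rand(4, 3, 32, 32)  # 4 random "images"
with torch.no_grad():
    logits = sketch_net(dummy_batch)

num_params = sum(p.numel() for p in sketch_net.parameters())
print(tuple(logits.shape))  # (4, 10): one score per class for each image
print(num_params)           # total number of trainable parameters
```

If the first Linear layer's in_features did not match the flattened size, the forward pass would raise a shape error here instead of partway through the first training epoch.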

d. A helper for printing progress logs

def printlog(info):
    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
    print("\n" + "==========" * 8 + '%s' % nowtime)  # separator line followed by a timestamp
    print(str(info) + "\n")

IV. Writing the training and test functions

1. Hyperparameters and device selection

device = 'cuda' if torch.cuda.is_available() else 'cpu'  # use the GPU when one is available
model = Cnn_net_2().to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
loss_fn = nn.CrossEntropyLoss()

2. train()

def train(train_dataloader, model, loss_fn, optimizer):
    size = len(train_dataloader.dataset)  # number of training samples
    num_of_batch = len(train_dataloader)  # number of batches per epoch
    train_correct, train_loss = 0.0, 0.0
    for x, y in train_dataloader:
        x, y = x.to(device), y.to(device)
        pre = model(x)  # forward pass
        loss = loss_fn(pre, y)
        optimizer.zero_grad()  # clear gradients from the previous step
        loss.backward()  # backpropagation
        optimizer.step()  # update the weights
        with torch.no_grad():
            train_correct += (pre.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()
    train_correct /= size  # accuracy over all samples
    train_loss /= num_of_batch  # mean of the per-batch losses
    return train_correct, train_loss

3. test()

def test(test_dataloader, model, loss_fn):
    size = len(test_dataloader.dataset)  # number of test samples
    num_of_batch = len(test_dataloader)  # number of batches
    test_correct, test_loss = 0.0, 0.0
    with torch.no_grad():  # no gradients are needed for evaluation
        for x, y in test_dataloader:
            x, y = x.to(device), y.to(device)
            pre = model(x)
            loss = loss_fn(pre, y)
            test_loss += loss.item()
            test_correct += (pre.argmax(1) == y).type(torch.float).sum().item()
    test_correct /= size
    test_loss /= num_of_batch
    return test_correct, test_loss
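Both functions count correct predictions with pre.argmax(1) == y and divide by the dataset size, while the loss is divided by the number of batches because nn.CrossEntropyLoss already returns the mean over each batch. The sketch below shows the accuracy bookkeeping on a tiny hand-made batch; the logits and labels are hypothetical values chosen for illustration.

```python
import torch
from torch import nn

# Hand-made logits for 4 samples and 3 classes (hypothetical values).
logits = torch.tensor([[2.0, 0.5, 0.1],
                       [0.2, 1.5, 0.3],
                       [0.1, 0.2, 3.0],
                       [1.0, 2.0, 0.5]])
labels = torch.tensor([0, 1, 2, 0])

predicted = logits.argmax(1)                      # index of the largest logit in each row
num_correct = (predicted == labels).sum().item()  # the last sample is misclassified
accuracy = num_correct / len(labels)

loss = nn.CrossEntropyLoss()(logits, labels)      # mean loss over the 4 samples

print(predicted.tolist())  # [0, 1, 2, 1]
print(accuracy)            # 0.75
print(loss.item())         # a positive scalar
```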

V. Training loop

epochs = 10
train_acc = []
train_loss = []
test_acc = []
test_loss = []
for epoch in range(epochs):
    printlog("Epoch: {0} / {1}".format(epoch + 1, epochs))  # 1-based epoch counter
    model.train()  # training mode
    epoch_train_acc, epoch_train_loss = train(train_dataloader, model, loss_fn, optimizer)
    model.eval()  # evaluation mode
    epoch_test_acc, epoch_test_loss = test(test_dataloader, model, loss_fn)
    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)
    template = ('train_loss:{:.5f}, train_acc:{:.5f}, test_loss:{:.5f}, test_acc:{:.5f}')
    print(template.format(epoch_train_loss, epoch_train_acc, epoch_test_loss, epoch_test_acc))
print('done')

VI. Visualizing the results

plt.plot(range(epochs), train_loss, label='train_loss')
plt.plot(range(epochs), train_acc, label='train_acc')
plt.plot(range(epochs), test_loss, label='test_loss')
plt.plot(range(epochs), test_acc, label='test_acc')
plt.legend()
plt.show()
print('done')

VII. Complete code

import torch
from torch import nn
import numpy as np
import pandas as pd
import datetime
import matplotlib.pyplot as plt
import torch.nn.functional as F
from torch.utils.data import DataLoader
import torchvision
from torchvision.transforms import ToTensor

train_dataset = torchvision.datasets.CIFAR10(root='./cifar10',
                                             transform=ToTensor(),
                                             train=True,
                                             download=True)
test_dataset = torchvision.datasets.CIFAR10(root='./cifar10',
                                            transform=ToTensor(),
                                            train=False,
                                            download=True)
train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)
test_dataloader = DataLoader(test_dataset, batch_size=32, shuffle=False)

img_batch, label_batch = next(iter(train_dataloader))
print(img_batch.shape)  # torch.Size([32, 3, 32, 32])
classes = ['airplane', 'automobile', 'bird', 'cat', 'deer',
           'dog', 'frog', 'horse', 'ship', 'truck']  # class names of the dataset
plt.figure(figsize=(12, 8))
for i, (img, label) in enumerate(zip(img_batch[:6], label_batch[:6])):
    img = img.permute(1, 2, 0).numpy()  # CHW -> HWC for matplotlib
    plt.subplot(1, 6, i + 1)
    plt.title(classes[label.item()])
    plt.imshow(img)
plt.show()

def printlog(info):
    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')
    print("\n" + "==========" * 8 + '%s' % nowtime)
    print(str(info) + "\n")

class Cnn_net_1(nn.Module):
    def __init__(self):
        super(Cnn_net_1, self).__init__()
        self.c1 = nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1)
        self.s1 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.c2 = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1)
        self.s2 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.c3 = nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1)
        self.s3 = nn.MaxPool2d(kernel_size=2, stride=2)
        self.l1 = nn.Linear(512, 256)
        self.l2 = nn.Linear(256, 10)

    def forward(self, x):
        x = F.relu(self.c1(x))
        x = self.s1(x)
        x = F.relu(self.c2(x))
        x = self.s2(x)
        x = F.relu(self.c3(x))
        x = self.s3(x)
        x = x.view(-1, 128*2*2)
        x = F.relu(self.l1(x))
        x = self.l2(x)
        return x

class Cnn_net_2(nn.Module):
    def __init__(self):
        super(Cnn_net_2, self).__init__()
        self.c1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2)
        )
        self.l1 = nn.Sequential(
            nn.Linear(512, 256),
            nn.ReLU(),
            nn.Linear(256, 10)
        )

    def forward(self, x):
        x = self.c1(x)
        x = x.view(-1, 512)
        x = self.l1(x)
        return x

device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = Cnn_net_2().to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
loss_fn = nn.CrossEntropyLoss()

def train(train_dataloader, model, loss_fn, optimizer):
    size = len(train_dataloader.dataset)
    num_of_batch = len(train_dataloader)
    train_correct, train_loss = 0.0, 0.0
    for x, y in train_dataloader:
        x, y = x.to(device), y.to(device)
        pre = model(x)
        loss = loss_fn(pre, y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        with torch.no_grad():
            train_correct += (pre.argmax(1) == y).type(torch.float).sum().item()
            train_loss += loss.item()
    train_correct /= size
    train_loss /= num_of_batch
    return train_correct, train_loss

def test(test_dataloader, model, loss_fn):
    size = len(test_dataloader.dataset)
    num_of_batch = len(test_dataloader)
    test_correct, test_loss = 0.0, 0.0
    with torch.no_grad():
        for x, y in test_dataloader:
            x, y = x.to(device), y.to(device)
            pre = model(x)
            loss = loss_fn(pre, y)
            test_loss += loss.item()
            test_correct += (pre.argmax(1) == y).type(torch.float).sum().item()
    test_correct /= size
    test_loss /= num_of_batch
    return test_correct, test_loss

epochs = 10
train_acc = []
train_loss = []
test_acc = []
test_loss = []
for epoch in range(epochs):
    printlog("Epoch: {0} / {1}".format(epoch + 1, epochs))  # 1-based epoch counter
    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dataloader, model, loss_fn, optimizer)
    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dataloader, model, loss_fn)
    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)
    template = ('train_loss:{:.5f}, train_acc:{:.5f}, test_loss:{:.5f}, test_acc:{:.5f}')
    print(template.format(epoch_train_loss, epoch_train_acc, epoch_test_loss, epoch_test_acc))
print('done')

plt.plot(range(epochs), train_loss, label='train_loss')
plt.plot(range(epochs), train_acc, label='train_acc')
plt.plot(range(epochs), test_loss, label='test_loss')
plt.plot(range(epochs), test_acc, label='test_acc')
plt.legend()
plt.show()
print('done')

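VIII. Summary

Takeaways from writing this color image recognition program:

1. Know how to load and work with a built-in dataset, understand what happens when iterating over it to view images, and know how torch stores images.

2. Understand the basic role and principle of convolution, pooling, and fully connected layers, and be able to derive their output sizes.

3. Be able to build a simple CNN and understand its forward pass.

4. Understand how training and testing work.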