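DeepStream Study Notes (2): deepstream_test_1.py Explained

deepstream_test_1.py Explained

- I. Visualizing the pipeline

- II. Creating the pipeline and its elements

- 1. Initialize GStreamer and create the pipeline

- 2. Create the element that handles the input source

- 3. Parse the H.264 file

- 4. Create the hardware decoder

- 5. Create the streammux to form a batch from one or more sources

- 6. Create the inference element and run inference

- 7. Create the video color format conversion plugin

- 8. Create the plugin that draws bounding boxes

- 9. Create the display plugin

- III. Set the properties of each element in the pipeline

- IV. Add the elements to the pipeline

- V. Link the elements

- VI. Create an event loop, start playback, and listen for events

- References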

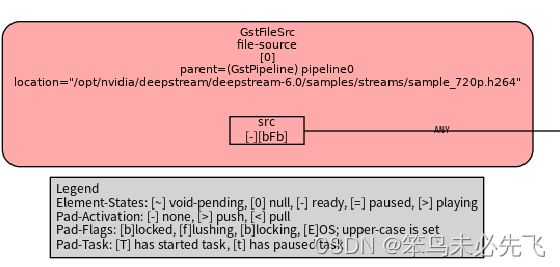

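I. Visualizing the pipeline

Following the blog post "Nvidia Deepstream小细节系列:Deepstream python保存pipeline结构图" (Nvidia DeepStream small-details series: saving the pipeline graph from DeepStream Python), I generated a graph of the pipeline that deepstream_test_1.py builds.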

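As a minimal sketch of how such a graph can be produced from Python: GStreamer writes a .dot file when the GST_DEBUG_DUMP_DOT_DIR environment variable is set before Gst.init() and Gst.debug_bin_to_dot_file() is called on the pipeline. The helper names and the directory are illustrative assumptions of mine, not part of the sample, and rendering to PNG assumes the Graphviz dot tool is installed.

```python
import os


def enable_dot_dumps(dot_dir):
    """Tell GStreamer where to write .dot files; call this before Gst.init()."""
    os.makedirs(dot_dir, exist_ok=True)
    os.environ["GST_DEBUG_DUMP_DOT_DIR"] = dot_dir


def dump_pipeline_graph(pipeline, name="pipeline"):
    """Write <dot_dir>/<name>.dot for an already assembled pipeline."""
    # Imported lazily so the other helpers also work where GStreamer is absent.
    import gi
    gi.require_version("Gst", "1.0")
    from gi.repository import Gst
    Gst.debug_bin_to_dot_file(pipeline, Gst.DebugGraphDetails.ALL, name)


def dot_to_png_command(dot_dir, name="pipeline"):
    """Build the Graphviz command line that renders the .dot file to a PNG."""
    dot_path = os.path.join(dot_dir, name + ".dot")
    png_path = os.path.join(dot_dir, name + ".png")
    return ["dot", "-Tpng", dot_path, "-o", png_path]
```

In the sample, enable_dot_dumps() would go before Gst.init(None) and dump_pipeline_graph(pipeline) after all elements are linked; the returned command can then be run with subprocess.run().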

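II. Creating the pipeline and its elements

1. Initialize GStreamer and create the pipeline

    # Imports used by the excerpts in this post (as in NVIDIA's sample)
    import sys
    sys.path.append('../')  # lets Python find the sample repo's common package
    import gi
    gi.require_version('Gst', '1.0')
    from gi.repository import GObject, Gst
    from common.is_aarch_64 import is_aarch64
    from common.bus_call import bus_call

    def main(args):
        # Check input arguments
        if len(args) != 2:
            sys.stderr.write("usage: %s <media file or uri>\n" % args[0])
            sys.exit(1)

        # Standard GStreamer initialization
        # (threads_init is unnecessary since PyGObject 3.11; kept as in the sample)
        GObject.threads_init()
        Gst.init(None)

        # Create gstreamer elements
        # Create Pipeline element that will form a connection of other elements
        print("Creating Pipeline \n ")
        pipeline = Gst.Pipeline()
        if not pipeline:
            sys.stderr.write(" Unable to create Pipeline \n")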

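2. Create the element that handles the input source

This step uses GStreamer's filesrc plugin, which reads data from a file. The GStreamer plugin documentation describes filesrc in detail.

        print("Creating Source \n ")
        source = Gst.ElementFactory.make("filesrc", "file-source")
        if not source:
            sys.stderr.write(" Unable to create Source \n")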
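The create-then-check pattern above repeats for every element in this sample. A small wrapper can make a failure stop the program instead of only printing a message. This is a sketch of my own, not part of the sample: make_element is a hypothetical helper, and the factory function is passed in as a parameter so that the helper can be tried without GStreamer installed.

```python
def make_element(factory_make, factory_name, element_name):
    """Create an element and raise if the factory returns nothing.

    factory_make is expected to behave like Gst.ElementFactory.make,
    which returns None when the element cannot be created.
    """
    element = factory_make(factory_name, element_name)
    if not element:
        raise RuntimeError(
            "Unable to create element '%s' from factory '%s'"
            % (element_name, factory_name)
        )
    return element
```

With GStreamer available, the call would look like make_element(Gst.ElementFactory.make, "filesrc", "file-source").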

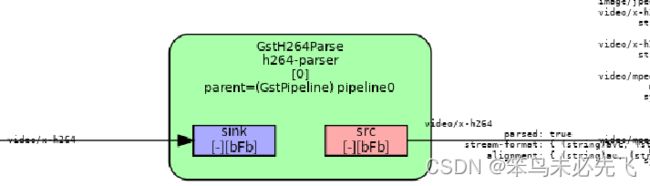

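3. Parse the H.264 file

If the input video is a .h264 file, it has to pass through an H.264 parser, GstH264Parse (the h264parse element); the GStreamer documentation explains it in detail. We do not need to configure GstH264Parse at all; creating the element is enough.

        print("Creating H264Parser \n")
        h264parser = Gst.ElementFactory.make("h264parse", "h264-parser")
        if not h264parser:
            sys.stderr.write(" Unable to create h264 parser \n")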
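To give a feel for the kind of work a parser does on a raw .h264 file, here is a toy sketch that splits an Annex B byte stream at its start codes (00 00 01) and reads the NAL unit type from the low five bits of each unit's first byte. It is not how h264parse is implemented and it ignores emulation-prevention bytes; the function names are my own.

```python
NAL_TYPE_NAMES = {
    1: "non-IDR slice",
    5: "IDR slice",
    6: "SEI",
    7: "SPS",
    8: "PPS",
    9: "AUD",
}


def split_nal_units(data):
    """Return the NAL units found between Annex B start codes."""
    starts = []
    i = 0
    while i + 3 <= len(data):
        if data[i:i + 3] == b"\x00\x00\x01":
            starts.append(i)
            i += 3
        else:
            i += 1
    units = []
    for k, start in enumerate(starts):
        end = starts[k + 1] if k + 1 < len(starts) else len(data)
        # Trailing zero bytes belong to the next 4-byte start code or to
        # padding; a NAL unit itself never ends with 0x00.
        unit = data[start + 3:end].rstrip(b"\x00")
        if unit:
            units.append(unit)
    return units


def nal_unit_types(data):
    """Return the nal_unit_type (low 5 bits of the header byte) of each unit."""
    return [unit[0] & 0x1F for unit in split_nal_units(data)]
```

For a typical stream the first types are 7 (SPS) and 8 (PPS), followed by slice units such as 5 (IDR slice).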

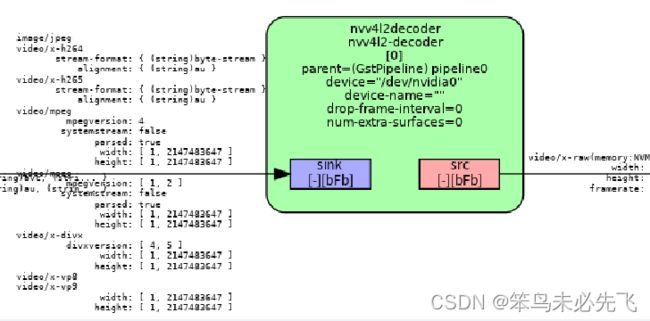

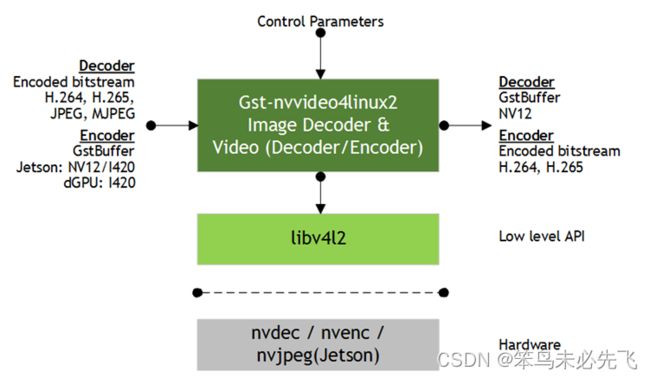

4. Create the hardware decoder

After the H.264 parser, the data goes through a hardware decoder, nvv4l2decoder. This is an NVIDIA plugin, not one of GStreamer's own plugins.

        print("Creating Decoder \n")
        decoder = Gst.ElementFactory.make("nvv4l2decoder", "nvv4l2-decoder")
        if not decoder:
            sys.stderr.write(" Unable to create Nvv4l2 Decoder \n")

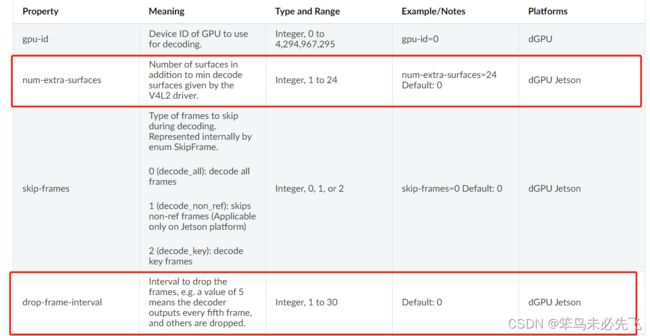

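The following information comes from NVIDIA's documentation for the Gst-nvvideo4linux2 plugin. As its overview figure shows, the plugin can act as either a decoder or an encoder.

In this sample the plugin is used as a decoder; its inputs, outputs, and control parameters are listed in the plugin documentation.

Two of its properties appear in the pipeline graph. According to that documentation, drop-frame-interval is the interval at which frames are dropped (for example, a value of 5 means the decoder outputs every fifth frame and drops the rest), and num-extra-surfaces is the number of surfaces allocated in addition to the minimum number of decode surfaces required by the V4L2 driver.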
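The sample leaves both properties untouched. If they needed changing, it could be done with set_property, as in the sketch below. configure_decoder is a hypothetical helper of mine, and the values in the usage note are arbitrary illustrations, not recommendations.

```python
def configure_decoder(decoder, drop_frame_interval, num_extra_surfaces):
    """Set the two decoder properties that show up in the pipeline graph.

    decoder is any object with a GObject-style set_property(name, value),
    such as the nvv4l2decoder element created above.
    """
    decoder.set_property("drop-frame-interval", drop_frame_interval)
    decoder.set_property("num-extra-surfaces", num_extra_surfaces)
```

For example, configure_decoder(decoder, 5, 4) would ask the decoder to output every fifth frame and to allocate four extra surfaces.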

5. Create the streammux to form a batch from one or more sources

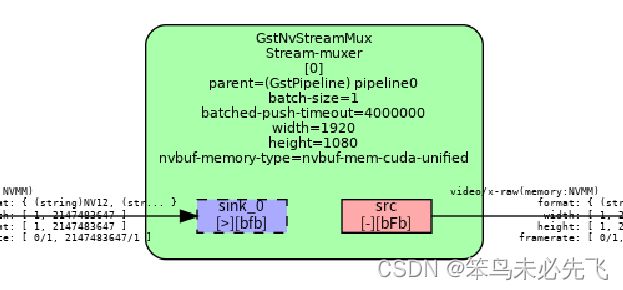

When there are several input sources, the nvstreammux plugin combines them into a batch. It is also an NVIDIA plugin; see the DeepStream documentation for Gst-nvstreammux for details.

        # Create nvstreammux instance to form batches from one or more sources.
        streammux = Gst.ElementFactory.make("nvstreammux", "Stream-muxer")
        if not streammux:
            sys.stderr.write(" Unable to create NvStreamMux \n")

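This sample has only one input source, so the effect of the plugin is hard to see; a figure with several sources makes it clearer.

The plugin's inputs, outputs, and control parameters are listed in its documentation. The control parameters used in this sample are width, height, batch-size, and batched-push-timeout.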
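Since a single source hides what batching means, here is a toy model in plain Python: frames from several sources are taken round-robin and grouped into batches of at most batch_size, and a leftover partial batch is still pushed, much as a timeout would do. This is only a conceptual sketch under my own assumptions; it is not the scheduling algorithm nvstreammux actually uses.

```python
from itertools import zip_longest

_MISSING = object()  # marks a source that has run out of frames


def form_batches(sources, batch_size):
    """Group frames from several sources into batches of (source_id, frame)."""
    batches = []
    current = []
    for round_frames in zip_longest(*sources, fillvalue=_MISSING):
        for source_id, frame in enumerate(round_frames):
            if frame is _MISSING:
                continue
            current.append((source_id, frame))
            if len(current) == batch_size:
                batches.append(current)
                current = []
    if current:
        # Incomplete batch: pushed anyway, like a batch released by a timeout.
        batches.append(current)
    return batches
```

With one source and batch_size 1, as in this sample, every batch holds exactly one frame.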

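6. Create the inference element and run inference

The nvinfer plugin runs inference on the input data using NVIDIA TensorRT and is one of the most important plugins in DeepStream. It receives NV12/RGBA buffers from the upstream nvstreammux plugin, transforms them as the network requires (format conversion and scaling), and passes the result to a low-level library. That library preprocesses the data (normalization and mean subtraction) and hands it to the TensorRT engine for inference. See the DeepStream documentation for Gst-nvinfer for details.

        # Use nvinfer to run inferencing on decoder's output,
        # behaviour of inferencing is set through config file
        pgie = Gst.ElementFactory.make("nvinfer", "primary-inference")
        if not pgie:
            sys.stderr.write(" Unable to create pgie \n")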
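The normalization and mean subtraction mentioned above are described in NVIDIA's Gst-nvinfer documentation as y = net-scale-factor * (x - mean), where net-scale-factor comes from the inference config file. A plain-Python sketch of that formula follows; the function names and the scale factor of 1/255 in the usage note are illustrative assumptions of mine.

```python
def preprocess_pixel(x, net_scale_factor, mean=0.0):
    """Apply y = net_scale_factor * (x - mean) to one pixel value."""
    return net_scale_factor * (x - mean)


def preprocess_channel(values, net_scale_factor, mean=0.0):
    """Apply the same normalization to every value of one color channel."""
    return [preprocess_pixel(v, net_scale_factor, mean) for v in values]
```

With a scale factor of 1/255 and no mean, 8-bit pixel values are mapped into the range 0 to 1.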


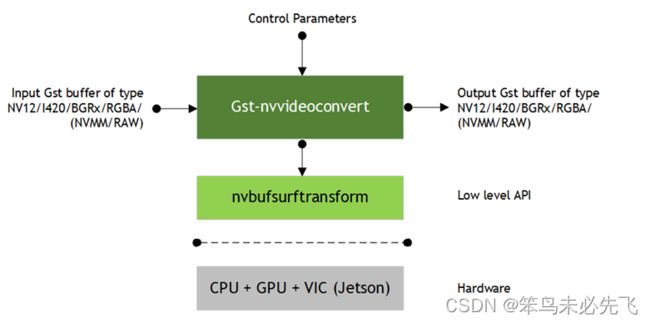

7. Create the video color format conversion plugin

The nvvideoconvert plugin performs video color format conversion. See the DeepStream documentation for Gst-nvvideoconvert for details.

        # Use convertor to convert from NV12 to RGBA as required by nvosd
        nvvidconv = Gst.ElementFactory.make("nvvideoconvert", "convertor")
        if not nvvidconv:
            sys.stderr.write(" Unable to create nvvidconv \n")
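To make "NV12 to RGBA" concrete, the sketch below converts one pixel of an NV12 frame in plain Python. NV12 stores a full-resolution Y plane followed by a half-resolution plane of interleaved U and V bytes. The sketch assumes BT.601 limited-range coefficients, an even width, and rows without padding; the plugin does this on the GPU and may use a different color matrix, so treat it as an illustration only.

```python
def _clamp(value):
    """Round to the nearest integer and clamp into the 0..255 byte range."""
    return max(0, min(255, int(round(value))))


def nv12_pixel_to_rgba(buf, width, height, x, y):
    """Return (R, G, B, A) for pixel (x, y) of a tightly packed NV12 frame."""
    luma = buf[y * width + x]
    # One U,V byte pair is shared by each 2x2 block of pixels.
    uv_offset = width * height + (y // 2) * width + (x // 2) * 2
    u = buf[uv_offset]
    v = buf[uv_offset + 1]
    c, d, e = luma - 16, u - 128, v - 128
    r = _clamp(1.164 * c + 1.596 * e)
    g = _clamp(1.164 * c - 0.392 * d - 0.813 * e)
    b = _clamp(1.164 * c + 2.017 * d)
    return (r, g, b, 255)
```

The alpha channel is simply set to fully opaque, since NV12 carries no transparency.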

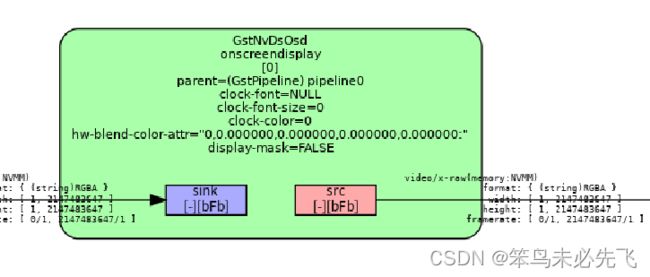

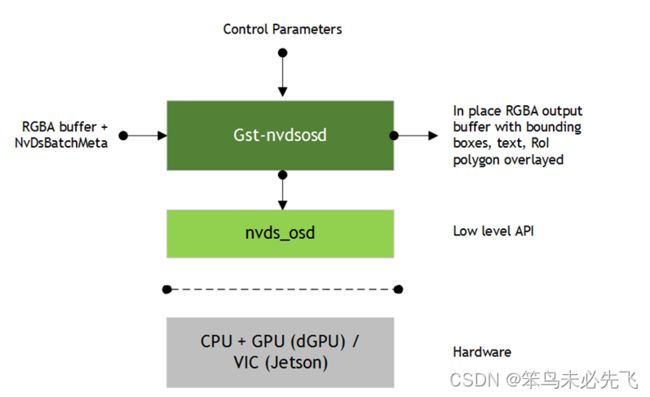

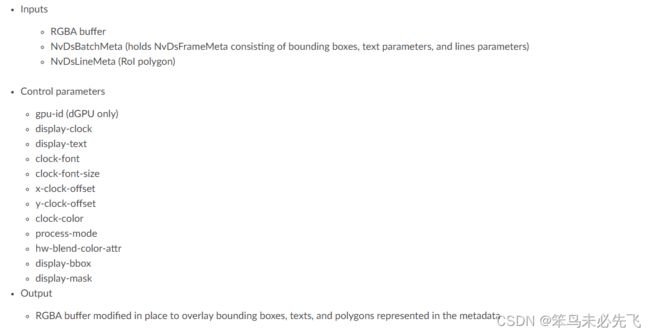

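8. Create the plugin that draws bounding boxes

The nvdsosd plugin draws the inference results onto the video frames. See the DeepStream documentation for Gst-nvdsosd for details.

        # Create OSD to draw on the converted RGBA buffer
        nvosd = Gst.ElementFactory.make("nvdsosd", "onscreendisplay")
        if not nvosd:
            sys.stderr.write(" Unable to create nvosd \n")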

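9. Create the display plugin

The nveglglessink plugin renders the result on a display; I could not find detailed documentation for it. If you do not want the output displayed, use the fakesink plugin instead.

        # print("Creating EGLSink \n")
        # sink = Gst.ElementFactory.make("nveglglessink", "nvvideo-renderer")
        # if not sink:
        #     sys.stderr.write(" Unable to create egl sink \n")

        # Create a fakesink, which discards buffers instead of displaying them
        print("Creating fakesink \n")
        fakesink = Gst.ElementFactory.make("fakesink", "fakesink")
        if not fakesink:
            sys.stderr.write(" Unable to create fakesink \n")

        # On Jetson (aarch64) the original sample also creates nvegltransform;
        # the add and link steps later refer to it as transform.
        if is_aarch64():
            transform = Gst.ElementFactory.make("nvegltransform", "nvegl-transform")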

III. Set the properties of each element in the pipeline

        print("Playing file %s " % args[1])
        source.set_property('location', args[1])  # path of the input file
        streammux.set_property('width', 1920)  # width of the muxer's output frames
        streammux.set_property('height', 1080)  # height of the muxer's output frames
        streammux.set_property('batch-size', 1)  # number of frames per batch
        streammux.set_property('batched-push-timeout', 4000000)  # in microseconds
        pgie.set_property('config-file-path', "dstest1_pgie_config.txt")  # inference configuration file
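batched-push-timeout is expressed in microseconds, so the value 4000000 means the muxer waits up to 4 seconds for a complete batch before pushing an incomplete one. The sketch below only shows the unit arithmetic. Choosing a timeout close to one frame interval for live sources is a rule of thumb I am assuming here, not something this sample does, and both function names are my own.

```python
def frame_interval_us(fps):
    """Duration of one frame in microseconds for a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return int(round(1000000 / fps))


def timeout_seconds(timeout_us):
    """Convert a batched-push-timeout value from microseconds to seconds."""
    return timeout_us / 1000000
```

For a 25 fps source one frame interval is 40000 microseconds, which is a hundred times shorter than the sample's setting.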

IV. Add the elements to the pipeline

        print("Adding elements to Pipeline \n")
        pipeline.add(source)
        pipeline.add(h264parser)
        pipeline.add(decoder)
        pipeline.add(streammux)
        pipeline.add(pgie)
        pipeline.add(nvvidconv)
        pipeline.add(nvosd)
        # pipeline.add(sink)
        pipeline.add(fakesink)
        if is_aarch64():
            pipeline.add(transform)

V. Link the elements

        # file-source -> h264-parser -> nvh264-decoder ->
        # nvinfer -> nvvidconv -> nvosd -> video-renderer
        print("Linking elements in the Pipeline \n")
        source.link(h264parser)
        h264parser.link(decoder)

        # streammux needs special handling: its sink pads are request pads, so
        # request sink_0 and link the decoder's src pad to it by hand.
        sinkpad = streammux.get_request_pad("sink_0")
        if not sinkpad:
            sys.stderr.write(" Unable to get the sink pad of streammux \n")
        srcpad = decoder.get_static_pad("src")
        if not srcpad:
            sys.stderr.write(" Unable to get source pad of decoder \n")
        srcpad.link(sinkpad)

        streammux.link(pgie)
        pgie.link(nvvidconv)
        nvvidconv.link(nvosd)
        if is_aarch64():
            nvosd.link(transform)
            # transform.link(sink)
            transform.link(fakesink)
        else:
            # nvosd.link(sink)
            nvosd.link(fakesink)
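The link calls above ignore their return values, although Gst.Element.link returns False when two elements cannot be linked. A hypothetical helper such as link_chain below (my own sketch, not part of the sample) links a sequence of elements in order and stops at the first failure.

```python
def link_chain(*elements):
    """Link each element to the next one; raise on the first failed link.

    Each element needs link(other) returning a truthy value on success and
    get_name(), as Gst.Element provides.
    """
    for upstream, downstream in zip(elements, elements[1:]):
        if not upstream.link(downstream):
            raise RuntimeError(
                "Unable to link %s -> %s"
                % (upstream.get_name(), downstream.get_name())
            )
```

In this pipeline it could be used as link_chain(source, h264parser, decoder) and link_chain(streammux, pgie, nvvidconv, nvosd, fakesink); the decoder-to-streammux connection still has to go through the request pad.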

VI. Create an event loop, start playback, and listen for events

I have not fully understood this part yet; the article "NVIDIA Jetson Nano 2GB 系列文章(35):Python版test1实战说明" (NVIDIA Jetson Nano 2GB series, article 35: a hands-on walkthrough of the Python test1 sample) explains it.

        # create an event loop and feed gstreamer bus messages to it
        loop = GObject.MainLoop()
        bus = pipeline.get_bus()
        bus.add_signal_watch()
        bus.connect("message", bus_call, loop)

        # Add a probe to be informed of the generated metadata. The probe goes on
        # the sink pad of the osd element, since by that time the buffer has
        # received all the metadata.
        osdsinkpad = nvosd.get_static_pad("sink")
        if not osdsinkpad:
            sys.stderr.write(" Unable to get sink pad of nvosd \n")
        osdsinkpad.add_probe(Gst.PadProbeType.BUFFER, osd_sink_pad_buffer_probe, 0)

        # start play back and listen to events
        print("Starting pipeline \n")
        pipeline.set_state(Gst.State.PLAYING)
        try:
            loop.run()
        except:
            # Ctrl+C or any other exception ends the loop; fall through to cleanup
            pass

        # cleanup
        pipeline.set_state(Gst.State.NULL)
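The code above relies on two callbacks that this post does not show: bus_call, which reacts to messages on the pipeline bus, and osd_sink_pad_buffer_probe, which reads the metadata attached to each buffer. The sketch below is modeled on the versions in NVIDIA's Python sample repository, simplified to the essentials. As an assumption of mine, the Gst and pyds modules are passed in as parameters (make_bus_call and make_osd_probe are hypothetical factory names) so that the logic can be exercised without DeepStream installed.

```python
import sys


def make_bus_call(Gst):
    """Build a bus handler: quit the loop on end-of-stream or on error."""
    def bus_call(bus, message, loop):
        msg_type = message.type
        if msg_type == Gst.MessageType.EOS:
            sys.stdout.write("End-of-stream\n")
            loop.quit()
        elif msg_type == Gst.MessageType.WARNING:
            err, debug = message.parse_warning()
            sys.stderr.write("Warning: %s: %s\n" % (err, debug))
        elif msg_type == Gst.MessageType.ERROR:
            err, debug = message.parse_error()
            sys.stderr.write("Error: %s: %s\n" % (err, debug))
            loop.quit()
        return True  # keep receiving messages
    return bus_call


def make_osd_probe(Gst, pyds, counts):
    """Build a pad probe that counts detected objects per class id."""
    def osd_sink_pad_buffer_probe(pad, info, u_data):
        gst_buffer = info.get_buffer()
        if not gst_buffer:
            return Gst.PadProbeReturn.OK
        batch_meta = pyds.gst_buffer_get_nvds_batch_meta(hash(gst_buffer))
        l_frame = batch_meta.frame_meta_list
        while l_frame is not None:
            frame_meta = pyds.NvDsFrameMeta.cast(l_frame.data)
            l_obj = frame_meta.obj_meta_list
            while l_obj is not None:
                obj_meta = pyds.NvDsObjectMeta.cast(l_obj.data)
                counts[obj_meta.class_id] = counts.get(obj_meta.class_id, 0) + 1
                try:
                    l_obj = l_obj.next
                except StopIteration:
                    break
            try:
                l_frame = l_frame.next
            except StopIteration:
                break
        return Gst.PadProbeReturn.OK
    return osd_sink_pad_buffer_probe
```

Read this way, the event loop is simple: loop.run() blocks, GStreamer posts messages to the bus while the pipeline plays, bus_call is invoked for each message, and loop.quit() on end-of-stream or error lets main() continue to the cleanup step. The probe runs once for every buffer that reaches the nvdsosd sink pad.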

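References

Nvidia Deepstream极致细节:1. Deepstream Python 官方案例1:deepstream_test_1 (Nvidia DeepStream in extreme detail, 1: the official DeepStream Python sample 1, deepstream_test_1)

NVIDIA Jetson Nano 2GB 系列文章(35):Python版test1实战说明 (NVIDIA Jetson Nano 2GB series, article 35: a hands-on walkthrough of the Python test1 sample)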