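Building TensorFlow from Source

Compared with the pitfalls you hit when building each version from source, installing with pip really is painless. However, my project's entire perception module is implemented in C++, so the deep-learning forward pass had to be written in C++ as well. This is the build process I went through last year. Quite a few pitfalls went unrecorded at the time, and I will patch this post when I get the chance.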

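Installing the NVIDIA GPU Driver

Whichever installation method you choose, running the GPU version requires three things: the GPU driver, CUDA, and cuDNN.

First, download the driver that matches your GPU from the official site, https://www.nvidia.cn/.

1. Edit blacklist.conf from a console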

sudo vim /etc/modprobe.d/blacklist.conf

Append the following to the end of the file:

blacklist nouveau

options nouveau modeset=0

2. Regenerate the initramfs so the blacklist takes effect

sudo update-initramfs -u

3. Reboot and verify that nouveau is disabled. If the command below prints nothing, nouveau is disabled

lsmod | grep nouveau

4. Stop the graphical interface: press Ctrl+Alt+F1 to switch to a text console

sudo service lightdm stop

5. Install the driver. For the 950M in my laptop, for example, I downloaded NVIDIA-Linux-x86_64-430.40.run

sudo ./NVIDIA-Linux-x86_64-430.40.run -no-x-check -no-opengl-files

------------------------------------------

#-no-x-check: skip the check for a running X server during installation

#-no-opengl-files: install only the driver files, not the OpenGL files

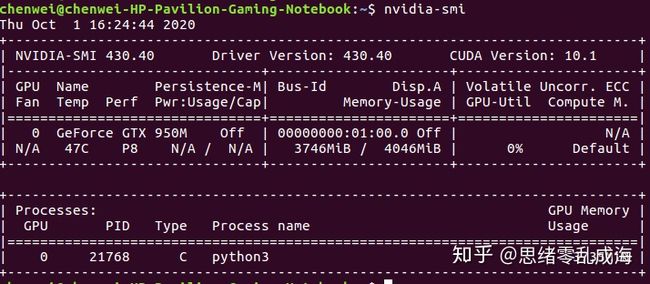

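6. Verify the driver installation

Run nvidia-smi. If it prints the driver version and a table listing the GPU, the driver was installed successfully.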
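The nouveau check from step 3 can also be scripted instead of read by eye. The following is only a minimal sketch: the helper name `nouveau_loaded` is hypothetical, and the sample text is made up to imitate the three-column layout that lsmod prints.

```python
def nouveau_loaded(lsmod_output):
    """Return True if one of the module lines in `lsmod` output is nouveau."""
    for line in lsmod_output.splitlines():
        fields = line.split()
        # The first column of each lsmod line is the module name.
        if fields and fields[0] == "nouveau":
            return True
    return False


# Made-up sample in the lsmod layout: module name, size, use count.
sample = """Module                  Size  Used by
nvidia_drm             45056  1
nvidia              19000000  50 nvidia_drm
"""
print(nouveau_loaded(sample))  # False
```

On a real machine the text would come from running lsmod, for example through `subprocess.check_output(["lsmod"])` decoded to a string.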

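Installing CUDA and cuDNN

Other people's blog posts describe many installation methods and version pairings. Here I only record the combination I tested myself: CUDA 9.2 with cuDNN 7.1.4. CUDA 9.2 turned out to be an awkward choice: the prebuilt TensorFlow packages available online are built against CUDA 9.0, 9.1, or 10.0, so after I installed TensorFlow 1.12 with pip it failed at run time because it could not find the CUDA 9.0 libraries. Building from source, as described later, avoids this problem.

1. Download the CUDA and cuDNN installers

Go to https://developer.nvidia.com/cuda-downloads and download the installer for your version, choosing the runfile (local) installer type. I downloaded cuda_9.2.148_396.37_linux.run

2. Disable the nouveau driver and stop the graphical interface

This was already done when installing the driver; see the steps above. nouveau is the third-party open-source driver that Ubuntu 16.04 installs by default, and it conflicts with the CUDA installation, so it has to be disabled first.

3. Run the CUDA installer

cd to the directory containing the runfile and run the following command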

sudo sh cuda_9.2.148_396.37_linux.run --no-x-check --no-opengl-files

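# The installer starts. Follow the prompts step by step: hold Enter to scroll through the license, type the responses it asks for, decline the driver installation, and answer yes to the rest.

# After the installation finishes, restart the graphical interface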

sudo service lightdm start

4. Set environment variables

sudo gedit ~/.bashrc

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda-9.2/lib64

export PATH=$PATH:/usr/local/cuda-9.2/bin

export CUDA_HOME=/usr/local/cuda-9.2

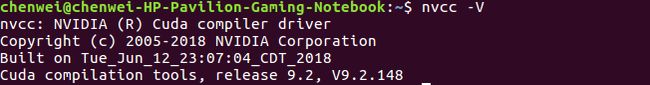

Verify the CUDA Toolkit with nvcc -V:

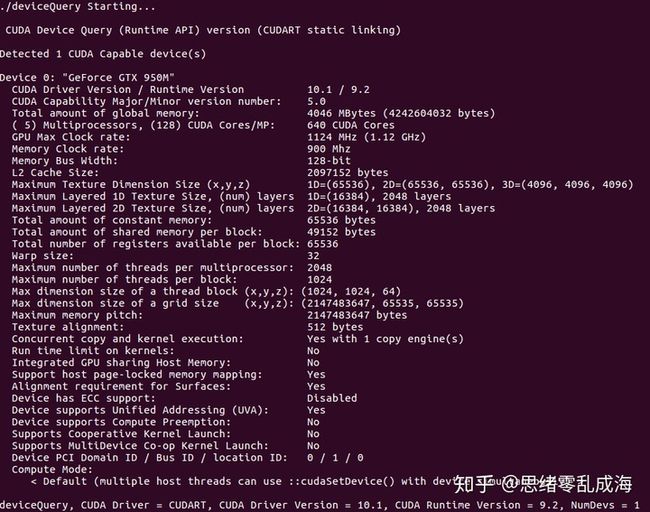

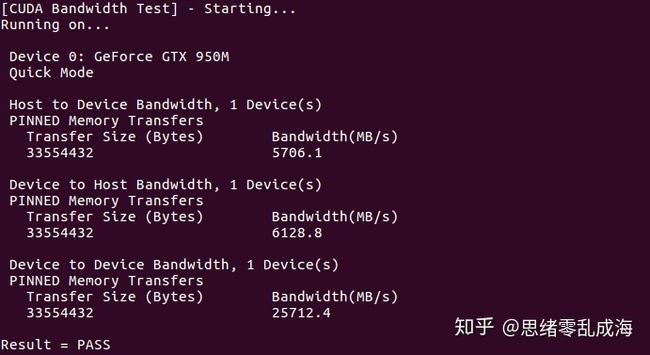

5. Build the samples shipped with CUDA to check that CUDA works

cd /home/chenwei/NVIDIA_CUDA-9.2_Samples

make

The build takes a while. Once it succeeds, the binaries are placed under NVIDIA_CUDA-9.2_Samples/bin. Run the following binaries to check that they work

cd /home/chenwei/NVIDIA_CUDA-9.2_Samples/bin/x86_64/linux/release

./deviceQuery

./bandwidthTest

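If both programs end with Result = PASS, CUDA is working

6. Install cuDNN

Go to https://developer.nvidia.com/rdp/cudnn-archive and download the version that matches your CUDA; I chose 7.1.4. Installing cuDNN simply means copying the cuDNN headers into the CUDA include directory and the cuDNN libraries into the CUDA library directory. Extract the downloaded cuDNN archive first, then run the following commands:

# Copy the cuDNN headers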

sudo cp cuda/include/* /usr/local/cuda-9.2/include/

# Copy the cuDNN libraries

sudo cp cuda/lib64/* /usr/local/cuda-9.2/lib64/

# Add execute permission

sudo chmod +x /usr/local/cuda-9.2/include/cudnn.h

sudo chmod +x /usr/local/cuda-9.2/lib64/libcudnn*

7. Check that cuDNN is installed

cat /usr/local/cuda/include/cudnn.h | grep CUDNN_MAJOR -A 2

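If the output shows the version defines (CUDNN_MAJOR 7, CUDNN_MINOR 1, and CUDNN_PATCHLEVEL 4 for version 7.1.4), the installation succeeded.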
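The same check can be done programmatically by pulling the three version defines out of the header text. This is a minimal sketch: `cudnn_version` is a hypothetical helper, and the sample string only imitates the lines that the grep command above selects from cudnn.h.

```python
import re


def cudnn_version(header_text):
    """Extract (major, minor, patch) from the text of cudnn.h.

    Returns None if any of the three defines is missing.
    """
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        pattern = r"^\s*#define\s+" + name + r"\s+(\d+)\s*$"
        match = re.search(pattern, header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


# Sample text imitating the relevant lines of cudnn.h for cuDNN 7.1.4.
sample = """#define CUDNN_MAJOR 7
#define CUDNN_MINOR 1
#define CUDNN_PATCHLEVEL 4
"""
print(cudnn_version(sample))  # (7, 1, 4)
```

To check a real installation, read /usr/local/cuda/include/cudnn.h into a string and pass it to the function.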

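Installing Bazel

There are three ways to install Bazel on Ubuntu:

- The binary installer

- The traditional APT repository

- Building Bazel from source

I used the binary installer this time. The steps are as follows:

1. Install the prerequisites

First install JDK 8, adding the PPA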

sudo add-apt-repository ppa:webupd8team/java # add the PPA

sudo apt-get update # refresh the package index

sudo apt-get install openjdk-8-jdk # install Java 8

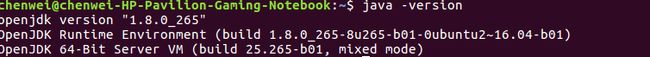

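After the installation, type java -version

If the Java version information is printed, the JDK was installed successfully

Install the dependencies pkg-config, zip, g++, zlib1g-dev, unzip, and python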

sudo apt-get install pkg-config zip g++ zlib1g-dev unzip python3

2.下载Bazel安装文件

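From the releases page of the Bazel GitHub repository, download the binary installer named bazel-<version>-installer-linux-x86_64.sh. Download page: https://github.com/bazelbuild/bazel/releases . I downloaded version 0.15.1.

3. Run the installer

Run the Bazel installer as follows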

chmod +x bazel-0.15.1-installer-linux-x86_64.sh

./bazel-0.15.1-installer-linux-x86_64.sh --user

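The --user flag installs Bazel into the $HOME/bin directory and sets the .bazelrc path to $HOME/.bazelrc.

4. Set environment variables

If you ran the installer with the --user flag as in the previous step, the Bazel executable is now in your $HOME/bin directory.

Run sudo gedit ~/.bashrc and add the following as the last line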

export PATH="$PATH:$HOME/bin"

Then apply it: source ~/.bashrc

5. Uninstalling Bazel

To uninstall Bazel, do the following:

rm -rf ~/.bazel

rm -f ~/bin/bazel

rm -rf /usr/bin/bazel

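Comment out the Bazel environment variable in ~/.bashrc

Building TensorFlow

With the CUDA environment and the build tool installed, we get to the main topic: building TensorFlow from source.

1. Install the dependencies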

sudo apt-get install default-jdk python-dev python3-dev python-numpy python3-numpy build-essential python-pip python3-pip python-virtualenv swig python-wheel python3-wheel libcurl3-dev zip zlib1g-dev unzip

2. Download the TensorFlow source

Download the TensorFlow source from GitHub. The version I built is 1.12.3

git clone https://github.com/tensorflow/tensorflow

cd tensorflow

git checkout r1.12

3. Configure the build

Before building the source with Bazel, run the configure script in the TensorFlow source directory to set the necessary options:

chenwei@chenwei-HP-Pavilion-Gaming-Notebook:~/tensorflow$ ./configure

You have bazel 0.15.1 installed.

Please specify the location of python. [Default is /usr/bin/python]: /usr/bin/python3.5

Found possible Python library paths:

/usr/local/lib/python3.5/dist-packages

/usr/lib/python3/dist-packages

Please input the desired Python library path to use. Default is [/usr/local/lib/python3.5/dist-packages]

Do you wish to build TensorFlow with jemalloc as malloc support? [Y/n]: n

No jemalloc as malloc support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Google Cloud Platform support? [Y/n]: n

No Google Cloud Platform support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Hadoop File System support? [Y/n]: n

No Hadoop File System support will be enabled for TensorFlow.

Do you wish to build TensorFlow with Amazon S3 File System support? [Y/n]: n

No Amazon S3 File System support will be enabled for TensorFlow.

Do you wish to build TensorFlow with XLA JIT support? [y/N]: n

No XLA JIT support will be enabled for TensorFlow.

Do you wish to build TensorFlow with GDR support? [y/N]: n

No GDR support will be enabled for TensorFlow.

Do you wish to build TensorFlow with VERBS support? [y/N]: n

No VERBS support will be enabled for TensorFlow.

Do you wish to build TensorFlow with OpenCL SYCL support? [y/N]: n

No OpenCL SYCL support will be enabled for TensorFlow.

Do you wish to build TensorFlow with CUDA support? [y/N]: y

CUDA support will be enabled for TensorFlow.

Please specify the CUDA SDK version you want to use, e.g. 7.0. [Leave empty to default to CUDA 9.0]: 9.2

Please specify the location where CUDA 8.0 toolkit is installed. Refer to README.md for more details. [Default is /usr/local/cuda]: /usr/local/cuda-9.2

Please specify the cuDNN version you want to use. [Leave empty to default to cuDNN 7.0]: 7.1.4

Please specify the location where cuDNN 7 library is installed. Refer to README.md for more details. [Default is /usr/local/cuda-9.2]:/usr/local/cuda

Do you wish to build TensorFlow with TensorRT support? [y/N]: n

No TensorRT support will be enabled for TensorFlow.

Please specify the NCCL version you want to use. If NCCL 2.2 is not installed, then you can use version 1.3 that can be fetched automatically but it may have worse performance with multiple GPUs. [Default is 2.2]: 1.3

Please specify a list of comma-separated Cuda compute capabilities you want to build with.

You can find the compute capability of your device at: https://developer.nvidia.com/cuda-gpus.

Please note that each additional compute capability significantly increases your build time and binary size. [Default is: 5.0]:

Do you want to use clang as CUDA compiler? [y/N]: N

nvcc will be used as CUDA compiler.

Please specify which gcc should be used by nvcc as the host compiler. [Default is /usr/bin/gcc]:

Do you wish to build TensorFlow with MPI support? [y/N]: N

No MPI support will be enabled for TensorFlow.

Please specify optimization flags to use during compilation when bazel option "--config=opt" is specified [Default is -march=native]:

Add "--config=mkl" to your bazel command to build with MKL support.

Please note that MKL on MacOS or windows is still not supported.

If you would like to use a local MKL instead of downloading, please set the environment variable "TF_MKL_ROOT" every time before build.

Would you like to interactively configure ./WORKSPACE for Android builds? [y/N]: N

Not configuring the WORKSPACE for Android builds.

Configuration finished

Because I am configuring a Python 3 build of TensorFlow, all the Python paths point to Python 3.
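The interactive prompts can, as far as I know, be answered in advance through environment variables that configure reads. The sketch below only assembles such a set of answers from the values used in the transcript above. The helper `configure_env` is hypothetical, and the variable names are my assumption of what configure.py in the 1.x branches looks for, so check them against configure.py in your checkout before relying on them.

```python
def configure_env(python_bin, cuda_version, cuda_path,
                  cudnn_version, cudnn_path, capabilities):
    """Collect answers to the CUDA-related configure prompts.

    The variable names are assumptions to verify against configure.py.
    """
    return {
        "PYTHON_BIN_PATH": python_bin,
        "TF_NEED_CUDA": "1",
        "TF_CUDA_VERSION": cuda_version,
        "CUDA_TOOLKIT_PATH": cuda_path,
        "TF_CUDNN_VERSION": cudnn_version,
        "CUDNN_INSTALL_PATH": cudnn_path,
        "TF_CUDA_COMPUTE_CAPABILITIES": ",".join(capabilities),
    }


# Values taken from the configure transcript above.
env = configure_env(
    python_bin="/usr/bin/python3.5",
    cuda_version="9.2",
    cuda_path="/usr/local/cuda-9.2",
    cudnn_version="7.1.4",
    cudnn_path="/usr/local/cuda",
    capabilities=["5.0"],
)
for name, value in sorted(env.items()):
    # Print lines that could be pasted into a shell before ./configure.
    print("export {}={}".format(name, value))
```

Keeping the answers in one place like this makes a rebuild repeatable, which helps when a build has to be retried several times.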

4. Build and install

bazel build -c opt --config=cuda //tensorflow/tools/pip_package:build_pip_package

bazel-bin/tensorflow/tools/pip_package/build_pip_package ~/tensorflow_pkg

This takes quite a long time. When the build finishes, install TensorFlow with pip3:

sudo pip3 install ~/tensorflow_pkg/tensorflow-1.12.3-cp35-cp35m-linux_x86_64.whl

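Note that because the build above targets Python 3, the system default Python must be switched to Python 3; otherwise the installation fails with an error saying the TensorFlow wheel is not supported on the current platform.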
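The "not supported on this platform" error usually comes down to the tags in the wheel file name not matching the interpreter doing the install. A small sketch of that comparison follows. Both helper names are hypothetical, and the interpreter tag assumes CPython.

```python
import sys


def wheel_python_tag(wheel_filename):
    """Return the Python tag (for example 'cp35') from a wheel file name.

    Wheel names end in pythontag-abitag-platformtag.whl, so the Python
    tag is the third dash-separated field counted from the end.
    """
    stem = wheel_filename
    if stem.endswith(".whl"):
        stem = stem[:-len(".whl")]
    return stem.split("-")[-3]


def interpreter_tag(version_info=sys.version_info):
    """Return the CPython tag for a version, e.g. (3, 5) -> 'cp35'."""
    return "cp{}{}".format(version_info[0], version_info[1])


wheel = "tensorflow-1.12.3-cp35-cp35m-linux_x86_64.whl"
print(wheel_python_tag(wheel))   # cp35
print(interpreter_tag((3, 5)))   # cp35
```

If `wheel_python_tag(wheel)` differs from `interpreter_tag()` for the Python that pip belongs to, pip will refuse the wheel.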

5. Test

Run python3 in a console to enter the interpreter and type the following code:

import tensorflow as tf

print(tf.__version__)

print(tf.__path__)

a = tf.constant(1)

b = tf.constant(2)

sess = tf.Session()

print(sess.run(a+b))

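If the version, the install path, and the sum 3 are printed, TensorFlow was installed successfully

6. Build the TensorFlow C++ library

# Build the TensorFlow C++ library

bazel build --config=opt --config=cuda //tensorflow:libtensorflow_cc.so # with a GPU

bazel build --config=opt //tensorflow:libtensorflow_cc.so # without a GPU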

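The build takes a long time. When it finishes, five directories are produced: bazel-bin, bazel-genfiles, bazel-out, bazel-tensorflow, and bazel-testlogs. The two shared libraries we built, libtensorflow_cc.so and libtensorflow_framework.so, are in bazel-bin/tensorflow

To build the C library or the native Lite library instead, use the following commands:

# Build the C library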

bazel build --config=opt --config=cuda //tensorflow:libtensorflow.so

# Build the native Lite library

bazel build --config=opt //tensorflow/lite:libtensorflowlite.so

7. Download and build the dependencies

Build the dependency packages:

cd tensorflow/contrib/makefile

./build_all_linux.sh

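The script first calls download_dependencies.sh to download the dependencies

8. Set up the environment

Copy the required .h headers and the built .so shared libraries to the target locations: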

sudo mkdir /usr/local/include/tf

sudo cp -r bazel-genfiles /usr/local/include/tf/

sudo cp -r tensorflow /usr/local/include/tf/

sudo cp -r third_party /usr/local/include/tf/

sudo cp bazel-bin/tensorflow/libtensorflow_cc.so /usr/local/lib/

sudo cp bazel-bin/tensorflow/libtensorflow_framework.so /usr/local/lib

9. Test and verify

main.cpp:

#include "tensorflow/cc/client/client_session.h"
#include "tensorflow/cc/ops/standard_ops.h"
#include "tensorflow/core/framework/tensor.h"

int main() {
  using namespace tensorflow;
  using namespace tensorflow::ops;
  Scope root = Scope::NewRootScope();
  // Matrix A = [3 2; -1 0]
  auto A = Const(root, { {3.f, 2.f}, {-1.f, 0.f}});
  // Vector b = [3 5]
  auto b = Const(root, { {3.f, 5.f}});
  // v = Ab^T
  auto v = MatMul(root.WithOpName("v"), A, b, MatMul::TransposeB(true));
  std::vector<Tensor> outputs;
  ClientSession session(root);
  // Run and fetch v
  TF_CHECK_OK(session.Run({v}, &outputs));
  // Expect outputs[0] == [19; -3]
  LOG(INFO) << outputs[0].matrix<float>();
  return 0;
}

CMakeLists.txt:

cmake_minimum_required (VERSION 3.9)

project (tf_test)

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -g -std=c++11 -W")

set(TENSORFLOW_LIBS

/usr/local/lib/libtensorflow_cc.so

/usr/local/lib/libtensorflow_framework.so

)

include_directories(

/usr/local/include/tf

/usr/local/include/tf/bazel-genfiles

/usr/local/include/tf/tensorflow

/usr/local/include/tf/third_party

/usr/local/include/eigen3

)

add_executable(tf_test main.cpp)

target_link_libraries(tf_test

${TENSORFLOW_LIBS}

)

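Result: the program should log the 2x1 matrix with entries 19 and -3, as the comment in main.cpp expects.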
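To see where the expected values come from, the product v = A b^T from main.cpp can be worked out by hand: 3*3 + 2*5 = 19 and -1*3 + 0*5 = -3. The short sketch below repeats that arithmetic in plain Python. The function `matmul_transpose_b` is only an illustration and has nothing to do with the TensorFlow API.

```python
def matmul_transpose_b(a, b):
    """Compute a * b^T for matrices given as lists of rows.

    Entry (i, j) is the dot product of row i of a with row j of b.
    """
    return [[sum(x * y for x, y in zip(row_a, row_b)) for row_b in b]
            for row_a in a]


A = [[3.0, 2.0], [-1.0, 0.0]]   # Matrix A = [3 2; -1 0]
b = [[3.0, 5.0]]                # Row vector b = [3 5]
print(matmul_transpose_b(A, b))  # [[19.0], [-3.0]]
```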

Build Problems

1. ICU-related error

tensorflow/core/kernel/BUILD:6589:1 no such package '@icu//': java.io.IOException

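The source build downloads release-62-1.tar.gz, which can fail because of network problems. I suggest downloading the file manually and serving it from a local HTTP server, as follows:

# 1. Install

sudo apt-get install httpd # this lists several candidate packages; pick one of them to install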

sudo apt-get install apache2

sudo apt-get install apache2-dev

# 2. Check that the server is running

sudo systemctl status apache2

Download https://codeload.github.com/unicode-org/icu/tar.gz/release-62-1 and put the file under /var/www/html/ as release-62-1.tar.gz, so that it is served at

"http://localhost/release-62-1.tar.gz"

Then edit tensorflow/third_party/icu/workspace.bzl and add the local URL as the first entry in urls:

"""Loads a lightweight subset of the ICU library for Unicode processing."""

load("//third_party:repo.bzl", "third_party_http_archive")

def repo():
    third_party_http_archive(
        name = "icu",
        strip_prefix = "icu-release-62-1",
        sha256 = "e15ffd84606323cbad5515bf9ecdf8061cc3bf80fb883b9e6aa162e485aa9761",
        urls = [
            "http://localhost/release-62-1.tar.gz",
            "https://mirror.bazel.build/github.com/unicode-org/icu/archive/release-62-1.tar.gz",
            "https://github.com/unicode-org/icu/archive/release-62-1.tar.gz",
        ],
        build_file = "//third_party/icu:BUILD.bazel",
    )
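Bazel compares the downloaded archive against the sha256 value in this rule, so it is worth confirming that the manually downloaded file has the same digest before serving it locally. A minimal sketch using the standard hashlib module follows. The helper names are hypothetical, and the demo hashes a throwaway temporary file rather than the real archive.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected(path, expected_hex):
    """Return True if the file's digest equals the expected hex string."""
    return sha256_of_file(path) == expected_hex.lower()


# Demo on a temporary file so the example runs anywhere.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"hello")
print(matches_expected(tmp.name, hashlib.sha256(b"hello").hexdigest()))  # True
os.remove(tmp.name)
```

For the real check, pass the path of the file under /var/www/html/ together with the sha256 string from workspace.bzl.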

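2. Archive format error

After the C++ library is built, some TensorFlow dependencies have to be installed before it can be used. Running download_dependencies.sh, as shown below, fails with a complaint that the archive is not in gzip format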

chenwei@chenwei-HP-Pavilion-Gaming-Notebook:~/tensorflow$ ./tensorflow/contrib/makefile/download_dependencies.sh

downloading https://bitbucket.org/eigen/eigen/get/fd6845384b86.tar.gz

gzip: stdin: not in gzip format

tar: Child returned status 1

tar: Error is not recoverable: exiting now

There are usually two fixes: extract without the z flag (tar -xvf ****), or rename the downloaded file.
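Before trying either fix, it can help to confirm whether the downloaded file is a gzip stream at all; a failed download may, for instance, have saved an error page under the .tar.gz name. Gzip data always starts with the two bytes 0x1f 0x8b, which the sketch below checks. The helper name `looks_like_gzip` is hypothetical, and the demo files are created on the fly.

```python
import gzip
import os
import tempfile

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def looks_like_gzip(path):
    """Return True if the file starts with the gzip magic bytes."""
    with open(path, "rb") as handle:
        return handle.read(2) == GZIP_MAGIC


with tempfile.TemporaryDirectory() as workdir:
    good = os.path.join(workdir, "good.tar.gz")
    bad = os.path.join(workdir, "bad.tar.gz")
    with open(good, "wb") as handle:
        handle.write(gzip.compress(b"payload"))
    with open(bad, "wb") as handle:
        handle.write(b"<html>not an archive</html>")
    print(looks_like_gzip(good), looks_like_gzip(bad))  # True False
```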

Solution:

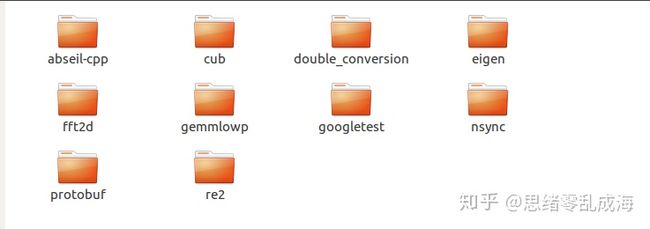

1. Download and extract each archive separately. The packages are:

eigen,gemmlowp,googletest,nsync,protobuf,re2,fft2d,double_conversion,absl,cub

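The download URLs can be looked up in tensorflow/tensorflow/workspace.bzl; download exactly the versions listed in that file.

2. Extract the downloaded archives, rename the directories as download_dependencies.sh expects, and put them all in the following directory: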

tensorflow/tensorflow/contrib/makefile/downloads

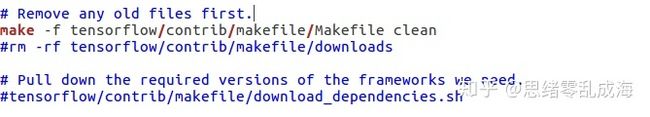

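3. Edit build_all_linux.sh and comment out lines 30 and 33; otherwise the script deletes the files that were just downloaded.

4. Build again with ./build_all_linux.sh. When the build finishes, a gen directory is created.

3. nvcc fatal

The source build fails with: Unsupported gpu architecture 'compute_75'. The compute capability was left at its default of 7.5 during configuration, and my guess is that CUDA 9.2 does not support compute_75; support for it was only added in CUDA 10.0.

Solution:

1. When running ./configure, change the compute capability to 6.1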

2. Build again; the problem is resolved

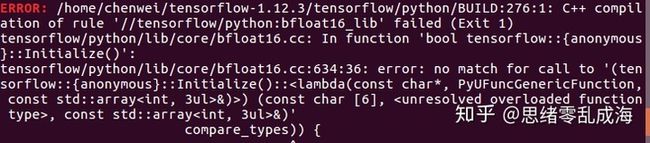
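This kind of mismatch can be caught before starting a long build by comparing the configured compute capabilities with what the installed toolkit accepts. The sketch below is only illustrative: the table is deliberately small, holds just the three capabilities relevant here (6.1 from CUDA 8.0, 7.0 from CUDA 9.0, 7.5 from CUDA 10.0), and should be extended from NVIDIA's documentation. All names in it are hypothetical.

```python
# Minimum CUDA toolkit version whose nvcc accepts each compute capability.
# Partial table for illustration; capabilities not listed are not judged.
MIN_CUDA_FOR_CAPABILITY = {
    "6.1": (8, 0),
    "7.0": (9, 0),
    "7.5": (10, 0),
}


def parse_version(text):
    """Turn a version string such as '9.2' into a tuple such as (9, 2)."""
    return tuple(int(part) for part in text.split("."))


def unsupported_capabilities(cuda_version, capabilities):
    """Return the listed capabilities that need a newer CUDA toolkit."""
    cuda = parse_version(cuda_version)
    return [cap for cap in capabilities
            if cap in MIN_CUDA_FOR_CAPABILITY
            and cuda < MIN_CUDA_FOR_CAPABILITY[cap]]


print(unsupported_capabilities("9.2", ["6.1", "7.5"]))  # ['7.5']
```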

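4. bfloat16_lib failed

Some people online say the numpy version must not be greater than 1.19.0. I tested this by uninstalling with sudo pip3 uninstall numpy and then installing a version below 1.19 with sudo pip3 install 'numpy<1.19.0', but the problem remained.

Solution:

Since the build did not hit this error on my own laptop, I compared the two setups and suspected the Python version: this machine had Python 3.6. After uninstalling it and using Ubuntu's stock Python 3.5, the build passed.

5. Missing dependencies

This happens because many dependencies had been installed for Python 3.6. After uninstalling it and switching to Python 3.5 to build TensorFlow, many of them have to be reinstalled.

Solution:

1. Install keras_applications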

sudo pip3 install keras_applications

2. Install keras_preprocessing

sudo pip3 install keras_preprocessing

3. Install again; the problem is resolved

6. Error: RuntimeError: Python version >= 3.6 required

Solution:

1. Upgrade setuptools

sudo pip3 install --upgrade setuptools -i https://mirrors.aliyun.com/pypi/simple/

2. Upgrade pip

sudo python3 -m pip install --upgrade pip -i https://mirrors.aliyun.com/pypi/simple/

3. Install again; the problem is resolved

7. ./autogen.sh: 4: ./autogen.sh: autoreconf: not found

autoreconf cannot be found during the build

Solution:

1. Install the required packages

sudo apt-get install autoconf

sudo apt-get install automake

sudo apt-get install libtool

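2. Install again; the problem is resolved

8. Eigen headers not found when building a C++ project against TensorFlow

Solution:

1. cd to tensorflow/contrib/makefile/downloads/eigen and run the following commands: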

mkdir build

cd build

cmake ..

make

sudo make install

An eigen3 directory then appears under /usr/local/include.

2. Rebuild the project.