PyTorch Deep Learning (9): Training a Convolutional Neural Network on the MNIST Dataset

Convolutional Neural Network (MNIST Dataset)

- I. Code Implementation (CPU Version)

- II. Code Implementation (GPU Version)

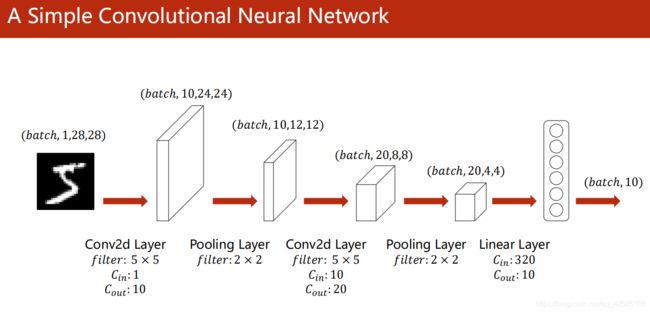

The following example illustrates this:

I. Code Implementation (CPU Version)

import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt  # needed for the accuracy plot at the end

# 1. Prepare the dataset
batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor first
    transforms.Normalize((0.1307,), (0.3081,))  # 0.1307 is the mean of the MNIST dataset, 0.3081 its standard deviation
])

train_dataset = datasets.MNIST(root='./dataset/mnist/',
                               train=True,
                               download=True,
                               transform=transform)
train_loader = DataLoader(train_dataset,
                          shuffle=True,
                          batch_size=batch_size)

test_dataset = datasets.MNIST(root='./dataset/mnist/',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(test_dataset,
                         shuffle=False,
                         batch_size=batch_size)
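To make the normalization step concrete, here is a minimal sketch of the arithmetic that `ToTensor()` followed by `Normalize((0.1307,), (0.3081,))` applies to a single pixel. The helper `normalize_pixel` is a hypothetical name introduced only for illustration; it is not part of torchvision.

```python
MNIST_MEAN = 0.1307  # dataset mean quoted above
MNIST_STD = 0.3081   # dataset standard deviation quoted above


def normalize_pixel(raw, mean=MNIST_MEAN, std=MNIST_STD):
    """Hypothetical helper: map one 8-bit pixel value to its normalized value."""
    scaled = raw / 255.0          # ToTensor() rescales 0..255 to 0.0..1.0
    return (scaled - mean) / std  # Normalize() subtracts the mean, divides by the std


black = normalize_pixel(0)    # darkest possible pixel
white = normalize_pixel(255)  # brightest possible pixel
print(f"black -> {black:.4f}, white -> {white:.4f}")
```

Since most MNIST pixels are background, the normalized inputs end up roughly centered around zero, which tends to make training more stable.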

# 2. Build the convolutional neural network model

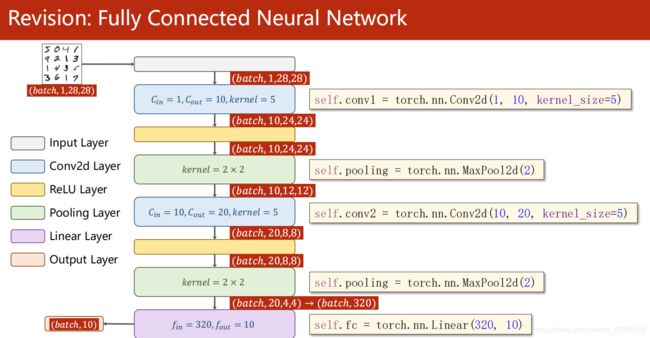

class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(in_channels=1, out_channels=10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(in_channels=10, out_channels=20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(kernel_size=2, stride=2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # Input x has shape (n, 1, 28, 28)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))  # -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv2(x)))  # -> (n, 20, 4, 4)
        x = x.view(batch_size, -1)  # flatten to (n, 320) for the linear layer
        x = self.fc(x)  # raw class scores, shape (n, 10)
        return x

model = Net()
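The input size of 320 for the fully connected layer follows from the layer shapes. The sketch below walks through that arithmetic with the standard output-size formula for convolution and pooling without dilation; `conv_out` and `pool_out` are hypothetical helper names used only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Spatial output size of a max-pooling layer (no padding)."""
    return (size - kernel) // stride + 1


size = 28                        # MNIST images are 28 x 28
size = conv_out(size, kernel=5)  # conv1:   28 -> 24
size = pool_out(size)            # pooling: 24 -> 12
size = conv_out(size, kernel=5)  # conv2:   12 -> 8
size = pool_out(size)            # pooling:  8 -> 4

out_channels = 20                # number of channels produced by conv2
flat_features = out_channels * size * size
print(flat_features)
```

If the kernel size, padding, or number of channels changes, the first argument of `torch.nn.Linear` has to be recomputed the same way.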

# 3. Choose the loss function and the optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.5)  # momentum carries over part of the previous update to smooth and speed up training
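As a rough illustration of what `momentum=0.5` does, the sketch below applies the momentum update rule to a single scalar parameter. It assumes the default settings of `torch.optim.SGD` apart from `lr` and `momentum` (no dampening, no Nesterov, no weight decay); `sgd_momentum_step` is a hypothetical helper, not a PyTorch function.

```python
def sgd_momentum_step(param, grad, buf, lr=0.01, momentum=0.5):
    """One SGD-with-momentum update for a scalar parameter (illustrative only)."""
    buf = momentum * buf + grad  # velocity: decayed past gradients plus the new gradient
    param = param - lr * buf     # step along the accumulated velocity
    return param, buf


# Minimize f(w) = w**2, whose gradient is 2 * w, starting from w = 1.0.
w, velocity = 1.0, 0.0
history = [w]
for _ in range(2):
    w, velocity = sgd_momentum_step(w, 2 * w, velocity)
    history.append(w)

print(history)
```

The second step is larger than a plain SGD step would be because half of the first step's velocity is carried over.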

# 4. Training and testing
acc_list = []
epoch_list = []

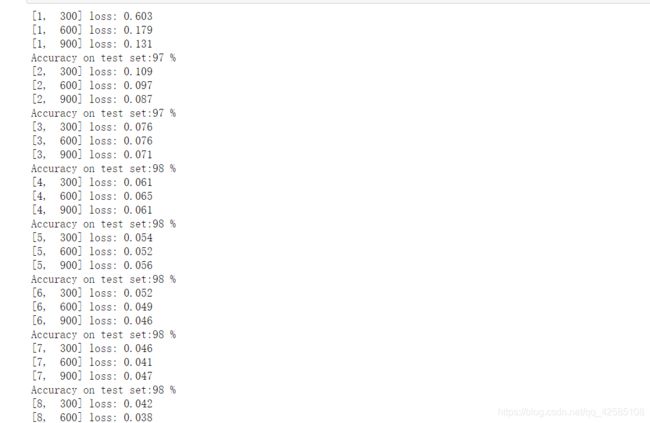

def train(epoch):
    epoch_list.append(epoch)
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        # forward
        outputs = model(inputs)
        loss = criterion(outputs, target)
        # backward
        loss.backward()
        # update
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:  # print the average loss once every 300 batches
            print('[%d,%5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

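'''
In classification problems, torch.max() is usually applied to the model's output to obtain the index of the predicted class. The inputs and outputs of torch.max() are described below.

1. torch.max(input, dim)

output = torch.max(input, dim)

Input: input is a tensor. Here it is the raw score tensor from the last linear layer; softmax outputs work the same way, because softmax does not change the ordering of the scores. dim is the dimension to reduce: 0 gives the maximum of each column, 1 gives the maximum of each row.

Output: two tensors are returned. The first holds the maximum value of each row (for softmax outputs these are the highest class probabilities, which are at most 1 but generally not equal to 1); the second holds the index of each row's maximum, i.e. the predicted class.
'''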
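The behavior described above can be checked on a tiny hand-made score tensor. This is a minimal sketch with made-up scores for two samples and three classes; it also shows that taking the softmax first leaves the predicted indices unchanged.

```python
import torch
import torch.nn.functional as F

# Made-up raw scores: 2 samples (rows), 3 classes (columns).
logits = torch.tensor([[1.0, 3.0, 2.0],
                       [0.5, 0.1, 0.2]])

# dim=1 reduces across the columns, giving one result per row.
values, indices = torch.max(logits, dim=1)
print(values)   # largest score in each row
print(indices)  # column index of that score, i.e. the predicted class

# Softmax keeps the ordering within each row, so the argmax is the same.
probs = F.softmax(logits, dim=1)
same_prediction = torch.equal(probs.argmax(dim=1), indices)
print(same_prediction)
```

This is why the test code can call `torch.max` directly on the model output without applying softmax first.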

def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed during evaluation
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            _, predicted = torch.max(outputs, dim=1)  # index of the largest score in each row
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print("Accuracy on test set:%d %%" % (100 * correct / total))
    acc_list.append(100 * correct / total)
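The accuracy bookkeeping in `test()` can be traced on a single hand-made batch. The sketch below uses invented predictions and labels purely for illustration.

```python
import torch

# Invented values for one batch of four samples.
predicted = torch.tensor([1, 0, 2, 2])
labels = torch.tensor([1, 0, 1, 2])

matches = predicted == labels    # element-wise comparison, a boolean tensor
correct = matches.sum().item()   # number of True entries as a Python int
total = labels.size(0)           # number of samples in the batch
accuracy = 100 * correct / total

print("Accuracy: %d %%" % accuracy)
```

Note that the `%d` format drops the fractional part of the percentage; a format such as `%.2f` would keep it.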

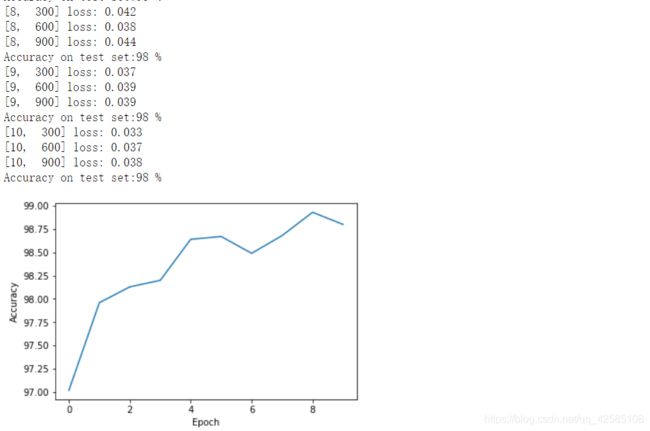

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()

    plt.plot(epoch_list, acc_list)
    plt.xlabel("Epoch")
    plt.ylabel("Accuracy")
    plt.show()

II. Code Implementation (GPU Version)

# 1. Prepare the dataset
import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim
import matplotlib.pyplot as plt  # needed for the accuracy plot at the end

batch_size = 64

transform = transforms.Compose([
    transforms.ToTensor(),  # convert the image to a tensor first
    transforms.Normalize((0.1307,), (0.3081,))  # 0.1307 is the mean of the MNIST dataset, 0.3081 its standard deviation
])

train_dataset = datasets.MNIST(root='./dataset/mnist/',
                               train=True,
                               download=True,
                               transform=transform)
train_loader = DataLoader(train_dataset,
                          shuffle=True,
                          batch_size=batch_size)

test_dataset = datasets.MNIST(root='./dataset/mnist/',
                              train=False,
                              download=True,
                              transform=transform)
test_loader = DataLoader(test_dataset,
                         shuffle=False,
                         batch_size=batch_size)

# 2. Build the convolutional network model
class Net(torch.nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = torch.nn.Conv2d(in_channels=1, out_channels=10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(in_channels=10, out_channels=20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(kernel_size=2, stride=2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # Input x has shape (n, 1, 28, 28)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))  # -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv2(x)))  # -> (n, 20, 4, 4)
        x = x.view(batch_size, -1)  # flatten to (n, 320) for the linear layer
        x = self.fc(x)  # raw class scores, shape (n, 10)
        return x

model = Net()

# Use the GPU if one is available, otherwise fall back to the CPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
model.to(device)
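The only changes relative to the CPU version are the device selection and the `.to(device)` calls. Here is a minimal sketch of that pattern on a tiny stand-in model (a single `torch.nn.Linear`, chosen only to keep the example short); it runs on either a GPU or a CPU machine.

```python
import torch

# Pick the first CUDA device when available, otherwise the CPU.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Stand-in model for illustration; Module.to() moves its parameters in place.
tiny_model = torch.nn.Linear(3, 2)
tiny_model.to(device)

# Tensor.to() returns a new tensor, so the result must be assigned.
x = torch.randn(4, 3)
x = x.to(device)

out = tiny_model(x)  # works because model and input share a device
param_device = next(tiny_model.parameters()).device
print(param_device, out.device)
```

Forgetting to move either the model or a batch usually produces a runtime error about tensors being on different devices, which is why both `train()` and `test()` move every batch.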

# 3. Construct the loss function and the optimizer
criterion = torch.nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# 4. Training and testing
acc_list = []
epoch_list = []

def train(epoch):
    epoch_list.append(epoch)
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)  # move the batch to the GPU
        optimizer.zero_grad()  # reset the accumulated gradients

        # forward
        outputs = model(inputs)  # predictions
        loss = criterion(outputs, target)  # loss value
        # backward
        loss.backward()
        # update
        optimizer.step()

        running_loss += loss.item()  # .item() returns a Python float, so no computation graph is kept
        if batch_idx % 300 == 299:  # print the average loss once every 300 batches
            print('[%d,%5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed during evaluation
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)  # move the batch to the GPU
            outputs = model(images)
            _, predicted = torch.max(outputs, dim=1)  # index of the largest score in each row
            total += labels.size(0)  # number of samples tested so far
            correct += (predicted == labels).sum().item()  # number of correct predictions
    print('Accuracy on test set:%d %%' % (100 * correct / total))  # print the accuracy
    acc_list.append(100 * correct / total)

if __name__ == '__main__':
    for epoch in range(10):
        train(epoch)
        test()

    plt.plot(epoch_list, acc_list)
    plt.xlabel("Epoch")
    plt.ylabel("Accuracy")
    plt.show()