Adaptive Semantic Segmentation with a Strategic Curriculum of Proxy Labels

Contents

- Adaptive Semantic Segmentation with a Strategic Curriculum of Proxy Labels

- Abstract

- Introduction

- Related Work

- Method

- Strategic Curriculum

- Experiments

- Conclusion

Abstract

Training deep networks for semantic segmentation requires annotating large amounts of data, which can be time-consuming and expensive. Unfortunately, these trained networks still generalize poorly when tested on domains that differ from the training data. In this paper, we show that by carefully presenting a mixture of labeled source-domain data and proxy-labeled target-domain data to a network, we can achieve state-of-the-art unsupervised domain adaptation results. With our design, the network progressively learns features specific to the target domain while using annotation from the source domain only. We generate proxy labels for the target domain from the network's own predictions. Our architecture then allows selective mining of easy samples from this set of proxy labels, and of hard samples from the annotated source domain. We run a series of experiments on the GTA5, Cityscapes and BDD100k datasets, covering synthetic-to-real and geographic domain adaptation, and show the advantages of our method over baselines and existing approaches.

Introduction

Dataset bias [37] is a well-known drawback of supervised approaches to visual recognition tasks. In general, the success of supervised learning models, whether traditional or deep, is restricted to data from the domain they were trained on. Even small shifts between the training and test distributions significantly increase their error rates [39]. For deep neural networks, the common remedy is fairly straightforward: pretrained deep models perform well on new domains once they are fine-tuned with enough data from the new distribution. However, fine-tuning runs into the bottleneck of data annotation, which for many modern computer vision problems is far more time-consuming and expensive than data collection [1].

Unsupervised domain adaptation aims to overcome this dataset bias using only unlabeled data collected from the new target domain. Recent work in this area addresses domain shift by aligning the features the network extracts from the source and target domains, minimizing some distance between the source and target feature distributions, such as correlation distance [34] or maximum mean discrepancy [25]. The most promising techniques in this broad class of feature alignment methods, referred to as adversarial methods, minimize the accuracy of a domain discriminator network that classifies network representations by the domain they come from [8, 38, 39]. However, implementing these adversarial approaches means dealing with an objective function that is difficult to optimize.

Figure 1: (Top row) Validation image from the Cityscapes dataset and its ground-truth segmentation mask. (Left column) Segmentations produced after 1, 2, and 4 epochs of self-training with GTA5 labels, where the model's mistakes are gradually amplified. (Right column) Predictions at the same intervals using our strategic curriculum with target easy mining and source hard mining. The network progressively adapts to the new domain.

In this study, we explore an orthogonal idea that people routinely apply when teaching humans (or animals) a new task: choosing an effective order in which to present training examples to the learner [18]. In machine learning systems, this has been shown to have the potential to greatly speed up learning [3]. In a domain adaptation setting, the network struggles to perform well on the target domain because no annotated target data is available for supervised training. We progressively learn target-domain-specific features by ordering the training data used to update the network's parameters.

Our system builds on a prominent class of semi-supervised learning algorithms known as self-training [31]. In self-training, the partially trained network assigns proxy labels to unlabeled data, and these labeled examples are added to the training set. We show that applying a strategic curriculum while training on this augmented dataset helps semantic segmentation networks overcome domain shift, without explicitly aligning features in any way. Our strategy mines easy samples from the target domain and hard samples from the source domain. At the same time, it gradually shifts the overall distribution of data presented to the network towards the target domain over the course of training. The method is far simpler to optimize than adversarial techniques and also performs better.

Our contributions are as follows. First, we implement a simple self-training approach that mixes batches from the source and target domains and applies a gradient filtering strategy. Second, we design a lightweight architecture and a suitable regularization scheme that evaluate the difficulty of training samples, based on prediction agreement within an ensemble of network branches. Finally, we present a strategic training curriculum that makes our self-training approach better suited to unsupervised domain adaptation. We evaluate our method on several challenging domain adaptation benchmarks for urban street-scene semantic segmentation. We analyze the results in detail and compare against various baselines and feature alignment methods. We also visualize how our algorithm works.

Related Work

Unsupervised Domain Adaptation. In several situations, collecting data in a target domain is extremely cheap but labeling it is prohibitively expensive. Semantic segmentation in new domains is a prime example: collecting raw data (images or videos) is fairly cheap, whereas annotating every pixel of that data is costly. Without annotation, fine-tuning is not possible, so the domain shift must instead be handled through unsupervised domain adaptation.

Adversarial training has emerged as a successful approach for this [8, 39, 4]. The core idea is to train a domain discriminator to distinguish between representations from the source and target domains. While the discriminator tries to minimize its domain classification error, the main classification network tries to maximize this error term, minimizing the feature discrepancy between both domains. This is closely related to the way the discrepancy between the distribution of the training data and synthesized data is minimized in Generative Adversarial Networks (GANs) [10].
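The minimax game described above can be shown with a minimal NumPy sketch. It assumes the discriminator outputs the probability that a feature came from the source domain, and it uses binary cross-entropy as the domain classification error. The function names are hypothetical. The sketch illustrates the generic objective only, not the exact losses of [8, 39, 4].

```python
import numpy as np

def bce(p, target, eps=1e-12):
    """Binary cross-entropy of predicted probabilities p against 0/1 targets."""
    p = np.clip(p, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))

def adversarial_losses(d_source, d_target):
    """Losses of the adversarial domain-alignment game.

    d_source, d_target: discriminator outputs P(domain = source) for features of
    source and target samples. The discriminator minimizes its domain
    classification error; the feature extractor maximizes that same error,
    which is written here as minimizing its negation.
    """
    disc_loss = 0.5 * (bce(d_source, np.ones_like(d_source))
                       + bce(d_target, np.zeros_like(d_target)))
    feat_loss = -disc_loss
    return disc_loss, feat_loss
```

A discriminator that cannot tell the domains apart (outputs of 0.5 everywhere) has a loss of log 2. This is the point the feature extractor pushes towards. The sketch only evaluates the two objectives and omits the alternating optimization.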

More recently, state-of-the-art unsupervised domain adaptation methods align the inputs of the source and target domains directly, rather than their learned representations [13, 27, 21, 47, 7]. This has been made possible by significant advances in pixel-level image-to-image translation, through techniques such as cycle-consistent GANs [51] and image stylization [23, 22]. We compare against these methods in our results. Our approach is simple to implement and also orthogonal to these ideas, so the benefits of both could potentially be combined, though optimization and parameter tuning would become harder.

Self-Training. Semi-supervised learning has a rich history and has been considerably successful at using unlabeled data effectively [52]. Self-training is a prominent class of semi-supervised learning algorithms. It uses the trained model to assign proxy labels to unlabeled samples, and these samples then serve as targets during training alongside the labeled data. These targets no longer truly represent the ground truth, but they provide an additional, noisy training signal. Most practical self-training techniques make better use of this noisy annotation by ignoring data points on which the labeling model is not confident [42, 26].
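The confidence-based filtering mentioned above can be sketched for a single segmentation output as follows. This is the generic thresholding idea, not the agreement-based filtering proposed in this paper. The threshold value, the ignore index `IGNORE = -1` and the function name are assumptions made for illustration.

```python
import numpy as np

IGNORE = -1  # hypothetical ignore index for pixels excluded from the loss

def confident_proxy_labels(probs, threshold=0.9):
    """Classic self-training proxy labels with a confidence threshold.

    probs: (K, H, W) softmax output of the current model on an unlabeled image.
    Pixels whose top-class probability is below `threshold` are set to IGNORE.
    """
    labels = probs.argmax(axis=0)
    confidence = probs.max(axis=0)
    return np.where(confidence >= threshold, labels, IGNORE)
```

A confident but wrong prediction passes this filter unchanged. That is the failure mode the following paragraph describes.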

Classic self-training has shown limited success [29, 15, 40, 41]. Its main drawback is that the model cannot correct its own mistakes: if its predictions on unlabeled data are confident but wrong, the erroneous data is still incorporated into training, and the model's errors are amplified. Domain shift exacerbates this effect, since deep networks are known to produce confident but erroneous classifications under it [35]. Our work counters this by guiding the self-training process, selectively presenting samples of increasing difficulty to the network as training progresses. Fig. 1 illustrates this by showing predictions on a Cityscapes validation image at different epochs of our training experiments. Our experiments include more detailed quantitative comparisons between our method and self-training.

Our approach is closely related to tri-training, a variant of self-training that has been applied successfully to both semi-supervised learning and unsupervised domain adaptation [50, 33, 45]. However, inspired by recent literature on ensemble-based estimation of network uncertainty [20, 2, 9], we adopt a more general framework that is not restricted to networks with three branches.

Self-Paced and Curriculum Learning. The core intuition behind self-paced learning is that an algorithm should not consider all available samples at once. Instead, it should receive training data in a meaningful order that best facilitates learning [19]. The main challenge is measuring sample difficulty. In most cases there is no direct measure, because what the network finds difficult can change as it is trained. Existing work estimates difficulty with a teacher network [36, 17], or assigns difficulty weights to samples and sorts by these weights [16, 11]. Our work is not directly a form of curriculum learning, but it can be seen as a simplified version of difficulty weighting. We assign every sample to a discrete difficulty level and use these levels to move training from easier towards harder samples over time.

Method

Self-Training for Domain Adaptation

Our approach is based on self-training: we generate labels for unlabeled data in the target domain by using the network’s own predictions.

Source-Target Ratio. One intuition behind our method is that a network fully optimized on a source domain, until convergence, may learn representations that are harder to adapt to the target domain. We therefore try to start adapting features before they become domain-specific, by training the network on a mixture of input data from both domains. We present data in batches according to a source-to-target batch ratio γ. Initially, the network sees more batches from the source domain. As training progresses, the share of target-domain samples grows and reaches its maximum towards the end of training.
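The text does not say how γ changes during training, so the sketch below assumes a linear schedule. `gamma_start`, `gamma_end` and both function names are hypothetical. Each batch comes from the source domain with probability γ/(1+γ), which makes the expected source-to-target ratio γ:1.

```python
import random

def source_target_ratio(epoch, num_epochs, gamma_start=4.0, gamma_end=0.25):
    """Hypothetical linear schedule for the source-to-target batch ratio gamma.

    gamma > 1 means more source batches than target batches; gamma decreases
    linearly from gamma_start at the first epoch to gamma_end at the last one.
    """
    t = epoch / max(num_epochs - 1, 1)
    return gamma_start + t * (gamma_end - gamma_start)

def batch_domains(num_batches, gamma, rng):
    """Draw each batch's domain so the expected source:target ratio is gamma:1."""
    p_source = gamma / (1.0 + gamma)
    return ["source" if rng.random() < p_source else "target"
            for _ in range(num_batches)]

rng = random.Random(0)
num_epochs = 4
for epoch in range(num_epochs):
    gamma = source_target_ratio(epoch, num_epochs)
    domains = batch_domains(1000, gamma, rng)
    print(f"epoch {epoch}: gamma={gamma:.2f}, "
          f"source={domains.count('source')}, target={domains.count('target')}")
```

Sampling domains at random, instead of following a fixed interleaving, keeps batch presentation random. This matches the gradient filtering described next.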

Gradient Filtering. A typical curriculum-based training setup presents samples to the network in a specific order, fixed in advance by some measure of how easy or difficult each sample is.

We propose a different approach: we modify our source-target ratio based sampling so that it takes sample difficulty into account. Our strategy filters out the gradients of some hard samples early in training, and of some easier samples later on. In effect, this determines the order in which samples update the network weights, even though batches are still presented to the network by random sampling.
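Gradient filtering amounts to a per-pixel mask on the loss. Masked pixels contribute zero loss and therefore zero gradient, whatever order the batches arrive in. The NumPy sketch below assumes a pixel-wise softmax cross-entropy averaged over the kept pixels. The function names and the (K, H, W) layout are illustrative choices.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the class axis of a (K, H, W) array."""
    z = logits - logits.max(axis=0, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=0, keepdims=True))

def filtered_cross_entropy(logits, labels, keep_mask):
    """Mean cross-entropy over kept pixels, and its gradient w.r.t. the logits.

    logits: (K, H, W) floats; labels: (H, W) ints in [0, K); keep_mask: (H, W) bool.
    Pixels with keep_mask == False contribute neither loss nor gradient.
    """
    num_classes = logits.shape[0]
    log_probs = log_softmax(logits)
    onehot = np.eye(num_classes)[labels].transpose(2, 0, 1)   # (K, H, W)
    n_kept = max(int(keep_mask.sum()), 1)
    pixel_loss = -np.sum(log_probs * onehot, axis=0)           # (H, W)
    loss = float(np.sum(pixel_loss * keep_mask) / n_kept)
    # d loss / d logits = (softmax - onehot) / n_kept on kept pixels, 0 elsewhere.
    grad = (np.exp(log_probs) - onehot) * keep_mask[None] / n_kept
    return loss, grad

rng = np.random.default_rng(0)
logits = rng.normal(size=(3, 2, 2))
labels = np.array([[0, 1], [2, 0]])
keep = np.array([[True, False], [True, True]])   # pixel (0, 1) is filtered out
loss, grad = filtered_cross_entropy(logits, labels, keep)
```

The gradient is exactly zero at the filtered pixel (0, 1). The mining rules of the strategic curriculum decide which pixels to filter at each stage.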

Adaptive Segmentation Architecture

Segregating the predictions based on the uncertainty in the samples is the most essential component of our training strategy. To facilitate this, our learner is constructed in a way that produces multiple segmentation hypotheses for each input image, using a network ensemble.

Ensemble Agreement. A successful technique in recent literature approximates network uncertainty with variation ratios over model ensembles [2]. The variation ratio is the proportion of non-modal predictions (those that disagree with the mode, or majority vote) made by an ensemble of networks trained from different initial random seeds,

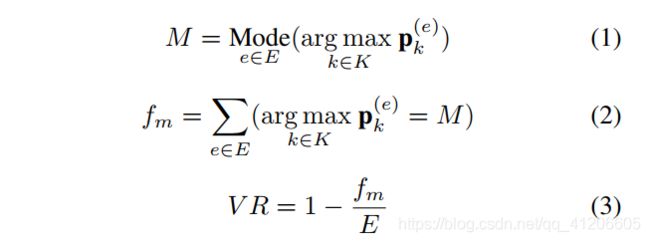

where VR denotes the variation ratio function, M is the mode of the predictions, and f_m is the frequency of the mode. In a semantic segmentation setup, p(k^e) is the normalized probability for class k that the network indexed by e in the ensemble outputs at a given pixel location. E is the set of all networks in the ensemble, and K is the set of all classes. A high variation ratio means the ensemble members disagree strongly. Such samples are likely to be harder than those on which all members agree.
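Assuming the standard definition VR = 1 - f_m/|E| (the fraction of non-modal votes), the per-pixel variation ratio of an ensemble can be computed as below. The (E, K, H, W) array layout and the function name are assumptions.

```python
import numpy as np

def variation_ratio(probs):
    """Per-pixel variation ratio VR = 1 - f_m / |E|.

    probs: (E, K, H, W) normalized class probabilities p(k^e) of each ensemble
    member e at every pixel. Returns an (H, W) array; 0 means every member
    predicts the modal class M.
    """
    num_members, num_classes = probs.shape[:2]
    votes = probs.argmax(axis=1)                               # (E, H, W) hard predictions
    # Number of members voting for each class k at each pixel.
    counts = np.stack([(votes == k).sum(axis=0) for k in range(num_classes)])
    f_m = counts.max(axis=0)                                   # frequency of the mode M
    return 1.0 - f_m / num_members
```

With |E| = 2 the function can only return 0 (the branches agree) or 0.5 (they disagree). This is the binary easy/hard measure used with two decoder branches.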

Branch Decoder Ensembles. If an ensemble of networks is optimized in parallel, variation ratios can be obtained at any iteration during optimization. However, this greatly increases the computational cost, especially for semantic segmentation networks, which have huge memory footprints. Reducing this footprint requires smaller batch sizes or input resolutions, which can substantially hurt overall segmentation performance. We propose two simplifications that greatly reduce memory requirements while keeping the ability to measure variation ratios.

First, we ensemble only part of the network architecture: the decoder that produces the final output from an encoded feature map. This yields a branched architecture with a shared encoder and multiple decoders. Most segmentation architectures concentrate the heavy processing in the encoder and keep the decoder layers relatively lightweight. Ensembling only the decoders therefore adds much less overhead in parameters, computation and memory consumption.
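The shared-encoder, multi-decoder layout can be illustrated with a toy NumPy model in which the encoder and each decoder are single 1×1 convolutions written with `np.einsum`. The class name, layer sizes and initialization are hypothetical. A real segmentation network would use a deep convolutional encoder and a few light decoder layers, so the parameter split here is not representative.

```python
import numpy as np

class BranchedSegmenter:
    """Toy model with one shared encoder and several decoder branches."""

    def __init__(self, in_ch, feat_ch, num_classes, num_branches=2, seed=0):
        rng = np.random.default_rng(seed)
        self.enc_w = rng.normal(0.0, 0.1, size=(feat_ch, in_ch))
        # Each decoder branch gets its own independent random initialization.
        self.dec_ws = [rng.normal(0.0, 0.1, size=(num_classes, feat_ch))
                       for _ in range(num_branches)]

    def encode(self, x):
        # x: (C_in, H, W) -> shared feature map (C_feat, H, W) after ReLU
        return np.maximum(np.einsum("fc,chw->fhw", self.enc_w, x), 0.0)

    def forward(self, x):
        feats = self.encode(x)  # computed once and reused by every branch
        return [np.einsum("kf,fhw->khw", w, feats) for w in self.dec_ws]
```

The encoder runs once per image and every branch reuses its features, which is where the savings come from. Only the decoder weights are duplicated.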

Further, we use only two decoder branches in our experiments. The number of decoders sets how many difficulty levels we can sort our data into. With two branches, the variation ratio becomes a binary measure of agreement or disagreement, which sorts pixels into easy and hard ones. We adopt this simplification because similar binary measures of difficulty have worked fairly well in tri-training approaches to semi-supervised learning [50].

Decoder Similarity Penalty. If the two decoders learn identical weights, their agreement on a prediction no longer indicates how difficult the sample is. In ensemble-based uncertainty methods, random initialization alone has been shown to make ensemble members diverse enough for reliable uncertainty estimates [20]. Our case is different: we ensemble far fewer parameters, only two branches of the same network, so we explicitly enforce diversity between the branches that make the segmentation predictions. This helps prevent a collapse back into regular self-training.

To this end, we add a regularization penalty to our training objective, based on the cosine similarity between the filters of the two decoders. To obtain the filter weight vectors w1 and w2, we flatten the weights of each decoder and concatenate them into a single vector per decoder.

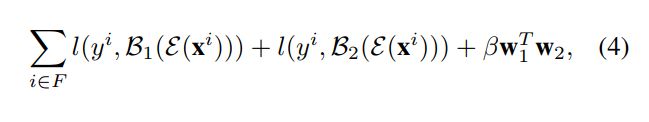

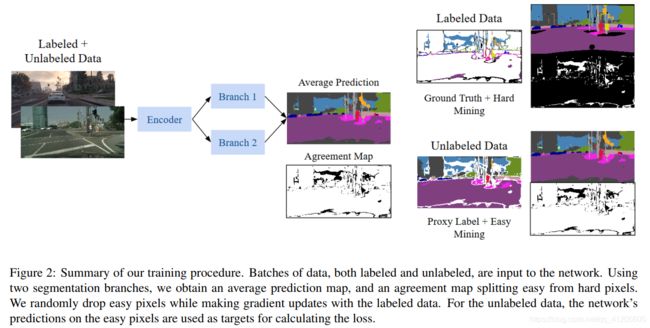
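The decoder similarity penalty can be sketched as follows, assuming it is the plain cosine similarity between w1 and w2, scaled by β in the loss. The paper's own expression may differ in detail. Each decoder's weights are represented as a hypothetical list of NumPy arrays.

```python
import numpy as np

def flatten_decoder(weights):
    """Flatten every weight tensor of one decoder and concatenate into one vector."""
    return np.concatenate([np.ravel(w) for w in weights])

def decoder_similarity(dec1_weights, dec2_weights, eps=1e-12):
    """Cosine similarity between the flattened decoder weight vectors w1 and w2."""
    w1 = flatten_decoder(dec1_weights)
    w2 = flatten_decoder(dec2_weights)
    return float(w1 @ w2 / (np.linalg.norm(w1) * np.linalg.norm(w2) + eps))
```

The penalty would be `beta * decoder_similarity(dec1, dec2)`, added to the segmentation losses. Identical decoders give a similarity of 1, so minimizing the penalty pushes the branches apart.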

Summary. Fig. 2 summarizes the network architecture and training procedure. During training, the losses at the two segmentation branches are computed independently and added together with the similarity penalty:

$$\mathcal{L} = \sum_{(x_i, y_i) \in F} \Big[ l\big(B_1(\mathrm{Enc}(x_i)), y_i\big) + l\big(B_2(\mathrm{Enc}(x_i)), y_i\big) \Big] + \beta \, \mathrm{Sim}(w_1, w_2)$$

where {(x_i, y_i)}_{i=1}^{N} is the training data and F is the subset of it retained after gradient filtering. l is the cross-entropy classification loss, and Enc, B_1 and B_2 are the forward-pass functions of the encoder, branch 1 and branch 2, respectively. Sim(w_1, w_2) is the cosine similarity penalty between the decoder weight vectors, and the tunable hyperparameter β weights this regularization cost.
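The loss above can be evaluated for a single image with the sketch below. It assumes that F is given as a boolean pixel mask, that l is averaged over the pixels in F rather than summed, and that Sim is the cosine similarity. All function names are illustrative.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the class axis of a (K, H, W) array."""
    z = logits - logits.max(axis=0, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=0, keepdims=True))

def masked_ce(logits, labels, keep):
    """Cross-entropy l averaged over the pixels in F (keep == True)."""
    safe = np.where(keep, labels, 0)  # filtered pixels may carry an ignore label
    logp = np.take_along_axis(log_softmax(logits), safe[None], axis=0)[0]
    return float(-np.sum(logp * keep) / max(int(keep.sum()), 1))

def cosine(w1, w2, eps=1e-12):
    """Cosine similarity Sim(w1, w2) of two flattened weight vectors."""
    return float(w1 @ w2 / (np.linalg.norm(w1) * np.linalg.norm(w2) + eps))

def total_loss(logits_b1, logits_b2, labels, keep, w1, w2, beta):
    """Branch-1 loss + branch-2 loss over F, plus beta * Sim(w1, w2)."""
    return (masked_ce(logits_b1, labels, keep)
            + masked_ce(logits_b2, labels, keep)
            + beta * cosine(w1, w2))
```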

To compute our validation metrics, we average the predictions of both decoders before applying the softmax normalization. The agreement map between the decoders serves as a measure of the variation ratios.
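At validation time the two branches can be combined as described: average the logits, apply softmax, and compare the branches' hard predictions to obtain the agreement map. The sketch below assumes a (K, H, W) logit layout, and its function name is hypothetical.

```python
import numpy as np

def softmax(logits):
    """Softmax over the class axis of a (K, H, W) array."""
    z = logits - logits.max(axis=0, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=0, keepdims=True)

def average_prediction_and_agreement(logits1, logits2):
    """Average the two branches' logits before softmax, and build the agreement map.

    Returns the predicted class map, the averaged probabilities, and a boolean
    (H, W) map that is True where both branches predict the same class.
    """
    probs = softmax((logits1 + logits2) / 2.0)
    agreement = logits1.argmax(axis=0) == logits2.argmax(axis=0)
    return probs.argmax(axis=0), probs, agreement
```

With two branches, an agreement value of True corresponds to a variation ratio of 0 and False to 0.5.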

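Figure 2: Summary of our training procedure. Batches of both labeled and unlabeled data are fed to the network. The two segmentation branches yield an average prediction map and an agreement map that separates easy pixels from hard pixels. When using labeled data for gradient updates, we randomly drop easy pixels. For unlabeled data, the network's predictions on easy pixels serve as targets for computing the loss.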

Strategic Curriculum

For adaptive semantic segmentation, we define a strategic training curriculum by adding three components to our basic self-training setup: a weighted loss, target easy mining and source hard mining.

Weighted Loss. Semantic segmentation training data is typically heavily class-imbalanced, which leads to poor performance on minority classes. Self-training amplifies this effect further. Any bias in predictions towards the dataset's majority classes can strongly affect performance, because these predictions are reused as proxy labels for further training.

To counteract this effect, we use a loss weighting vector λ that assigns a separate weight to each class in our predictions. Weighting is typically based on median inverse class frequency, but we find that the resulting class weights take extreme values that destabilize training. Instead, based on validation set performance, we select specific classes whose loss weight is doubled.
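A sketch of the class-weighted loss, where λ is 1 for every class and 2 for the selected classes. The text chooses the doubled classes on the validation set, so `doubled_classes` here is a placeholder. Normalizing by the total applied weight is an assumed convention; it matches PyTorch's `CrossEntropyLoss` with `weight` and `reduction='mean'`.

```python
import numpy as np

def class_weight_vector(num_classes, doubled_classes):
    """Loss weights lambda: 1 for every class, 2 for the selected classes."""
    lam = np.ones(num_classes)
    lam[list(doubled_classes)] = 2.0
    return lam

def weighted_cross_entropy(probs, labels, lam, keep_mask=None, eps=1e-12):
    """Class-weighted pixel-wise cross-entropy.

    probs: (K, H, W) softmax probabilities; labels: (H, W) non-negative class ids.
    Each pixel's loss is scaled by lam[label], and the sum is divided by the
    total weight actually applied.
    """
    if keep_mask is None:
        keep_mask = np.ones(labels.shape, dtype=bool)
    p_true = np.take_along_axis(probs, labels[None], axis=0)[0]   # (H, W)
    w = lam[labels] * keep_mask
    return float(np.sum(-np.log(p_true + eps) * w) / max(np.sum(w), eps))
```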

Target Easy Mining. For gradient filtering, we first assume that gradients from hard target-domain samples may be unsuitable for updating the network. When the two decoders disagree on the class prediction at a pixel, at least one of them must be wrong. By masking out the self-training gradients for these pixels, we avoid a large number of erroneous weight updates.
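Target easy mining turns the outputs of the two branches on an unlabeled target image into proxy labels plus an ignore mask. This sketch assumes that argmax agreement defines easy pixels and uses a hypothetical ignore index of -1.

```python
import numpy as np

IGNORE = -1  # hypothetical ignore index for hard (disagreeing) pixels

def target_proxy_labels(logits1, logits2):
    """Proxy labels for an unlabeled target image under target easy mining.

    logits1, logits2: (K, H, W) outputs of the two decoder branches.
    Easy pixels (both branches predict the same class) keep that class as the
    proxy label; hard pixels are set to IGNORE so they produce no gradient.
    """
    pred1 = logits1.argmax(axis=0)
    pred2 = logits2.argmax(axis=0)
    easy = pred1 == pred2
    # Where both argmaxes equal c, the averaged logits also peak at c,
    # so pred1 equals the averaged prediction on easy pixels.
    return np.where(easy, pred1, IGNORE), easy
```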

Source Hard Mining. Studies of class-imbalanced tasks have shown that the contribution of well-classified samples can overwhelm the gradient [24]. We want to reduce how much source-domain data trains the network in the later stages of training. We propose to do this by removing the gradients of these well-classified samples.

To do so, we randomly mask the gradients of easy pixels from the source domain. As a result, more of the remaining pixels are hard ones, where one (or both) of the decoders made a classification error. The network can keep improving on the source domain while using fewer source-domain training images for weight updates. In this way, the learned features become less domain-specific.
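For a labeled source image, this sketch treats a pixel as easy when both branches classify it correctly, and drops each easy pixel at random. The text does not give the drop probability. `drop_prob` is a hypothetical parameter that could, for example, be increased over training so that fewer easy source pixels are used later on.

```python
import numpy as np

def source_hard_mining_mask(logits1, logits2, labels, drop_prob, rng):
    """Keep mask for a labeled source image under source hard mining.

    Easy pixels (both branches correct) are dropped with probability
    drop_prob; hard pixels (at least one branch wrong) are always kept.
    """
    correct1 = logits1.argmax(axis=0) == labels
    correct2 = logits2.argmax(axis=0) == labels
    easy = correct1 & correct2
    dropped = easy & (rng.random(labels.shape) < drop_prob)
    return ~dropped
```

The returned mask can serve as the kept set F for the source-domain term of the loss.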

Experiments

Conclusion

In this paper, we proposed a method that exploits unlabeled data for semantic segmentation by training with proxy labels. It combines self-training with a gradient filtering strategy, using a mixture of labeled and unlabeled training data. To improve unsupervised domain adaptation, we introduce three modifications: class-wise weighting of the loss function, easy mining in the proxy-labeled target-domain samples, and hard mining in the labeled source-domain samples. To mine easy and hard pixels, we proposed an architecture with two decoder branches. The agreement map between these branches can be used to visualize the model's uncertainty in its predictions. Our approach obtains state-of-the-art results on unsupervised domain adaptation from synthetic to real data. Moreover, it is orthogonal to existing feature alignment techniques and could potentially be combined with them to improve performance further. We further validate our idea with strong results on additional domain adaptation tasks on the challenging BDD100k benchmark.