Andrew Ng Deep Learning Notes - C1W3 - Shallow Neural Networks - Assignment - Planar Data Classification

This post summarizes the main content and approach of the assignment. For the complete assignment files, see:

https://github.com/pandenghuang/Andrew-Ng-Deep-Learning-notes/tree/master/assignments/C1W3

For full screenshots of the assignment, see the end of this post (Complete assignment screenshots).

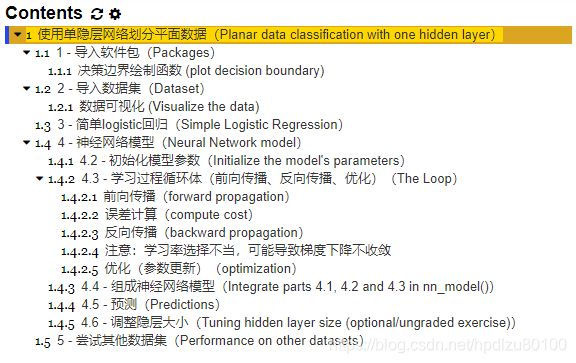

Assignment outline:

Assignment goals:

Planar data classification with one hidden layer

Welcome to your week 3 programming assignment. It's time to build your first neural network, which will have a hidden layer. You will see a big difference between this model and the one you implemented using logistic regression.

You will learn how to:

- Implement a 2-class classification neural network with a single hidden layer

- Use units with a non-linear activation function, such as tanh

- Compute the cross entropy loss

- Implement forward and backward propagation

...

1 - Packages

Let's first import all the packages that you will need during this assignment.

- numpy is the fundamental package for scientific computing with Python.

- sklearn provides simple and efficient tools for data mining and data analysis.

- matplotlib is a library for plotting graphs in Python.

- testCases provides some test examples to assess the correctness of your functions.

- planar_utils provides various useful functions used in this assignment.

2 - Dataset

First, let's get the dataset you will work on. The following code will load a "flower" 2-class dataset into variables X and Y.

...
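The loading code is elided here (the assignment loads the data through planar_utils). As a stand-in for experimenting outside the notebook, here is a hypothetical make_flower_dataset() that draws a similar two-class "flower" in the plane from a noisy rose curve. It is a sketch, not the course's planar_utils code, and it assumes the layout used throughout the assignment: X has shape (2, m) and Y has shape (1, m), one example per column.

```python
import numpy as np


def make_flower_dataset(m=400, max_radius=4.0, seed=1):
    """Hypothetical generator for a 2-class 'flower' dataset.

    Returns X with shape (2, m) and Y with shape (1, m); labels are 0 or 1.
    """
    rng = np.random.default_rng(seed)
    n = m // 2                      # points per class
    X = np.zeros((2 * n, 2))        # one example per row while building
    Y = np.zeros((2 * n, 1), dtype=np.uint8)
    for j in range(2):
        idx = slice(n * j, n * (j + 1))
        # Class j sweeps one half of the angle range, with some angular noise.
        t = np.linspace(j * 3.12, (j + 1) * 3.12, n) + rng.normal(0.0, 0.2, n)
        # Rose-curve radius r = a * sin(4t) plus noise gives the petal shape.
        r = max_radius * np.sin(4 * t) + rng.normal(0.0, 0.2, n)
        X[idx] = np.c_[r * np.sin(t), r * np.cos(t)]
        Y[idx] = j
    # Transpose so that each column is one example, as in the assignment.
    return X.T, Y.T
```

For example, `X, Y = make_flower_dataset()` gives `X.shape == (2, 400)` and `Y.shape == (1, 400)`, with 200 points per class.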

3 - Simple Logistic Regression (the results are not satisfactory)

Before building a full neural network, let's first see how logistic regression performs on this problem. You can use sklearn's built-in functions to do that. Run the code below to train a logistic regression classifier on the dataset.

...

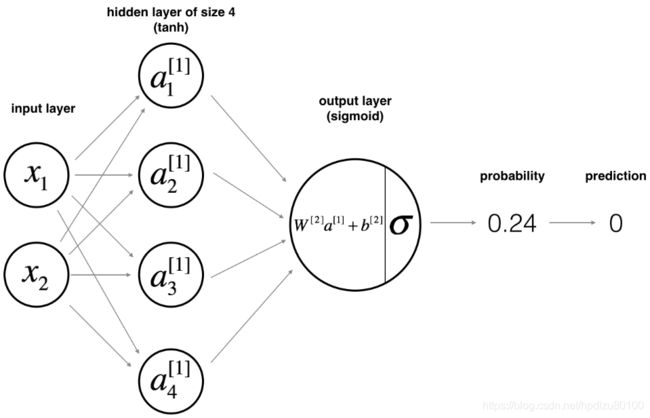

4 - Neural Network model (enter the neural network)

Logistic regression did not work well on the "flower dataset". You are going to train a Neural Network with a single hidden layer.

Mathematical background:

...

4.2 - Initialize the model's parameters

Exercise: Implement the function initialize_parameters().

Instructions:

- Make sure your parameters' sizes are right. Refer to the neural network figure above if needed.

- You will initialize the weight matrices with random values.

  - Use `np.random.randn(a,b) * 0.01` to randomly initialize a matrix of shape (a,b).

- You will initialize the bias vectors as zeros.

  - Use `np.zeros((a,b))` to initialize a matrix of shape (a,b) with zeros.

...
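Following the instructions above, a minimal sketch of initialize_parameters() might look like this. The signature (n_x, n_h, n_y) for the input, hidden, and output sizes and the optional seed argument are assumptions; the dictionary keys follow the (W1, b1, W2, b2) names used later in these notes.

```python
import numpy as np


def initialize_parameters(n_x, n_h, n_y, seed=2):
    """Sketch: create W1, b1, W2, b2 for a network with one hidden layer.

    n_x: input size, n_h: hidden-layer size, n_y: output size.
    seed is a hypothetical argument that makes the random draws repeatable.
    """
    np.random.seed(seed)
    # Small random weights break the symmetry between hidden units.
    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))          # biases can safely start at zero
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))
    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}
```

The shapes follow from the layer sizes: W1 maps n_x inputs to n_h hidden units, W2 maps n_h hidden units to n_y outputs, and each bias is a column vector that broadcasts across all m examples.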

4.3 - The Loop (the loop body, covering what the single hidden layer computes)

Question: Implement `forward_propagation()`.

Instructions:

- Look above at the mathematical representation of your classifier.

- You can use the function `sigmoid()`. It is built-in (imported) in the notebook.

- You can use the function `np.tanh()`. It is part of the numpy library.

- The steps you have to implement are:

  - Retrieve each parameter from the dictionary "parameters" (which is the output of `initialize_parameters()`) by using `parameters[".."]`.

  - Implement Forward Propagation. Compute $Z^{[1]}$, $A^{[1]}$, $Z^{[2]}$ and $A^{[2]}$ (the vector of all your predictions on all the examples in the training set).

- Values needed in the backpropagation are stored in "`cache`". The `cache` will be given as an input to the backpropagation function.

...
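A minimal sketch of forward_propagation(), assuming the standard tanh/sigmoid formulation the steps above describe: $Z^{[1]} = W^{[1]}X + b^{[1]}$, $A^{[1]} = \tanh(Z^{[1]})$, $Z^{[2]} = W^{[2]}A^{[1]} + b^{[2]}$, $A^{[2]} = \sigma(Z^{[2]})$. In the notebook sigmoid() is already imported; here it is defined inline so the block runs on its own.

```python
import numpy as np


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def forward_propagation(X, parameters):
    """Sketch: forward pass for X of shape (n_x, m). Returns (A2, cache)."""
    W1, b1 = parameters["W1"], parameters["b1"]
    W2, b2 = parameters["W2"], parameters["b2"]
    Z1 = W1 @ X + b1      # (n_h, m); b1 broadcasts across the m columns
    A1 = np.tanh(Z1)      # hidden-layer activation
    Z2 = W2 @ A1 + b2     # (n_y, m)
    A2 = sigmoid(Z2)      # predicted probabilities for every example
    # Keep the intermediate values that backpropagation needs.
    cache = {"Z1": Z1, "A1": A1, "Z2": Z2, "A2": A2}
    return A2, cache
```

Vectorizing over the columns of X processes the whole training set in one pass, with no explicit loop over examples.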

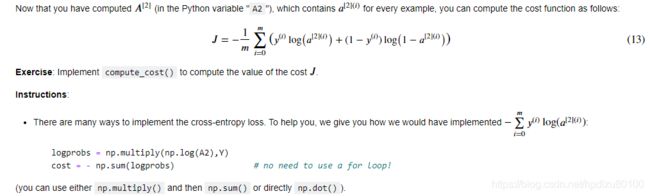

Cost function:
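Assuming the usual binary cross-entropy cost from the course, $J = -\frac{1}{m}\sum_{i=1}^{m}\left(y^{(i)}\log a^{[2](i)} + (1-y^{(i)})\log(1-a^{[2](i)})\right)$, a vectorized sketch of compute_cost() could be:

```python
import numpy as np


def compute_cost(A2, Y):
    """Sketch: cross-entropy cost for predictions A2 and labels Y, both (1, m)."""
    m = Y.shape[1]
    # Element-wise log-likelihood of each example, then average over m.
    logprobs = Y * np.log(A2) + (1 - Y) * np.log(1 - A2)
    cost = -np.sum(logprobs) / m
    return float(cost)   # plain Python float rather than a 0-d array
```

As a sanity check, predicting 0.5 for every example gives a cost of $\log 2 \approx 0.693$, which is roughly where training starts when the weights are initialized near zero.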

Backpropagation:

...
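A sketch of backward_propagation() for the tanh/sigmoid network, using the standard gradient formulas: $dZ^{[2]} = A^{[2]} - Y$, $dW^{[2]} = \frac{1}{m} dZ^{[2]} A^{[1]T}$, $db^{[2]} = \frac{1}{m}\sum dZ^{[2]}$, $dZ^{[1]} = W^{[2]T} dZ^{[2]} \ast (1 - (A^{[1]})^2)$, $dW^{[1]} = \frac{1}{m} dZ^{[1]} X^T$, $db^{[1]} = \frac{1}{m}\sum dZ^{[1]}$. The argument order (parameters, cache, X, Y) is an assumption.

```python
import numpy as np


def backward_propagation(parameters, cache, X, Y):
    """Sketch: gradients of the cross-entropy cost w.r.t. W1, b1, W2, b2."""
    m = X.shape[1]
    W2 = parameters["W2"]
    A1, A2 = cache["A1"], cache["A2"]
    # Output layer: sigmoid combined with cross-entropy simplifies to A2 - Y.
    dZ2 = A2 - Y
    dW2 = dZ2 @ A1.T / m
    db2 = np.sum(dZ2, axis=1, keepdims=True) / m
    # Hidden layer: the derivative of tanh is 1 - tanh^2 = 1 - A1**2.
    dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
    dW1 = dZ1 @ X.T / m
    db1 = np.sum(dZ1, axis=1, keepdims=True) / m
    return {"dW1": dW1, "db1": db1, "dW2": dW2, "db2": db2}
```

`keepdims=True` keeps db1 and db2 as column vectors, so they have the same shapes as b1 and b2 in the update step.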

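Choose a suitable learning rate and update the parameters (the gradient descent step, which uses the gradients computed by backpropagation):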

Question: Implement the update rule. Use gradient descent. You have to use (dW1, db1, dW2, db2) in order to update (W1, b1, W2, b2).

General gradient descent rule: $\theta = \theta - \alpha \frac{\partial J}{\partial \theta}$ where $\alpha$ is the learning rate and $\theta$ represents a parameter.

Illustration: The gradient descent algorithm with a good learning rate (converging) and a bad learning rate (diverging). Images courtesy of Adam Harley.

...
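Applying the rule $\theta = \theta - \alpha \frac{\partial J}{\partial \theta}$ to each of W1, b1, W2, b2 gives a short update_parameters() sketch. It assumes the gradients are stored under the keys "dW1", "db1", "dW2", "db2"; the default learning rate of 1.2 is an assumption, not a value stated in these notes.

```python
import numpy as np


def update_parameters(parameters, grads, learning_rate=1.2):
    """Sketch: one gradient descent step, theta <- theta - learning_rate * dtheta."""
    # Builds new arrays rather than modifying the input dictionary in place.
    return {
        name: parameters[name] - learning_rate * grads["d" + name]
        for name in ("W1", "b1", "W2", "b2")
    }
```

If the learning rate is too large, the cost oscillates or diverges instead of decreasing, which is what the good/bad learning rate illustration above shows.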

4.4 - Integrate parts 4.1, 4.2 and 4.3 in nn_model() (assembling the model)

Question: Build your neural network model in nn_model().

Instructions: The neural network model has to use the previous functions in the right order.

...
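Putting the pieces together in the order initialize, then loop over forward propagation, cost, backpropagation, and update, a self-contained sketch of nn_model() could look like the following. The helpers are inlined so the block runs alone. The seed, the 1.2 learning rate, and recording the cost every 1000 iterations are assumptions. Unlike a version that returns only the parameters, this sketch also returns the recorded costs so they can be inspected or plotted.

```python
import numpy as np


def sigmoid(z):
    return 1 / (1 + np.exp(-z))


def nn_model(X, Y, n_h, num_iterations=10000, learning_rate=1.2, seed=3, print_cost=False):
    """Sketch: train a tanh -> sigmoid network with one hidden layer.

    X: (n_x, m) inputs, Y: (1, m) labels in {0, 1}, n_h: hidden-layer size.
    Returns (parameters, costs); costs holds the cost at every 1000th iteration.
    """
    np.random.seed(seed)
    n_x, n_y, m = X.shape[0], Y.shape[0], X.shape[1]

    # Initialize: small random weights, zero biases.
    W1 = np.random.randn(n_h, n_x) * 0.01
    b1 = np.zeros((n_h, 1))
    W2 = np.random.randn(n_y, n_h) * 0.01
    b2 = np.zeros((n_y, 1))

    costs = []
    for i in range(num_iterations):
        # Forward propagation.
        A1 = np.tanh(W1 @ X + b1)
        A2 = sigmoid(W2 @ A1 + b2)

        # Cross-entropy cost (mean over the m examples), recorded periodically.
        if i % 1000 == 0:
            cost = -np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2))
            costs.append(float(cost))
            if print_cost:
                print(f"Cost after iteration {i}: {cost:.6f}")

        # Backward propagation (W2 is used before it is updated).
        dZ2 = A2 - Y
        dW2 = dZ2 @ A1.T / m
        db2 = np.sum(dZ2, axis=1, keepdims=True) / m
        dZ1 = (W2.T @ dZ2) * (1 - A1 ** 2)
        dW1 = dZ1 @ X.T / m
        db1 = np.sum(dZ1, axis=1, keepdims=True) / m

        # Gradient descent update.
        W1 -= learning_rate * dW1
        b1 -= learning_rate * db1
        W2 -= learning_rate * dW2
        b2 -= learning_rate * db2

    return {"W1": W1, "b1": b1, "W2": W2, "b2": b2}, costs
```

For example, `params, costs = nn_model(X, Y, n_h=4, print_cost=True)` trains on the dataset from section 2; a hidden size of 4 is just an assumed starting point.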

4.5 - Predictions (computing predictions with the model)

Question: Use your model to predict by building predict(). Use forward propagation to predict results.

Reminder: predictions $= y_{prediction} = \mathbb{1}\{\text{activation} > 0.5\} = \begin{cases} 1 & \text{if activation} > 0.5 \\ 0 & \text{otherwise} \end{cases}$

As an example, if you would like to set the entries of a matrix X to 0 and 1 based on a threshold you would do: X_new = (X > threshold)
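Combining a forward pass with the thresholding trick above, a minimal predict() sketch could be written as follows. It assumes the same parameter dictionary keys as the rest of these notes and inlines the forward pass so it runs on its own.

```python
import numpy as np


def predict(parameters, X):
    """Sketch: 0/1 predictions of shape (1, m), obtained by thresholding A2 at 0.5."""
    A1 = np.tanh(parameters["W1"] @ X + parameters["b1"])
    A2 = 1 / (1 + np.exp(-(parameters["W2"] @ A1 + parameters["b2"])))
    # (A2 > 0.5) is a boolean array; astype(int) maps True/False to 1/0.
    return (A2 > 0.5).astype(int)
```

Training accuracy can then be computed as `np.mean(predict(parameters, X) == Y)`.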

Complete assignment screenshots: