Walkthrough of deepstream-image-meta-test

0. Background of the sample

This sample shows how to attach an encoded image to the metadata and then save it to disk in JPEG format.

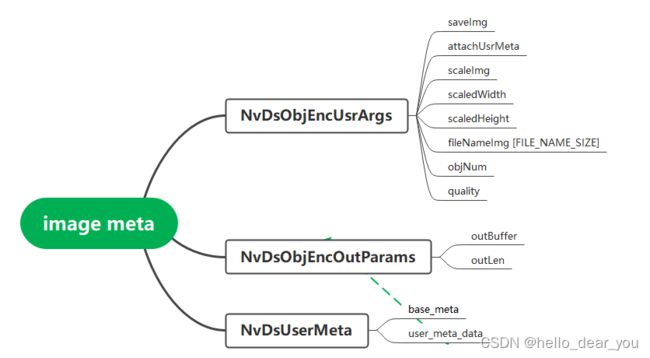

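The source code of this sample is located at sources/apps/sample_apps/deepstream-image-meta-test. The main classes involved in the code and the relationships among them are shown in the table below.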

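1. Converting encoded objects to metadata

The pgie_src_pad_buffer_probe callback calls nvds_obj_enc_process to encode the contents of the detected objects. A second probe callback, installed on the sink pad of the osd element, then accesses the encoded objects and saves them to files; at that point the metadata type of the encoded objects is "NVDS_CROP_IMAGE_META".

This sample uses the Object Encoder API.

1.0 Creating a context

Call nvds_obj_enc_create_context to create a context; it returns a handle of type NvDsObjEncCtxHandle. The context is then passed into the probe callback.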

    /* Lets add probe to get informed of the meta data generated, we add probe to
     * the src pad of the pgie element, since by that time, the buffer would have
     * had got all the nvinfer metadata. */
    pgie_src_pad = gst_element_get_static_pad (pgie, "src");
    /* Create Context for Object Encoding */
    NvDsObjEncCtxHandle obj_ctx_handle = nvds_obj_enc_create_context ();
    if (!obj_ctx_handle) {
      g_print ("Unable to create context\n");
      return -1;
    }
    if (!pgie_src_pad)
      g_print ("Unable to get src pad\n");
    else
      gst_pad_add_probe (pgie_src_pad, GST_PAD_PROBE_TYPE_BUFFER,
          pgie_src_pad_buffer_probe, (gpointer) obj_ctx_handle, NULL);

    ...

    /* Destroy context for Object Encoding */
    nvds_obj_enc_destroy_context (obj_ctx_handle);

1.1 Encoding the object regions

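Calling nvds_obj_enc_process crops each detected region and encodes it.

First, look at the definition of nvds_obj_enc_process to see which arguments it expects.

The function crops the region out of the original frame and JPEG-encodes it. Besides the surface, the object meta and the frame meta, it takes an NvDsObjEncCtxHandle and an NvDsObjEncUsrArgs: the former is the context we passed in, and the latter, NvDsObjEncUsrArgs, is defined as follows: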
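As a rough illustration of how the probe in this sample fills those arguments, here is a minimal sketch. DemoObjEncUsrArgs and demo_make_args are hypothetical names that mirror only the three fields the sample sets (saveImg, attachUsrMeta, objNum). The actual NvDsObjEncUsrArgs in the SDK header may contain more fields, and the field descriptions in the comments are my reading of how the sample uses them, not the SDK's wording.

```c
#include <stdbool.h>

/* Hypothetical mirror of the fields this sample sets; NOT the SDK definition. */
typedef struct DemoObjEncUsrArgs {
  bool saveImg;        /* assumed: ask the encoder to also write the crop to disk */
  bool attachUsrMeta;  /* assumed: ask the encoder to attach the JPEG as user meta */
  int objNum;          /* assumed: number of the object within the frame */
} DemoObjEncUsrArgs;

/* Build the per-object arguments the same way the probe does:
 * zero-initialise first, then set the user-controlled flags and the preset. */
static DemoObjEncUsrArgs demo_make_args (bool save_img, bool attach_user_meta,
    int obj_num)
{
  DemoObjEncUsrArgs args = { 0 };
  args.saveImg = save_img;
  args.attachUsrMeta = attach_user_meta;
  args.objNum = obj_num;
  return args;
}
```

Zero-initialising the structure before setting individual fields keeps any field the caller does not touch at a known default.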

After the regions of all detected objects have been submitted for encoding, call nvds_obj_enc_finish() to make sure all enqueued object crops have been processed.

    /* pgie_src_pad_buffer_probe will extract metadata received on pgie src pad
     * and update params for drawing rectangle, object information etc. We also
     * iterate through the object list and encode the cropped objects as jpeg
     * images and attach it as user meta to the respective objects.*/
    static GstPadProbeReturn
    pgie_src_pad_buffer_probe (GstPad * pad, GstPadProbeInfo * info, gpointer ctx)
    {
      GstBuffer *buf = (GstBuffer *) info->data;
      GstMapInfo inmap = GST_MAP_INFO_INIT;
      if (!gst_buffer_map (buf, &inmap, GST_MAP_READ)) {
        GST_ERROR ("input buffer mapinfo failed");
        return GST_FLOW_ERROR;
      }
      NvBufSurface *ip_surf = (NvBufSurface *) inmap.data; // surface
      gst_buffer_unmap (buf, &inmap);

      NvDsObjectMeta *obj_meta = NULL;
      guint vehicle_count = 0;
      guint person_count = 0;
      NvDsMetaList *l_frame = NULL;
      NvDsMetaList *l_obj = NULL;
      NvDsBatchMeta *batch_meta = gst_buffer_get_nvds_batch_meta (buf);

      for (l_frame = batch_meta->frame_meta_list; l_frame != NULL;
          l_frame = l_frame->next) {
        NvDsFrameMeta *frame_meta = (NvDsFrameMeta *) (l_frame->data);
        guint num_rects = 0;
        for (l_obj = frame_meta->obj_meta_list; l_obj != NULL; l_obj = l_obj->next) {
          obj_meta = (NvDsObjectMeta *) (l_obj->data);
          if (obj_meta->class_id == PGIE_CLASS_ID_VEHICLE) {
            vehicle_count++;
            num_rects++;
          }
          if (obj_meta->class_id == PGIE_CLASS_ID_PERSON) {
            person_count++;
            num_rects++;
          }
          /* Conditions that user needs to set to encode the detected objects of
           * interest. Here, by default all the detected objects are encoded.
           * For demonstration, we will encode the first object in the frame */
          if ((obj_meta->class_id == PGIE_CLASS_ID_PERSON
                  || obj_meta->class_id == PGIE_CLASS_ID_VEHICLE) && num_rects == 1) {
            NvDsObjEncUsrArgs userData = { 0 };
            /* To be set by user */
            userData.saveImg = save_img;
            userData.attachUsrMeta = attach_user_meta;
            /* Preset */
            userData.objNum = num_rects;
            /* Main Function Call */
            nvds_obj_enc_process (ctx, &userData, ip_surf, obj_meta, frame_meta);
          }
        }
      }
      nvds_obj_enc_finish (ctx);
      return GST_PAD_PROBE_OK;
    }

2. Saving the contents of the object regions

A probe function added on the sink pad of the osd element saves the object regions to files.

    /* Lets add probe to get informed of the meta data generated, we add probe to
     * the sink pad of the osd element, since by that time, the buffer would have
     * had got all the metadata. */
    osd_sink_pad = gst_element_get_static_pad (nvosd, "sink");
    if (!osd_sink_pad)
      g_print ("Unable to get sink pad\n");
    else
      gst_pad_add_probe (osd_sink_pad, GST_PAD_PROBE_TYPE_BUFFER,
          osd_sink_pad_buffer_probe, (gpointer) obj_ctx_handle, NULL);
    gst_object_unref (osd_sink_pad);

In the OSD sink probe function, we iterate over the user meta attached to each object meta, look for entries of type "NVDS_CROP_IMAGE_META", and save them to .jpg files.

    /* To verify encoded metadata of cropped objects, we iterate through the
     * user metadata of each object and if a metadata of the type
     * 'NVDS_CROP_IMAGE_META' is found then we write that to a file as
     * implemented below.
     */
    char fileNameString[FILE_NAME_SIZE];
    const char *osd_string = "OSD";
    int obj_res_width = (int) obj_meta->rect_params.width;
    int obj_res_height = (int) obj_meta->rect_params.height;
    if (prop.integrated)
    {
      obj_res_width = GST_ROUND_DOWN_2 (obj_res_width);
      obj_res_height = GST_ROUND_DOWN_2 (obj_res_height);
    }

    snprintf (fileNameString, FILE_NAME_SIZE, "%s_%d_%d_%d_%s_%dx%d.jpg",
        osd_string, frame_number, frame_meta->source_id, num_rects,
        obj_meta->obj_label, obj_res_width, obj_res_height);
    /* For Demonstration Purposes we are writing metadata to jpeg images of
     * only vehicles for the first 100 frames only.
     * The files generated have a 'OSD' prefix. */
    if (frame_number < 100 && obj_meta->class_id == PGIE_CLASS_ID_VEHICLE)
    {
      // Get the user meta list from the object meta
      NvDsUserMetaList *usrMetaList = obj_meta->obj_user_meta_list;
      FILE *file;
      // There may be several user meta entries; this example only handles
      // those of type NVDS_CROP_IMAGE_META
      while (usrMetaList != NULL)
      {
        // Get the user meta entry
        NvDsUserMeta *usrMetaData = (NvDsUserMeta *) usrMetaList->data;
        // Check the type of the user meta
        if (usrMetaData->base_meta.meta_type == NVDS_CROP_IMAGE_META)
        {
          NvDsObjEncOutParams *enc_jpeg_image =
              (NvDsObjEncOutParams *) usrMetaData->user_meta_data;
          /* Write to File */
          file = fopen (fileNameString, "wb");
          fwrite (enc_jpeg_image->outBuffer, sizeof (uint8_t),
              enc_jpeg_image->outLen, file);
          fclose (file);
          usrMetaList = NULL;
        }
        else
        {
          usrMetaList = usrMetaList->next;
        }
      }
    }

In the code above, the NvDsObjEncOutParams structure holds the data of the cropped object: outBuffer is a pointer to the JPEG-encoded object and outLen is the length of that JPEG-encoded object. With these two fields, the contents of the object can be written to a file.
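The traversal-and-write pattern can be tried outside DeepStream with a small self-contained sketch. The types DemoEncOut and DemoUserMeta and the function demo_save_first_crop are hypothetical stand-ins that only imitate the shape of the fields discussed here; they are not the SDK types. The sketch also checks the results of fopen and fwrite, which the sample snippet omits.

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical stand-ins for the DeepStream types, for illustration only. */
enum { DEMO_META_OTHER = 1, DEMO_META_CROP_IMAGE = 2 };

typedef struct DemoEncOut {
  uint8_t *outBuffer;          /* pointer to the encoded JPEG bytes */
  uint64_t outLen;             /* number of bytes in outBuffer */
} DemoEncOut;

typedef struct DemoUserMeta {
  int meta_type;               /* which kind of user meta this node carries */
  void *user_meta_data;        /* payload; a DemoEncOut for crop-image meta */
  struct DemoUserMeta *next;   /* next node in the list, or NULL */
} DemoUserMeta;

/* Walk the list and write the first crop-image payload to `path`.
 * Returns 0 on success, -1 if no such meta exists or the write fails. */
static int demo_save_first_crop (const DemoUserMeta *list, const char *path)
{
  for (const DemoUserMeta *m = list; m != NULL; m = m->next) {
    if (m->meta_type != DEMO_META_CROP_IMAGE)
      continue;                /* skip user meta of other types */

    const DemoEncOut *img = (const DemoEncOut *) m->user_meta_data;
    FILE *file = fopen (path, "wb");
    if (file == NULL)
      return -1;

    size_t written = fwrite (img->outBuffer, sizeof (uint8_t),
        (size_t) img->outLen, file);
    int close_rc = fclose (file);
    return (written == img->outLen && close_rc == 0) ? 0 : -1;
  }
  return -1;                   /* no crop-image meta in the list */
}
```

Like the sample, the sketch stops at the first matching entry; a caller that expects several encoded crops per object would keep iterating and use a distinct file name for each.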

3. Modifying the pipeline to save the video result

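The original pipeline renders the detection results directly on screen. Embedded devices usually have no display attached, so the code below saves the resulting video to an MP4 file instead. This takes two steps:

1. Create the new elements

    /* save file */
    GstElement *nvvideoconvert = NULL, *nvv4l2h264enc = NULL,
        *h264parserenc = NULL, *qtmux = NULL;
    nvvideoconvert = gst_element_factory_make ("nvvideoconvert", "nvvideo-converter2");
    nvv4l2h264enc = gst_element_factory_make ("nvv4l2h264enc", "nvv4l2-h264enc");
    h264parserenc = gst_element_factory_make ("h264parse", "h264-parserenc");
    /* qtmux wraps the H.264 stream in an MP4 container; without a muxer,
     * filesink would write a raw H.264 elementary stream */
    qtmux = gst_element_factory_make ("qtmux", "qtmux");
    sink = gst_element_factory_make ("filesink", "filesink");
    g_object_set (G_OBJECT (sink), "location", "./output.mp4", NULL);

2. Add the elements to the pipeline and link them

    gst_bin_add_many (GST_BIN (pipeline), pgie, tiler, nvvidconv, nvosd,
        nvvideoconvert, nvv4l2h264enc, h264parserenc, qtmux, sink, NULL);
    gst_element_link_many (streammux, pgie, tiler, nvvidconv, nvosd,
        nvvideoconvert, nvv4l2h264enc, h264parserenc, qtmux, sink, NULL);

Alternatively, a fakesink element can be added to discard the detection results; see the link for details.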

    if (prop.integrated)
    {
      // This element is only needed on the Jetson platform
      transform = gst_element_factory_make ("queue", "nvegl-transform");
    }
    sink = gst_element_factory_make ("fakesink", "nvvideo-renderer");

Finally, the sample can be built and run with the following commands:

    # Build
    export CUDA_VER=10.2
    make
    # Run
    ./deepstream-image-meta-test file:///opt/nvidia/deepstream/deepstream-6.0/samples/streams/sample_720p.h264