1. Environment

Software versions:

Kubernetes: v1.22.2

kube-prometheus: release 0.9

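2. Deployment

Before deploying, check the compatibility matrix in the official kube-prometheus repository to confirm that the kube-prometheus release supports your Kubernetes version. Official project: kube-prometheus

On the master node, download the manifests.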

root@master01:~/monitor# git clone https://github.com/prometheus-operator/kube-prometheus.git

Cloning into 'kube-prometheus'...

remote: Enumerating objects: 17447, done.

remote: Counting objects: 100% (353/353), done.

remote: Compressing objects: 100% (129/129), done.

remote: Total 17447 (delta 262), reused 279 (delta 211), pack-reused 17094

Receiving objects: 100% (17447/17447), 9.12 MiB | 5.59 MiB/s, done.

Resolving deltas: 100% (11455/11455), done.
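A plain git clone checks out the repository's default branch. To work from the release chosen via the compatibility matrix, the clone can be switched to a release branch. This is a minimal sketch, assuming upstream names its branches release-X.Y (for example release-0.9). The helper names are hypothetical, and the resources created from a release branch may differ from the output shown in this post.

```shell
# Hypothetical helpers; the function names are illustrative and not part of kube-prometheus.

# List the release branches available in a cloned kube-prometheus repository.
# Argument: <path-to-clone>
list_release_branches() {
  git -C "$1" branch -r --list 'origin/release-*'
}

# Switch the clone to a release branch.
# Arguments: <path-to-clone> <branch>, e.g. kube-prometheus release-0.9
use_release_branch() {
  git -C "$1" checkout "$2"
}
```

Usage: run `list_release_branches kube-prometheus` from ~/monitor to see the available branches, then `use_release_branch kube-prometheus release-0.9`.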

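root@master01:~/monitor# ls

kube-prometheus

Deploying kube-prometheus: the resources under manifests/setup/ must be created with kubectl create, not kubectl apply. Client-side apply stores a full copy of each object in the kubectl.kubernetes.io/last-applied-configuration annotation, and the Prometheus CRD is large enough to exceed the annotation size limit, so apply fails with “The CustomResourceDefinition "prometheuses.monitoring.coreos.com" is invalid: metadata.annotations: Too long: must have at most 262144 bytes”. The contents of manifests/ can be created with apply.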

root@master01:~/monitor/kube-prometheus# kubectl create -f manifests/setup/

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

namespace/monitoring created
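kubectl create fails if the resources already exist, for example when the setup step is re-run. Server-side apply is an alternative that also avoids the annotation size limit, because it does not write the kubectl.kubernetes.io/last-applied-configuration annotation. The sketch below assumes a kubectl that supports --server-side. The function names are hypothetical, and the 60s default timeout is an arbitrary choice.

```shell
# Hypothetical helpers wrapping two real kubectl commands.

# Create or update the CRDs and the namespace with server-side apply.
# Argument: <directory>, e.g. manifests/setup/
apply_setup_server_side() {
  kubectl apply --server-side -f "$1"
}

# Block until every CRD in the cluster reports the Established condition,
# so that the custom resources in manifests/ can be created afterwards.
# Optional argument: <timeout>, default 60s (an assumed value)
wait_for_crds() {
  kubectl wait --for=condition=Established --all CustomResourceDefinition --timeout="${1:-60s}"
}
```

Usage: `apply_setup_server_side manifests/setup/ && wait_for_crds`, followed by the kubectl apply -f manifests/ step.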

root@master01:~/monitor/kube-prometheus# kubectl apply -f manifests/

alertmanager.monitoring.coreos.com/main created

networkpolicy.networking.k8s.io/alertmanager-main created

poddisruptionbudget.policy/alertmanager-main created

prometheusrule.monitoring.coreos.com/alertmanager-main-rules created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager-main created

clusterrole.rbac.authorization.k8s.io/blackbox-exporter created

clusterrolebinding.rbac.authorization.k8s.io/blackbox-exporter created

configmap/blackbox-exporter-configuration created

deployment.apps/blackbox-exporter created

networkpolicy.networking.k8s.io/blackbox-exporter created

service/blackbox-exporter created

serviceaccount/blackbox-exporter created

servicemonitor.monitoring.coreos.com/blackbox-exporter created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-alertmanager-overview created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-grafana-overview created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes-darwin created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

networkpolicy.networking.k8s.io/grafana created

prometheusrule.monitoring.coreos.com/grafana-rules created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

prometheusrule.monitoring.coreos.com/kube-prometheus-rules created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

networkpolicy.networking.k8s.io/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kube-state-metrics-rules created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

prometheusrule.monitoring.coreos.com/kubernetes-monitoring-rules created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

networkpolicy.networking.k8s.io/node-exporter created

prometheusrule.monitoring.coreos.com/node-exporter-rules created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

networkpolicy.networking.k8s.io/prometheus-k8s created

poddisruptionbudget.policy/prometheus-k8s created

prometheus.monitoring.coreos.com/k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-prometheus-rules created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus-k8s created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

networkpolicy.networking.k8s.io/prometheus-adapter created

poddisruptionbudget.policy/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

servicemonitor.monitoring.coreos.com/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

networkpolicy.networking.k8s.io/prometheus-operator created

prometheusrule.monitoring.coreos.com/prometheus-operator-rules created

service/prometheus-operator created

serviceaccount/prometheus-operator created

servicemonitor.monitoring.coreos.com/prometheus-operator created

Verify the deployment: all pods in the newly created monitoring namespace are running, and the related resources have been created.

root@master01:~/monitor/kube-prometheus# kubectl get po -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 2m18s

alertmanager-main-1 2/2 Running 0 2m18s

alertmanager-main-2 2/2 Running 0 2m18s

blackbox-exporter-58d99cfb6d-5f44f 3/3 Running 0 2m30s

grafana-5cfbb9b4c5-7qw6c 1/1 Running 0 2m29s

kube-state-metrics-c9f8b947b-xrhbd 3/3 Running 0 2m29s

node-exporter-gzspv 2/2 Running 0 2m28s

node-exporter-jz588 2/2 Running 0 2m28s

node-exporter-z8vps 2/2 Running 0 2m28s

prometheus-adapter-5bf8d6f7c6-5jf6n 1/1 Running 0 2m26s

prometheus-adapter-5bf8d6f7c6-sz4ns 1/1 Running 0 2m27s

prometheus-k8s-0 2/2 Running 0 2m16s

prometheus-k8s-1 2/2 Running 0 2m16s

prometheus-operator-6cbd5c84fb-8xslw 2/2 Running 0 2m26s

root@master01:~# kubectl get crd -n monitoring

NAME CREATED AT

alertmanagerconfigs.monitoring.coreos.com 2023-01-04T14:54:38Z

alertmanagers.monitoring.coreos.com 2023-01-04T14:54:38Z

podmonitors.monitoring.coreos.com 2023-01-04T14:54:38Z

probes.monitoring.coreos.com 2023-01-04T14:54:38Z

prometheuses.monitoring.coreos.com 2023-01-04T14:54:38Z

prometheusrules.monitoring.coreos.com 2023-01-04T14:54:38Z

servicemonitors.monitoring.coreos.com 2023-01-04T14:54:38Z

thanosrulers.monitoring.coreos.com 2023-01-04T14:54:38Z

root@master01:~# kubectl get secret -n monitoring

NAME TYPE DATA AGE

alertmanager-main Opaque 1 3d21h

alertmanager-main-generated Opaque 1 3d21h

alertmanager-main-tls-assets-0 Opaque 0 3d21h

alertmanager-main-token-6c5pj kubernetes.io/service-account-token 3 3d21h

alertmanager-main-web-config Opaque 1 3d21h

blackbox-exporter-token-22c6s kubernetes.io/service-account-token 3 3d21h

default-token-kff56 kubernetes.io/service-account-token 3 3d22h

grafana-config Opaque 1 3d21h

grafana-datasources Opaque 1 3d21h

grafana-token-7dvc9 kubernetes.io/service-account-token 3 3d21h

kube-state-metrics-token-nzhtn kubernetes.io/service-account-token 3 3d21h

node-exporter-token-8zrpf kubernetes.io/service-account-token 3 3d21h

prometheus-adapter-token-jmdch kubernetes.io/service-account-token 3 3d21h

prometheus-k8s Opaque 1 3d21h

prometheus-k8s-tls-assets-0 Opaque 0 3d21h

prometheus-k8s-token-jfwmh kubernetes.io/service-account-token 3 3d21h

prometheus-k8s-web-config Opaque 1 3d21h

prometheus-operator-token-w4xcf kubernetes.io/service-account-token 3 3d21h
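Instead of re-running kubectl get po until every pod shows Running, the check can be made to block until the pods are ready. This is a minimal sketch; the function name is hypothetical and the 300s default timeout is an assumed value.

```shell
# Hypothetical helper: wait until all pods in the monitoring namespace are Ready.
# Optional argument: <timeout>, default 300s (an assumed value)
wait_for_monitoring_pods() {
  kubectl -n monitoring wait --for=condition=Ready pod --all --timeout="${1:-300s}"
}
```

Usage: `wait_for_monitoring_pods` returns a non-zero exit status if any pod is still not Ready when the timeout expires.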

三、服务暴露

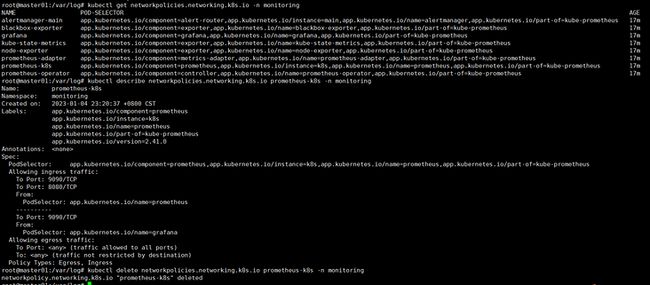

服务暴露分为两种方式,可以使用ingress或NodePort的方式将服务暴露出去使宿主机可以进行访问。注意如果集群使用的如果是calico网络的话会默认创建出若干条网络规则导致服务暴露后也无法在客户端进行访问。可将网络策略修改或直接删除。(注意图片中所操作的策略也需要删除)。

root@master01:~# kubectl get networkpolicies.networking.k8s.io -n monitoring

NAME POD-SELECTOR AGE

alertmanager-main app.kubernetes.io/component=alert-router,app.kubernetes.io/instance=main,app.kubernetes.io/name=alertmanager,app.kubernetes.io/part-of=kube-prometheus 14h

blackbox-exporter app.kubernetes.io/component=exporter,app.kubernetes.io/name=blackbox-exporter,app.kubernetes.io/part-of=kube-prometheus 14h

grafana app.kubernetes.io/component=grafana,app.kubernetes.io/name=grafana,app.kubernetes.io/part-of=kube-prometheus 14h

kube-state-metrics app.kubernetes.io/component=exporter,app.kubernetes.io/name=kube-state-metrics,app.kubernetes.io/part-of=kube-prometheus 14h

node-exporter app.kubernetes.io/component=exporter,app.kubernetes.io/name=node-exporter,app.kubernetes.io/part-of=kube-prometheus 14h

prometheus-adapter app.kubernetes.io/component=metrics-adapter,app.kubernetes.io/name=prometheus-adapter,app.kubernetes.io/part-of=kube-prometheus 14h

prometheus-operator app.kubernetes.io/component=controller,app.kubernetes.io/name=prometheus-operator,app.kubernetes.io/part-of=kube-prometheus 14h
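Deleting a policy removes its restrictions entirely. Because NetworkPolicy rules are additive, a narrower alternative is to add a second policy that admits traffic on just the service port. This is a minimal sketch, assuming the pods carry the app.kubernetes.io/name label shown in the listing. The helper name and the -allow-external policy name are made up for illustration.

```shell
# Hypothetical helper: print a NetworkPolicy that allows ingress from any source
# to the pods labelled app.kubernetes.io/name=<name> on one TCP port.
# Arguments: <name> <pod-port>
allow_external_policy() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: $1-allow-external
  namespace: monitoring
spec:
  podSelector:
    matchLabels:
      app.kubernetes.io/name: $1
  policyTypes:
  - Ingress
  ingress:
  - ports:
    - protocol: TCP
      port: $2
EOF
}
```

Usage: `allow_external_policy grafana 3000 | kubectl apply -f -`. An ingress rule with no from clause matches every source, so this opens only the named port and leaves the original policy in place.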

root@master01:~# kubectl delete networkpolicies.networking.k8s.io grafana -n monitoring

networkpolicy.networking.k8s.io "grafana" deleted

root@master01:~# kubectl delete networkpolicies.networking.k8s.io alertmanager-main -n monitoring

networkpolicy.networking.k8s.io "alertmanager-main" deleted

3.1 Exposing the services with NodePort (optional)

Edit the Prometheus Service manifest:

root@master01:~/monitor/kube-prometheus# cat manifests/prometheus-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 2.41.0
      name: prometheus-k8s
      namespace: monitoring
    spec:
      type: NodePort
      ports:
      - name: web
        port: 9090
        targetPort: web
        nodePort: 30090
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      selector:
        app.kubernetes.io/component: prometheus
        app.kubernetes.io/instance: k8s
        app.kubernetes.io/name: prometheus
        app.kubernetes.io/part-of: kube-prometheus
      sessionAffinity: ClientIP

root@master01:~/monitor/kube-prometheus# kubectl apply -f manifests/prometheus-service.yaml

service/prometheus-k8s configured

Edit the Grafana and Alertmanager Service manifests in the same way.

root@master01:~/monitor/kube-prometheus/manifests# cat grafana-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: grafana
        app.kubernetes.io/name: grafana
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 9.3.2
      name: grafana
      namespace: monitoring
    spec:
      type: NodePort
      ports:
      - name: http
        port: 3000
        targetPort: http
        nodePort: 30030
      selector:
        app.kubernetes.io/component: grafana
        app.kubernetes.io/name: grafana
        app.kubernetes.io/part-of: kube-prometheus

root@master01:~/monitor/kube-prometheus/manifests# cat alertmanager-service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/instance: main
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.25.0
      name: alertmanager-main
      namespace: monitoring
    spec:
      type: NodePort
      ports:
      - name: web
        port: 9093
        targetPort: web
        nodePort: 30093
      - name: reloader-web
        port: 8080
        targetPort: reloader-web
      selector:
        app.kubernetes.io/component: alert-router
        app.kubernetes.io/instance: main
        app.kubernetes.io/name: alertmanager
        app.kubernetes.io/part-of: kube-prometheus
      sessionAffinity: ClientIP

Apply the changes:

root@master01:~/monitor/kube-prometheus/manifests# kubectl apply -f grafana-service.yaml

service/grafana configured

root@master01:~/monitor/kube-prometheus/manifests# kubectl apply -f alertmanager-service.yaml

service/alertmanager-main configured

After modifying the Service manifests, verify the result:

root@master01:~# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main NodePort 10.100.23.255 9093:30093/TCP,8080:32647/TCP 14h

alertmanager-operated ClusterIP None 9093/TCP,9094/TCP,9094/UDP 14h

blackbox-exporter ClusterIP 10.100.48.67 9115/TCP,19115/TCP 14h

grafana NodePort 10.100.153.195 3000:30030/TCP 14h

kube-state-metrics ClusterIP None 8443/TCP,9443/TCP 14h

node-exporter ClusterIP None 9100/TCP 14h

prometheus-adapter ClusterIP 10.100.226.131 443/TCP 14h

prometheus-k8s NodePort 10.100.206.216 9090:30090/TCP,8080:30019/TCP 14h

prometheus-operated ClusterIP None 9090/TCP 14h

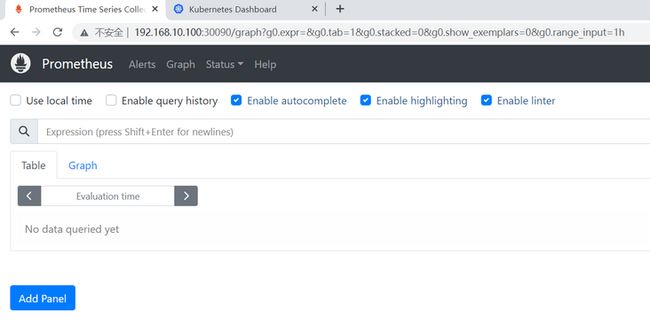

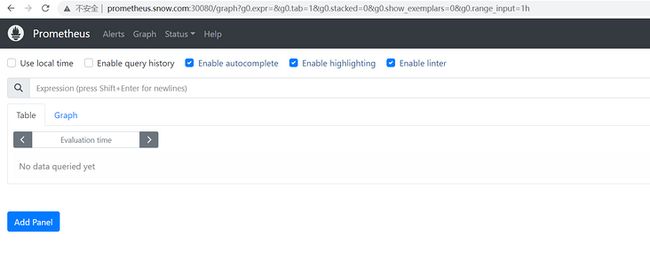

prometheus-operator ClusterIP None 8443/TCP 14h 在客户端用浏览器进行访问验证
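The same reachability check can be scripted from the client with curl. This is a minimal sketch using the NodePorts set in the manifests above (30090, 30030, 30093) and the health endpoints of Prometheus, Grafana, and Alertmanager. The node IP is not given in this post, so 192.0.2.10 in the usage line is a placeholder, and the function names are hypothetical.

```shell
# Hypothetical helper: build the health-check URL for one of the NodePort services.
# Arguments: <node-ip> <prometheus|grafana|alertmanager>
health_url() {
  case "$2" in
    prometheus)   echo "http://$1:30090/-/healthy" ;;
    grafana)      echo "http://$1:30030/api/health" ;;
    alertmanager) echo "http://$1:30093/-/healthy" ;;
    *)            echo "unknown service: $2" >&2; return 1 ;;
  esac
}

# Probe all three services; curl -f treats HTTP error statuses as failures.
# Argument: <node-ip>
check_nodeports() {
  for svc in prometheus grafana alertmanager; do
    if curl -fsS -o /dev/null --max-time 5 "$(health_url "$1" "$svc")"; then
      echo "$svc: ok"
    else
      echo "$svc: unreachable"
    fi
  done
}
```

Usage: `check_nodeports 192.0.2.10`, replacing the address with the IP of any cluster node. A service reported as unreachable is often still blocked by a NetworkPolicy.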

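As shown above, Prometheus runs as two replica pods. When accessed through a Service, requests would normally be load-balanced across both Prometheus instances. Here, however, requests from the same client always reach the same instance, because the Service is created with sessionAffinity: ClientIP, which pins each client IP to one backend pod. There is therefore no need to worry about consecutive requests landing on different replicas.

Running multiple replicas is a common high-availability approach, and in theory each replica holds the same data. Prometheus, however, collects metrics by actively pulling them: the replicas do not scrape at exactly the same moment, and even if they did, a network problem could affect one scrape and not the other. The data stored by the replicas is therefore very likely to differ slightly, which is why the official YAML configures session affinity, so that the data we see stays consistent across requests.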
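Both the affinity setting and the replicas behind the Service can be inspected directly. This is a minimal sketch; the function names are hypothetical.

```shell
# Hypothetical helpers for inspecting a Service in the monitoring namespace.

# Print the sessionAffinity value of a Service (ClientIP or None).
# Argument: <service-name>
show_session_affinity() {
  kubectl -n monitoring get svc "$1" -o jsonpath='{.spec.sessionAffinity}{"\n"}'
}

# List the pod addresses the Service currently routes to.
# Argument: <service-name>
list_backends() {
  kubectl -n monitoring get endpoints "$1" -o wide
}
```

Usage: `show_session_affinity prometheus-k8s` should print ClientIP for the manifest shown earlier, and `list_backends prometheus-k8s` lists the addresses of the two Prometheus pods. With ClientIP affinity the pinning is not permanent: Kubernetes expires it after sessionAffinityConfig.clientIP.timeoutSeconds, which defaults to 10800 seconds.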

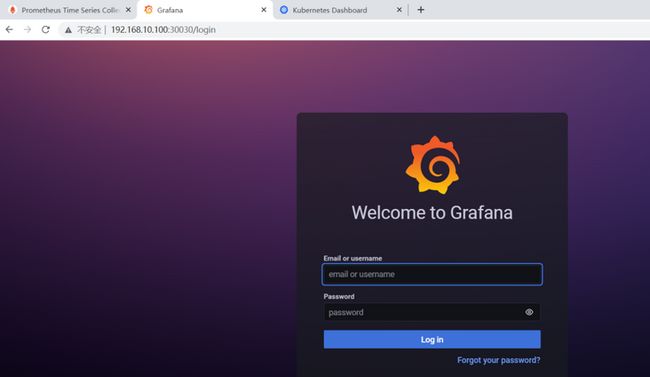

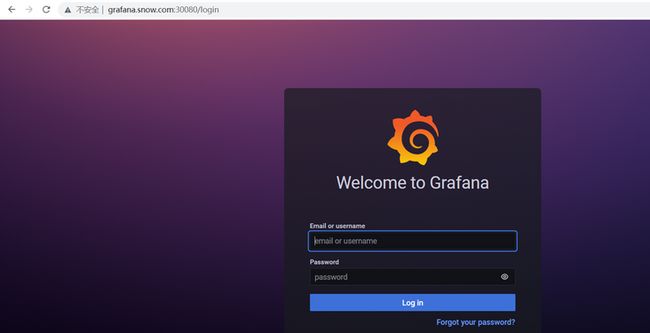

You can now log in to Grafana with the default credentials admin:admin.

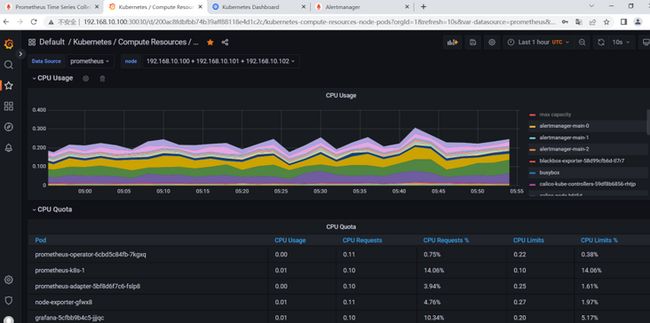

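Pick one of the built-in dashboards to test. Monitoring data is already being displayed.

3.2 Exposing the services with Ingress (optional)

How Ingress works, in brief: first deploy an ingress controller. It is similar to the nginx master process in that it listens on a port, which is done by mapping a port on the host to the controller. All requests reach the controller first; it holds the routing configuration generated from the Ingress resources and forwards each request to the matching backend Service.

This walkthrough uses nginx-ingress.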

root@master01:~# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller NodePort 10.100.184.74 80:30080/TCP,443:30787/TCP 3d6h

ingress-nginx-controller-admission ClusterIP 10.100.216.215 443/TCP 3d6h

root@master01:~# kubectl get po -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-controller-69fbfb4bfd-zjs2w 1/1 Running 1 (9h ago) 9h

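Next, write the YAML files that expose the monitoring stack through Ingress. When writing them, pay attention to the cluster version, because the Ingress API has changed over time.

The Ingress API has gone through several versions, and their fields differ:

● extensions/v1beta1: used before 1.16

● networking.k8s.io/v1beta1: used before 1.19

● networking.k8s.io/v1: used from 1.19 onward

Both beta versions were removed in Kubernetes 1.22, so the v1.22.2 cluster used here only accepts networking.k8s.io/v1.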
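Rather than relying on the version table, the cluster can be asked which API versions it serves. This is a minimal sketch; the function name is hypothetical.

```shell
# Hypothetical helper: list the API group versions served by the cluster that
# belong to the groups which have carried the Ingress resource.
ingress_api_versions() {
  kubectl api-versions | grep -E '^(extensions|networking\.k8s\.io)/'
}
```

Usage: run `ingress_api_versions` and use the newest version it prints as the apiVersion of the Ingress manifests. No output means the cluster serves neither group.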

Write the YAML files for the services to be exposed and create the resources.

root@master01:~/ingress/monitor-ingress# cat promethues-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: prometheus-ingress
      namespace: monitoring
    spec:
      ingressClassName: nginx  # in this API version the ingress controller is selected here, not through an annotation
      rules:
      - host: prometheus.snow.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: prometheus-k8s
                port:
                  number: 9090

root@master01:~/ingress/monitor-ingress# cat grafana-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: grafana-ingress
      namespace: monitoring
    spec:
      ingressClassName: nginx
      rules:
      - host: grafana.snow.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  number: 3000

root@master01:~/ingress/monitor-ingress# cat alert-manager-ingress.yaml

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: alert-ingress
      namespace: monitoring
    spec:
      ingressClassName: nginx
      rules:
      - host: alert.snow.com
        http:
          paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: alertmanager-main
                port:
                  number: 9093

root@master01:~/ingress/monitor-ingress# kubectl apply -f promethues-ingress.yaml

ingress.networking.k8s.io/prometheus-ingress created

root@master01:~/ingress/monitor-ingress# kubectl apply -f grafana-ingress.yaml

ingress.networking.k8s.io/grafana-ingress created

root@master01:~/ingress/monitor-ingress# kubectl apply -f alert-manager-ingress.yaml

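ingress.networking.k8s.io/alert-ingress created

After the Ingress resources are created, verify them:

root@master01:~/ingress/monitor-ingress# kubectl get ingress -n monitoring

NAME CLASS HOSTS ADDRESS PORTS AGE

alert-ingress nginx alert.snow.com 10.100.184.74 80 3m42s

grafana-ingress nginx grafana.snow.com 10.100.184.74 80 14h

prometheus-ingress nginx prometheus.snow.com 10.100.184.74 80 12h

Edit the hosts file on the host machine so that the three domain names resolve to a cluster node. When accessing the services from the host machine, turn off any proxy tool first: Clash, for example, causes the local hosts entries to be ignored.

Test access in a browser using the domain names.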
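The hosts entry and a quick test that bypasses the hosts file can be sketched as follows. The node IP is not given in this post, so 192.0.2.10 in the usage line is a placeholder. Port 30080 is the HTTP NodePort of ingress-nginx-controller from the service listing earlier, and the function names are hypothetical.

```shell
# Hypothetical helper: print a hosts-file line mapping the three hostnames to one node IP.
# Argument: <node-ip>
hosts_line() {
  echo "$1 prometheus.snow.com grafana.snow.com alert.snow.com"
}

# Hypothetical helper: request a hostname through the ingress controller's HTTP
# NodePort without touching the hosts file, and print the HTTP status code.
# Arguments: <node-ip> <hostname>
probe_ingress() {
  curl -sS -o /dev/null -w '%{http_code}\n' -H "Host: $2" "http://$1:30080/"
}
```

Usage: `hosts_line 192.0.2.10 | sudo tee -a /etc/hosts` appends the entry on Linux or macOS, and `probe_ingress 192.0.2.10 grafana.snow.com` prints the status code returned through the controller. Because the controller is exposed as a NodePort, the browser URL needs the port as well, for example http://grafana.snow.com:30080.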

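PS: Everything above was tested successfully in my own lab environment. If you find a problem or something that is unclear, corrections are welcome.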