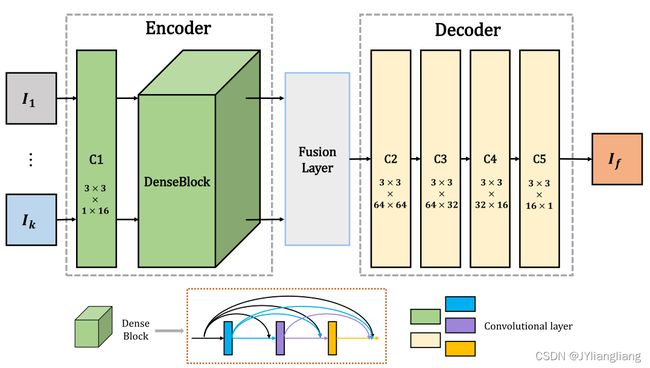

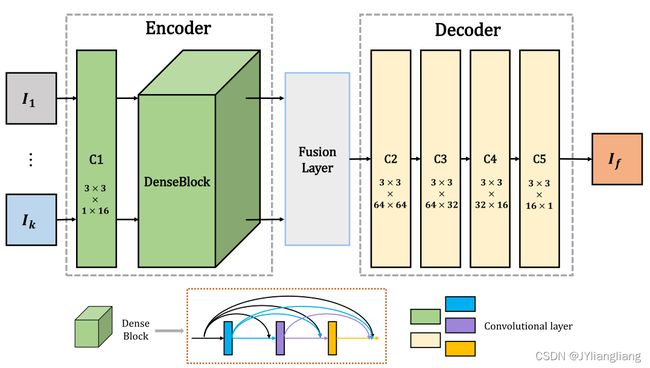

一、网络结构

1、DenseNet网络结构

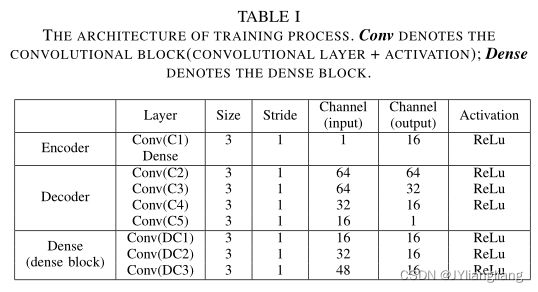

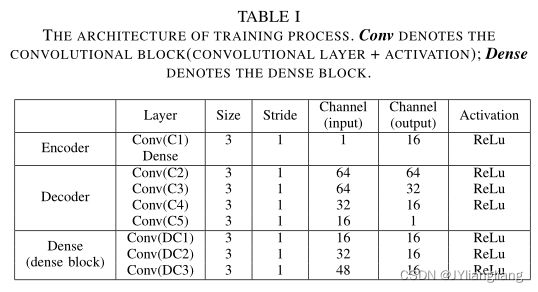

2、密集块及卷积层数据

二、代码详情

1、网络代码

import numpy as np

import torch

import torch.nn as nn

import torch.nn.functional as F

import fusion_strategy

# 卷积层

class ConvLayer(torch.nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride, is_last=False):

super(ConvLayer, self).__init__()

reflection_padding = int(np.floor(kernel_size / 2))

self.reflection_pad = nn.ReflectionPad2d(reflection_padding)

self.conv2d = nn.Conv2d(in_channels, out_channels, kernel_size, stride)

self.dropout = nn.Dropout2d(p=0.5)

self.is_last = is_last

def forward(self, x):

out = self.reflection_pad(x)

out = self.conv2d(out)

if self.is_last is False:

# out = F.normalize(out)

out = F.relu(out, inplace=True)

# out = self.dropout(out)

return out

# 密集卷积

class DenseConv2d(torch.nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride):

super(DenseConv2d, self).__init__()

self.dense_conv = ConvLayer(in_channels, out_channels, kernel_size, stride)

def forward(self, x):

out = self.dense_conv(x)

out = torch.cat([x, out], 1)

return out

# 密集块

class DenseBlock(torch.nn.Module):

def __init__(self, in_channels, kernel_size, stride):

super(DenseBlock, self).__init__()

out_channels_def = 16

denseblock = []

denseblock += [DenseConv2d(in_channels, out_channels_def, kernel_size, stride),

DenseConv2d(in_channels + out_channels_def, out_channels_def, kernel_size, stride),

DenseConv2d(in_channels + out_channels_def * 2, out_channels_def, kernel_size, stride)]

self.denseblock = nn.Sequential(*denseblock)

def forward(self, x):

out = self.denseblock(x)

return out

# 密集融合网络

class DenseFuseNet(nn.Module):

def __init__(self, input_nc=1, output_nc=1): # 输入通道、输出通道均为1

super(DenseFuseNet, self).__init__()

denseblock = DenseBlock

nb_filter = [16, 64, 32, 16]

kernel_size = 3

stride = 1

# encoder

self.conv1 = ConvLayer(input_nc, nb_filter[0], kernel_size, stride)

self.DB1 = denseblock(nb_filter[0], kernel_size, stride)

# decoder

self.conv2 = ConvLayer(nb_filter[1], nb_filter[1], kernel_size, stride)

self.conv3 = ConvLayer(nb_filter[1], nb_filter[2], kernel_size, stride)

self.conv4 = ConvLayer(nb_filter[2], nb_filter[3], kernel_size, stride)

self.conv5 = ConvLayer(nb_filter[3], output_nc, kernel_size, stride)

def encoder(self, input):

x1 = self.conv1(input)

x_DB = self.DB1(x1)

return [x_DB]

def fusion(self, en1, en2, strategy_type='addition'):

f_0 = (en1[0] + en2[0])/2

return [f_0]

def decoder(self, f_en):

x2 = self.conv2(f_en[0])

x3 = self.conv3(x2)

x4 = self.conv4(x3)

output = self.conv5(x4)

return [output]

2、训练代码

# Training DenseFuse network

# auto-encoder

import os

import sys

import time

import numpy as np

from tqdm import tqdm, trange # 进度条显示工具

import scipy.io as scio

import random

import torch

from torch.optim import Adam

from torch.autograd import Variable

import utils

from net import DenseFuseNet

from args_fusion import args

import pytorch_msssim

def main():

# os.environ["CUDA_VISIBLE_DEVICES"] = "3"

original_imgs_path = utils.list_images(args.dataset) # 根据训练图片路径获取图片

train_num = 40000

original_imgs_path = original_imgs_path[:train_num]

random.shuffle(original_imgs_path)

# for i in range(5):

i = 2

train(i, original_imgs_path)

def train(i, original_imgs_path):

batch_size = args.batch_size

# 网络图像类型 1:灰度图 3:RGB图

in_c = 1 # 输入通道 1 - gray; 3 - RGB

if in_c == 1:

img_model = 'L'

else:

img_model = 'RGB'

input_nc = in_c

output_nc = in_c

densefuse_model = DenseFuseNet(input_nc, output_nc) # 获取融合网络模型

if args.resume is not None:

print('Resuming, initializing using weight from {}.'.format(args.resume))

densefuse_model.load_state_dict(torch.load(args.resume))

print(densefuse_model)

optimizer = Adam(densefuse_model.parameters(), args.lr) # 优化器

mse_loss = torch.nn.MSELoss() # 均方差损失函数

ssim_loss = pytorch_msssim.msssim # SSIM损失函数

if args.cuda:

densefuse_model.cuda()

tbar = trange(args.epochs) # 进度条

print('Start training.....')

# 创建保存路径

temp_path_model = os.path.join(args.save_model_dir, args.ssim_path[i])

if os.path.exists(temp_path_model) is False:

os.mkdir(temp_path_model)

temp_path_loss = os.path.join(args.save_loss_dir, args.ssim_path[i])

if os.path.exists(temp_path_loss) is False:

os.mkdir(temp_path_loss)

loss_pixel = []

loss_ssim = []

loss_all = []

all_ssim_loss = 0.

all_pixel_loss = 0.

for e in tbar: # 批次

print('Epoch %d.....' % e)

# 加载训练数据

image_set_ir, batches = utils.load_dataset(original_imgs_path, batch_size)

densefuse_model.train()

count = 0

for batch in range(batches):

image_paths = image_set_ir[batch * batch_size:(batch * batch_size + batch_size)]

img = utils.get_train_images_auto(image_paths, height=args.HEIGHT, width=args.WIDTH, mode=img_model)

count += 1

optimizer.zero_grad()

img = Variable(img, requires_grad=False)

if args.cuda:

img = img.cuda()

# 获得融合图像

# encoder

en = densefuse_model.encoder(img)

# decoder

outputs = densefuse_model.decoder(en)

# resolution loss

x = Variable(img.data.clone(), requires_grad=False)

ssim_loss_value = 0.

pixel_loss_value = 0.

for output in outputs:

pixel_loss_temp = mse_loss(output, x)

ssim_loss_temp = ssim_loss(output, x, normalize=True)

ssim_loss_value += (1 - ssim_loss_temp)

pixel_loss_value += pixel_loss_temp

ssim_loss_value /= len(outputs)

pixel_loss_value /= len(outputs)

# total loss

total_loss = pixel_loss_value + args.ssim_weight[i] * ssim_loss_value

total_loss.backward()

optimizer.step()

all_ssim_loss += ssim_loss_value.item()

all_pixel_loss += pixel_loss_value.item()

if (batch + 1) % args.log_interval == 0:

mesg = "{}\tEpoch {}:\t[{}/{}]\t pixel loss: {:.6f}\t ssim loss: {:.6f}\t total: {:.6f}".format(

time.ctime(), e + 1, count, batches,

all_pixel_loss / args.log_interval,

all_ssim_loss / args.log_interval,

(args.ssim_weight[i] * all_ssim_loss + all_pixel_loss) / args.log_interval

)

tbar.set_description(mesg)

loss_pixel.append(all_pixel_loss / args.log_interval)

loss_ssim.append(all_ssim_loss / args.log_interval)

loss_all.append((args.ssim_weight[i] * all_ssim_loss + all_pixel_loss) / args.log_interval)

all_ssim_loss = 0.

all_pixel_loss = 0.

if (batch + 1) % (200 * args.log_interval) == 0:

# save model

densefuse_model.eval()

densefuse_model.cpu()

save_model_filename = args.ssim_path[i] + '/' + "Epoch_" + str(e) + "_iters_" + str(count) + "_" + \

str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + args.ssim_path[i] + ".model"

save_model_path = os.path.join(args.save_model_dir, save_model_filename)

torch.save(densefuse_model.state_dict(), save_model_path)

# save loss data

# pixel loss

loss_data_pixel = np.array(loss_pixel)

loss_filename_path = args.ssim_path[i] + '/' + "loss_pixel_epoch_" + str(

args.epochs) + "_iters_" + str(count) + "_" + str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_pixel': loss_data_pixel})

# SSIM loss

loss_data_ssim = np.array(loss_ssim)

loss_filename_path = args.ssim_path[i] + '/' + "loss_ssim_epoch_" + str(

args.epochs) + "_iters_" + str(count) + "_" + str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_ssim': loss_data_ssim})

# all loss

loss_data_total = np.array(loss_all)

loss_filename_path = args.ssim_path[i] + '/' + "loss_total_epoch_" + str(

args.epochs) + "_iters_" + str(count) + "_" + str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_total': loss_data_total})

densefuse_model.train()

densefuse_model.cuda()

tbar.set_description("\nCheckpoint, trained model saved at", save_model_path)

# pixel loss

loss_data_pixel = np.array(loss_pixel)

loss_filename_path = args.ssim_path[i] + '/' + "Final_loss_pixel_epoch_" + str(

args.epochs) + "_" + str(time.ctime()).replace(' ', '_').replace(':','_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_pixel': loss_data_pixel})

# SSIM loss

loss_data_ssim = np.array(loss_ssim)

loss_filename_path = args.ssim_path[i] + '/' + "Final_loss_ssim_epoch_" + str(

args.epochs) + "_" + str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_ssim': loss_data_ssim})

# all loss

loss_data_total = np.array(loss_all)

loss_filename_path = args.ssim_path[i] + '/' + "Final_loss_total_epoch_" + str(

args.epochs) + "_" + str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + \

args.ssim_path[i] + ".mat"

save_loss_path = os.path.join(args.save_loss_dir, loss_filename_path)

scio.savemat(save_loss_path, {'loss_total': loss_data_total})

# save model

densefuse_model.eval()

densefuse_model.cpu()

save_model_filename = args.ssim_path[i] + '/' "Final_epoch_" + str(args.epochs) + "_" + \

str(time.ctime()).replace(' ', '_').replace(':', '_') + "_" + args.ssim_path[i] + ".model"

save_model_path = os.path.join(args.save_model_dir, save_model_filename)

torch.save(densefuse_model.state_dict(), save_model_path)

print("\nDone, trained model saved at", save_model_path)

if __name__ == "__main__":

main()

3、测试代码

# test phase

import torch

from torch.autograd import Variable

from net import DenseFuseNet

import utils

from args_fusion import args

import numpy as np

import time

import cv2

import os

def load_model(path, input_nc, output_nc):

nest_model = DenseFuseNet(input_nc, output_nc)

nest_model.load_state_dict(torch.load(path))

para = sum([np.prod(list(p.size())) for p in nest_model.parameters()])

type_size = 4

print('Model {} : params: {:4f}M'.format(nest_model._get_name(), para * type_size / 1000 / 1000))

nest_model.eval()

nest_model.cuda()

return nest_model

def _generate_fusion_image(model, strategy_type, img1, img2):

# encoder

# test = torch.unsqueeze(img_ir[:, i, :, :], 1)

en_r = model.encoder(img1)

# vision_features(en_r, 'ir')

en_v = model.encoder(img2)

# vision_features(en_v, 'vi')

# fusion

f = model.fusion(en_r, en_v, strategy_type=strategy_type)

# f = en_v

# decoder

img_fusion = model.decoder(f)

return img_fusion[0]

def run_demo(model, infrared_path, visible_path, output_path_root, index, fusion_type, network_type, strategy_type, ssim_weight_str, mode):

# if mode == 'L':

ir_img = utils.get_test_images(infrared_path, height=None, width=None, mode=mode)

vis_img = utils.get_test_images(visible_path, height=None, width=None, mode=mode)

# else:

# img_ir = utils.tensor_load_rgbimage(infrared_path)

# img_ir = img_ir.unsqueeze(0).float()

# img_vi = utils.tensor_load_rgbimage(visible_path)

# img_vi = img_vi.unsqueeze(0).float()

# dim = img_ir.shape

if args.cuda:

ir_img = ir_img.cuda()

vis_img = vis_img.cuda()

ir_img = Variable(ir_img, requires_grad=False)

vis_img = Variable(vis_img, requires_grad=False)

dimension = ir_img.size()

img_fusion = _generate_fusion_image(model, strategy_type, ir_img, vis_img)

# multi outputs ##############################################

file_name = 'fusion_' + fusion_type + '_' + str(index) + '_network_' + network_type + '_' + strategy_type + '_' + ssim_weight_str + '.png'

output_path = output_path_root + file_name

# # save images

# utils.save_image_test(img_fusion, output_path)

# utils.tensor_save_rgbimage(img_fusion, output_path)

if args.cuda:

img = img_fusion.cpu().clamp(0, 255).data[0].numpy()

else:

img = img_fusion.clamp(0, 255).data[0].numpy()

img = img.transpose(1, 2, 0).astype('uint8')

utils.save_images(output_path, img)

print(output_path)

def vision_features(feature_maps, img_type):

count = 0

for features in feature_maps:

count += 1

for index in range(features.size(1)):

file_name = 'feature_maps_' + img_type + '_level_' + str(count) + '_channel_' + str(index) + '.png'

output_path = 'outputs/feature_maps/' + file_name

map = features[:, index, :, :].view(1, 1, features.size(2), features.size(3))

map = map*255

# save images

utils.save_image_test(map, output_path)

def main():

# run demo

# test_path = "images/test-RGB/"

test_path = "images/IV_images/"

network_type = 'densefuse'

fusion_type = 'auto' # auto, fusion_layer, fusion_all

strategy_type_list = ['addition', 'attention_weight'] # addition, attention_weight, attention_enhance, adain_fusion, channel_fusion, saliency_mask

output_path = './outputs/'

strategy_type = strategy_type_list[0]

if os.path.exists(output_path) is False:

os.mkdir(output_path)

# in_c = 3 for RGB images; in_c = 1 for gray images

in_c = 1

if in_c == 1:

out_c = in_c

mode = 'L'

model_path = args.model_path_gray

else:

out_c = in_c

mode = 'RGB'

model_path = args.model_path_rgb

with torch.no_grad():

print('SSIM weight ----- ' + args.ssim_path[2])

ssim_weight_str = args.ssim_path[2]

model = load_model(model_path, in_c, out_c)

for i in range(1):

index = i + 1

infrared_path = test_path + 'IR' + str(index) + '.jpg'

visible_path = test_path + 'VIS' + str(index) + '.jpg'

run_demo(model, infrared_path, visible_path, output_path, index, fusion_type, network_type, strategy_type, ssim_weight_str, mode)

print('Done......')

if __name__ == '__main__':

main()