Cancer Diagnosis with the KNN Algorithm

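The k-nearest neighbors algorithm (KNN, K-NearestNeighbor) is one of the classification methods used in data mining. "K nearest neighbors" means exactly what it says: each sample can be represented by the K samples closest to it. KNN assigns a class to every record in a dataset, so it can be applied to cancer classification.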
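The idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the scikit-learn implementation used later; the function `knn_predict` and the toy arrays `X_toy` and `y_toy` are hypothetical names and made-up values.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x, k=3):
    """Classify one sample x by majority vote among its k nearest training samples."""
    # Euclidean distance from x to every training sample
    distances = np.sqrt(((X_train - x) ** 2).sum(axis=1))
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    # Most common label among those neighbours
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data: two features, labels 'B' (benign) and 'M' (malignant)
X_toy = np.array([[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
                  [5.0, 5.0], [5.2, 4.8], [4.9, 5.1]])
y_toy = np.array(['B', 'B', 'B', 'M', 'M', 'M'])

pred_low = knn_predict(X_toy, y_toy, np.array([1.1, 1.0]), k=3)
pred_high = knn_predict(X_toy, y_toy, np.array([5.1, 5.0]), k=3)
print(pred_low, pred_high)
```

A new point near the first cluster gets the label of that cluster, and likewise for the second. The choice of `k` and of the distance function are the parameters tuned later in this post.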

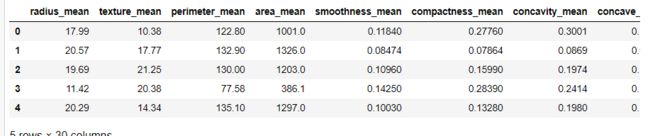

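The data describe breast cancer cases. The attributes are features of the breast cell nuclei (radius, texture, smoothness, area, and so on), all of which can be measured with instruments. A doctor can judge from these features whether a patient has the disease, and an algorithm such as KNN can make the same judgment from the same features.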

Import packages

import numpy as np

import pandas as pd

from pandas import Series,DataFrame

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import train_test_split

# grid: a grid of parameter values; search: search over it; cv: cross-validation

# searches for the best parameter values for the algorithm

from sklearn.model_selection import GridSearchCV

from sklearn.metrics import accuracy_score

Load the data

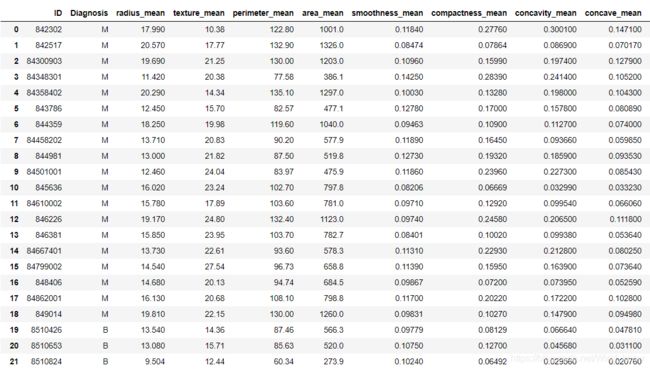

cancer = pd.read_csv('./cancer.csv',sep = '\t')  # sep sets the field separator (a tab here)

cancer

Drop data with no predictive value

cancer.drop('ID',axis = 1,inplace=True)  # inplace=True modifies the original object instead of creating a new one

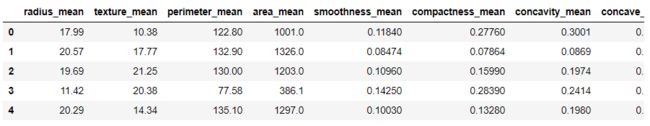

X = cancer.iloc[:,1:]  # all columns from index 1 to the end

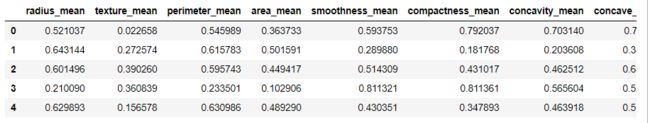

X.head()

Target values

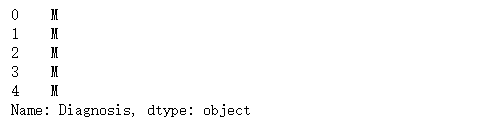

y = cancer['Diagnosis']

y.head()

M means malignant, B means benign.

Split the data

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size = 0.2)
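The call above draws a different random split on every run. As a hedged sketch, `train_test_split` also accepts `random_state` (to make the split reproducible) and `stratify` (to keep the class proportions equal in both parts). The arrays `X_demo` and `y_demo` below are made-up stand-ins, not the cancer data.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data: 100 samples, 30 of them labelled 'M'
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(100, 4))
y_demo = np.array(['M'] * 30 + ['B'] * 70)

# random_state fixes the shuffle; stratify keeps the B/M ratio in both parts
X_tr, X_te, y_tr, y_te = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=42, stratify=y_demo)

n_malignant_test = int((y_te == 'M').sum())
print(X_tr.shape, X_te.shape, n_malignant_test)
```

With 30% malignant samples overall, the 20-sample test part receives 6 malignant samples, so accuracy on the test part is measured on the same class mix as the training part.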

Use GridSearchCV to search for the best parameters

knn = KNeighborsClassifier()

params = {'n_neighbors':[i for i in range(1,30)],

'weights':['distance','uniform'],

'p':[1,2]}

# similar in spirit to cross_val_score

gcv = GridSearchCV(knn,params,scoring='accuracy',cv = 6)

gcv.fit(X_train,y_train)

View the best parameter combination found by GridSearchCV

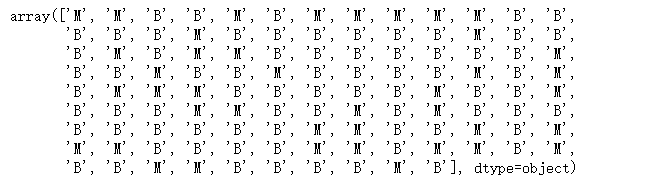
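GridSearchCV scores every parameter combination in the grid by cross-validation and keeps the one with the best mean score. The sketch below illustrates this on synthetic data by comparing a small grid search with an explicit loop over `cross_val_score`; the names `X_demo`, `y_demo`, `gcv_demo` and `manual_scores` are hypothetical, and the data come from `make_classification`, not from the cancer file.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic stand-in data (not the cancer dataset)
X_demo, y_demo = make_classification(n_samples=200, n_features=10, random_state=0)

ks = [1, 3, 5, 7, 9]

# Grid search over n_neighbors only, to keep the comparison small
gcv_demo = GridSearchCV(KNeighborsClassifier(), {'n_neighbors': ks},
                        scoring='accuracy', cv=6)
gcv_demo.fit(X_demo, y_demo)

# The same search written as an explicit loop over cross_val_score
manual_scores = {
    k: cross_val_score(KNeighborsClassifier(n_neighbors=k), X_demo, y_demo,
                       scoring='accuracy', cv=6).mean()
    for k in ks
}
best_k_manual = max(manual_scores, key=manual_scores.get)

print(gcv_demo.best_params_, gcv_demo.best_score_)
print(best_k_manual, manual_scores[best_k_manual])
```

Both approaches use the same six folds here, so the best mean accuracy agrees. GridSearchCV additionally refits the best model on all the data passed to `fit`, which is why `predict` can be called on it directly.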

gcv.best_params_

Predicted values

y_ = gcv.predict(X_test)

y_

Accuracy

accuracy_score(y_test,y_)

Cross-tabulation

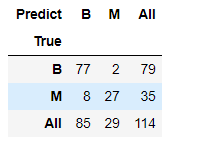

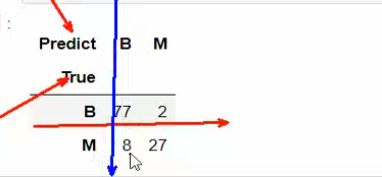

pd.crosstab(index = y_test,columns=y_,rownames=['True'],colnames=['Predict'], margins=True)  # rows are true values, columns are predicted values; margins=True adds row and column totals

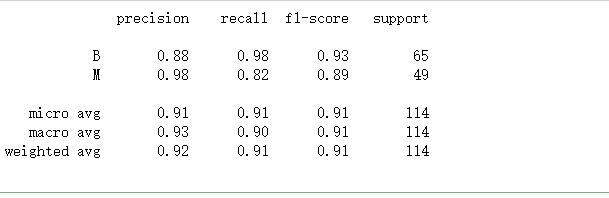

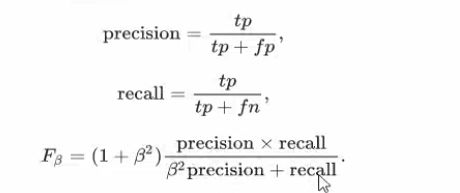

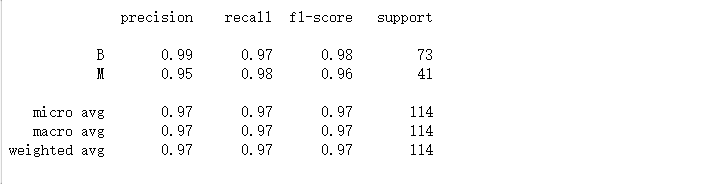
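The counts in such a table are all that is needed to compute precision, recall and the F1 score. The following sketch works through the arithmetic on made-up labels (`y_true` and `y_pred` are hypothetical, not results from the cancer data), treating 'M' as the positive class, and checks the hand-computed values against scikit-learn.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_score, recall_score, f1_score

# Hypothetical labels, for illustration only
y_true = np.array(['M', 'M', 'M', 'M', 'B', 'B', 'B', 'B', 'B', 'B'])
y_pred = np.array(['M', 'M', 'M', 'B', 'B', 'B', 'B', 'B', 'M', 'B'])

# Rows are true labels, columns are predicted labels, in the order given by `labels`
cm = confusion_matrix(y_true, y_pred, labels=['B', 'M'])
tn, fp, fn, tp = cm.ravel()  # 'M' (malignant) is treated as the positive class

precision = tp / (tp + fp)   # of the samples predicted 'M', how many really are 'M'
recall = tp / (tp + fn)      # of the samples that really are 'M', how many were found
f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two

print(cm)
print(precision, recall, f1)
print(precision_score(y_true, y_pred, pos_label='M'),
      recall_score(y_true, y_pred, pos_label='M'),
      f1_score(y_true, y_pred, pos_label='M'))
```

For a diagnosis task, recall on the malignant class is usually the number to watch, because a false negative is a missed cancer.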

classification_report output

# precision, recall, and F1 score (the harmonic mean of precision and recall)

from sklearn.metrics import classification_report

print(classification_report(y_test,y_,target_names = ['B','M']))# 真实的 以行为单位

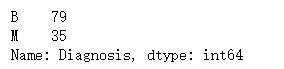

y_test.value_counts()# 预测 以列为单位

Series(y_).value_counts()混合矩阵

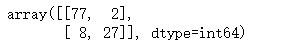

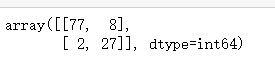

from sklearn.metrics import confusion_matrix

confusion_matrix(y_test,y_)

Improving accuracy, precision, and recall

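Normalization

Some feature values are large, so we normalize the data. KNN is distance-based, and without normalization the features with the largest values dominate the distance.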
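A small numeric sketch shows the effect. The three samples below are made up: column 0 stands for an area-like feature with values in the hundreds, column 1 for a smoothness-like feature with values around 0.1.

```python
import numpy as np

# Hypothetical samples: [area-like feature, smoothness-like feature]
samples = np.array([[500.0, 0.10],
                    [510.0, 0.20],
                    [700.0, 0.10]])


def euclidean(a, b):
    """Euclidean distance between two feature vectors."""
    return np.sqrt(((a - b) ** 2).sum())


# Raw distances from sample 0: the large-valued column decides almost everything
d_raw_01 = euclidean(samples[0], samples[1])
d_raw_02 = euclidean(samples[0], samples[2])

# Min-max scale each column to [0, 1], then measure again
col_min = samples.min(axis=0)
col_max = samples.max(axis=0)
scaled = (samples - col_min) / (col_max - col_min)
d_scaled_01 = euclidean(scaled[0], scaled[1])
d_scaled_02 = euclidean(scaled[0], scaled[2])

print(d_raw_01, d_raw_02)
print(d_scaled_01, d_scaled_02)
```

On the raw values, sample 1 looks about twenty times closer to sample 0 than sample 2 does, even though its second feature is at the opposite end of the range. After scaling, both features contribute on the same footing and the two distances are nearly equal.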

Hand-written normalization

# min-max normalization

X_norm1 = (X - X.min())/(X.max() - X.min())

X_norm1.head()

Training on the normalized data

X_train,X_test,y_train,y_test = train_test_split(X_norm1,y,test_size = 0.2)

knn = KNeighborsClassifier()

params = {'n_neighbors':[i for i in range(1,30)],

'weights':['uniform','distance'],

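'p':[1,2]}  # p=1 is Manhattan distance, p=2 is Euclidean distance

gcv = GridSearchCV(knn,params,scoring='accuracy',cv = 6)

gcv.fit(X_train,y_train)

# predict with the newly fitted model before scoring

y_ = gcv.predict(X_test)

accuracy_score(y_test,y_)

In this run, accuracy improved after normalization.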

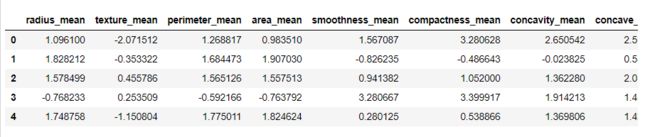
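Because each call to `train_test_split` above draws a new random split, accuracies from different runs are not directly comparable. A fairer comparison keeps one fixed split and changes only the scaling. The sketch below does this with scikit-learn's built-in `load_breast_cancer` data as a stand-in (an assumption: it is not loaded from `cancer.csv`) and with default KNN parameters rather than a grid search.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import MinMaxScaler

# Stand-in dataset bundled with scikit-learn (not the cancer.csv file)
X_bc, y_bc = load_breast_cancer(return_X_y=True)

# One fixed split, shared by both models
X_tr, X_te, y_tr, y_te = train_test_split(X_bc, y_bc, test_size=0.2, random_state=0)

# Model trained on the raw features
knn_raw = KNeighborsClassifier().fit(X_tr, y_tr)
acc_raw = accuracy_score(y_te, knn_raw.predict(X_te))

# Model trained on min-max scaled features (scaler fitted on the training part only)
scaler = MinMaxScaler().fit(X_tr)
knn_scaled = KNeighborsClassifier().fit(scaler.transform(X_tr), y_tr)
acc_scaled = accuracy_score(y_te, knn_scaled.predict(scaler.transform(X_te)))

print(acc_raw, acc_scaled)
```

Fitting the scaler on the training part only is a design choice of this sketch: it keeps the test samples from influencing the minimum and maximum used for scaling.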

Second normalization method: Z-score normalization

X_norm2 = (X - X.mean())/X.std()

X_norm2.head()

X_train,X_test,y_train,y_test = train_test_split(X_norm2,y,test_size = 0.2)

knn = KNeighborsClassifier()

params = {'n_neighbors':[i for i in range(1,30)],

'weights':['uniform','distance'],

'p':[1,2]}

gcv = GridSearchCV(knn,params,scoring='accuracy',cv = 6)

gcv.fit(X_train,y_train)

y_ = gcv.predict(X_test)

accuracy_score(y_test,y_)

Third normalization method

from sklearn.preprocessing import MinMaxScaler,StandardScaler

# MinMaxScaler gives the same result as the hand-written min-max normalization

mms = MinMaxScaler()

mms.fit(X)

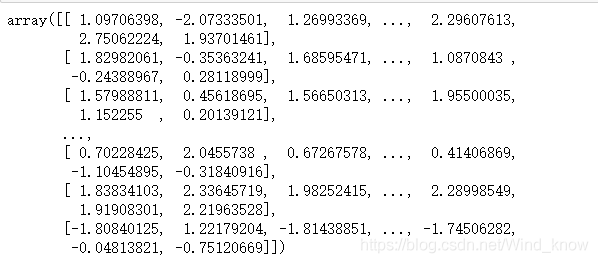

X2 = mms.transform(X)

X2.round(6)

The sklearn normalization gives the same result as the hand-written one.
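The equivalence can be checked numerically instead of by eye. A minimal sketch on a made-up feature table (`X_demo` and its column values are hypothetical):

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler

# Hypothetical feature table
X_demo = pd.DataFrame({'radius': [10.0, 15.0, 20.0, 12.5],
                       'area': [300.0, 700.0, 1200.0, 450.0]})

# Hand-written min-max normalization, column by column
manual = (X_demo - X_demo.min()) / (X_demo.max() - X_demo.min())

# The same operation through scikit-learn (returns a NumPy array)
scaled = MinMaxScaler().fit_transform(X_demo)

same = np.allclose(manual.to_numpy(), scaled)
print(same)
```

`np.allclose` is used rather than `==` because the two routes do the arithmetic in a different order and may differ in the last floating-point digits.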

Fourth normalization method: StandardScaler

ss = StandardScaler()

X3 = ss.fit_transform(X)

X3
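StandardScaler performs Z-score normalization, but its output is not bit-for-bit the same as the hand-written `(X - X.mean())/X.std()`: pandas' `std()` defaults to the sample standard deviation (`ddof=1`), while StandardScaler uses the population standard deviation (`ddof=0`). The sketch below shows the difference on a made-up table (`X_demo` is hypothetical).

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical feature table
X_demo = pd.DataFrame({'radius': [10.0, 15.0, 20.0, 12.5],
                       'area': [300.0, 700.0, 1200.0, 450.0]})

# pandas default: sample standard deviation (ddof=1)
z_pandas_default = (X_demo - X_demo.mean()) / X_demo.std()
# population standard deviation (ddof=0)
z_pandas_ddof0 = (X_demo - X_demo.mean()) / X_demo.std(ddof=0)
# scikit-learn
z_sklearn = StandardScaler().fit_transform(X_demo)

matches_default = np.allclose(z_pandas_default.to_numpy(), z_sklearn)
matches_ddof0 = np.allclose(z_pandas_ddof0.to_numpy(), z_sklearn)
print(matches_default, matches_ddof0)
```

On a dataset with hundreds of rows the two versions differ only slightly, so the choice rarely changes which neighbours KNN finds, but it explains why the numbers in `X3` and `X_norm2` do not match exactly.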