YOLOv5 数据增强data augmentation (七)

1.数据增强介绍

当我们训练集中的图片比较少时,容易造成网络的过拟合。为了避免这种情况一般我们要经过图像处理的方法,人为地去增加些图片数据,这样就会增加我们可用图片的数目,减少过拟合的可能性。

- 可以通过像素级的剪裁(Crop)、旋转(Rotation)、反转(Flip)、色调(Hue)、饱和度(Saturation)、曝光量(Exposure)、宽高比(Aspect)来做数据增强。

- 另外还可以在图片级数据增强,比如MixUp、CurMix、Mosaic、Blur

2.图片级像素增强

![]()

- Mixup: 如图在一张狗的图片中,叠加一只猫的图片,这样经过两幅图片的加权运算可以看到,可以看到这幅新的图片上既有狗又有猫。

- Cutout: 如图,将图片中某一块区域,填充为某种颜色,比如图中填充为黑色

- CutMix: 如图,将图片某一块区域剪裁掉,然后用另外一幅图像来填充剪裁区域

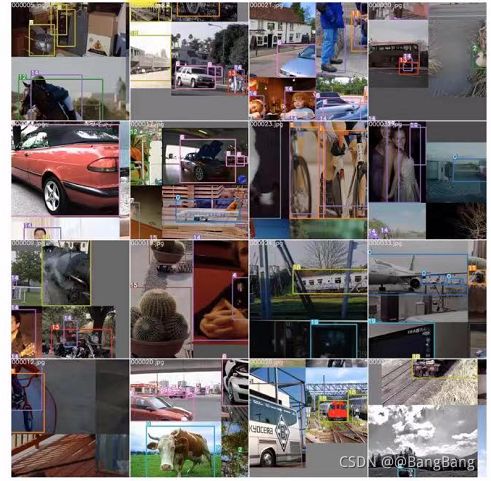

- Mosaic 数据增强:它是把四副图片拼成一幅大图,在YOLOv5中数据增强就是采用Mosaic方法,该方法由YOLOv5作者提出。如下图所示

YOLOv5在训练过程中,将4副小图拼成一幅大图,4副小图在拼接时做了随机的处理,所以4副小图的大小形状是不一样的。 - 我们可以通过

train.py --rect去省略掉mosaic --rect,通过对整个数据集的宽高比进行排序,然后对相似的宽高比例图片组合在一起。- 按宽高比排序的好处是,可以降低FLOPS运算,加速数据处理

3. 代码讲解

3.1 mosaic 代码

代码位置yolov5-3.1 > utils > datasets.py

def load_mosaic(self, index):

# loads images in a mosaic

labels4 = []

s = self.img_size

#随机取mosaic中心点

yc, xc = [int(random.uniform(-x, 2 * s + x)) for x in self.mosaic_border] # mosaic center x, y

#随机取其他三张图片的索引

indices = [index] + [random.randint(0, len(self.labels) - 1) for _ in range(3)] # 3 additional image indices

for i, index in enumerate(indices):

# Load image

# load_image 加载图片并根据设定的输入大小与图片原大小的比例ratio进行resize

img, _, (h, w) = load_image(self, index)

# 初始化大图 img4

if i == 0: # top left(左上角)

img4 = np.full((s * 2, s * 2, img.shape[2]), 114, dtype=np.uint8) # base image with 4 tiles

# 设置大图上的位置(左上角)

x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc # xmin, ymin, xmax, ymax (large image)

# 选取小图上的位置

x1b, y1b, x2b, y2b = w - (x2a - x1a), h - (y2a - y1a), w, h # xmin, ymin, xmax, ymax (small image)

elif i == 1: # top right

x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, s * 2), yc

x1b, y1b, x2b, y2b = 0, h - (y2a - y1a), min(w, x2a - x1a), h

elif i == 2: # bottom left

x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(s * 2, yc + h)

x1b, y1b, x2b, y2b = w - (x2a - x1a), 0, w, min(y2a - y1a, h)

elif i == 3: # bottom right

x1a, y1a, x2a, y2a = xc, yc, min(xc + w, s * 2), min(s * 2, yc + h)

x1b, y1b, x2b, y2b = 0, 0, min(w, x2a - x1a), min(y2a - y1a, h)

img4[y1a:y2a, x1a:x2a] = img[y1b:y2b, x1b:x2b] # img4[ymin:ymax, xmin:xmax]

#计算小图到大图上时所产生的偏移,用来计算mosaic增强后的标签的位置

padw = x1a - x1b

padh = y1a - y1b

# Labels

x = self.labels[index]

labels = x.copy()

# 根据偏移量更新目标框位置

if x.size > 0: # Normalized xywh to pixel xyxy format

labels[:, 1] = w * (x[:, 1] - x[:, 3] / 2) + padw

labels[:, 2] = h * (x[:, 2] - x[:, 4] / 2) + padh

labels[:, 3] = w * (x[:, 1] + x[:, 3] / 2) + padw

labels[:, 4] = h * (x[:, 2] + x[:, 4] / 2) + padh

labels4.append(labels)

# Concat/clip labels

if len(labels4):

labels4 = np.concatenate(labels4, 0)

np.clip(labels4[:, 1:], 0, 2 * s, out=labels4[:, 1:]) # use with random_perspective

# img4, labels4 = replicate(img4, labels4) # replicate

# Augment

# 进行mosaic的时候将四张图片整合到一起之后shape为[2*img_size,2*img_size]

# 对mosaic 整合的图片进行随机旋转、平移、缩放、裁剪,并resize为输入大小img_size

img4, labels4 = random_perspective(img4, labels4,

degrees=self.hyp['degrees'],

translate=self.hyp['translate'],

scale=self.hyp['scale'],

shear=self.hyp['shear'],

perspective=self.hyp['perspective'],

border=self.mosaic_border) # border to remove

return img4, labels4

3.2 load_img 代码

# load_image加载图片并根据设定的输入大小与图片原大小的比例ratio进行resize

def load_image(self, index):

# loads 1 image from dataset, returns img, original hw, resized hw

img = self.imgs[index]

if img is None: # not cached

path = self.img_files[index]

img = cv2.imread(path) # BGR

assert img is not None, 'Image Not Found ' + path

h0, w0 = img.shape[:2] # orig hw

r = self.img_size / max(h0, w0) # resize image to img_size

if r != 1: # always resize down, only resize up if training with augmentation

interp = cv2.INTER_AREA if r < 1 and not self.augment else cv2.INTER_LINEAR

img = cv2.resize(img, (int(w0 * r), int(h0 * r)), interpolation=interp)

return img, (h0, w0), img.shape[:2] # img, hw_original, hw_resized

else:

return self.imgs[index], self.img_hw0[index], self.img_hw[index] # img, hw_original, hw_resized

3.3 random_perspective

#随机透视变换

#计算方法为坐标向量和变换矩阵的乘积

def random_perspective(img, targets=(), degrees=10, translate=.1, scale=.1, shear=10, perspective=0.0, border=(0, 0)):

# torchvision.transforms.RandomAffine(degrees=(-10, 10), translate=(.1, .1), scale=(.9, 1.1), shear=(-10, 10))

# targets = [cls, xyxy]

height = img.shape[0] + border[0] * 2 # shape(h,w,c)

width = img.shape[1] + border[1] * 2

# Center

C = np.eye(3)

C[0, 2] = -img.shape[1] / 2 # x translation (pixels)

C[1, 2] = -img.shape[0] / 2 # y translation (pixels)

# Perspective

P = np.eye(3)

P[2, 0] = random.uniform(-perspective, perspective) # x perspective (about y)

P[2, 1] = random.uniform(-perspective, perspective) # y perspective (about x)

# Rotation and Scale

R = np.eye(3)

a = random.uniform(-degrees, degrees)

# a += random.choice([-180, -90, 0, 90]) # add 90deg rotations to small rotations

s = random.uniform(1 - scale, 1 + scale)

# s = 2 ** random.uniform(-scale, scale)

R[:2] = cv2.getRotationMatrix2D(angle=a, center=(0, 0), scale=s)

# Shear

S = np.eye(3)

S[0, 1] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # x shear (deg)

S[1, 0] = math.tan(random.uniform(-shear, shear) * math.pi / 180) # y shear (deg)

# Translation

T = np.eye(3)

T[0, 2] = random.uniform(0.5 - translate, 0.5 + translate) * width # x translation (pixels)

T[1, 2] = random.uniform(0.5 - translate, 0.5 + translate) * height # y translation (pixels)

# @表示矩阵乘法运算

# Combined rotation matrix

M = T @ S @ R @ P @ C # order of operations (right to left) is IMPORTANT

if (border[0] != 0) or (border[1] != 0) or (M != np.eye(3)).any(): # image changed

if perspective:

#透视变换函数,可保持直线不变形,但是平行线可能不再平行

img = cv2.warpPerspective(img, M, dsize=(width, height), borderValue=(114, 114, 114))

else: # affine

# 仿射变换函数,可实现旋转、平移、缩放;变换后的平行线依旧平行

img = cv2.warpAffine(img, M[:2], dsize=(width, height), borderValue=(114, 114, 114))

# Visualize

# import matplotlib.pyplot as plt

# ax = plt.subplots(1, 2, figsize=(12, 6))[1].ravel()

# ax[0].imshow(img[:, :, ::-1]) # base

# ax[1].imshow(img2[:, :, ::-1]) # warped

# Transform label coordinates

n = len(targets)

if n:

# warp points

xy = np.ones((n * 4, 3))

xy[:, :2] = targets[:, [1, 2, 3, 4, 1, 4, 3, 2]].reshape(n * 4, 2) # x1y1, x2y2, x1y2, x2y1

xy = xy @ M.T # transform

if perspective:

xy = (xy[:, :2] / xy[:, 2:3]).reshape(n, 8) # rescale

else: # affine

xy = xy[:, :2].reshape(n, 8)

# create new boxes

x = xy[:, [0, 2, 4, 6]]

y = xy[:, [1, 3, 5, 7]]

xy = np.concatenate((x.min(1), y.min(1), x.max(1), y.max(1))).reshape(4, n).T

# # apply angle-based reduction of bounding boxes

# radians = a * math.pi / 180

# reduction = max(abs(math.sin(radians)), abs(math.cos(radians))) ** 0.5

# x = (xy[:, 2] + xy[:, 0]) / 2

# y = (xy[:, 3] + xy[:, 1]) / 2

# w = (xy[:, 2] - xy[:, 0]) * reduction

# h = (xy[:, 3] - xy[:, 1]) * reduction

# xy = np.concatenate((x - w / 2, y - h / 2, x + w / 2, y + h / 2)).reshape(4, n).T

# clip boxes

# 去除进行上面一系列操作后被裁剪过小的框;reject warped points outside of image

xy[:, [0, 2]] = xy[:, [0, 2]].clip(0, width)

xy[:, [1, 3]] = xy[:, [1, 3]].clip(0, height)

# filter candidates

i = box_candidates(box1=targets[:, 1:5].T * s, box2=xy.T)

targets = targets[i]

targets[:, 1:5] = xy[i]

return img, targets