Substation Fire Detection Project (TF2)

Contents

1. 项目背景

2. 项目研究数据集介绍(变电站火灾检测图像数据集)

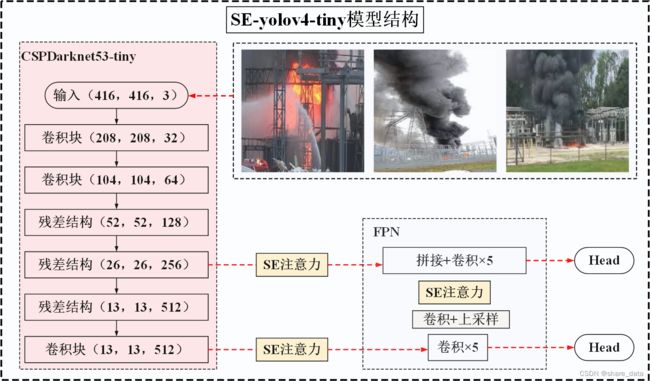

3. 目标检测模型介绍(SE改进的YOLOv4-tiny模型)

4. 模型训练及测试

1. Project Background

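Our daily lives depend heavily on electric power. Substations are a key link in the transmission and distribution system, so an accident at a substation, such as a fire or an explosion, can seriously affect the entire power supply system. Although utilities put many fire-prevention measures in place at substations, fires and explosions still occur from time to time. A substation fire is an electrical fire: once it starts, it spreads extremely fast and can destroy the equipment of an entire power system within moments. Such incidents can be prevented and avoided through regular fire-safety inspections of electrical equipment. For substations in particular, which contain a large amount of electrical equipment, routine patrol inspections and staff training in fire safety are indispensable.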

Fire at a substation in Iraq

2. Dataset (Substation Fire Detection Image Dataset)

Sample images from the substation fire detection dataset

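This project collected more than 3,600 substation fire images, all with a resolution of 768×768, and annotated two target classes with LabelImg: 1) fire; 2) smoke (烟火). The images are randomly split 9:1 into a trainval set and a test set, and the trainval set is split 9:1 again into train and val, so roughly 81% of the images are used for training, 9% for validation and 10% for testing. The label preprocessing and splitting script is as follows: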

```python
import os
import random
import xml.etree.ElementTree as ET

import numpy as np

from utils.utils import get_classes  # project helper: reads the class names from classes_path

# 0: generate both the ImageSets split files and 2007_train.txt / 2007_val.txt
# 1: only generate the split files in VOCdevkit/VOC2007/ImageSets/Main
# 2: only generate 2007_train.txt / 2007_val.txt for training
annotation_mode = 0

classes_path = 'model_data/defect.txt'  # one class name per line
trainval_percent = 0.9  # (train + val) : test = 9 : 1
train_percent = 0.9     # train : val = 9 : 1 inside trainval
VOCdevkit_path = 'VOCdevkit'
VOCdevkit_sets = [('2007', 'train'), ('2007', 'val')]

classes, _ = get_classes(classes_path)

photo_nums = np.zeros(len(VOCdevkit_sets))  # number of images per generated set
nums = np.zeros(len(classes))               # number of objects per class


def convert_annotation(year, image_id, list_file):
    """Append ' xmin,ymin,xmax,ymax,cls_id' for every usable object in one VOC XML file."""
    xml_path = os.path.join(VOCdevkit_path, 'VOC%s/Annotations/%s.xml' % (year, image_id))
    with open(xml_path, encoding='utf-8') as in_file:
        root = ET.parse(in_file).getroot()

    for obj in root.iter('object'):
        difficult = 0
        if obj.find('difficult') is not None:
            difficult = obj.find('difficult').text
        cls = obj.find('name').text
        # Skip classes not listed in classes_path and objects marked as difficult.
        if cls not in classes or int(difficult) == 1:
            continue
        cls_id = classes.index(cls)
        xmlbox = obj.find('bndbox')
        b = tuple(int(float(xmlbox.find(tag).text)) for tag in ('xmin', 'ymin', 'xmax', 'ymax'))
        list_file.write(" " + ",".join(str(a) for a in b) + ',' + str(cls_id))
        nums[cls_id] += 1


def printTable(List1, List2):
    """Print the columns in List1 side by side, right-aligned to the widths in List2."""
    for i in range(len(List1[0])):
        print("|", end=' ')
        for j in range(len(List1)):
            print(List1[j][i].rjust(int(List2[j])), end=' ')
            print("|", end=' ')
        print()


if __name__ == "__main__":
    random.seed(0)  # make the random split reproducible
    if " " in os.path.abspath(VOCdevkit_path):
        raise ValueError("The dataset folder path and image names must not contain spaces.")

    if annotation_mode == 0 or annotation_mode == 1:
        print("Generate txt in ImageSets.")
        xmlfilepath = os.path.join(VOCdevkit_path, 'VOC2007/Annotations')
        saveBasePath = os.path.join(VOCdevkit_path, 'VOC2007/ImageSets/Main')
        total_xml = [f for f in os.listdir(xmlfilepath) if f.endswith(".xml")]

        num = len(total_xml)
        indices = range(num)  # renamed from `list` to avoid shadowing the builtin
        tv = int(num * trainval_percent)
        tr = int(tv * train_percent)
        trainval = random.sample(indices, tv)
        train = random.sample(trainval, tr)
        trainval_set, train_set = set(trainval), set(train)  # fast membership tests
        print("train and val size", tv)
        print("train size", tr)

        with open(os.path.join(saveBasePath, 'trainval.txt'), 'w') as ftrainval, \
             open(os.path.join(saveBasePath, 'test.txt'), 'w') as ftest, \
             open(os.path.join(saveBasePath, 'train.txt'), 'w') as ftrain, \
             open(os.path.join(saveBasePath, 'val.txt'), 'w') as fval:
            for i in indices:
                name = total_xml[i][:-4] + '\n'  # strip the ".xml" extension
                if i in trainval_set:
                    ftrainval.write(name)
                    if i in train_set:
                        ftrain.write(name)
                    else:
                        fval.write(name)
                else:
                    ftest.write(name)
        print("Generate txt in ImageSets done.")

    if annotation_mode == 0 or annotation_mode == 2:
        print("Generate 2007_train.txt and 2007_val.txt for train.")
        for type_index, (year, image_set) in enumerate(VOCdevkit_sets):
            ids_path = os.path.join(VOCdevkit_path, 'VOC%s/ImageSets/Main/%s.txt' % (year, image_set))
            with open(ids_path, encoding='utf-8') as f:
                image_ids = f.read().strip().split()
            with open('%s_%s.txt' % (year, image_set), 'w', encoding='utf-8') as list_file:
                for image_id in image_ids:
                    # One line per image: absolute image path followed by its boxes.
                    list_file.write('%s/VOC%s/JPEGImages/%s.jpg' % (os.path.abspath(VOCdevkit_path), year, image_id))
                    convert_annotation(year, image_id, list_file)
                    list_file.write('\n')
            photo_nums[type_index] = len(image_ids)
        print("Generate 2007_train.txt and 2007_val.txt for train done.")

        # Print the number of annotated objects per class as a small table.
        str_nums = [str(int(x)) for x in nums]
        tableData = [classes, str_nums]
        colWidths = [max((len(s) for s in column), default=0) for column in tableData]
        printTable(tableData, colWidths)
```
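Each line of the generated `2007_train.txt` / `2007_val.txt` holds an absolute image path followed by space-separated `xmin,ymin,xmax,ymax,class_id` groups, as written by `convert_annotation` above. The small parser below is a sketch for sanity-checking those files before training; `parse_annotation_line` is a hypothetical helper (not part of the project), and it assumes the image path itself contains no spaces.

```python
from typing import List, Tuple

# (xmin, ymin, xmax, ymax, class_id), matching what convert_annotation writes
Box = Tuple[int, int, int, int, int]


def parse_annotation_line(line: str) -> Tuple[str, List[Box]]:
    """Split one line of 2007_train.txt / 2007_val.txt into the image path and its boxes.

    Assumed format: '<abs_image_path> x1,y1,x2,y2,cls x1,y1,x2,y2,cls ...'
    (the image path must not contain spaces).
    """
    parts = line.strip().split()
    if not parts:
        raise ValueError("empty annotation line")
    image_path, box_fields = parts[0], parts[1:]
    boxes: List[Box] = []
    for field in box_fields:
        values = [int(v) for v in field.split(",")]
        if len(values) != 5:
            raise ValueError(f"expected 5 comma-separated integers, got {field!r}")
        xmin, ymin, xmax, ymax, cls_id = values
        boxes.append((xmin, ymin, xmax, ymax, cls_id))
    return image_path, boxes
```

An image whose objects were all skipped (unknown class or `difficult`) produces a line containing only the path; the parser returns it with an empty box list, which makes such images easy to spot.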

3. Object Detection Model (SE-Improved YOLOv4-tiny)

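The main components of the SE-improved YOLOv4-tiny are shown in the Keras model summary below. Note that this summary appears to come from the baseline configuration: it contains no SE layers (no GlobalAveragePooling2D or Dense), and both detection heads output 255 = 3 × (80 + 5) channels, i.e. the default 80-class COCO setting. For the two classes in this dataset, each head would output 3 × (2 + 5) = 21 channels.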

```text
__________________________________________________________________________________________________
Layer (type)                    Output Shape         Param #     Connected to
==================================================================================================
input_1 (InputLayer)            [(None, 416, 416, 3) 0
zero_padding2d (ZeroPadding2D)  (None, 417, 417, 3)  0           input_1[0][0]
conv2d (Conv2D)                 (None, 208, 208, 32) 864         zero_padding2d[0][0]
batch_normalization (BatchNorma (None, 208, 208, 32) 128         conv2d[0][0]
leaky_re_lu (LeakyReLU)         (None, 208, 208, 32) 0           batch_normalization[0][0]
zero_padding2d_1 (ZeroPadding2D (None, 209, 209, 32) 0           leaky_re_lu[0][0]
conv2d_1 (Conv2D)               (None, 104, 104, 64) 18432       zero_padding2d_1[0][0]
batch_normalization_1 (BatchNor (None, 104, 104, 64) 256         conv2d_1[0][0]
leaky_re_lu_1 (LeakyReLU)       (None, 104, 104, 64) 0           batch_normalization_1[0][0]
conv2d_2 (Conv2D)               (None, 104, 104, 64) 36864       leaky_re_lu_1[0][0]
batch_normalization_2 (BatchNor (None, 104, 104, 64) 256         conv2d_2[0][0]
leaky_re_lu_2 (LeakyReLU)       (None, 104, 104, 64) 0           batch_normalization_2[0][0]
lambda (Lambda)                 (None, 104, 104, 32) 0           leaky_re_lu_2[0][0]
conv2d_3 (Conv2D)               (None, 104, 104, 32) 9216        lambda[0][0]
batch_normalization_3 (BatchNor (None, 104, 104, 32) 128         conv2d_3[0][0]
leaky_re_lu_3 (LeakyReLU)       (None, 104, 104, 32) 0           batch_normalization_3[0][0]
conv2d_4 (Conv2D)               (None, 104, 104, 32) 9216        leaky_re_lu_3[0][0]
batch_normalization_4 (BatchNor (None, 104, 104, 32) 128         conv2d_4[0][0]
leaky_re_lu_4 (LeakyReLU)       (None, 104, 104, 32) 0           batch_normalization_4[0][0]
concatenate (Concatenate)       (None, 104, 104, 64) 0           leaky_re_lu_4[0][0]
                                                                 leaky_re_lu_3[0][0]
conv2d_5 (Conv2D)               (None, 104, 104, 64) 4096        concatenate[0][0]
batch_normalization_5 (BatchNor (None, 104, 104, 64) 256         conv2d_5[0][0]
leaky_re_lu_5 (LeakyReLU)       (None, 104, 104, 64) 0           batch_normalization_5[0][0]
concatenate_1 (Concatenate)     (None, 104, 104, 128 0           leaky_re_lu_2[0][0]
                                                                 leaky_re_lu_5[0][0]
max_pooling2d (MaxPooling2D)    (None, 52, 52, 128)  0           concatenate_1[0][0]
conv2d_6 (Conv2D)               (None, 52, 52, 128)  147456      max_pooling2d[0][0]
batch_normalization_6 (BatchNor (None, 52, 52, 128)  512         conv2d_6[0][0]
leaky_re_lu_6 (LeakyReLU)       (None, 52, 52, 128)  0           batch_normalization_6[0][0]
lambda_1 (Lambda)               (None, 52, 52, 64)   0           leaky_re_lu_6[0][0]
conv2d_7 (Conv2D)               (None, 52, 52, 64)   36864       lambda_1[0][0]
batch_normalization_7 (BatchNor (None, 52, 52, 64)   256         conv2d_7[0][0]
leaky_re_lu_7 (LeakyReLU)       (None, 52, 52, 64)   0           batch_normalization_7[0][0]
conv2d_8 (Conv2D)               (None, 52, 52, 64)   36864       leaky_re_lu_7[0][0]
batch_normalization_8 (BatchNor (None, 52, 52, 64)   256         conv2d_8[0][0]
leaky_re_lu_8 (LeakyReLU)       (None, 52, 52, 64)   0           batch_normalization_8[0][0]
concatenate_2 (Concatenate)     (None, 52, 52, 128)  0           leaky_re_lu_8[0][0]
                                                                 leaky_re_lu_7[0][0]
conv2d_9 (Conv2D)               (None, 52, 52, 128)  16384       concatenate_2[0][0]
batch_normalization_9 (BatchNor (None, 52, 52, 128)  512         conv2d_9[0][0]
leaky_re_lu_9 (LeakyReLU)       (None, 52, 52, 128)  0           batch_normalization_9[0][0]
concatenate_3 (Concatenate)     (None, 52, 52, 256)  0           leaky_re_lu_6[0][0]
                                                                 leaky_re_lu_9[0][0]
max_pooling2d_1 (MaxPooling2D)  (None, 26, 26, 256)  0           concatenate_3[0][0]
conv2d_10 (Conv2D)              (None, 26, 26, 256)  589824      max_pooling2d_1[0][0]
batch_normalization_10 (BatchNo (None, 26, 26, 256)  1024        conv2d_10[0][0]
leaky_re_lu_10 (LeakyReLU)      (None, 26, 26, 256)  0           batch_normalization_10[0][0]
lambda_2 (Lambda)               (None, 26, 26, 128)  0           leaky_re_lu_10[0][0]
conv2d_11 (Conv2D)              (None, 26, 26, 128)  147456      lambda_2[0][0]
batch_normalization_11 (BatchNo (None, 26, 26, 128)  512         conv2d_11[0][0]
leaky_re_lu_11 (LeakyReLU)      (None, 26, 26, 128)  0           batch_normalization_11[0][0]
conv2d_12 (Conv2D)              (None, 26, 26, 128)  147456      leaky_re_lu_11[0][0]
batch_normalization_12 (BatchNo (None, 26, 26, 128)  512         conv2d_12[0][0]
leaky_re_lu_12 (LeakyReLU)      (None, 26, 26, 128)  0           batch_normalization_12[0][0]
concatenate_4 (Concatenate)     (None, 26, 26, 256)  0           leaky_re_lu_12[0][0]
                                                                 leaky_re_lu_11[0][0]
conv2d_13 (Conv2D)              (None, 26, 26, 256)  65536       concatenate_4[0][0]
batch_normalization_13 (BatchNo (None, 26, 26, 256)  1024        conv2d_13[0][0]
leaky_re_lu_13 (LeakyReLU)      (None, 26, 26, 256)  0           batch_normalization_13[0][0]
concatenate_5 (Concatenate)     (None, 26, 26, 512)  0           leaky_re_lu_10[0][0]
                                                                 leaky_re_lu_13[0][0]
max_pooling2d_2 (MaxPooling2D)  (None, 13, 13, 512)  0           concatenate_5[0][0]
conv2d_14 (Conv2D)              (None, 13, 13, 512)  2359296     max_pooling2d_2[0][0]
batch_normalization_14 (BatchNo (None, 13, 13, 512)  2048        conv2d_14[0][0]
leaky_re_lu_14 (LeakyReLU)      (None, 13, 13, 512)  0           batch_normalization_14[0][0]
conv2d_15 (Conv2D)              (None, 13, 13, 256)  131072      leaky_re_lu_14[0][0]
batch_normalization_15 (BatchNo (None, 13, 13, 256)  1024        conv2d_15[0][0]
leaky_re_lu_15 (LeakyReLU)      (None, 13, 13, 256)  0           batch_normalization_15[0][0]
conv2d_18 (Conv2D)              (None, 13, 13, 128)  32768       leaky_re_lu_15[0][0]
batch_normalization_17 (BatchNo (None, 13, 13, 128)  512         conv2d_18[0][0]
leaky_re_lu_17 (LeakyReLU)      (None, 13, 13, 128)  0           batch_normalization_17[0][0]
up_sampling2d (UpSampling2D)    (None, 26, 26, 128)  0           leaky_re_lu_17[0][0]
concatenate_6 (Concatenate)     (None, 26, 26, 384)  0           up_sampling2d[0][0]
                                                                 leaky_re_lu_13[0][0]
conv2d_16 (Conv2D)              (None, 13, 13, 512)  1179648     leaky_re_lu_15[0][0]
conv2d_19 (Conv2D)              (None, 26, 26, 256)  884736      concatenate_6[0][0]
batch_normalization_16 (BatchNo (None, 13, 13, 512)  2048        conv2d_16[0][0]
batch_normalization_18 (BatchNo (None, 26, 26, 256)  1024        conv2d_19[0][0]
leaky_re_lu_16 (LeakyReLU)      (None, 13, 13, 512)  0           batch_normalization_16[0][0]
leaky_re_lu_18 (LeakyReLU)      (None, 26, 26, 256)  0           batch_normalization_18[0][0]
conv2d_17 (Conv2D)              (None, 13, 13, 255)  130815      leaky_re_lu_16[0][0]
conv2d_20 (Conv2D)              (None, 26, 26, 255)  65535       leaky_re_lu_18[0][0]
==================================================================================================
```

The TF2 implementation of the SE attention module is as follows:

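```python
from tensorflow.keras import backend as K
from tensorflow.keras.layers import (Activation, Dense, GlobalAveragePooling2D,
                                     Reshape, multiply)


def se_block(input_feature, ratio=16, name=""):
    """Squeeze-and-Excitation block: learns one weight per channel and rescales the input.

    `name` must be different for every call, otherwise the two Dense layers get
    duplicate names and Keras refuses to build the model.
    """
    channel = K.int_shape(input_feature)[-1]

    # Squeeze: global average pooling gives one descriptor per channel.
    se_feature = GlobalAveragePooling2D()(input_feature)
    se_feature = Reshape((1, 1, channel))(se_feature)

    # Excitation: bottleneck MLP (C -> C/ratio -> C), ReLU then sigmoid.
    se_feature = Dense(channel // ratio,
                       activation='relu',
                       kernel_initializer='he_normal',
                       use_bias=False,
                       name="se_block_one_" + str(name))(se_feature)
    se_feature = Dense(channel,
                       kernel_initializer='he_normal',
                       use_bias=False,
                       name="se_block_two_" + str(name))(se_feature)
    se_feature = Activation('sigmoid')(se_feature)

    # Scale: multiply each input channel by its weight (broadcast over H and W).
    return multiply([input_feature, se_feature])
```

The TF2 implementation of CSPDarknet53-tiny is as follows: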

```python
from functools import wraps

import tensorflow as tf
from tensorflow.keras.initializers import RandomNormal
from tensorflow.keras.layers import (BatchNormalization, Concatenate, Conv2D,
                                     Lambda, LeakyReLU, MaxPooling2D,
                                     ZeroPadding2D)
from tensorflow.keras.regularizers import l2

from utils.utils import compose  # project helper: chains the given layers left to right


def route_group(input_layer, groups, group_id):
    """Split the channels into `groups` equal parts and return part `group_id`."""
    convs = tf.split(input_layer, num_or_size_splits=groups, axis=-1)
    return convs[group_id]


@wraps(Conv2D)
def DarknetConv2D(*args, **kwargs):
    """Conv2D with Darknet defaults: N(0, 0.02) init, L2 weight decay, and
    'valid' padding for stride-2 convs (the input is zero-padded beforehand), else 'same'."""
    weight_decay = kwargs.pop('weight_decay', 5e-4)
    darknet_conv_kwargs = {'kernel_initializer': RandomNormal(stddev=0.02),
                           'kernel_regularizer': l2(weight_decay)}
    darknet_conv_kwargs['padding'] = 'valid' if kwargs.get('strides') == (2, 2) else 'same'
    darknet_conv_kwargs.update(kwargs)
    return Conv2D(*args, **darknet_conv_kwargs)


def DarknetConv2D_BN_Leaky(*args, **kwargs):
    """DarknetConv2D (no bias) -> BatchNormalization -> LeakyReLU(0.1)."""
    no_bias_kwargs = {'use_bias': False}
    no_bias_kwargs.update(kwargs)
    return compose(
        DarknetConv2D(*args, **no_bias_kwargs),
        BatchNormalization(),
        LeakyReLU(alpha=0.1))


def resblock_body(x, num_filters, weight_decay=5e-4):
    """CSP block of YOLOv4-tiny; returns (downsampled output, pre-pooling feature)."""
    x = DarknetConv2D_BN_Leaky(num_filters, (3, 3), weight_decay=weight_decay)(x)
    route = x
    # Keep only the second half of the channels for the inner path.
    x = Lambda(route_group, arguments={'groups': 2, 'group_id': 1})(x)
    x = DarknetConv2D_BN_Leaky(num_filters // 2, (3, 3), weight_decay=weight_decay)(x)
    route_1 = x
    x = DarknetConv2D_BN_Leaky(num_filters // 2, (3, 3), weight_decay=weight_decay)(x)
    x = Concatenate()([x, route_1])
    x = DarknetConv2D_BN_Leaky(num_filters, (1, 1), weight_decay=weight_decay)(x)
    feat = x
    # Merge with the full-width shortcut, then halve the spatial size.
    x = Concatenate()([route, x])
    x = MaxPooling2D(pool_size=(2, 2))(x)
    return x, feat


def darknet_body(x, weight_decay=5e-4):
    """CSPDarknet53-tiny backbone; for a 416x416 input it returns 26x26x256 and 13x13x512 maps."""
    x = ZeroPadding2D(((1, 0), (1, 0)))(x)
    x = DarknetConv2D_BN_Leaky(32, (3, 3), strides=(2, 2), weight_decay=weight_decay)(x)
    x = ZeroPadding2D(((1, 0), (1, 0)))(x)
    x = DarknetConv2D_BN_Leaky(64, (3, 3), strides=(2, 2), weight_decay=weight_decay)(x)
    x, _ = resblock_body(x, num_filters=64, weight_decay=weight_decay)
    x, _ = resblock_body(x, num_filters=128, weight_decay=weight_decay)
    x, feat1 = resblock_body(x, num_filters=256, weight_decay=weight_decay)
    x = DarknetConv2D_BN_Leaky(512, (3, 3), weight_decay=weight_decay)(x)
    feat2 = x
    return feat1, feat2
```
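To make the SE computation concrete, the NumPy function below reproduces the forward pass of `se_block`: global average pooling (squeeze), a bias-free Dense + ReLU followed by a bias-free Dense + sigmoid (excitation), and a channel-wise multiply (scale). It is a hypothetical reference for checking shapes and behaviour, not code from the project; it assumes channels-last input and takes the two Dense kernels as plain arrays. Applying `Dense` to the `(1, 1, C)` reshaped tensor only acts on the last axis, so it is equivalent to the matrix products used here.

```python
import numpy as np


def se_reference(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """NumPy forward pass of a bias-free squeeze-and-excitation block.

    x:  feature map, shape (batch, H, W, C), channels-last as in Keras
    w1: first Dense kernel, shape (C, C // ratio), followed by ReLU
    w2: second Dense kernel, shape (C // ratio, C), followed by sigmoid
    """
    squeeze = x.mean(axis=(1, 2))                 # (batch, C): GlobalAveragePooling2D
    hidden = np.maximum(squeeze @ w1, 0.0)        # (batch, C // ratio): Dense + ReLU
    scale = 1.0 / (1.0 + np.exp(-(hidden @ w2)))  # (batch, C): Dense + sigmoid, values in (0, 1)
    return x * scale[:, None, None, :]            # broadcast over H and W, like multiply()
```

With the default `ratio=16`, a 512-channel input gets a 32-unit bottleneck, so one SE block adds 512 × 32 + 32 × 512 = 32,768 weights (both Dense layers are bias-free).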
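The spatial sizes in the model summary follow directly from `darknet_body`: each `ZeroPadding2D(((1, 0), (1, 0)))` plus stride-2 `'valid'` 3×3 conv halves the size, the `'same'` convs inside `resblock_body` keep it, and each `MaxPooling2D` halves it again. The helpers below (hypothetical names, pure Python) sketch that arithmetic, assuming square inputs and Keras' standard output-size rules for `'valid'` and `'same'` padding.

```python
from typing import Tuple


def conv_out(size: int, kernel: int, stride: int, padding: str) -> int:
    """Output size along one spatial axis of a Keras Conv2D or MaxPooling2D layer."""
    if padding == "same":
        return -(-size // stride)  # ceil(size / stride)
    if padding == "valid":
        return (size - kernel) // stride + 1
    raise ValueError(f"unknown padding: {padding!r}")


def darknet_tiny_sizes(input_size: int = 416) -> Tuple[int, int]:
    """Trace darknet_body's spatial sizes; returns (feat1_size, feat2_size)."""
    # Stem: ZeroPadding2D adds one row/column, then a 3x3 stride-2 'valid' conv (twice).
    s = conv_out(input_size + 1, 3, 2, "valid")  # 32 filters
    s = conv_out(s + 1, 3, 2, "valid")           # 64 filters
    feat = s
    for _ in range(3):  # resblock_body with 64, 128 and 256 filters
        feat = s                                 # 3x3 / 1x1 'same' convs keep the size
        s = conv_out(s, 2, 2, "valid")           # MaxPooling2D(2): stride 2, 'valid' by default
    # feat1 is the pre-pooling output of the last resblock;
    # feat2 comes after a final 3x3 'same' conv, which keeps the size.
    return feat, conv_out(s, 3, 1, "same")
```

For the default 416×416 input this gives 26 and 13, matching `leaky_re_lu_13` (26×26×256, `feat1`) and `leaky_re_lu_14` (13×13×512, `feat2`) in the summary.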

4. Model Training and Testing

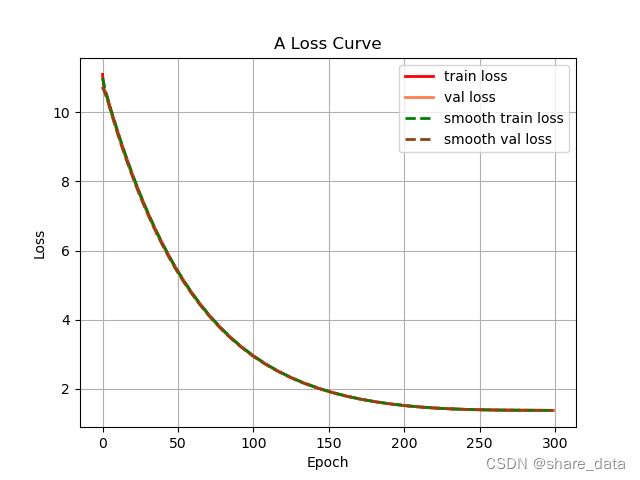

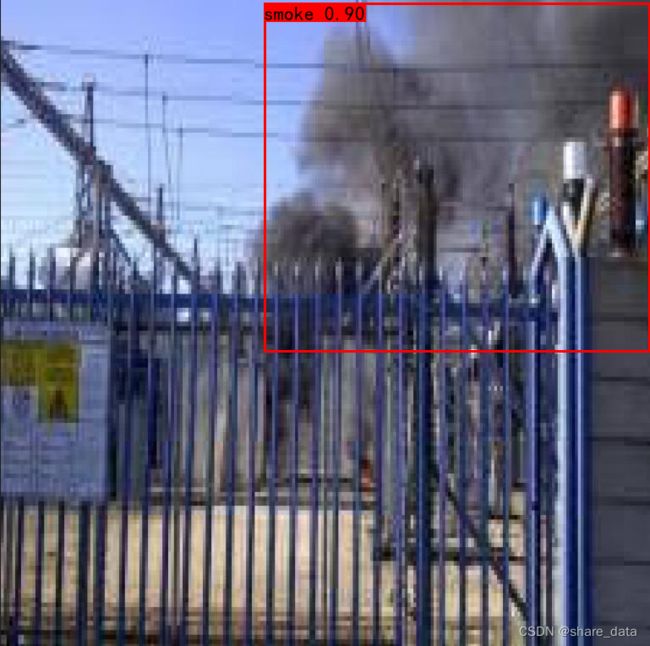

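The model was trained on an RTX 3060 GPU under Windows 10. Freeze training (training first with the backbone frozen, then unfreezing it) was used to speed up convergence, with a batch size of 32, for 300 epochs in total. The trained model reaches an mAP of 78.79 at 168.18 FPS.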
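The post does not state which mAP protocol produced the 78.79 figure. For reference, one standard definition is PASCAL VOC-style all-point interpolated AP computed per class and then averaged over classes. The sketch below assumes each detection has already been marked as a true or false positive by matching it against ground truth (for example at IoU ≥ 0.5, which is an assumption here); the matching step and the function names are illustrative, not the author's evaluation code.

```python
from typing import Sequence

import numpy as np


def average_precision(scores: Sequence[float], is_tp: Sequence[bool], num_gt: int) -> float:
    """All-point interpolated AP (PASCAL VOC 2010+ style) for a single class.

    scores: confidence of every detection of this class over the test set
    is_tp:  whether each detection matched a not-yet-matched ground-truth box
    num_gt: number of ground-truth boxes of this class
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(is_tp, dtype=float)[order]  # highest confidence first
    fp = 1.0 - tp
    tp_cum, fp_cum = np.cumsum(tp), np.cumsum(fp)
    recall = tp_cum / num_gt
    precision = tp_cum / np.maximum(tp_cum + fp_cum, np.finfo(float).eps)

    # Pad the curve, then make precision non-increasing from right to left.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])

    # Sum the rectangle areas at every point where recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_ap(per_class_ap: Sequence[float]) -> float:
    """mAP is the unweighted mean of the per-class APs."""
    return float(np.mean(per_class_ap))
```

With the two classes in this dataset, mAP is simply the mean of the fire AP and the smoke AP.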

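Some resources in this post come from the internet; if anything infringes your rights, please contact me and it will be removed.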