[Advanced] A Full Walkthrough of "Master PyTorch in 20 Days" | Day10 | High-Level API Demo

About the author: Hi everyone, I'm 车神哥, the "car god" of 府学路18号 (No. 18 Fuxue Road).




The source code, book, and datasets used here are linked at the end of this post. Help yourself~

1. Preface

PyTorch has no official high-level API, so users generally have to write their own training, validation, and prediction loops.

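Following tf.keras.Model, the author of the original book wraps PyTorch's nn.Module in a torchkeras.Model class that provides fit, evaluate, predict, and summary methods, which amounts to a user-defined high-level API. The book then uses it to build a linear regression model.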
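To make the idea of "wrapping the loops behind a Keras-style interface" concrete, here is a minimal sketch in plain Python, with no PyTorch involved. The class TinyLinearModel and everything inside it are hypothetical names invented for illustration; this is not how torchkeras is implemented, only a picture of what compile/fit/evaluate/predict hide from the user.

```python
# A toy Keras-style wrapper in plain Python (no PyTorch).
# TinyLinearModel and its internals are hypothetical, for illustration only.

class TinyLinearModel:
    """Fits y = w*x + b by gradient descent on the mean squared error."""

    def __init__(self):
        self.w, self.b = 0.0, 0.0
        self.lr = None

    def compile(self, lr):
        # Store the training configuration, like a Keras-style compile() step.
        self.lr = lr

    def predict(self, xs):
        return [self.w * x + self.b for x in xs]

    def evaluate(self, xs, ys):
        # Mean squared error over the given data.
        preds = self.predict(xs)
        return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(xs)

    def fit(self, epochs, xs, ys):
        # The training loop that a high-level API hides from the user.
        history = []
        n = len(xs)
        for _ in range(epochs):
            preds = self.predict(xs)
            grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
            grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
            self.w -= self.lr * grad_w
            self.b -= self.lr * grad_b
            history.append(self.evaluate(xs, ys))
        return history


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys = [2.0 * x + 1.0 for x in xs]   # noiseless data from y = 2x + 1

model = TinyLinearModel()
model.compile(lr=0.1)
history = model.fit(200, xs, ys)
print(round(model.w, 3), round(model.b, 3), history[-1] < history[0])
```

The calling code never touches gradients or loops, which is the convenience the torchkeras classes in this post aim for.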

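The author also builds on pytorch_lightning to provide a second Keras-like interface, the torchkeras.LightModel class, and uses it to build a DNN binary classification model.

As usual, some setup first: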

import os

import datetime

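# Print a separator bar followed by the current time

def printbar():

    nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')

    print("\n"+"=========="*8 + "%s"%nowtime)

# On macOS, running PyTorch and matplotlib together in Jupyter requires changing this environment variable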

os.environ["KMP_DUPLICATE_LIB_OK"]="TRUE"

2. Linear Regression Model

In this example we implement a linear regression model by inheriting from the torchkeras.Model interface.

- Prepare the data

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

import torch

from torch import nn

import torch.nn.functional as F

from torch.utils.data import Dataset,DataLoader,TensorDataset

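# Number of samples

n = 400

# Generate a synthetic dataset

X = 10*torch.rand([n,2])-5.0 # torch.rand samples from a uniform distribution

w0 = torch.tensor([[2.0],[-3.0]])

b0 = torch.tensor([[10.0]])

Y = X@w0 + b0 + torch.normal( 0.0,2.0,size = [n,1]) # @ is matrix multiplication; add Gaussian noise

# Visualize the data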

%matplotlib inline

%config InlineBackend.figure_format = 'svg'

plt.figure(figsize = (12,5))

ax1 = plt.subplot(121)

ax1.scatter(X[:,0],Y[:,0], c = "b",label = "samples")

ax1.legend()

plt.xlabel("x1")

plt.ylabel("y",rotation = 0)

ax2 = plt.subplot(122)

ax2.scatter(X[:,1],Y[:,0], c = "g",label = "samples")

ax2.legend()

plt.xlabel("x2")

plt.ylabel("y",rotation = 0)

plt.show()

# Build the input data pipeline

ds = TensorDataset(X,Y)

ds_train,ds_valid = torch.utils.data.random_split(ds,[int(400*0.7),400-int(400*0.7)])

dl_train = DataLoader(ds_train,batch_size = 10,shuffle=True,num_workers=2)

dl_valid = DataLoader(ds_valid,batch_size = 10,num_workers=2)
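The split and batch sizes above determine how many steps each epoch has, which helps when reading the training log later in this section. Below is a small plain-Python sketch of that arithmetic (no PyTorch); the variable names are mine and only illustrative.

```python
import math

n = 400            # total samples, as in the post
train_frac = 0.7   # fraction passed to random_split for training
batch_size = 10

n_train = int(n * train_frac)     # samples in ds_train
n_valid = n - n_train             # samples in ds_valid

# A DataLoader that keeps the last partial batch yields ceil(N / batch_size) batches.
steps_train = math.ceil(n_train / batch_size)
steps_valid = math.ceil(n_valid / batch_size)

print(n_train, n_valid, steps_train, steps_valid)
```

With 28 training batches per epoch and log_step_freq = 20 in the fit call, only step 20 would be logged inside each epoch, which seems consistent with the single {'step': 20, ...} line per epoch in the training log.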

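- Define the model

This step differs from the low-level and mid-level API examples: here the model class inherits from torchkeras.Model.

# Inherit from the user-defined Model class

from torchkeras import Model

class LinearRegression(Model):

    def __init__(self):

        super(LinearRegression, self).__init__()

        self.fc = nn.Linear(2,1)

    def forward(self,x):

        return self.fc(x)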

model = LinearRegression()

Inspect the model:

model.summary(input_shape = (2,))

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Linear-1 [-1, 1] 3

================================================================

Total params: 3

Trainable params: 3

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.000008

Forward/backward pass size (MB): 0.000008

Params size (MB): 0.000011

Estimated Total Size (MB): 0.000027

----------------------------------------------------------------
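The numbers in this summary can be checked by hand. The sketch below is plain Python and assumes, as my own guess rather than something the post states, that parameters are 4-byte float32 values and that 1 MB means 1024² bytes; under those assumptions it reproduces the "Total params" and "Params size (MB)" lines.

```python
def linear_param_count(in_features, out_features, bias=True):
    """Number of trainable parameters in a fully connected (linear) layer."""
    weights = in_features * out_features
    biases = out_features if bias else 0
    return weights + biases


BYTES_PER_FLOAT32 = 4   # assumption: parameters are stored as float32
MB = 1024 ** 2          # assumption: the summary uses 1 MB = 1024**2 bytes

params = linear_param_count(2, 1)              # nn.Linear(2, 1) from the post
params_size_mb = params * BYTES_PER_FLOAT32 / MB

print(params, f"{params_size_mb:.6f}")
```

Two weights plus one bias gives the 3 parameters reported for Linear-1.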


- Train the model

### Train with the fit method

def mean_absolute_error(y_pred,y_true):

    return torch.mean(torch.abs(y_pred-y_true))

def mean_absolute_percent_error(y_pred,y_true):

    absolute_percent_error = (torch.abs(y_pred-y_true)+1e-7)/(torch.abs(y_true)+1e-7)

    return torch.mean(absolute_percent_error)

model.compile(loss_func = nn.MSELoss(),

              optimizer= torch.optim.Adam(model.parameters(),lr = 0.01),

              metrics_dict={"mae":mean_absolute_error,"mape":mean_absolute_percent_error})

dfhistory = model.fit(200,dl_train = dl_train, dl_val = dl_valid,log_step_freq = 20)
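To see what the two metrics passed to compile actually measure, here is a plain-Python version of the same formulas on a few made-up numbers (the sample values are invented for illustration and do not come from the dataset).

```python
def mean_absolute_error(y_pred, y_true):
    """Average absolute difference between predictions and targets."""
    return sum(abs(p - t) for p, t in zip(y_pred, y_true)) / len(y_true)


def mean_absolute_percent_error(y_pred, y_true, eps=1e-7):
    # eps in numerator and denominator mirrors the post's definition and
    # avoids division by zero when a target is exactly 0.
    ratios = [(abs(p - t) + eps) / (abs(t) + eps) for p, t in zip(y_pred, y_true)]
    return sum(ratios) / len(ratios)


y_true = [10.0, 20.0, -5.0]   # made-up targets
y_pred = [12.0, 18.0, -4.0]   # made-up predictions

mae = mean_absolute_error(y_pred, y_true)
mape = mean_absolute_percent_error(y_pred, y_true)
print(round(mae, 4), round(mape, 4))
```

Because MAPE divides by the absolute target, samples whose true value is close to zero can inflate it considerably, which may be part of why the mape values in the log look large next to the mae values.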

Duang~ an error pops up:

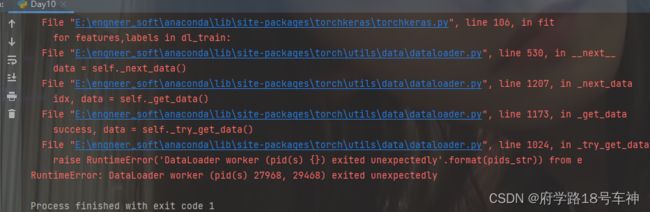

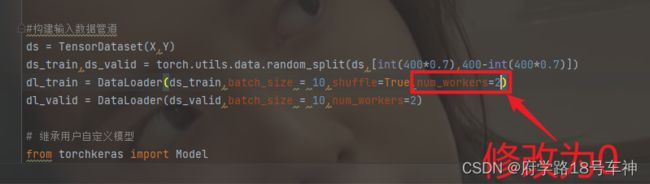

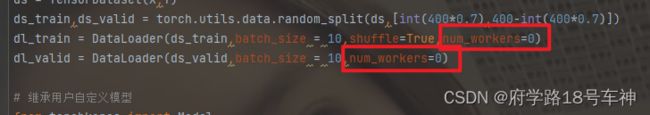

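The error appears to come from the DataLoader worker process(es) (the pid(s) in the message) exiting abnormally, so I suspect the num_workers=2 setting is the problem.

Both DataLoaders (dl_train and dl_valid) need to be changed, for example to num_workers=0 so that data is loaded in the main process. Once that is done, training works~

Training log: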

Start Training ...

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.937, 'mae': 1.565, 'mape': 0.427}

+-------+-------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+-------+----------+---------+----------+

| 192 | 3.592 | 1.513 | 0.427 | 3.006 | 1.425 | 0.267 |

+-------+-------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.469, 'mae': 1.45, 'mape': 0.369}

+-------+------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+------+-------+-------+----------+---------+----------+

| 193 | 3.58 | 1.511 | 0.422 | 3.001 | 1.423 | 0.267 |

+-------+------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.546, 'mae': 1.505, 'mape': 0.371}

+-------+-------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+-------+----------+---------+----------+

| 194 | 3.572 | 1.511 | 0.422 | 3.013 | 1.425 | 0.267 |

+-------+-------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.807, 'mae': 1.555, 'mape': 0.426}

+-------+-------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+-------+----------+---------+----------+

| 195 | 3.573 | 1.509 | 0.421 | 3.022 | 1.427 | 0.267 |

+-------+-------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.636, 'mae': 1.528, 'mape': 0.441}

+-------+-------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+-------+----------+---------+----------+

| 196 | 3.591 | 1.516 | 0.424 | 2.996 | 1.422 | 0.267 |

+-------+-------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.716, 'mae': 1.541, 'mape': 0.418}

+-------+-------+-------+------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+------+----------+---------+----------+

| 197 | 3.592 | 1.513 | 0.42 | 3.033 | 1.429 | 0.267 |

+-------+-------+-------+------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.543, 'mae': 1.521, 'mape': 0.459}

+-------+-------+-------+------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+------+----------+---------+----------+

| 198 | 3.573 | 1.511 | 0.42 | 3.005 | 1.422 | 0.266 |

+-------+-------+-------+------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.88, 'mae': 1.57, 'mape': 0.461}

+-------+-------+------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+------+-------+----------+---------+----------+

| 199 | 3.586 | 1.51 | 0.425 | 2.964 | 1.415 | 0.266 |

+-------+-------+------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

{'step': 20, 'loss': 3.686, 'mae': 1.533, 'mape': 0.456}

+-------+-------+-------+-------+----------+---------+----------+

| epoch | loss | mae | mape | val_loss | val_mae | val_mape |

+-------+-------+-------+-------+----------+---------+----------+

| 200 | 3.585 | 1.513 | 0.422 | 3.027 | 1.427 | 0.266 |

+-------+-------+-------+-------+----------+---------+----------+

================================================================================2022-05-12 13:16:46

Finished Training...


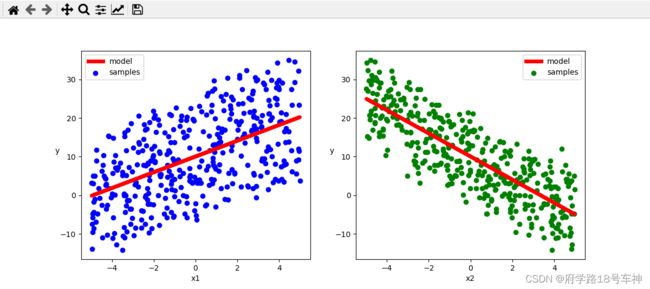

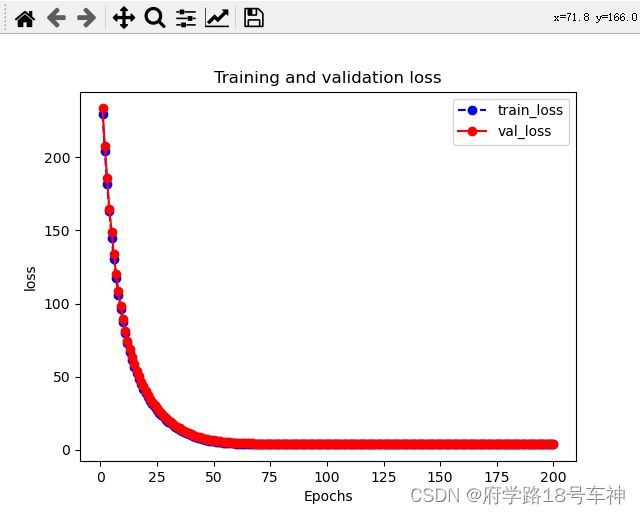

Let's visualize the result:

# Visualize the result

%matplotlib inline

%config InlineBackend.figure_format = 'svg'

w,b = model.state_dict()["fc.weight"],model.state_dict()["fc.bias"]

plt.figure(figsize = (12,5))

ax1 = plt.subplot(121)

ax1.scatter(X[:,0],Y[:,0], c = "b",label = "samples")

ax1.plot(X[:,0],w[0,0]*X[:,0]+b[0],"-r",linewidth = 5.0,label = "model")

ax1.legend()

plt.xlabel("x1")

plt.ylabel("y",rotation = 0)

ax2 = plt.subplot(122)

ax2.scatter(X[:,1],Y[:,0], c = "g",label = "samples")

ax2.plot(X[:,1],w[0,1]*X[:,1]+b[0],"-r",linewidth = 5.0,label = "model")

ax2.legend()

plt.xlabel("x2")

plt.ylabel("y",rotation = 0)

plt.show()

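- Evaluate the model

The last five rows of the training history (dfhistory):

|   | loss | mae | mape | val_loss | val_mae | val_mape |
|---|---|---|---|---|---|---|
| 195 | 4.048818 | 1.607230 | 0.404676 | 3.287203 | 1.434746 | 0.275100 |
| 196 | 4.022431 | 1.604069 | 0.403087 | 3.268545 | 1.438853 | 0.284785 |
| 197 | 4.033030 | 1.606301 | 0.398481 | 3.279242 | 1.433997 | 0.280365 |
| 198 | 4.025631 | 1.604305 | 0.403040 | 3.277311 | 1.436844 | 0.285086 |
| 199 | 4.024773 | 1.602430 | 0.405442 | 3.277929 | 1.431414 | 0.280449 |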

import matplotlib.pyplot as plt

def plot_metric(dfhistory, metric):

    train_metrics = dfhistory[metric]

    val_metrics = dfhistory['val_'+metric]

    epochs = range(1, len(train_metrics) + 1)

    plt.plot(epochs, train_metrics, 'bo--')

    plt.plot(epochs, val_metrics, 'ro-')

    plt.title('Training and validation '+ metric)

    plt.xlabel("Epochs")

    plt.ylabel(metric)

    plt.legend(["train_"+metric, 'val_'+metric])

    plt.show()

plot_metric(dfhistory,"loss")

plot_metric(dfhistory,"mape")

# Evaluate

model.evaluate(dl_valid)

{'val_loss': 3.423220564921697, 'val_mae': 1.5170900424321492, 'val_mape': 0.46843311563134193}

- Use the model

# Predict

dl = DataLoader(TensorDataset(X))

model.predict(dl)[0:10]

tensor([[ 0.8931],

[21.5440],

[10.8904],

[25.0322],

[-6.3273],

[25.2734],

[16.8184],

[-4.1007],

[26.4564],

[16.8246]])

# Predict

print(model.predict(dl_valid)[0:10])

tensor([[18.4650],

[20.6201],

[23.7864],

[ 1.9807],

[ 4.6205],

[13.8735],

[-6.2053],

[ 5.6800],

[10.7453],

[23.7684]])

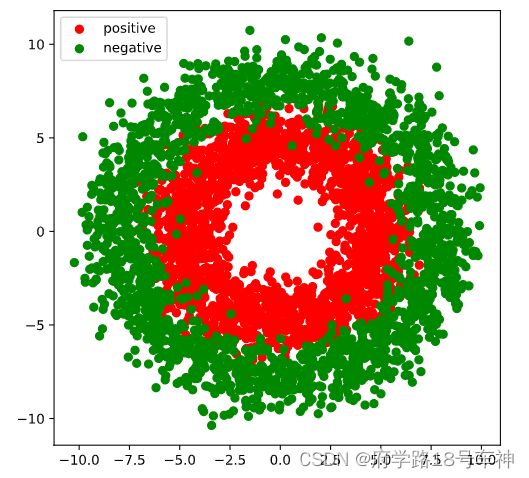

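3. DNN Binary Classification Model

In this example we implement a DNN binary classification model by inheriting from the torchkeras.LightModel interface.

- Prepare the data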

import numpy as np

import pandas as pd

from matplotlib import pyplot as plt

import torch

from torch import nn

import torch.nn.functional as F

from torch.utils.data import Dataset,DataLoader,TensorDataset

import torchkeras

import pytorch_lightning as pl

%matplotlib inline

%config InlineBackend.figure_format = 'svg'

# Number of positive and negative samples

n_positive,n_negative = 2000,2000

# Generate positive samples, distributed on a small ring

r_p = 5.0 + torch.normal(0.0,1.0,size = [n_positive,1])

theta_p = 2*np.pi*torch.rand([n_positive,1])

Xp = torch.cat([r_p*torch.cos(theta_p),r_p*torch.sin(theta_p)],axis = 1)

Yp = torch.ones_like(r_p)

# Generate negative samples, distributed on a large ring

r_n = 8.0 + torch.normal(0.0,1.0,size = [n_negative,1])

theta_n = 2*np.pi*torch.rand([n_negative,1])

Xn = torch.cat([r_n*torch.cos(theta_n),r_n*torch.sin(theta_n)],axis = 1)

Yn = torch.zeros_like(r_n)

# Combine the samples

X = torch.cat([Xp,Xn],axis = 0)

Y = torch.cat([Yp,Yn],axis = 0)

# Visualize

plt.figure(figsize = (6,6))

plt.scatter(Xp[:,0],Xp[:,1],c = "r")

plt.scatter(Xn[:,0],Xn[:,1],c = "g")

plt.legend(["positive","negative"]);

ds = TensorDataset(X,Y)

ds_train,ds_valid = torch.utils.data.random_split(ds,[int(len(ds)*0.7),len(ds)-int(len(ds)*0.7)])

dl_train = DataLoader(ds_train,batch_size = 100,shuffle=True,num_workers=2)

dl_valid = DataLoader(ds_valid,batch_size = 100,num_workers=2)
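The two rings overlap, which limits how accurate any classifier can be on this data. The plain-Python sketch below (no PyTorch) samples the same kind of rings and estimates that limit. The helper sample_ring, the seed, and the reasoning that a radius threshold halfway between 5 and 8 is the best possible rule are my own illustration, not something from the original book.

```python
import math
import random

random.seed(0)  # arbitrary seed, only to make the sketch repeatable


def sample_ring(n, radius, noise_std=1.0):
    """Sample n 2-D points whose distance from the origin is radius + N(0, noise_std)."""
    points = []
    for _ in range(n):
        r = radius + random.gauss(0.0, noise_std)
        theta = 2 * math.pi * random.random()
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


positive = sample_ring(2000, 5.0)   # label 1, inner ring
negative = sample_ring(2000, 8.0)   # label 0, outer ring

mean_r_pos = sum(math.hypot(x, y) for x, y in positive) / len(positive)
mean_r_neg = sum(math.hypot(x, y) for x, y in negative) / len(negative)

# Radii follow N(5, 1) and N(8, 1); with equal class sizes, thresholding the
# radius at 6.5 classifies each class correctly with probability Phi(1.5).
best_expected_acc = 0.5 * (1 + math.erf(1.5 / math.sqrt(2)))

print(round(mean_r_pos, 2), round(mean_r_neg, 2), round(best_expected_acc, 4))
```

Under these assumptions the expected accuracy tops out at roughly 93%, so accuracies in the low to mid 90s, as in the logs of this post, are about as good as one can hope for; a finite validation set can land slightly above or below that figure.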

- Define the model

class Net(nn.Module):

    def __init__(self):

        super().__init__()

        self.fc1 = nn.Linear(2,4)

        self.fc2 = nn.Linear(4,8)

        self.fc3 = nn.Linear(8,1)

    def forward(self,x):

        x = F.relu(self.fc1(x))

        x = F.relu(self.fc2(x))

        y = nn.Sigmoid()(self.fc3(x))

        return y

class Model(torchkeras.LightModel):

    #loss,and optional metrics

    def shared_step(self,batch)->dict:

        x, y = batch

        prediction = self(x)

        loss = nn.BCELoss()(prediction,y)

        preds = torch.where(prediction>0.5,torch.ones_like(prediction),torch.zeros_like(prediction))

        # note: pl.metrics exists only in older pytorch_lightning releases; newer ones moved metrics to the torchmetrics package

        acc = pl.metrics.functional.accuracy(preds, y)

        # attention: there must be a key of "loss" in the returned dict

        dic = {"loss":loss,"acc":acc}

        return dic

    #optimizer,and optional lr_scheduler

    def configure_optimizers(self):

        optimizer = torch.optim.Adam(self.parameters(), lr=1e-2)

        lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.0001)

        return {"optimizer":optimizer,"lr_scheduler":lr_scheduler}

pl.seed_everything(1234)

net = Net()

model = Model(net)

torchkeras.summary(model,input_shape =(2,))

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Linear-1 [-1, 4] 12

Linear-2 [-1, 8] 40

Linear-3 [-1, 1] 9

================================================================

Total params: 61

Trainable params: 61

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.000008

Forward/backward pass size (MB): 0.000099

Params size (MB): 0.000233

Estimated Total Size (MB): 0.000340

----------------------------------------------------------------
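Before training, it may help to see what the StepLR schedule in configure_optimizers does to the learning rate. The sketch below is plain Python that applies the step-decay rule by hand; it assumes the scheduler is stepped once per epoch, and the function step_lr is a hypothetical helper of mine, not a PyTorch call.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    """Learning rate after `epoch` scheduler steps under a step-decay rule."""
    return base_lr * gamma ** (epoch // step_size)


base_lr, step_size, gamma = 1e-2, 10, 1e-4   # values from configure_optimizers

for epoch in (0, 9, 10, 19, 20):
    # Print the epoch index and the learning rate in effect at that epoch.
    print(epoch, step_lr(base_lr, epoch, step_size, gamma))
```

With gamma = 0.0001 the learning rate falls from 1e-2 to about 1e-6 after the tenth epoch, so later epochs change the weights very little. If training seems to stall at that point, a larger gamma (for example 0.1) might be worth trying.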

- Train the model

ckpt_cb = pl.callbacks.ModelCheckpoint(monitor='val_loss')

# set gpus=0 will use cpu,

# set gpus=1 will use 1 gpu

# set gpus=2 will use 2gpus

# set gpus = -1 will use all gpus

# you can also set gpus = [0,1] to use the given gpus

# you can even set tpu_cores=2 to use two tpus

trainer = pl.Trainer(max_epochs=100,gpus = 0, callbacks=[ckpt_cb])

trainer.fit(model,dl_train,dl_valid)

================================================================================2021-01-16 23:41:38

epoch = 0

{'val_loss': 0.6706896424293518, 'val_acc': 0.5558333396911621}

{'acc': 0.5157142281532288, 'loss': 0.6820458769798279}

================================================================================2021-01-16 23:41:39

epoch = 1

{'val_loss': 0.653035581111908, 'val_acc': 0.5708333849906921}

{'acc': 0.5457143783569336, 'loss': 0.6677185297012329}

================================================================================2021-01-16 23:41:40

epoch = 2

{'val_loss': 0.6122683882713318, 'val_acc': 0.6533333659172058}

{'acc': 0.6132143139839172, 'loss': 0.6375874876976013}

================================================================================2021-01-16 23:41:40

epoch = 3

{'val_loss': 0.5168119668960571, 'val_acc': 0.7708333134651184}

{'acc': 0.6842857003211975, 'loss': 0.574131190776825}

================================================================================2021-01-16 23:41:41

epoch = 4

{'val_loss': 0.3789764940738678, 'val_acc': 0.8766666054725647}

{'acc': 0.7900000214576721, 'loss': 0.4608381390571594}

================================================================================2021-01-16 23:41:41

epoch = 5

{'val_loss': 0.2496153712272644, 'val_acc': 0.9208332896232605}

{'acc': 0.8982142806053162, 'loss': 0.3223666250705719}

================================================================================2021-01-16 23:41:42

epoch = 6

{'val_loss': 0.21876734495162964, 'val_acc': 0.9124999642372131}

{'acc': 0.908214271068573, 'loss': 0.24333418905735016}

================================================================================2021-01-16 23:41:43

epoch = 7

{'val_loss': 0.19420616328716278, 'val_acc': 0.9266666769981384}

{'acc': 0.9132143259048462, 'loss': 0.2207658737897873}

================================================================================2021-01-16 23:41:44

epoch = 8

{'val_loss': 0.1835813671350479, 'val_acc': 0.9225000739097595}

{'acc': 0.9185715317726135, 'loss': 0.20826208591461182}

================================================================================2021-01-16 23:41:45

epoch = 9

{'val_loss': 0.17465434968471527, 'val_acc': 0.9300000071525574}

{'acc': 0.9189285039901733, 'loss': 0.20436131954193115}
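The loss and acc values in this log come from shared_step, which computes a binary cross-entropy loss and an accuracy at a 0.5 threshold. Here is a plain-Python sketch of both quantities on made-up numbers (no PyTorch; the sample probabilities and labels are invented for illustration).

```python
import math


def binary_cross_entropy(probs, targets):
    """Mean BCE for predicted probabilities in (0, 1) and 0/1 targets."""
    losses = [-(t * math.log(p) + (1 - t) * math.log(1 - p))
              for p, t in zip(probs, targets)]
    return sum(losses) / len(losses)


def accuracy(probs, targets, threshold=0.5):
    """Share of samples whose thresholded prediction equals the target."""
    preds = [1.0 if p > threshold else 0.0 for p in probs]
    return sum(1 for a, t in zip(preds, targets) if a == t) / len(targets)


probs = [0.9, 0.2, 0.6, 0.4]      # made-up sigmoid outputs
targets = [1.0, 0.0, 0.0, 1.0]    # made-up labels

bce = binary_cross_entropy(probs, targets)
acc = accuracy(probs, targets)
print(round(bce, 4), acc)
```

A model that outputs about 0.5 for every sample has a loss near ln 2 ≈ 0.693, which is close to the loss of roughly 0.68 at epoch 0 in the log.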

# Visualize the results

fig, (ax1,ax2) = plt.subplots(nrows=1,ncols=2,figsize = (12,5))

ax1.scatter(Xp[:,0],Xp[:,1], c="r")

ax1.scatter(Xn[:,0],Xn[:,1],c = "g")

ax1.legend(["positive","negative"]);

ax1.set_title("y_true");

Xp_pred = X[torch.squeeze(model.forward(X)>=0.5)]

Xn_pred = X[torch.squeeze(model.forward(X)<0.5)]

ax2.scatter(Xp_pred[:,0],Xp_pred[:,1],c = "r")

ax2.scatter(Xn_pred[:,0],Xn_pred[:,1],c = "g")

ax2.legend(["positive","negative"]);

ax2.set_title("y_pred");

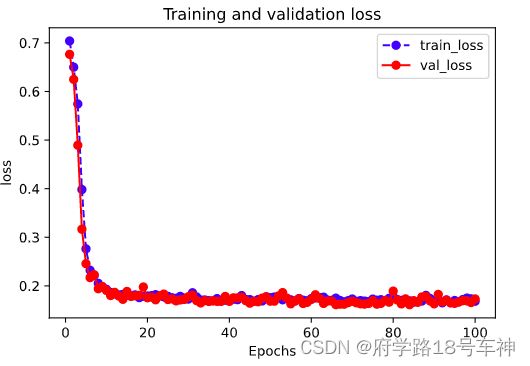

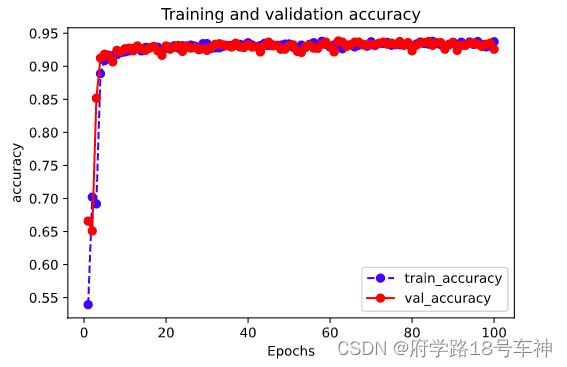

- Evaluate the model

import pandas as pd

history = model.history

dfhistory = pd.DataFrame(history)

dfhistory

import matplotlib.pyplot as plt

def plot_metric(dfhistory, metric):

    train_metrics = dfhistory[metric]

    val_metrics = dfhistory['val_'+metric]

    epochs = range(1, len(train_metrics) + 1)

    plt.plot(epochs, train_metrics, 'bo--')

    plt.plot(epochs, val_metrics, 'ro-')

    plt.title('Training and validation '+ metric)

    plt.xlabel("Epochs")

    plt.ylabel(metric)

    plt.legend(["train_"+metric, 'val_'+metric])

    plt.show()

plot_metric(dfhistory,"loss")

plot_metric(dfhistory,"acc")

results = trainer.test(model, test_dataloaders=dl_valid, verbose = False)

print(results[0])

{'test_loss': 0.18403057754039764, 'test_acc': 0.949999988079071}

- Use the model

def predict(model,dl):

    model.eval()

    prediction = torch.cat([model.forward(t[0].to(model.device)) for t in dl])

    result = torch.where(prediction>0.5,torch.ones_like(prediction),torch.zeros_like(prediction))

    return(result.data)

result = predict(model,dl_valid)

result

Result:

tensor([[0.],

[0.],

[0.],

...,

[1.],

[1.],

[1.]])

Previous posts

| Day | "Master PyTorch in 20 Days" topic |
|---|---|
| Day01 | Structured data modeling workflow example |
| Day02 | Image data modeling workflow example |
| Day03 | Text data modeling workflow example |
| Day04 | Time series modeling workflow example |
| Day05 | Tensor data structures |
| Day06 | Automatic differentiation |
| Day07 | Dynamic computation graphs |
| Day08 | Low-level API demo |
| Day09 | Mid-level API demo |

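Summary

This post covered the high-level API demo: following tf.keras.Model, PyTorch's nn.Module is wrapped in a torchkeras.Model class with fit, evaluate, predict, and summary methods, which amounts to a user-defined high-level API.

The examples mainly show how to use this high-level API for a linear regression model and a DNN binary classifier. It may still be a little hard for complete beginners, so I have explained most of the libraries used and commented parts of the code to help. This is for reference only; if you spot a mistake, please leave a comment. One last thing: long live open source~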

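A shout-out to the original author:

If the book helps you and you'd like to encourage its author, remember to give the project a star ⭐️ and share it with your friends!

The repository is here: https://github.com/lyhue1991/eat_pytorch_in_20_days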

Reference

- https://github.com/lyhue1991/eat_pytorch_in_20_days

Book source code:

Link: https://pan.baidu.com/s/1P3WRVTYMpv1DUiK-y9FG3A

Extraction code: yyds
