Java Web Crawlers

1. Fundamentals

1.1 Basic Concepts of Web Crawlers

- Why crawlers:

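As the Internet has grown rapidly, online resources have become ever richer, and extracting the information you need from the web has become crucial. Today, web crawling is one of the most effective ways to obtain data from the web. For example, suppose you are particularly interested in a thread on Baidu Tieba that has more than 1,000 pages of replies: copying the replies one by one is impractical, whereas a web crawler can collect everything in the thread with little effort.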

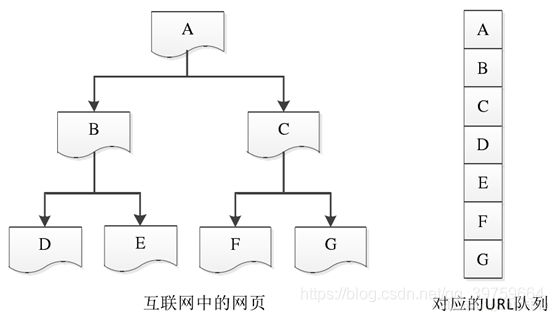

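The most widespread application of web crawlers is in search engines such as Baidu, Google and Bing, where the crawler performs the most critical step of the search process: fetching web page content. The figure below is a schematic of a simple search engine.

- What is a web crawler: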

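A web crawler, also called a web spider or Web information collector, is a computer program or automated script that automatically fetches or downloads information from the web according to certain rules.

- In the narrow sense: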

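A software program that uses the standard HTTP protocol to traverse the information space of the World Wide Web by following hyperlinks (e.g. https://www.baidu.com/) and applying Web document retrieval methods (e.g. depth-first traversal).

- From a functional point of view: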

Determine the queue of URLs to crawl, fetch the content of each URL (e.g. HTML or JSON), parse that content, and store the extracted data.

In essence:

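A web crawler obtains data from servers by imitating a browser: it sends the same kind of HTTP requests a browser would and processes the responses programmatically.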
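The functional view above (URL queue, fetch, parse, store) can be sketched as a breadth-first loop. This is a minimal illustration under stated assumptions, not the approach used later in this document: the fetcher function is a hypothetical stand-in for a real HTTP client, an in-memory map plays the role of storage, and links are extracted with a naive regular expression where a real crawler would use an HTML parser such as jsoup.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class CrawlLoopSketch {
    // Naive link extraction for illustration only; prefer a real HTML parser in practice.
    private static final Pattern HREF = Pattern.compile("href=\"([^\"]+)\"");

    /**
     * Breadth-first crawl starting at seed.
     * fetcher is a hypothetical stand-in for an HTTP GET; it returns null when a URL cannot be fetched.
     * Returns the stored pages (URL -> content) in the order they were crawled.
     */
    static Map<String, String> crawl(String seed, Function<String, String> fetcher, int maxPages) {
        Deque<String> queue = new ArrayDeque<>();          // URLs waiting to be crawled
        Set<String> seen = new HashSet<>();                // every URL ever queued, so none is crawled twice
        Map<String, String> store = new LinkedHashMap<>(); // stand-in for real storage (file, database, ...)
        queue.add(seed);
        seen.add(seed);
        while (!queue.isEmpty() && store.size() < maxPages) {
            String url = queue.poll();
            String content = fetcher.apply(url);           // fetch
            if (content == null) {
                continue;                                  // unreachable page: skip it
            }
            store.put(url, content);                       // store
            Matcher m = HREF.matcher(content);             // parse: find outgoing links
            while (m.find()) {
                String link = m.group(1);
                if (seen.add(link)) {
                    queue.add(link);                       // enqueue links not seen before
                }
            }
        }
        return store;
    }

    public static void main(String[] args) {
        // A tiny in-memory "web": page name -> HTML
        Map<String, String> web = Map.of(
                "a", "<a href=\"b\">B</a> <a href=\"c\">C</a>",
                "b", "<a href=\"a\">A</a> <a href=\"d\">D</a>",
                "c", "no links here",
                "d", "leaf page");
        System.out.println(crawl("a", web::get, 10).keySet()); // prints [a, b, c, d]
    }
}
```

The queue makes the traversal breadth-first; swapping it for a stack (pushing and popping at the same end) would give the depth-first order mentioned above.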

1.2 Types of Web Crawlers

- General-purpose web crawlers

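Starting from a set of seed URLs, the crawl expands to the entire Web, mainly to collect data for portal search engines and large Web service providers. General-purpose crawlers cover an enormous range and volume of pages, so they place high demands on crawl speed and storage space but few on the order in which pages are crawled. They usually work in parallel and have considerable practical value.

- Focused web crawlers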

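Also called topical web crawlers, they selectively crawl pages related to a predefined topic. Compared with general-purpose crawlers, a focused crawler only needs to crawl topic-related pages, which greatly saves hardware and network resources; because fewer pages are stored, they can also be refreshed faster, which serves specific groups of users who need information from specific domains. A focused crawler is usually designed with link-filtering and content-filtering modules.

- Incremental web crawlers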

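A crawler that updates already downloaded pages incrementally and crawls only pages that are new or have changed. It keeps the crawled pages as fresh as possible and does not re-collect pages it has already collected, which avoids duplicate data and saves time and storage. A common design is to store a timestamp for each page in the database and compare timestamps to decide whether the program needs to crawl the page again.

- Deep Web crawlers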

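These target Web pages whose content mostly cannot be reached through static links and only becomes available after the user submits form data. For example, a crawler that has to simulate a login falls into this category.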

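Other content is only available after the user submits keywords, for example product information on JD.com or Taobao, which is returned after submitting a keyword and a price range.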
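The timestamp check described for incremental crawlers can be sketched as follows. This is a hypothetical illustration: an in-memory map stands in for the database table, and the lastModified value is assumed to come from somewhere such as the page's Last-Modified response header or a date shown on the page itself.

```java
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

class IncrementalCrawlSketch {
    // Stand-in for the database table: URL -> timestamp of the version already collected
    private final Map<String, Instant> collected = new HashMap<>();

    /**
     * Returns true if the page is new or has changed since it was last collected,
     * and records the new timestamp; returns false (skip the page) otherwise.
     */
    boolean needsCrawl(String url, Instant lastModified) {
        Instant previous = collected.get(url);
        if (previous != null && !lastModified.isAfter(previous)) {
            return false; // already collected and not changed since
        }
        collected.put(url, lastModified);
        return true;
    }

    public static void main(String[] args) {
        IncrementalCrawlSketch crawler = new IncrementalCrawlSketch();
        Instant t = Instant.parse("2020-05-27T10:00:00Z");
        System.out.println(crawler.needsCrawl("/post/1", t));                 // true: new page
        System.out.println(crawler.needsCrawl("/post/1", t));                 // false: unchanged
        System.out.println(crawler.needsCrawl("/post/1", t.plusSeconds(60))); // true: updated
    }
}
```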

1.3 Crawler Workflow

1.4 Crawling Strategies

2. Getting Started with Java Crawlers

2.1 Environment Setup

Required dependencies (Maven pom.xml):

<dependencies>
    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>4.5.3</version>
    </dependency>
    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>1.10.3</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
    <dependency>
        <groupId>org.apache.commons</groupId>
        <artifactId>commons-lang3</artifactId>
        <version>3.7</version>
    </dependency>
    <dependency>
        <groupId>commons-io</groupId>
        <artifactId>commons-io</artifactId>
        <version>2.6</version>
    </dependency>
    <dependency>
        <groupId>org.slf4j</groupId>
        <artifactId>slf4j-log4j12</artifactId>
        <version>1.7.25</version>
    </dependency>
</dependencies>

log4j.properties (put it in src/main/resources so that log4j finds it on the classpath):

log4j.rootLogger=DEBUG,A1
log4j.logger.cn.itcast = DEBUG
log4j.appender.A1=org.apache.log4j.ConsoleAppender
log4j.appender.A1.layout=org.apache.log4j.PatternLayout
log4j.appender.A1.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c]-[%p] %m%n

2.2 URLConnection

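URLConnection is an abstract class that ships with the JDK; it represents a communication link between an application and a URL. In a web crawler, we can use URLConnection to request a URL, obtain the response stream, and read the response body from that stream.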

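import org.junit.Test;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

public class URLConnectionTest {

    @Test
    public void testGet() throws Exception {
        // 1. The URL to crawl
        URL url = new URL("http://www.itcast.cn/?username=xxx");
        // 2. Open the connection
        HttpURLConnection urlConnection = (HttpURLConnection) url.openConnection();
        // 3. Configure the request: method, headers, timeouts
        urlConnection.setRequestMethod("GET"); // method names are case-sensitive: "get" throws ProtocolException
        urlConnection.setRequestProperty("Pragma", "no-cache");
        urlConnection.setConnectTimeout(30000); // connect timeout, in milliseconds
        urlConnection.setReadTimeout(30000);    // read timeout, in milliseconds
        // 4. Read the response; try-with-resources closes the reader and the underlying stream
        StringBuilder html = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(urlConnection.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                html.append(line).append('\n');
            }
        } finally {
            // 5. Release the connection
            urlConnection.disconnect();
        }
        System.out.println(html);
    }

    @Test
    public void testPost() throws Exception {
        // 1. The URL of the home page
        URL url = new URL("http://www.itcast.cn");
        // 2. Open the connection
        HttpURLConnection urlConnection = (HttpURLConnection) url.openConnection();
        // 3. Configure method, headers and timeouts
        urlConnection.setRequestMethod("POST");
        urlConnection.setDoOutput(true); // output is disabled by default in the JDK; POST needs it to send a body
        urlConnection.setRequestProperty("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36");
        urlConnection.setRequestProperty("Content-Type", "application/x-www-form-urlencoded");
        urlConnection.setConnectTimeout(30000); // connect timeout, in milliseconds
        urlConnection.setReadTimeout(30000);    // read timeout, in milliseconds
        // Write the form parameters as the request body; closing the stream finishes the body
        try (OutputStream out = urlConnection.getOutputStream()) {
            out.write("username=zhangsan&password=123".getBytes(StandardCharsets.UTF_8));
        }
        // 4. Read the response
        StringBuilder html = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(urlConnection.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                html.append(line).append('\n');
            }
        } finally {
            // 5. Release the connection
            urlConnection.disconnect();
        }
        System.out.println(html);
    }
}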
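The two tests above do not check the HTTP status. For a 4xx or 5xx response, getInputStream() throws an IOException (FileNotFoundException for 404) instead of returning the page, while getResponseCode() simply reports the status. A minimal sketch of a reusable GET helper that checks the status first might look like this; the class and method names are hypothetical, it assumes the page is UTF-8 encoded, and it needs Java 9+ for readAllBytes().

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

class UrlConnectionFetch {
    /**
     * Sends a GET request and returns the body decoded as UTF-8.
     * Throws IOException when the server answers with anything other than 200 OK.
     */
    static String get(String address) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(address).toURL().openConnection();
        conn.setRequestMethod("GET");
        conn.setConnectTimeout(30_000); // ms allowed to establish the connection
        conn.setReadTimeout(30_000);    // ms allowed to wait for data
        try {
            // getResponseCode() sends the request and returns the status, without throwing on 4xx/5xx
            int status = conn.getResponseCode();
            if (status != HttpURLConnection.HTTP_OK) {
                throw new IOException("HTTP " + status + " for " + address);
            }
            try (InputStream in = conn.getInputStream()) {
                return new String(in.readAllBytes(), StandardCharsets.UTF_8); // assumes a UTF-8 page
            }
        } finally {
            conn.disconnect(); // done with this connection
        }
    }
}
```

Usage: String html = UrlConnectionFetch.get("http://www.itcast.cn/"); Reading all bytes and decoding them once also keeps the page's original line endings intact.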

2.3 HttpClient

A common combination is to fetch web pages with HttpClient and parse them with jsoup.

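import org.apache.http.NameValuePair;
import org.apache.http.client.entity.UrlEncodedFormEntity;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.client.methods.HttpPost;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.message.BasicNameValuePair;
import org.apache.http.util.EntityUtils;
import org.junit.Test;

import java.util.ArrayList;
import java.util.List;

/**
 * Demonstrates a web crawler built on HttpClient
 */
public class HttpClientTest {

    // Demonstrates a GET request with HttpClient
    @Test
    public void testGet() throws Exception {
        // 1. Create the HttpClient; try-with-resources closes the response first, then the client
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create and configure the GET request
            HttpGet httpGet = new HttpGet("http://www.itcast.cn/?username=java");
            httpGet.setHeader("Pragma", "no-cache");
            // 3. Execute the request
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Check the status code and read the response body
                if (response.getStatusLine().getStatusCode() == 200) {
                    String html = EntityUtils.toString(response.getEntity(), "utf-8");
                    System.out.println(html);
                }
            } // 5. Resources are closed automatically
        }
    }

    // Demonstrates a POST request with form parameters
    @Test
    public void testPost() throws Exception {
        // 1. Create the HttpClient
        try (CloseableHttpClient httpClient = HttpClients.createDefault()) {
            // 2. Create the POST request
            HttpPost httpPost = new HttpPost("http://www.itcast.cn/");
            // Collect the form parameters in a list
            List<NameValuePair> params = new ArrayList<>();
            params.add(new BasicNameValuePair("username", "java"));
            UrlEncodedFormEntity entity = new UrlEncodedFormEntity(params, "utf-8");
            httpPost.setEntity(entity); // set the request body (the form parameters)
            httpPost.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.108 Safari/537.36");
            // 3. Send the request
            try (CloseableHttpResponse response = httpClient.execute(httpPost)) {
                // 4. Check the status code and read the response body
                if (response.getStatusLine().getStatusCode() == 200) {
                    String html = EntityUtils.toString(response.getEntity(), "utf-8");
                    System.out.println(html);
                }
            } // 5. Resources are closed automatically
        }
    }
}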
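UrlEncodedFormEntity sends the parameters as an application/x-www-form-urlencoded body. To show what that body looks like on the wire, here is a hypothetical JDK-only sketch (Java 11+) that builds the same kind of body with URLEncoder and sends it with java.net.http.HttpClient instead of Apache HttpClient. It is an illustration of the same request, not a replacement for the library code above.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;

class FormPostSketch {
    /** Encodes parameters as application/x-www-form-urlencoded: key=value pairs joined by '&'. */
    static String formEncode(Map<String, String> params) {
        StringJoiner body = new StringJoiner("&");
        for (Map.Entry<String, String> e : params.entrySet()) {
            body.add(URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                    + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
        }
        return body.toString();
    }

    /** POSTs the form to url; returns the body on status 200, otherwise null. */
    static String post(HttpClient client, String url, Map<String, String> params)
            throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formEncode(params)))
                .build();
        HttpResponse<String> response =
                client.send(request, HttpResponse.BodyHandlers.ofString(StandardCharsets.UTF_8));
        return response.statusCode() == 200 ? response.body() : null;
    }
}
```

Usage: FormPostSketch.post(HttpClient.newHttpClient(), "http://www.itcast.cn/", Map.of("username", "java")). Note that spaces become '+' and reserved characters such as '&' are percent-encoded, so a value can never be mistaken for a parameter separator.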

2.4 Connection Pool

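import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
import org.apache.http.util.EntityUtils;
import org.junit.Test;

public class ConnectionPoolTest {

    @Test
    public void testPool() throws Exception {
        // 1. Create the HttpClient connection manager (the pool)
        PoolingHttpClientConnectionManager cm = new PoolingHttpClientConnectionManager();
        // 2. Configure the pool limits
        cm.setMaxTotal(200);          // maximum number of connections in the pool
        cm.setDefaultMaxPerRoute(20); // maximum connections per route (per target host)
        doGet(cm);
        doGet(cm);
        cm.shutdown(); // close all pooled connections once we are done
    }

    private void doGet(PoolingHttpClientConnectionManager cm) throws Exception {
        // 3. Build an HttpClient backed by the shared pool
        CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();
        // Create the GET request
        HttpGet httpGet = new HttpGet("http://www.itcast.cn/");
        // Send the request; closing the response returns its connection to the pool
        try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
            if (response.getStatusLine().getStatusCode() == 200) {
                String html = EntityUtils.toString(response.getEntity(), "utf-8");
                System.out.println(html);
            }
        }
        // Do not close httpClient here: closing it would also shut down the shared connection manager
    }
}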
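To make the two pool limits concrete, here is a conceptual model (not Apache's implementation) in which one semaphore caps the total number of leased connections (setMaxTotal) and one semaphore per host caps the connections to each route (setDefaultMaxPerRoute). One difference is worth noting: this sketch fails immediately when a limit is reached, whereas PoolingHttpClientConnectionManager makes the caller wait for a free connection, up to the connection request timeout configured in 2.5.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Semaphore;

/** Conceptual model of maxTotal / maxPerRoute; not how Apache HttpClient is implemented. */
class PoolLimitsModel {
    private final Semaphore total;  // permits = connections still available overall
    private final int maxPerRoute;
    private final Map<String, Semaphore> perRoute = new ConcurrentHashMap<>();

    PoolLimitsModel(int maxTotal, int maxPerRoute) {
        this.total = new Semaphore(maxTotal);
        this.maxPerRoute = maxPerRoute;
    }

    /** Tries to lease a connection to host; returns false when either limit is exhausted. */
    boolean tryLease(String host) {
        Semaphore route = perRoute.computeIfAbsent(host, h -> new Semaphore(maxPerRoute));
        if (!route.tryAcquire()) {
            return false;    // this host already has maxPerRoute connections
        }
        if (!total.tryAcquire()) {
            route.release(); // undo the per-route permit
            return false;    // the whole pool is exhausted
        }
        return true;
    }

    /** Returns a connection previously leased with a successful tryLease(host). */
    void release(String host) {
        perRoute.get(host).release();
        total.release();
    }

    public static void main(String[] args) {
        PoolLimitsModel pool = new PoolLimitsModel(3, 2); // maxTotal = 3, maxPerRoute = 2
        System.out.println(pool.tryLease("a.example"));   // true
        System.out.println(pool.tryLease("a.example"));   // true
        System.out.println(pool.tryLease("a.example"));   // false: per-route limit reached
        System.out.println(pool.tryLease("b.example"));   // true: 3 connections leased in total
        System.out.println(pool.tryLease("c.example"));   // false: total limit reached
    }
}
```

With the values used above (200 total, 20 per route), a crawler hitting a single site can use at most 20 connections, so maxTotal only matters once it spreads requests across many hosts.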

2.5 Configuring Timeouts

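import org.apache.http.HttpHost;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;
import org.junit.Test;

public class RequestConfigTest {

    // Timeout (and proxy) configuration
    @Test
    public void testConfig() throws Exception {
        // Build the request configuration
        RequestConfig requestConfig = RequestConfig.custom()
                .setConnectTimeout(10000)           // max time (ms) to establish the connection
                .setSocketTimeout(10000)            // max time (ms) to wait for data once connected
                .setConnectionRequestTimeout(10000) // max time (ms) to obtain a connection from the connection manager
                .setProxy(new HttpHost("47.116.76.219", 80)) // proxy server; replace it with one you can reach
                .build();
        // 1. Create an HttpClient that applies this configuration to every request
        try (CloseableHttpClient httpClient = HttpClients.custom().setDefaultRequestConfig(requestConfig).build()) {
            // 2. Create the GET request
            HttpGet httpGet = new HttpGet("http://www.itcast.cn/");
            // 3. Send the request
            try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
                // 4. Read the response body
                if (response.getStatusLine().getStatusCode() == 200) {
                    String html = EntityUtils.toString(response.getEntity(), "utf-8");
                    System.out.println(html);
                }
            } // the response and the client are closed automatically
        }
    }
}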

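The configuration also sets a proxy server: the crawler sends its request to the proxy first, and the proxy then accesses the target website on the crawler's behalf.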
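The JDK's HttpURLConnection exposes the same ideas, which can help when reasoning about what each setting does: setConnectTimeout bounds the TCP connect, setReadTimeout bounds how long to wait for data (the counterpart of setSocketTimeout), and openConnection(Proxy) routes the request through a proxy. HttpURLConnection has no connection pool, so it has no counterpart to setConnectionRequestTimeout. The helper below is a hypothetical sketch, not part of the HttpClient API.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.Proxy;
import java.net.URI;
import java.nio.charset.StandardCharsets;

class TimeoutProxySketch {
    /**
     * GETs url through the given proxy with explicit timeouts and returns the body as UTF-8 text.
     * Pass Proxy.NO_PROXY for a direct connection, or for an HTTP proxy:
     * new Proxy(Proxy.Type.HTTP, new java.net.InetSocketAddress(host, port)).
     * A java.net.SocketTimeoutException is thrown if either timeout expires.
     */
    static String get(String url, Proxy proxy, int connectTimeoutMs, int readTimeoutMs) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection(proxy);
        conn.setConnectTimeout(connectTimeoutMs); // max time to establish the TCP connection
        conn.setReadTimeout(readTimeoutMs);       // max time to wait for data once connected
        try (InputStream in = conn.getInputStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }
}
```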

2.6 Wrapping HttpClient in an HttpUtils Helper

package com.tjk.utils;

import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
import org.apache.http.util.EntityUtils;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

public final class HttpUtils {

    private static final PoolingHttpClientConnectionManager cm; // shared HttpClient connection manager (pool)
    private static final RequestConfig config;                  // shared request configuration
    private static final List<String> userAgentList;            // User-Agent strings to rotate through

    // The static initializer runs once, when the class is loaded
    static {
        cm = new PoolingHttpClientConnectionManager();
        cm.setMaxTotal(200);          // maximum number of connections
        cm.setDefaultMaxPerRoute(20); // maximum connections per route (host)
        config = RequestConfig.custom()
                .setConnectTimeout(10000)           // time allowed to establish a connection
                .setSocketTimeout(10000)            // time allowed to wait for data once connected
                .setConnectionRequestTimeout(10000) // time allowed to obtain a connection from the pool
                .build();
        userAgentList = new ArrayList<>();
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36");
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:73.0) Gecko/20100101 Firefox/73.0");
        userAgentList.add("Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/13.0.5 Safari/605.1.15");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 Edge/16.16299");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.98 Safari/537.36");
        userAgentList.add("Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:63.0) Gecko/20100101 Firefox/63.0");
    }

    private HttpUtils() {
        // utility class: not meant to be instantiated
    }

    /** Fetches a page with GET; returns its HTML, or null on a non-200 status or an I/O error. */
    public static String getHtml(String url) {
        // 1. Build an HttpClient backed by the shared connection pool
        CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();
        // 2. Create the GET request
        HttpGet httpGet = new HttpGet(url);
        // 3. Apply the request configuration and a randomly chosen User-Agent
        httpGet.setConfig(config);
        httpGet.setHeader("User-Agent", userAgentList.get(ThreadLocalRandom.current().nextInt(userAgentList.size())));
        // 4. Send the request; closing the response returns the connection to the pool
        try (CloseableHttpResponse response = httpClient.execute(httpGet)) {
            // 5. Read the response body
            if (response.getStatusLine().getStatusCode() == 200) {
                return response.getEntity() == null ? "" : EntityUtils.toString(response.getEntity(), "utf-8");
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        // httpClient is intentionally not closed: closing it would shut down the shared pool
        return null;
    }

    public static void main(String[] args) {
        String html = HttpUtils.getHtml("http://www.itcast.cn");
        System.out.println(html);
    }
}
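The random User-Agent selection in getHtml can be factored into a small reusable class. In this hypothetical sketch, ThreadLocalRandom avoids allocating a new Random on every call and is safe to use from several crawler threads, and List.copyOf (Java 10+) keeps the list immutable after construction. The strings in the example are short placeholders, not real browser User-Agents.

```java
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

class UserAgentRotator {
    private final List<String> agents;

    UserAgentRotator(List<String> agents) {
        if (agents.isEmpty()) {
            throw new IllegalArgumentException("at least one User-Agent is required");
        }
        this.agents = List.copyOf(agents); // immutable defensive copy
    }

    /** Returns a User-Agent chosen uniformly at random, to be set on each request. */
    String next() {
        return agents.get(ThreadLocalRandom.current().nextInt(agents.size()));
    }

    public static void main(String[] args) {
        UserAgentRotator rotator = new UserAgentRotator(List.of("UA-1", "UA-2", "UA-3"));
        for (int i = 0; i < 3; i++) {
            System.out.println(rotator.next()); // one of UA-1, UA-2, UA-3
        }
    }
}
```

With HttpClient it would be applied per request as httpGet.setHeader("User-Agent", rotator.next()).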

2.7 Introduction to jsoup

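jsoup is a Java library for requesting and parsing HTML: it can fetch a URL directly or parse HTML text. It provides a very convenient API for extracting and manipulating data through DOM methods, CSS selectors, and jQuery-like operations.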

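The main features of jsoup are:

- Parse HTML from a URL, a file, or a string.
- Find and extract data using DOM traversal or CSS selectors.
- Manipulate HTML elements, attributes, and text.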

package cn.itcast;

import org.apache.commons.io.FileUtils;
import org.jsoup.Jsoup;
import org.jsoup.nodes.Document;
import org.jsoup.nodes.Element;
import org.jsoup.select.Elements;
import org.junit.Test;

import java.io.File;

/**
 * Author itcast
 * Date 2020/5/27 10:02
 * Desc Demonstrates page parsing with jsoup (the tests read a local file named jsoup.html)
 */
public class JsoupTest {

    @Test
    public void testGetDocument() throws Exception {
        // Other ways to obtain a Document:
        //Document doc = Jsoup.connect("http://www.itcast.cn/").get();         // request a URL
        //Document doc = Jsoup.parse(new URL("http://www.itcast.cn/"), 1000); // request a URL with a 1000 ms timeout
        //Document doc = Jsoup.parse(new File("jsoup.html"), "UTF-8");        // parse a file
        // Parse an HTML string (read from a file here with commons-io)
        String htmlStr = FileUtils.readFileToString(new File("jsoup.html"), "UTF-8");
        Document doc = Jsoup.parse(htmlStr);
        System.out.println(doc);
        Element titleElement = doc.getElementsByTag("title").first();
        String title = titleElement.text();
        System.out.println(title);
    }

    @Test
    public void testGetElement() throws Exception {
        Document doc = Jsoup.parse(new File("jsoup.html"), "UTF-8");
        //System.out.println(doc);
        // Get an element by id: getElementById
        Element element = doc.getElementById("city_bj");
        String text = element.text();
        //System.out.println(text);
        // Get elements by tag name: getElementsByTag
        Elements elements = doc.getElementsByTag("title");
        Element titleElement = elements.first();
        String title = titleElement.text();
        //System.out.println(title);
        // Get elements by class: getElementsByClass
        Element element1 = doc.getElementsByClass("s_name").last();
        //System.out.println(element1.text());
        // Get elements that have a given attribute: getElementsByAttribute
        String abc = doc.getElementsByAttribute("abc").first().text();
        System.out.println(abc);
    }

    @Test
    public void testElementOperator() throws Exception {
        Document doc = Jsoup.parse(new File("jsoup.html"), "UTF-8");
        Element element = doc.getElementsByAttributeValue("class", "city_con").first();
        // The element's id
        String id = element.id();
        System.out.println(id);
        // The element's class name
        String className = element.className();
        System.out.println(className);
        // The value of a specific attribute
        String id1 = element.attr("id");
        System.out.println(id1);
        // All attributes of the element
        String attrs = element.attributes().toString();
        System.out.println(attrs);
        // The element's text content
        String text = element.text();
        System.out.println(text);
    }

    @Test
    public void testSelect() throws Exception {
        Document doc = Jsoup.parse(new File("jsoup.html"), "UTF-8");
        // Select by tag name
        Elements spans = doc.select("span");
        for (Element span : spans) {
            //System.out.println(span.text());
        }
        // Select by id
        String text = doc.select("#city_bj").text();
        //System.out.println(text);
        // Select by class
        String text1 = doc.select(".class_a").text();
        //System.out.println(text1);
        // Select by attribute name
        String text2 = doc.select("[abc]").text();
        //System.out.println(text2);
        // Select by attribute value
        String text3 = doc.select("[class=s_name]").text();
        System.out.println(text3);
    }

    @Test
    public void testSelect2() throws Exception {
        Document doc = Jsoup.parse(new File("jsoup.html"), "UTF-8");
        // Tag name + id
        String text = doc.select("li#test").text();
        //System.out.println(text);
        // Tag name + class
        String text1 = doc.select("li.class_a").text();
        //System.out.println(text1);
        // Tag name + attribute
        String text2 = doc.select("span[abc]").text();
        //System.out.println(text2);
        // Any combination of the above
        String text3 = doc.select("span[abc].s_name").text();
        //System.out.println(text3);
        // Direct children (parent > child): li elements directly under a ul that is directly under .city_con
        String text4 = doc.select(".city_con > ul > li").text();
        //System.out.println(text4);
        // Descendants (ancestor descendant): every li anywhere under .city_con
        String text5 = doc.select(".city_con li").text();
        //System.out.println(text5);
        // All direct children of .city_con, whatever their tag
        String text6 = doc.select(".city_con > *").text();
        System.out.println(text6);
    }
}