MXNet to ONNX to Caffe: steps and troubleshooting

Three conversion steps, followed by an accuracy check:

1. MXNet to ONNX

https://blog.csdn.net/lhl_blog/article/details/90672695

2. Simplify the ONNX model to remove the extra layers that the ONNX export adds

https://github.com/daquexian/onnx-simplifier

3. ONNX to Caffe

https://github.com/MTlab/onnx2caffe

4. Check that the outputs agree to 5 decimal places

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import sys
sys.path.insert(0, "/home/shiyy/nas/NVCaffe/python")  # path to the (NV)Caffe Python bindings

from collections import namedtuple

import caffe
import numpy as np
import mxnet as mx
import mxnet.contrib.onnx as onnx_mxnet
from mxnet import nd


def test_mx_onnx():
    onnx_path = "./11.onnx"
    sym, arg_params, aux_params = onnx_mxnet.import_model(onnx_path)

    # Obtain the input names of the model via the model metadata API.
    model_metadata = onnx_mxnet.get_model_metadata(onnx_path)
    print(model_metadata)
    data_names = [inputs[0] for inputs in model_metadata.get('input_tensor_data')]
    print("input variable:", data_names)  # 'input_tensor_data': [('data', (1, 3, 224, 224))], so the input is 'data'

    ctx = mx.cpu()

    # Gluon alternative (on this model it raises the ValueError discussed later in this post):
    # import warnings
    # with warnings.catch_warnings():
    #     warnings.simplefilter("ignore")
    #     net = gluon.nn.SymbolBlock(outputs=sym, inputs=mx.sym.var('data'))
    # net_params = net.collect_params()
    # for param in arg_params:
    #     if param in net_params:
    #         net_params[param]._load_init(arg_params[param], ctx=ctx)
    # for param in aux_params:
    #     if param in net_params:
    #         net_params[param]._load_init(aux_params[param], ctx=ctx)
    # out = net(batch)
    # results = [o for o in out.asnumpy()]

    # Graph inputs that are not parameters are the real data inputs.
    data_names = [graph_input for graph_input in sym.list_inputs()
                  if graph_input not in arg_params and graph_input not in aux_params]
    print(data_names)  # ['data']

    onnx_mod = mx.mod.Module(symbol=sym, data_names=data_names, context=ctx, label_names=None)
    batch = nd.array(nd.random.randn(1, 3, 224, 224), ctx=ctx).astype(np.float32)  # NCHW
    onnx_mod.bind(for_training=False, data_shapes=[(data_names[0], batch.shape)], label_shapes=None)
    onnx_mod.set_params(arg_params=arg_params, aux_params=aux_params,
                        allow_missing=True, allow_extra=True)

    Batch = namedtuple("Batch", ["data"])
    onnx_mod.forward(Batch([batch]))
    out = onnx_mod.get_outputs()
    results = [o for o in out[0].asnumpy()]  # one output vector per sample

    # ---------------- Caffe output ----------------
    caffe_model = caffe.Net("./mynet.prototxt", "./mynet.caffemodel", caffe.TEST)
    data = batch.asnumpy().astype(np.float32, copy=False)
    caffe_model.blobs["data"].reshape(*data.shape)  # reshape the network input
    output_blobs = caffe_model.forward_all(data=data)
    caffe_out = output_blobs["fc1"].flatten()

    print(results[0][0:10])
    print("mx onnx model output length", len(results[0]))
    print("\n ######################################## \n")
    print(caffe_out[0:10])
    print("caffe model output length", len(caffe_out))
    print("\n ######################################## \n")

    np.testing.assert_almost_equal(results[0], caffe_out, decimal=5)
    print("Converted model matches to 5 decimal places; the result looks good!")


if __name__ == "__main__":
    test_mx_onnx()
```
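The final assert_almost_equal stops at the first mismatch without saying how large the gap is. The hypothetical helper compare_outputs below (a sketch, not part of the script above) reports the maximum absolute difference and applies the criterion NumPy documents for assert_almost_equal, abs(desired - actual) < 1.5 * 10**(-decimal).

```python
import numpy as np


def compare_outputs(ref, test, decimal=5):
    """Return (max_abs_diff, passed) for two output vectors (hypothetical helper)."""
    ref = np.asarray(ref, dtype=np.float64).ravel()
    test = np.asarray(test, dtype=np.float64).ravel()
    if ref.shape != test.shape:
        raise ValueError("shape mismatch: %s vs %s" % (ref.shape, test.shape))
    max_abs_diff = float(np.max(np.abs(ref - test)))
    # Same threshold that np.testing.assert_almost_equal uses for `decimal`.
    passed = max_abs_diff < 1.5 * 10 ** (-decimal)
    return max_abs_diff, passed


# Usage with made-up vectors standing in for the MXNet and Caffe outputs.
mx_out = np.array([0.1234567, -2.5, 3.0], dtype=np.float32)
caffe_out = mx_out + np.float32(1e-6)
diff, ok = compare_outputs(mx_out, caffe_out)
print("max abs diff: %.2e, within 5 decimals: %s" % (diff, ok))
```

In the script above it could be called as compare_outputs(results[0], caffe_out) just before the assert.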

Problems encountered:

1. raise MXNetError(py_str(_LIB.MXGetLastError()))

mxnet.base.MXNetError: [10:51:40] src/ndarray/ndarray.cc:1805: Check failed: fi->Read(data) Invalid NDArray file format

Cause: the model file had been downloaded, but the download was incomplete.

2. ImportError: numpy.core.multiarray failed to import

Fix: update numpy to a newer version.

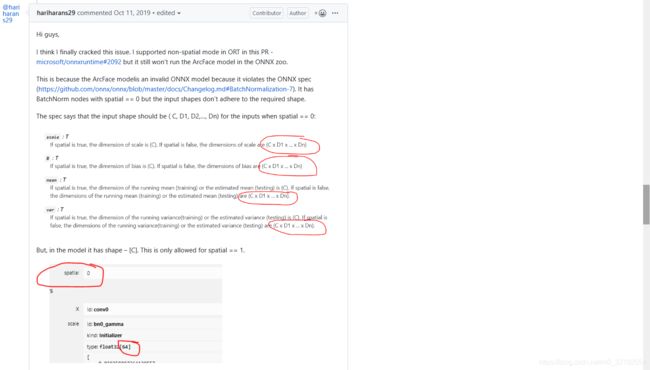

3. Error when simplifying the ONNX model exported from MXNet:

onnxruntime::BatchNorm<T>::BatchNorm(const onnxruntime::OpKernelInfo &) [with T = float] spatial == 1 was false. BatchNormalization kernel for CPU provider does not support non-spatial cases

The BN setting in MXNet's ONNX export code:

https://github.com/apache/incubator-mxnet/blob/745a41ca1a6d74a645911de8af46dece03db93ea/python/mxnet/contrib/onnx/mx2onnx/_op_translations.py#L357

```python
@mx_op.register("BatchNorm")
def convert_batchnorm(node, **kwargs):
    """Map MXNet's BatchNorm operator attributes to onnx's BatchNormalization operator
    and return the created node.
    """
    name, input_nodes, attrs = get_inputs(node, kwargs)

    momentum = float(attrs.get("momentum", 0.9))
    eps = float(attrs.get("eps", 0.001))

    bn_node = onnx.helper.make_node(
        "BatchNormalization",
        input_nodes,
        [name],
        name=name,
        epsilon=eps,
        momentum=momentum,
        # MXNet computes mean and variance per feature for batchnorm
        # Default for onnx is across all spatial features. So disabling the parameter.
        spatial=0
    )
    return [bn_node]
```

Cause: the MXNet exporter writes spatial=0, but onnxruntime's BatchNormalization kernel does not support spatial=0 and requires spatial=1, hence the error.
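For intuition on why switching spatial to 1 is harmless here: as I read the ONNX BatchNormalization spec before opset 9 (where the attribute was removed), spatial=1 means scale, B, mean and var have shape [C] and are shared across all spatial positions, while spatial=0 expects shape [C, H, W]. MXNet stores BN parameters per channel, shape [C], which is the spatial=1 layout. The NumPy sketch below (an illustration, not onnxruntime's kernel; batchnorm_inference is a hypothetical name) shows inference-mode BN with [C]-shaped parameters broadcast over N, H and W. The default eps of 1e-3 matches the exporter default shown above.

```python
import numpy as np


def batchnorm_inference(x, gamma, beta, mean, var, eps=1e-3):
    """Inference-mode BN on an NCHW tensor with per-channel [C] parameters."""
    shape = (1, x.shape[1], 1, 1)  # broadcast each [C] vector over N, H and W
    scale = gamma.reshape(shape) / np.sqrt(var.reshape(shape) + eps)
    return (x - mean.reshape(shape)) * scale + beta.reshape(shape)


rng = np.random.RandomState(0)
x = rng.randn(2, 3, 4, 4)                       # N=2, C=3, H=W=4
gamma, beta, mean = rng.randn(3), rng.randn(3), rng.randn(3)
var = rng.rand(3) + 0.5                         # keep variances positive
y = batchnorm_inference(x, gamma, beta, mean, var)
```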

Method 1: patch onnxruntime and build it from source.

onnxruntime has been changed to support this case:

https://github.com/microsoft/onnxruntime/pull/2092

The detailed changes (onnxruntime has to be rebuilt):

https://github.com/microsoft/onnxruntime/pull/2092/files/830dba84578cbe88b382559dd7ae55cffe147104

Method 2: the rationale for changing spatial from 0 to 1:

https://github.com/onnx/models/issues/156

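Method 3: change the attribute value in the model and save the model again (tested, it works).

With that, MXNet to ONNX works without problems, onnx_simplify.py turns the ONNX model into new.onnx, and new.onnx converts to Caffe without problems.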

Finally, an error in the MXNet test code:

```python
sym, arg_params, aux_params = onnx_mxnet.import_model(onnx_path)
```

This way of running the forward pass comes from the official tutorial:

http://mxnet.incubator.apache.org/api/python/docs/tutorials/packages/onnx/inference_on_onnx_model.html?highlight=onnx_mxnet%20import_model

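The following call raises the error below:

```python
net = gluon.nn.SymbolBlock(outputs=sym, inputs=mx.sym.var('data'))
```

ValueError: There are multiple outputs with name "flatten0_output"

The second-to-last layer is also reported as an output, even though the network really has only one output, the fully connected classification layer.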

Solution reference:

https://discuss.gluon.ai/t/topic/8178/2

Instead of the Gluon interface, use the more common Module API, modified as follows:

```python
from collections import namedtuple

import numpy as np
import mxnet as mx
import mxnet.contrib.onnx as onnx_mxnet
from mxnet import nd

onnx_path = "./new.onnx"
sym, arg_params, aux_params = onnx_mxnet.import_model(onnx_path)

# Obtain the input names of the model via the model metadata API.
model_metadata = onnx_mxnet.get_model_metadata(onnx_path)
print(model_metadata)
data_names = [inputs[0] for inputs in model_metadata.get('input_tensor_data')]
print("input variable:", data_names)  # 'input_tensor_data': [('data', (1, 3, 224, 224))], so the input is 'data'

ctx = mx.cpu()
# The Gluon SymbolBlock path is not used here: it raises the ValueError above.

# Graph inputs that are not parameters are the real data inputs.
data_names = [graph_input for graph_input in sym.list_inputs()
              if graph_input not in arg_params and graph_input not in aux_params]
print(data_names)  # ['data']

onnx_mod = mx.mod.Module(symbol=sym, data_names=data_names, context=ctx, label_names=None)
batch = nd.array(nd.random.randn(1, 3, 224, 224), ctx=ctx).astype(np.float32)  # NCHW
onnx_mod.bind(for_training=False, data_shapes=[(data_names[0], batch.shape)], label_shapes=None)
onnx_mod.set_params(arg_params=arg_params, aux_params=aux_params,
                    allow_missing=True, allow_extra=True)

Batch = namedtuple("Batch", ["data"])
onnx_mod.forward(Batch([batch]))
out = onnx_mod.get_outputs()
results = [o for o in out[0].asnumpy()]  # one output vector per sample
```

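The above works for ResNet-family models.

The ArcFace (InsightFace) model, however, does not: the ONNX conversion succeeds, but the resulting model cannot run in onnxruntime because the shapes are wrong:

[ShapeInferenceError] First input does not have rank 2

Cause: the slope of the PReLU operator is exported incorrectly. Converting MXNet's PReLU to ONNX involves handling its slope, and the official exporter code gets this wrong.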

Solutions:

1. https://github.com/microsoft/onnxruntime/issues/2045 is the onnxruntime GitHub discussion of this problem: the converted ONNX model itself is wrong (its shapes are wrong).

https://github.com/onnx/models/issues/91 discusses the PReLU and GEMM problems when converting ArcFace to ONNX.

To fix the ONNX PReLU problem, add the code from both of the following changes:

https://github.com/apache/incubator-mxnet/pull/13460/commits/f1a6df82a40d1d9e8be6f7c3f9f4dcfe75948bd6

"ONNX export: Add Flatten before Gemm" (adds a Flatten node):

https://github.com/apache/incubator-mxnet/pull/13356/files
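The Gemm side of the rank-2 error can be pictured like this: MXNet's FullyConnected flattens a 4-D input by default (its flatten parameter defaults to True), whereas ONNX Gemm only accepts a 2-D input, so an explicit Flatten node is needed in front of it. The NumPy sketch below mimics ONNX Flatten and a Gemm of the form A·Bᵀ + C; the function names and shapes are hypothetical.

```python
import numpy as np


def flatten(x, axis=1):
    """ONNX-style Flatten: collapse dims [0, axis) and [axis, rank) into a 2-D matrix."""
    outer = int(np.prod(x.shape[:axis]))
    return x.reshape(outer, -1)


def gemm(a, b, c, trans_b=True):
    """Gemm with alpha = beta = 1: Y = A @ B^T + C. A must be 2-D."""
    if a.ndim != 2:
        raise ValueError("Gemm expects a 2-D input, got rank %d" % a.ndim)
    return a @ (b.T if trans_b else b) + c


x = np.arange(72, dtype=np.float64).reshape(2, 4, 3, 3)  # (N, C, H, W)
w = np.ones((5, 36))                                      # (out_features, C*H*W)
b = np.zeros(5)
y = gemm(flatten(x), w, b)                                # works: input is (2, 36)
```

Calling gemm(x, w, b) on the 4-D tensor directly raises the ValueError, which is roughly the situation the rank-2 shape inference error describes.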

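2. ArcFace uses a BN-then-conv ordering, so its BN layers cannot be merged in the usual way. BN merging normally applies to the conv-then-BN ordering, where the BN parameters are folded into the preceding convolution.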
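For reference, this is the usual conv-then-BN folding. With s = gamma / sqrt(var + eps) per output channel, the folded weights are W·s and the folded bias is (b - mean)·s + beta. The NumPy sketch below (hypothetical helper names; a 1x1 convolution is used only to check the arithmetic) verifies that the folded conv matches conv followed by BN. In ArcFace the BN comes before the conv, so this particular folding does not apply directly.

```python
import numpy as np


def fold_bn_into_conv(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold an inference-mode BN that follows a conv into the conv.
    w: [C_out, C_in, kH, kW], b: [C_out]; BN parameters: [C_out]."""
    scale = gamma / np.sqrt(var + eps)          # one factor per output channel
    w_folded = w * scale.reshape(-1, 1, 1, 1)   # scale each output filter
    b_folded = (b - mean) * scale + beta
    return w_folded, b_folded


def conv1x1(x, w, b):
    """1x1 convolution on an NCHW input, enough to check the folding."""
    return np.einsum("nchw,oc->nohw", x, w[:, :, 0, 0]) + b.reshape(1, -1, 1, 1)


rng = np.random.RandomState(0)
x = rng.randn(2, 3, 4, 4)
w, b = rng.randn(5, 3, 1, 1), rng.randn(5)
gamma, beta, mean = rng.randn(5), rng.randn(5), rng.randn(5)
var = rng.rand(5) + 0.5
eps = 1e-5

bshape = (1, -1, 1, 1)
conv_then_bn = ((conv1x1(x, w, b) - mean.reshape(bshape))
                * (gamma / np.sqrt(var + eps)).reshape(bshape) + beta.reshape(bshape))
w_f, b_f = fold_bn_into_conv(w, b, gamma, beta, mean, var, eps)
folded = conv1x1(x, w_f, b_f)
```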

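3. Caffe's BN layer (in the NVCaffe build used here) supports 4-D inputs, but not 2-D ones.

So the ArcFace model fails, because its final fully connected embedding output is followed by a BN layer.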
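One workaround worth trying (an assumption, not something tested in this post) follows from the fact that inference-mode BN on an (N, C) tensor gives exactly the same numbers as BN on the same data reshaped to (N, C, 1, 1). The embedding could therefore be reshaped to 4-D before the BN layer, for example with a Reshape layer in the prototxt. The NumPy check below only demonstrates that equivalence. bn_inference is a hypothetical helper, and 512 is just an example embedding size.

```python
import numpy as np


def bn_inference(x, gamma, beta, mean, var, eps=1e-5):
    """Inference BN over axis 1; accepts (N, C) as well as (N, C, H, W) inputs."""
    shape = (1, -1) + (1,) * (x.ndim - 2)   # (1, C) or (1, C, 1, 1)
    s = (gamma / np.sqrt(var + eps)).reshape(shape)
    return (x - mean.reshape(shape)) * s + beta.reshape(shape)


rng = np.random.RandomState(1)
emb = rng.randn(4, 512)                      # FC output: (N, C)
g, bt, m = rng.randn(512), rng.randn(512), rng.randn(512)
v = rng.rand(512) + 0.5

y2 = bn_inference(emb, g, bt, m, v)                        # 2-D BN
y4 = bn_inference(emb.reshape(4, 512, 1, 1), g, bt, m, v)  # same data as 4-D
```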

Key points:

1. The model exported directly to ONNX gives results that match the MXNet model:

```python
import numpy as np
import mxnet.contrib.onnx as onnx_mxnet

input_shape = (1, 3, 112, 112)
sym = './model-symbol.json'
params = './model-0000.params'
onnx_file = "./mynet.onnx"

print("************************")
# Call the export API; it returns the path of the converted ONNX model
converted_model_path = onnx_mxnet.export_model(sym,
                                               params,
                                               [input_shape],
                                               np.float32,
                                               onnx_file,
                                               verbose=True)  # print node information; the output node is softmax
```

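2. However, that model does not run correctly in onnxruntime. Code has to be added that reshapes the PReLU slope to 4-D and turns the Gemm input into 2-D, and the BN layers' spatial value has to be set to 1 (MXNet 1.4.0). After making the fixes below, run the export code above and the spatial fix below again to regenerate the ONNX model; the results are then correct and the model runs in onnxruntime.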

```python
import onnx

model = onnx.load(r'mynet.onnx')
for node in model.graph.node:
    if node.op_type == "BatchNormalization":
        for attr in node.attribute:
            if attr.name == "spatial":  # change 0 to 1
                attr.i = 1  # needed by onnxruntime; does not affect the output
onnx.save(model, r'mynet.onnx')
```

To fix the ONNX PReLU problem, add the code from both of the following changes:

PReLU fix: the slope shape changes from [64] to [1, 64, 1, 1]:

https://github.com/apache/incubator-mxnet/pull/13460/commits/f1a6df82a40d1d9e8be6f7c3f9f4dcfe75948bd6

"ONNX export: Add Flatten before Gemm" (adds a Flatten node):

https://github.com/apache/incubator-mxnet/pull/13356/files
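Why the slope shape matters: ONNX PRelu uses unidirectional broadcasting, which, like NumPy, aligns shapes from the trailing dimension. A [64] slope applied to an NCHW tensor is therefore matched against W, not C. Reshaping it to [1, 64, 1, 1] puts the 64 values on the channel axis. The NumPy sketch below shows both cases; the 56x56 feature-map size and the slope values are arbitrary.

```python
import numpy as np


def prelu(x, slope):
    """PReLU: x where x >= 0, slope * x elsewhere; slope broadcasts against x."""
    return np.where(x >= 0, x, slope * x)


rng = np.random.RandomState(0)
x = rng.randn(1, 64, 56, 56)          # NCHW feature map
slope = np.linspace(0.1, 0.3, 64)     # one learned slope per channel

y = prelu(x, slope.reshape(1, 64, 1, 1))   # [64] -> [1, 64, 1, 1]: per-channel, correct

try:
    prelu(x, slope)                         # [64] is aligned with W (= 56): mismatch
except ValueError as err:
    print("[64] slope cannot be broadcast:", err)
```

If W happened to equal 64, the unreshaped slope would broadcast without any error but be applied per column instead of per channel, which is harder to notice than a shape error.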

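3. For ArcFace ONNX to Caffe, delete the first two layers and change the input name of the first convolution.

The first two layers, Sub and Mul, are the MXNet network's input normalization (x - 127.5) * 0.0078125.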

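```python
# Added inside onnx2caffe's loop over the graph nodes
# (written here as `for node in graph.nodes:`).
for node in graph.nodes:
    if node.op_type == u"Sub" and node.name == u'_minusscalar0':  # this ArcFace model
        print("arcface delete Sub")
        print(node)
        continue
    if node.op_type == u"Mul" and node.name == u"_mulscalar0":  # this ArcFace model
        print("arcface delete Mul")
        print(node)
        continue
    if node.op_type == u"Conv" and node.name == u'conv0':  # this ArcFace model
        print("fix onnx conv0 input name")
        node.inputs[0] = "data"  # conv0 read the deleted Mul's output; point it at the network input
        print(node.inputs[0])
    # ... the rest of the conversion for this node continues as before ...
```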
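With the Sub and Mul nodes removed, the Caffe model no longer normalizes its input, so the same (x - 127.5) * 0.0078125 (0.0078125 = 1/128) has to be applied before calling forward. A minimal sketch, assuming a 112x112 HWC uint8 image whose channel order already matches what the MXNet model expects; preprocess is a hypothetical helper.

```python
import numpy as np

MEAN = 127.5
SCALE = 0.0078125  # = 1 / 128


def preprocess(img_hwc_uint8):
    """Redo the removed Sub/Mul nodes outside the Caffe model:
    HWC uint8 image -> 1xCxHxW float32 blob, (x - 127.5) * 0.0078125."""
    x = img_hwc_uint8.astype(np.float32)
    x = (x - MEAN) * SCALE
    return x.transpose(2, 0, 1)[np.newaxis, ...]  # HWC -> NCHW with N = 1
```

Pixel values 0 and 255 map to -0.99609375 and 0.99609375, so the blob fed to Caffe lies in roughly [-1, 1], the same range the deleted nodes produced.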

4. Official test code for the ONNX face recognition (ArcFace) model:

https://github.com/onnx/models/blob/master/vision/body_analysis/arcface/arcface_inference.ipynb
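Besides the element-wise 5-decimal check, a convenient sanity check for an embedding model is the cosine similarity between the MXNet and Caffe embeddings of the same input, which should be essentially 1. A small sketch with hypothetical helper names (not taken from the notebook linked above):

```python
import numpy as np


def l2_normalize(v, eps=1e-12):
    """Flatten v and scale it to unit L2 norm (eps guards against a zero vector)."""
    v = np.asarray(v, dtype=np.float64).ravel()
    return v / max(np.linalg.norm(v), eps)


def cosine_similarity(a, b):
    """Cosine similarity of two embedding vectors, in [-1, 1]."""
    return float(np.dot(l2_normalize(a), l2_normalize(b)))
```

For example, cosine_similarity(results[0], caffe_out) with the outputs from the test script.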

5. The difference between ReLU, PReLU and LeakyReLU in Caffe:

https://blog.csdn.net/cham_3/article/details/56049205

LeakyReLU: negative_slope is a fixed small constant.

PReLU: the parameters a_i are a set of learned values, not fixed constants.


So the parameters of the ONNX PReLU layers must be copied into the .caffemodel.

ReLU has no parameters; it is just an elementwise computation.
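The three activations side by side, as a NumPy sketch. In Caffe, LeakyReLU is the ReLU layer with a nonzero negative_slope. The function names here are hypothetical, and the NCHW layout with a [C] slope follows the PReLU discussion above.

```python
import numpy as np


def relu(x):
    """ReLU: no parameters at all."""
    return np.maximum(x, 0)


def leaky_relu(x, negative_slope):
    """LeakyReLU: one fixed scalar slope for negative inputs."""
    return np.where(x > 0, x, negative_slope * x)


def prelu(x, a):
    """PReLU: one learned slope per channel; a has shape [C], x is NCHW.
    These a values are the weights that must be carried over to the caffemodel."""
    return np.where(x > 0, x, a.reshape(1, -1, 1, 1) * x)
```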