KNN算法小结

前言:文章内容参考了以下书籍:【机器学习实战】、【深入浅出Python机器学习】,主要是对KNN算法的实现进行总结归纳;

使用编程语言:python3;

目录

一、kNN代码实现

1、classify0函数实现KNN算法(对应程序清单2-1)

2、file2matrix函数转换文本记录(对应程序清单2-2)

3、autoNorm函数归一化特征值(对应程序清单2-3)

4、datingClassTest函数(对应程序清单2-4)

5、classifyPerson函数(对应程序清单2-5)

6、handwritingClassTest函数(对应程序清单2-6)

二、KNN算法运用

1、使用范例

2、KNN实现多元分类

3、KNN用于回归分析

4、KNN用于酒的分类

5、KNN识别手写数字

三、KNN小结

附录

一、kNN代码实现

1、classify0函数实现KNN算法(对应程序清单2-1)

import operator

from numpy import *

# inX:待分类数组 dataSet:训练集 labels:测试集 k:邻居数量

def classify0(inX,dataSet,labels,k):

# 获取训练集行数

dataSetSize=dataSet.shape[0]

# tile(X,(m,n)) 将数组X转为m行n列矩阵

diffMat=tile(inX,(dataSetSize,1))-dataSet

sqDiffMat=diffMat**2

# axis=1使sum函数作用于矩阵每一行

sqDistances=sqDiffMat.sum(axis=1)

distances=sqDistances**0.5

# argsort 将数组从小到大排列并返回数组元素对应的索引

sortedDistIndicies=distances.argsort()

classCount={}

# classCount.get(voteIlabel,0) get:返回字典key对应的value值,没有该key时返回0

# 该循环构建了一个字典:key值为邻居的分类标签,value值为标签对应的数量

for i in range(k):

voteIlabel=labels[sortedDistIndicies[i]]

classCount[voteIlabel]=classCount.get(voteIlabel,0)+1

# items函数返回列表[(key1,value1),(key2,value2),...]

# sorted(iterable,cmp=None,key=None,reverse=False) key为比较的元素,reverse=True 降序

# operator.itemgetter(1) 获取(key,value)第二个域,即value值

# sortedClassCount = sorted(classCount.items(), key=lambda a: a[1], reverse=True)

sortedClassCount=sorted(classCount.items(),key=operator.itemgetter(1),reverse=True)

return sortedClassCount[0][0]classify0函数计算输入数组与训练集-矩阵每一行的距离,将距离从小到大排序,即可知道距离最近k个邻居的索引和标签;创建一个字典存储邻居标签的个数:key值为标签,value值为标签数量;

将字典转化为存储(key,value)值的列表,调用内置函数sorted(),通过参数key指定用value值进行排序,参数reverse指定排序为降序;函数最后返回有序列表第一个(key,value)中的key值,即k个邻居中数量最多的标签;

因次,classify0函数实现了KNN算法;

注意:

- classCount.iteritems()是python2用法,python3中需使用classCount.items();

- 函数调用了模块operator,若不想使用该模块可将代码改为:

sortedClassCount = sorted(classCount.items(), key=lambda a: a[1], reverse=True)2、file2matrix函数转换文本记录(对应程序清单2-2)

def file2matrix(filename):

fr=open(filename)

# readlines 读取文件所有行,并返回列表['40920\t8.326976\t0.953952\t3\n','14488\t7.153469\t1.673904\t2\n',...]

arrayOLines=fr.readlines()

numberOfLines=len(arrayOLines)

returnMat=zeros((numberOfLines,3))

classLabelVector=[]

index=0

for line in arrayOLines:

# 文件每一行去除回车符\n

line=line.strip()

# 文件每一行根据tab字符:\t 分割,返回列表

listFromLine=line.split('\t')

returnMat[index,:]=listFromLine[0:3]

# 不使用int会将元素当做字符串处理

classLabelVector.append(int(listFromLine[-1]))

index+=1

return returnMat,classLabelVectorfile2matrix函数读取文件前3列作为训练集,最后一列作为测试集;

注意点:

classLabelVector.append(int(listFromLine[-1]))int函数将二维列表的最后一列强制转换为数值,若标签对字符串类型,此处应去掉int函数;

import kNN

import imp

imp.reload(kNN)调用reload函数应事先引用模块imp及加载kNN模块;

from numpy import array

import matplotlib.pyplot as plt

fig=plt.figure()

ax=fig.add_subplot(111)

# ax.scatter(datingDataMat[:,1],datingLabels[:,2])

# 二维列表没有切片,使用数组切片会报错:TypeError: list indices must be integers or slices, not tuple

ax.scatter(array([i[1] for i in datingDataMat]),array([i[2] for i in datingDataMat]))

plt.show()在2.2.2创建散点图时会报错:TypeError: list indices must be integers or slices, not tuple;缘由是二维列表没有切片,采用以下片段之一代替

-

[i[1] for i in datingDataMat]

-

array([i[1] for i in datingDataMat])

3、autoNorm函数归一化特征值(对应程序清单2-3)

def autoNorm(dataSet):

# 参数0求每列最小/大值

minVals=dataSet.min(0)

maxVals=dataSet.max(0)

ranges=maxVals-minVals

normDataSet=zeros(shape(dataSet))

m=dataSet.shape[0]

normDataSet=dataSet-tile(minVals,(m,1))

normDataSet=normDataSet/tile(ranges,(m,1))

return normDataSet,ranges,minValsautoNorm函数将训练集[0,1]标准化,数据压缩到0-1之间;对训练集也可使用Z-Score规范化,标准化公式如下:

经过标准化处理后的数据符合均值为0,方差为1的正态分布;

from sklearn import preprocessing

ss=preprocessing.StandardScaler()

x_train=ss.fit_transform(dataSet)4、datingClassTest函数(对应程序清单2-4)

def datingClassTest():

hoRatio = 0.50 #hold out 10%

datingDataMat,datingLabels = file2matrix('G:\Python\PyProject_3.7.7_001\常用算法总结\KNN算法\datingTestSet2.txt') #load data setfrom file

normMat, ranges, minVals = autoNorm(datingDataMat)

m = normMat.shape[0]

numTestVecs = int(m*hoRatio)

errorCount = 0.0

for i in range(numTestVecs):

classifierResult = classify0(normMat[i,:],normMat[numTestVecs:m,:],datingLabels[numTestVecs:m],3)

print("the classifier came back with: %d, the real answer is: %d" % (classifierResult, datingLabels[i]))

if (classifierResult != datingLabels[i]): errorCount += 1.0

print("the total error rate is: %f" % (errorCount/float(numTestVecs)))注意,该函数调用了file2matrix函数,若分类标签为字符串,应修改代码去掉int,此处读取的是datingTestSet2文件,原文读取的是datingTestSet文件;

5、classifyPerson函数(对应程序清单2-5)

def classifyPerson():

resultList=['not at all','in small doses','in large doses']

percentTats=float(input("percentage of time spent playing video games?"))

ffMiles=float(input("frequent flier miles earned per year?"))

iceCream=float(input("liters of ice cream consumed per year?"))

datingDataMat,datingLabels=file2matrix('G:\Python\PyProject_3.7.7_001\常用算法总结\KNN算法\datingTestSet2.txt')

normMat,ranges,minVals=autoNorm(datingDataMat)

inArr=array([ffMiles,percentTats,iceCream])

classifierResult=classify0((inArr-minVals)/ranges,normMat,datingLabels,3)

print("You will probably like this person:",resultList[classifierResult-1])注意,raw_input()是python2用法,此处使用input函数,该函数将输入值转为字符串,故使用float强制转换;

6、handwritingClassTest函数(对应程序清单2-6)

def img2vectory(filename):

returnVect=zeros((1,1024))

fr=open(filename)

for i in range(32):

lineStr=fr.readline()

for j in range(32):

returnVect[0,32*i+j]=int(lineStr[j])

return returnVect

def handwritingClassTest():

hwLabels=[]

trainingFileList=listdir('G:\\Python\\PyProject_3.7.7_001\\常用算法总结\\KNN算法\\trainingDigits')

m=len(trainingFileList)

trainingMat=zeros((m,1024))

for i in range(m):

fileNameStr=trainingFileList[i]

fileStr=fileNameStr.split('.')[0]

classNumstr=int(fileStr.split('_')[0])

# 从文件名获取数字分类标签

hwLabels.append(classNumstr)

trainingMat[i,:]=img2vectory('G:/Python/PyProject_3.7.7_001/常用算法总结/KNN算法/trainingDigits/%s' % fileNameStr)

testFileList=listdir('G:\\Python\\PyProject_3.7.7_001\\常用算法总结\\KNN算法\\testDigits')

errorCount=0.0

mTest=len(testFileList)

for i in range(mTest):

fileNameStr=testFileList[i]

fileStr=fileNameStr.split('.')[0]

classNumstr=int(fileStr.split('_')[0])

vectorUnderTest=img2vectory('G:/Python/PyProject_3.7.7_001/常用算法总结/KNN算法/testDigits/%s' % fileNameStr)

classifierResult=classify0(vectorUnderTest,trainingMat,hwLabels,3)

print("the classifier came back with: %d ,the real answer is: %d" % (classifierResult,classNumstr) )

if (classifierResult!=classNumstr):

errorCount+=1

print("\nThe total number of errors is: %d" % errorCount)

print("\nThe total error rate is: %f" % (errorCount/float(mTest)))注意,文件名包含特殊字符0,读取时应加转义字符,如:

img2vectory('G:/Python/PyProject_3.7.7_001/常用算法总结/KNN算法/trainingDigits/%s' % fileNameStr)

img2vectory('G:/Python/PyProject_3.7.7_001/常用算法总结/KNN算法/testDigits/%s' % fileNameStr)二、KNN算法运用

1、使用范例

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

knn = KNeighborsClassifier()

# 训练集:x_train 对应标签:y_train

knn.fit(x_train,y_train)

# 测试集:x_test,返回预测结果predict_y

y_predict=knn.predict(x_test)

print("KNN准确率: %.4lf" % accuracy_score(y_test, y_predict))

print("KNN准确率:{:.4f}".format(knn.score(x_test,y_test)))其中,KNeighborsClassifier()主要参数如下:

- n_neighbors:邻居数量,通常使用默认值5;

- weights:邻居权重,weights=uniform 代表所有邻居的权重相同,weights=distance 代表权重是距离的倒数

- algorithm:规定计算邻居的方法;algorithm=auto,根据数据的情况自动选择适合的算法,默认情况选择 auto;algorithm=kd_tree KD树,是多维空间的数据结构,方便对关键数据进行检索,适用于维度少(不超过 20),维数大于20之后效率会下降;algorithm=ball_tree 球树,适用于维度大的情况;algorithm=brute 暴力搜索,采用线性扫描,当训练集大的时候,效率很低

- leaf_size:构造KD树或球树时的叶子数,默认是30,调整leaf_size会影响到树的构造和搜索速度

2、KNN实现多元分类

from sklearn.datasets import make_blobs

from sklearn.neighbors import KNeighborsClassifier

import matplotlib.pyplot as plt

import numpy as np

data2=make_blobs(n_samples=500,centers=5,random_state=8)

x2,y2=data2

plt.scatter(x2[:,0],x2[:,1],c=y2,cmap=plt.cm.spring,edgecolors='k')

plt.show()

clf=KNeighborsClassifier()

clf.fit(x2,y2)

x_min,x_max=x2[:,0].min()-1,x2[:,0].max()+1

y_min,y_max=x2[:,1].min()-1,x2[:,1].max()+1

# meshgrid(X1,X2,indexing='xy')默认参数'xy'返回(len(X1),len(X2))矩阵形状,'ij'返回(len(X2),len(X1))

xx,yy=np.meshgrid(np.arange(x_min,x_max,.02),np.arange(y_min,y_max,.02))

# ravel返回输入元素的一维数组

# np.r_是按列连接两个矩阵,就是把两矩阵上下相加,要求列数相等

# np.c_是按行连接两个矩阵,就是把两矩阵左右相加,要求行数相等

z=clf.predict(np.c_[xx.ravel(),yy.ravel()])

z=z.reshape(xx.shape)

# pcolormesh 用于生成分类图

plt.pcolormesh(xx,yy,z)

plt.scatter(x2[:,0],x2[:,1],c=y2,cmap=plt.cm.spring,edgecolors='k')

plt.xlim(xx.min(),xx.max())

plt.ylim(yy.min(),yy.max())

plt.title("Classifier:KNN")

plt.show()

print("模型正确率:{:.2f}".format(clf.score(x2,y2)))运行代码将会得到下图所示结果,并可知模型正确率为0.96;

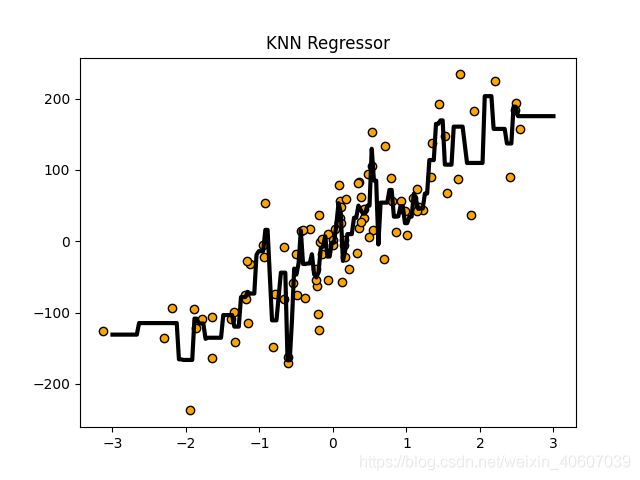

3、KNN用于回归分析

import numpy as np

from sklearn.neighbors import KNeighborsRegressor

from sklearn.datasets import make_regression

# n_samples:样本数 n_features:特征数(自变量个数)n_informative:参与建模特征数

x,y=make_regression(n_features=1,n_informative=1,noise=50,random_state=8)

plt.scatter(x,y,c='orange',edgecolors='k')

plt.show()

# n_neighbors 默认为5,为提高模型准确率调整为2

reg=KNeighborsRegressor(n_neighbors=2)

reg.fit(x,y)

z=np.linspace(-3,3,200).reshape(-1,1)

plt.scatter(x,y,c='orange',edgecolors='k')

plt.plot(z,reg.predict(z),c='k',linewidth=3)

plt.title("KNN Regressor")

plt.show()

print("模型正确率:{:.2f}".format(reg.score(x,y)))运行代码将会得到下图所示结果,并可知模型正确率为0.86;

4、KNN用于酒的分类

from sklearn.datasets import load_wine

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

# wine_dataset为Bunch对象,wine_dataset.keys() wine_dataset.values()

wine_dataset=load_wine()

# random_state:随机数种子,每次都填1,其他参数一样的情况下得到的随机数组是一样的,填0或不填,每次都会不一样

# test_size:样本占比,是整数的话为样本数量

x_train,x_test,y_train,y_test=train_test_split(wine_dataset['data'],wine_dataset['target'],random_state=0)

knn=KNeighborsClassifier(n_neighbors=1)

knn.fit(x_train,y_train)

print("测试集得分:{:.2f}".format(knn.score(x_test,y_test)))

print("测试集预测标签:%s" % wine_dataset['target_names'][knn.predict(x_test)])运行代码得到评分0.76及预测的测试集标签;

5、KNN识别手写数字

from sklearn.neighbors import KNeighborsClassifier

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import matplotlib.pyplot as plt

from sklearn import preprocessing

digits = load_digits()

data=digits.data

# 查看第一幅图像

print(digits.images[0])

# 第一幅图像代表的数字含义

print(digits.target[0])

# 将第一幅图像显示出来

plt.gray()

plt.imshow(digits.images[0])

plt.show()

# 分割数据,将25%的数据作为测试集,test_size:样本占比,

train_x,test_x,train_y,test_y=train_test_split(data,digits.target,test_size=0.25,random_state=33)

# 采用Z-Score规范化

ss=preprocessing.StandardScaler()

train_ss_x=ss.fit_transform(train_x)

test_ss_x=ss.transform(test_x)

knn = KNeighborsClassifier()

knn.fit(train_ss_x,train_y)

predict_y=knn.predict(test_ss_x)

print("KNN准确率: %.4lf" % accuracy_score(test_y, predict_y))

print("KNN准确率:{:.4f}".format(knn.score(test_ss_x,test_y)))运行代码可知模型准确率为0.9756;

三、KNN小结

- 训练集很大时,KNN算法将占用大量存储空间;

- KNN需对数据集中每个数据计算距离值,耗时久;

- 无法给出任何数据的基础结构信息,因此无法知晓平均实例样本和典型实例样本具有什么特征;

附录

维基百科:https://zh.wikipedia.org/wiki/K-%E8%BF%91%E9%82%BB%E7%AE%97%E6%B3%95

源码下载1:www.manning.com/MachineLearninginAction

源码下载2:https://sctrack.sendcloud.net/track/click/eyJuZXRlYXNlIjogImZhbHNlIiwgIm1haWxsaXN0X2lkIjogMCwgInRhc2tfaWQiOiAiIiwgImVtYWlsX2lkIjogIjE2MjQ4MDIwNjk4MjlfNzUzNTlfMTUzOThfOTgzMy5zYy0xMF85XzE3OV8xOTctaW5ib3VuZDAkMTc2NzMzNjMyN0BxcS5jb20iLCAic2lnbiI6ICJhN2E3ODg2MWRiNGVlMTdhYzFkMDI2NWEwMDUxMWFiNyIsICJ1c2VyX2hlYWRlcnMiOiB7fSwgImxhYmVsIjogMCwgInRyYWNrX2RvbWFpbiI6ICJzY3RyYWNrLnNlbmRjbG91ZC5uZXQiLCAicmVhbF90eXBlIjogIjEiLCAibGluayI6ICJodHRwcyUzQS8vd3d3LndxeXVucGFuLmNvbS9ib29rUXIvZmlsZS9kb3dubG9hZCUzRnJlc291cmNlSWQlM0Q1MTc1MDA2MDE3IiwgIm91dF9pcCI6ICIxMDYuNzUuMTYuMTA0IiwgImNvbnRlbnRfdHlwZSI6ICIxIiwgInVzZXJfaWQiOiA3NTM1OSwgIm92ZXJzZWFzIjogImZhbHNlIiwgImNhdGVnb3J5X2lkIjogMTM2MzA0fQ==.html