Two layer net and a walkthrough of the cs231n assignment

two layer net

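To make my cs231n study more efficient and easier to review, I am recording the learning process here, both for reference and to keep myself accountable.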

Contents

- two layer net

  - How it works

  - Formulas

- The two layer net exercise in Assignment 1

  - two_layer_net.ipynb(1)

  - neural_net.py

  - two_layer_net.ipynb(2)

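How it works

From this section on we start working with neural networks. This exercise uses a two-layer network (the input layer is not counted), and we need to implement the forward-pass and backward-pass algorithms.

Formulas

Deriving the formulas is tedious, but it is still worth sitting down and working through them once yourself; it makes the code much easier to understand.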

This exercise uses the ReLU activation and the softmax loss. Their formulas are:

ReLU:

$$f(x)=\max(0,x)$$

The multiclass SVM hinge loss from the earlier exercise is built from the same $\max(0,\cdot)$ operation:

$$L_i=\sum_{j\neq y_i}\max(0,s_j-s_{y_i}+\Delta)$$

softmax:

$$L_i=-\log\frac{e^{s_{y_i}}}{\sum_{j=1}^{C}e^{s_j}}=-\left(s_{y_i}-\log\sum_{j=1}^{C}e^{s_j}\right)=-s_{y_i}+\log\sum_{j=1}^{C}e^{s_j}$$

If this is not clear yet, that is fine: read the code first to build intuition, then come back to the derivation.
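To make the two formulas concrete, here is a minimal NumPy sketch of ReLU and the softmax loss. The function names `relu` and `softmax_loss` are illustrative choices for this example, not part of the assignment code; the max-subtraction step is the usual trick for numerical stability and does not change the value of the loss.

```python
import numpy as np

def relu(x):
    """Element-wise ReLU: max(0, x)."""
    return np.maximum(0, x)

def softmax_loss(scores, y):
    """Mean softmax loss for scores of shape (N, C) and integer labels y of shape (N,)."""
    # Subtracting the row-wise maximum leaves the loss unchanged
    # but keeps exp() from overflowing.
    shifted = scores - scores.max(axis=1, keepdims=True)
    # L_i = -s_{y_i} + log(sum_j exp(s_j)), evaluated on the shifted scores.
    log_sum_exp = np.log(np.exp(shifted).sum(axis=1))
    per_sample = -shifted[np.arange(len(y)), y] + log_sum_exp
    return per_sample.mean()

print(relu(np.array([-2.0, 0.0, 3.0])))

# When all scores are equal, every class has probability 1/C,
# so the loss equals log(C).
print(softmax_loss(np.zeros((4, 3)), np.array([0, 1, 2, 0])), np.log(3))
```

The second print is a handy sanity check: a freshly initialized classifier with near-zero scores should report a loss close to log(C).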

The two layer net exercise in Assignment 1

two_layer_net.ipynb(1)

Implementing a Neural Network

In this exercise we will develop a neural network with fully-connected layers to perform classification, and test it out on the CIFAR-10 dataset.

# A bit of setup

import numpy as np

import matplotlib.pyplot as plt

from cs231n.classifiers.neural_net import TwoLayerNet

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

# for auto-reloading external modules

# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

%load_ext autoreload

%autoreload 2

def rel_error(x, y):

    """ returns relative error """

    return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))

↑ As before, the first step sets the plotting parameters and loads the modules and libraries.

We will use the class TwoLayerNet in the file cs231n/classifiers/neural_net.py to represent instances of our network. The network parameters are stored in the instance variable self.params where keys are string parameter names and values are numpy arrays. Below, we initialize toy data and a toy model that we will use to develop your implementation.

After the next cell, which builds the toy model and toy data used for checking, we move on to editing neural_net.py.

# Create a small net and some toy data to check your implementations.

# Note that we set the random seed for repeatable experiments.

input_size = 4

hidden_size = 10

num_classes = 3

num_inputs = 5

def init_toy_model():

    np.random.seed(0)

    return TwoLayerNet(input_size, hidden_size, num_classes, std=1e-1)

def init_toy_data():

    np.random.seed(1)

    X = 10 * np.random.randn(num_inputs, input_size)

    y = np.array([0, 1, 2, 2, 1])

    return X, y

net = init_toy_model()

X, y = init_toy_data()

neural_net.py

Forward pass: compute scores

Open the file cs231n/classifiers/neural_net.py and look at the method TwoLayerNet.loss. This function is very similar to the loss functions you have written for the SVM and Softmax exercises: It takes the data and weights and computes the class scores, the loss, and the gradients on the parameters.

Implement the first part of the forward pass which uses the weights and biases to compute the scores for all inputs.

from __future__ import print_function

from builtins import range

from builtins import object

import numpy as np

import matplotlib.pyplot as plt

from past.builtins import xrange

class TwoLayerNet(object):

    """

    A two-layer fully-connected neural network. The net has an input dimension of

    D, a hidden layer dimension of H, and performs classification over C classes.

    We train the network with a softmax loss function and L2 regularization on the

    weight matrices. The network uses a ReLU nonlinearity after the first fully

    connected layer.

    In other words, the network has the following architecture:

    input - fully connected layer - ReLU - fully connected layer - softmax

    The outputs of the second fully-connected layer are the scores for each class.

    """

    def __init__(self, input_size, hidden_size, output_size, std=1e-4):  # sizes of the input, hidden, and output layers

        """

        Initialize the model. Weights are initialized to small random values and

        biases are initialized to zero. Weights and biases are stored in the

        variable self.params, which is a dictionary with the following keys:

        W1: First layer weights; has shape (D, H)

        b1: First layer biases; has shape (H,)

        W2: Second layer weights; has shape (H, C)

        b2: Second layer biases; has shape (C,)

        Inputs:

        - input_size: The dimension D of the input data.

        - hidden_size: The number of neurons H in the hidden layer.

        - output_size: The number of classes C.

        """

        # initialize the weights and biases

        self.params = {}

        self.params['W1'] = std * np.random.randn(input_size, hidden_size)

        self.params['b1'] = np.zeros(hidden_size)

        self.params['W2'] = std * np.random.randn(hidden_size, output_size)

        self.params['b2'] = np.zeros(output_size)

    def loss(self, X, y=None, reg=0.0):  # compute the loss and the gradients

        """

        Compute the loss and gradients for a two layer fully connected neural

        network.

        Inputs:

        - X: Input data of shape (N, D). Each X[i] is a training sample.

        - y: Vector of training labels. y[i] is the label for X[i], and each y[i] is

          an integer in the range 0 <= y[i] < C. This parameter is optional; if it

          is not passed then we only return scores, and if it is passed then we

          instead return the loss and gradients.

        """

        # Unpack variables from the params dictionary

        W1, b1 = self.params['W1'], self.params['b1']

        W2, b2 = self.params['W2'], self.params['b2']

        N, D = X.shape

        # Compute the forward pass

        scores = None

        #############################################################################

        # TODO: Perform the forward pass, computing the class scores for the input. #

        # Store the result in the scores variable, which should be an array of #

        # shape (N, C). #

        #############################################################################

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        h_output = np.maximum(0, X.dot(W1) + b1)  # hidden layer after ReLU: (N,D) x (D,H) = (N,H)

        scores = h_output.dot(W2) + b2  # class scores: (N,H) x (H,C) = (N,C)

        pass

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        # If the targets are not given then jump out, we're done

        if y is None:

            return scores

        # Compute the loss

        loss = None

        #############################################################################

        # TODO: Finish the forward pass, and compute the loss. This should include #

        # both the data loss and L2 regularization for W1 and W2. Store the result #

        # in the variable loss, which should be a scalar. Use the Softmax #

        # classifier loss. #

        #############################################################################

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        shift_scores = scores - np.max(scores, axis = 1).reshape(-1,1)  # subtract each row's max score to avoid numeric overflow

        softmax_output = np.exp(shift_scores)/np.sum(np.exp(shift_scores), axis = 1).reshape(-1,1)  # softmax formula

        loss = -np.sum(np.log(softmax_output[range(N), list(y)]))

        loss /= N

        loss += 0.5* reg * (np.sum(W1 * W1) + np.sum(W2 * W2))

        pass

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        # Backward pass: compute gradients

        grads = {}

        #############################################################################

        # TODO: Compute the backward pass, computing the derivatives of the weights #

        # and biases. Store the results in the grads dictionary. For example, #

        # grads['W1'] should store the gradient on W1, and be a matrix of same size #

        #############################################################################

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        dscores = softmax_output.copy()  # the gradient w.r.t. the scores starts from the softmax output

        dscores[range(N), list(y)] -= 1  # subtract 1 at the correct-class index y[i] in each row

        dscores /= N  # average over the batch; these three lines differentiate the softmax loss

        grads['W2'] = h_output.T.dot(dscores) + reg * W2

        grads['b2'] = np.sum(dscores, axis = 0)

        dh = dscores.dot(W2.T)

        dh_ReLu = (h_output > 0) * dh  # ReLU passes gradient only where the hidden unit was active

        grads['W1'] = X.T.dot(dh_ReLu) + reg * W1

        grads['b1'] = np.sum(dh_ReLu, axis = 0)

        pass

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        return loss, grads

    def train(self, X, y, X_val, y_val,

              learning_rate=1e-3, learning_rate_decay=0.95,

              reg=5e-6, num_iters=100,

              batch_size=200, verbose=False):

        """

        Train this neural network using stochastic gradient descent.

        Inputs:

        - X: A numpy array of shape (N, D) giving training data.

        - y: A numpy array of shape (N,) giving training labels; y[i] = c means that

          X[i] has label c, where 0 <= c < C.

        - X_val: A numpy array of shape (N_val, D) giving validation data.

        - y_val: A numpy array of shape (N_val,) giving validation labels.

        - learning_rate: Scalar giving learning rate for optimization.

        - learning_rate_decay: Scalar giving factor used to decay the learning rate

          after each epoch.

        - reg: Scalar giving regularization strength.

        - num_iters: Number of steps to take when optimizing.

        - batch_size: Number of training examples to use per step.

        - verbose: boolean; if true print progress during optimization.

        """

        num_train = X.shape[0]

        iterations_per_epoch = max(num_train / batch_size, 1)

        # Use SGD to optimize the parameters in self.model

        loss_history = []

        train_acc_history = []

        val_acc_history = []

        for it in range(num_iters):

            X_batch = None

            y_batch = None

            #########################################################################

            # TODO: Create a random minibatch of training data and labels, storing #

            # them in X_batch and y_batch respectively. #

            #########################################################################

            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

            idx = np.random.choice(num_train, batch_size, replace=True)

            X_batch = X[idx]

            y_batch = y[idx]

            pass

            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

            # Compute loss and gradients using the current minibatch

            loss, grads = self.loss(X_batch, y=y_batch, reg=reg)

            loss_history.append(loss)

            #########################################################################

            # TODO: Use the gradients in the grads dictionary to update the #

            # parameters of the network (stored in the dictionary self.params) #

            # using stochastic gradient descent. You'll need to use the gradients #

            # stored in the grads dictionary defined above. #

            #########################################################################

            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

            self.params['W2'] += - learning_rate * grads['W2']

            self.params['b2'] += - learning_rate * grads['b2']

            self.params['W1'] += - learning_rate * grads['W1']

            self.params['b1'] += - learning_rate * grads['b1']

            pass

            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

            if verbose and it % 100 == 0:

                print('iteration %d / %d: loss %f' % (it, num_iters, loss))

            # Every epoch, check train and val accuracy and decay learning rate.

            if it % iterations_per_epoch == 0:

                # Check accuracy

                train_acc = (self.predict(X_batch) == y_batch).mean()

                val_acc = (self.predict(X_val) == y_val).mean()

                train_acc_history.append(train_acc)

                val_acc_history.append(val_acc)

                # Decay learning rate

                learning_rate *= learning_rate_decay

        return {

            'loss_history': loss_history,

            'train_acc_history': train_acc_history,

            'val_acc_history': val_acc_history,

        }

    def predict(self, X):

        """

        Use the trained weights of this two-layer network to predict labels for

        data points. For each data point we predict scores for each of the C

        classes, and assign each data point to the class with the highest score.

        Inputs:

        - X: A numpy array of shape (N, D) giving N D-dimensional data points to

          classify.

        Returns:

        - y_pred: A numpy array of shape (N,) giving predicted labels for each of

          the elements of X. For all i, y_pred[i] = c means that X[i] is predicted

          to have class c, where 0 <= c < C.

        """

        y_pred = None

        ###########################################################################

        # TODO: Implement this function; it should be VERY simple! #

        ###########################################################################

        # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        h = np.maximum(0, X.dot(self.params['W1']) + self.params['b1'])

        scores = h.dot(self.params['W2']) + self.params['b2']

        y_pred = np.argmax(scores, axis=1)

        pass

        # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

        return y_pred

↑ The relevant notes are written as comments in the code; a few sketches of the reasoning are added here:
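As a rough companion to the code comments, the backward pass in loss can be written out step by step. The symbols a, h, s, p and λ are shorthand introduced here for illustration (a is the pre-activation of the hidden layer, h is h_output, s is scores, p is softmax_output, and λ is reg); each line is meant to match the corresponding line of the code above, including the 0.5 factor on the regularization term.

```latex
\begin{aligned}
a &= XW_1 + b_1, \qquad h = \max(0, a), \qquad s = hW_2 + b_2, \qquad
p_{ij} = \frac{e^{s_{ij}}}{\sum_{k=1}^{C} e^{s_{ik}}} \\
L &= -\frac{1}{N}\sum_{i=1}^{N}\log p_{i,y_i}
     + \frac{\lambda}{2}\left(\lVert W_1\rVert_F^2 + \lVert W_2\rVert_F^2\right) \\
\frac{\partial L}{\partial s_{ij}} &= \frac{1}{N}\left(p_{ij} - \mathbb{1}[j = y_i]\right) \\
\frac{\partial L}{\partial W_2} &= h^{\top}\frac{\partial L}{\partial s} + \lambda W_2, \qquad
\frac{\partial L}{\partial b_2} = \sum_{i=1}^{N}\frac{\partial L}{\partial s_{i}} \\
\frac{\partial L}{\partial h} &= \frac{\partial L}{\partial s}\,W_2^{\top}, \qquad
\frac{\partial L}{\partial a} = \mathbb{1}[h > 0]\odot\frac{\partial L}{\partial h} \\
\frac{\partial L}{\partial W_1} &= X^{\top}\frac{\partial L}{\partial a} + \lambda W_1, \qquad
\frac{\partial L}{\partial b_1} = \sum_{i=1}^{N}\frac{\partial L}{\partial a_{i}}
\end{aligned}
```

Reading the code bottom-up against these lines, dscores is ∂L/∂s, dh is ∂L/∂h, and dh_ReLu is ∂L/∂a.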

two_layer_net.ipynb(2)

scores = net.loss(X)

print('Your scores:')

print(scores)

print()

print('correct scores:')

correct_scores = np.asarray([

[-0.81233741, -1.27654624, -0.70335995],

[-0.17129677, -1.18803311, -0.47310444],

[-0.51590475, -1.01354314, -0.8504215 ],

[-0.15419291, -0.48629638, -0.52901952],

[-0.00618733, -0.12435261, -0.15226949]])

print(correct_scores)

print()

# The difference should be very small. We get < 1e-7

print('Difference between your scores and correct scores:')

print(np.sum(np.abs(scores - correct_scores)))

↑ The difference between the computed scores and the correct scores:

Your scores:

[[-0.81233741 -1.27654624 -0.70335995]

[-0.17129677 -1.18803311 -0.47310444]

[-0.51590475 -1.01354314 -0.8504215 ]

[-0.15419291 -0.48629638 -0.52901952]

[-0.00618733 -0.12435261 -0.15226949]]

correct scores:

[[-0.81233741 -1.27654624 -0.70335995]

[-0.17129677 -1.18803311 -0.47310444]

[-0.51590475 -1.01354314 -0.8504215 ]

[-0.15419291 -0.48629638 -0.52901952]

[-0.00618733 -0.12435261 -0.15226949]]

Difference between your scores and correct scores:

3.6802720496109664e-08

Forward pass: compute loss

In the same function, implement the second part that computes the data and regularization loss.

loss, _ = net.loss(X, y, reg=0.05)

correct_loss = 1.30378789133

# should be very small, we get < 1e-12

print('Difference between your loss and correct loss:')

print(np.sum(np.abs(loss - correct_loss)))

↑ Compute the loss:

Difference between your loss and correct loss:

0.01896541960606335
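The difference reported here (about 0.019) is far above the expected 1e-12, even though the scores matched. A likely explanation, offered as a hypothesis and not a confirmed diagnosis, is the regularization convention: loss adds 0.5 * reg * sum(W^2), while the reference value may have been computed with reg * sum(W^2). The sketch below rebuilds the toy weights under the assumption that init_toy_model draws W1 and then W2 from np.random.randn right after seeding, as the __init__ shown earlier does, and prints the gap between the two conventions.

```python
import numpy as np

reg = 0.05

# Assumption: rebuild the toy weights the way init_toy_model() does
# (seed 0, std=1e-1, W1 drawn before W2; the zero biases use no random numbers).
np.random.seed(0)
W1 = 1e-1 * np.random.randn(4, 10)
W2 = 1e-1 * np.random.randn(10, 3)

sum_sq = np.sum(W1 * W1) + np.sum(W2 * W2)
reg_with_half = 0.5 * reg * sum_sq   # convention used in loss() in this post
reg_without_half = reg * sum_sq      # the other common convention

# If the hypothesis holds, this gap should be close to the reported difference.
print('gap between the two conventions:', reg_without_half - reg_with_half)
```

If the printed gap is close to 0.019, the consistent fix would be to use reg * (np.sum(W1 * W1) + np.sum(W2 * W2)) in the loss and 2 * reg * W in both weight gradients, changing all three lines together so the gradient check still passes.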

Backpropagation

Backward pass

Implement the rest of the function. This will compute the gradient of the loss with respect to the variables W1, b1, W2, and b2. Now that you (hopefully!) have a correctly implemented forward pass, you can debug your backward pass using a numeric gradient check:

from cs231n.gradient_check import eval_numerical_gradient

# Use numeric gradient checking to check your implementation of the backward pass.

# If your implementation is correct, the difference between the numeric and

# analytic gradients should be less than 1e-8 for each of W1, W2, b1, and b2.

loss, grads = net.loss(X, y, reg=0.05)

# these should all be less than 1e-8 or so

for param_name in grads:

    f = lambda W: net.loss(X, y, reg=0.05)[0]

    param_grad_num = eval_numerical_gradient(f, net.params[param_name], verbose=False)

    print('%s max relative error: %e' % (param_name, rel_error(param_grad_num, grads[param_name])))

↑ Relative errors of the gradients obtained by backpropagation:

W2 max relative error: 3.440708e-09

b2 max relative error: 3.865091e-11

W1 max relative error: 3.561318e-09

b1 max relative error: 1.555470e-09
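For readers who want to see what a numeric gradient check does, here is a minimal sketch using centered differences. The helper `numerical_gradient` is a hypothetical stand-in written for this example; it is not the cs231n eval_numerical_gradient implementation, although it follows the same general idea of perturbing one entry at a time.

```python
import numpy as np

def numerical_gradient(f, x, h=1e-5):
    """Centered-difference estimate of df/dx; x is perturbed in place and restored."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        f_plus = f(x)
        x[idx] = old - h
        f_minus = f(x)
        x[idx] = old  # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

def rel_error(x, y):
    """Maximum relative error, as defined in the setup cell."""
    return np.max(np.abs(x - y) / np.maximum(1e-8, np.abs(x) + np.abs(y)))

# Toy function with a known gradient: f(W) = sum(W**2), so df/dW = 2 * W.
np.random.seed(0)
W = np.random.randn(3, 4)
analytic = 2 * W
numeric = numerical_gradient(lambda w: np.sum(w ** 2), W)
print('max relative error:', rel_error(numeric, analytic))
```

Because the numeric estimate only needs the loss value, it checks the backward pass independently of how the analytic gradients were derived.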

Train the network

To train the network we will use stochastic gradient descent (SGD), similar to the SVM and Softmax classifiers. Look at the function TwoLayerNet.train and fill in the missing sections to implement the training procedure. This should be very similar to the training procedure you used for the SVM and Softmax classifiers. You will also have to implement TwoLayerNet.predict, as the training process periodically performs prediction to keep track of accuracy over time while the network trains.

Once you have implemented the method, run the code below to train a two-layer network on toy data. You should achieve a training loss less than 0.02.

net = init_toy_model()

stats = net.train(X, y, X, y,

learning_rate=1e-1, reg=5e-6,

num_iters=100, verbose=False)

print('Final training loss: ', stats['loss_history'][-1])

# plot the loss history

plt.plot(stats['loss_history'])

plt.xlabel('iteration')

plt.ylabel('training loss')

plt.title('Training Loss history')

plt.show()

↑ Visualization of the training loss on the toy data:

Load the data

Now that you have implemented a two-layer network that passes gradient checks and works on toy data, it’s time to load up our favorite CIFAR-10 data so we can use it to train a classifier on a real dataset.

from cs231n.data_utils import load_CIFAR10

def get_CIFAR10_data(num_training=49000, num_validation=1000, num_test=1000):

    """

    Load the CIFAR-10 dataset from disk and perform preprocessing to prepare

    it for the two-layer neural net classifier. These are the same steps as

    we used for the SVM, but condensed to a single function.

    """

    # Load the raw CIFAR-10 data

    cifar10_dir = 'cs231n/datasets/cifar-10-batches-py'

    # Cleaning up variables to prevent loading data multiple times (which may cause memory issue)

    try:

        del X_train, y_train

        del X_test, y_test

        print('Clear previously loaded data.')

    except:

        pass

    X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

    # Subsample the data

    mask = list(range(num_training, num_training + num_validation))

    X_val = X_train[mask]

    y_val = y_train[mask]

    mask = list(range(num_training))

    X_train = X_train[mask]

    y_train = y_train[mask]

    mask = list(range(num_test))

    X_test = X_test[mask]

    y_test = y_test[mask]

    # Normalize the data: subtract the mean image

    mean_image = np.mean(X_train, axis=0)

    X_train -= mean_image

    X_val -= mean_image

    X_test -= mean_image

    # Reshape data to rows

    X_train = X_train.reshape(num_training, -1)

    X_val = X_val.reshape(num_validation, -1)

    X_test = X_test.reshape(num_test, -1)

    return X_train, y_train, X_val, y_val, X_test, y_test

# Invoke the above function to get our data.

X_train, y_train, X_val, y_val, X_test, y_test = get_CIFAR10_data()

print('Train data shape: ', X_train.shape)

print('Train labels shape: ', y_train.shape)

print('Validation data shape: ', X_val.shape)

print('Validation labels shape: ', y_val.shape)

print('Test data shape: ', X_test.shape)

print('Test labels shape: ', y_test.shape)

↑ Load the CIFAR-10 data and print the shapes of the three splits:

Train data shape: (49000, 3072)

Train labels shape: (49000,)

Validation data shape: (1000, 3072)

Validation labels shape: (1000,)

Test data shape: (1000, 3072)

Test labels shape: (1000,)
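The 3072 in these shapes comes from flattening each 32 x 32 x 3 image into one row after subtracting the mean image. A small sketch of those two preprocessing steps follows; random numbers stand in for the CIFAR-10 images (an assumption made so the example runs without the dataset), and the split sizes are arbitrary.

```python
import numpy as np

# Assumption: random values stand in for CIFAR-10 images of shape (num_images, 32, 32, 3).
rng = np.random.default_rng(0)
X_train = rng.uniform(0, 255, size=(20, 32, 32, 3))
X_val = rng.uniform(0, 255, size=(5, 32, 32, 3))

# The mean image is computed from the training split only and reused for the other splits.
mean_image = X_train.mean(axis=0)
X_train = X_train - mean_image
X_val = X_val - mean_image

# Flatten every image into one row: 32 * 32 * 3 = 3072 features.
X_train = X_train.reshape(X_train.shape[0], -1)
X_val = X_val.reshape(X_val.shape[0], -1)

print(X_train.shape, X_val.shape)            # (20, 3072) (5, 3072)
print(np.allclose(X_train.mean(axis=0), 0))  # True: each training feature is now centered
```

Using the training mean for the validation and test splits keeps information from those splits out of the preprocessing.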

Train a network

To train our network we will use SGD. In addition, we will adjust the learning rate with an exponential learning rate schedule as optimization proceeds; after each epoch, we will reduce the learning rate by multiplying it by a decay rate.

input_size = 32 * 32 * 3

hidden_size = 50

num_classes = 10

net = TwoLayerNet(input_size, hidden_size, num_classes)

# Train the network

stats = net.train(X_train, y_train, X_val, y_val,

num_iters=1000, batch_size=200,

learning_rate=1e-4, learning_rate_decay=0.95,

reg=0.25, verbose=True)

# Predict on the validation set

val_acc = (net.predict(X_val) == y_val).mean()

print('Validation accuracy: ', val_acc)

↑ Train the network with SGD:

iteration 0 / 1000: loss 2.302762

iteration 100 / 1000: loss 2.302358

iteration 200 / 1000: loss 2.297404

iteration 300 / 1000: loss 2.258897

iteration 400 / 1000: loss 2.202975

iteration 500 / 1000: loss 2.116816

iteration 600 / 1000: loss 2.049789

iteration 700 / 1000: loss 1.985711

iteration 800 / 1000: loss 2.003726

iteration 900 / 1000: loss 1.948076

Validation accuracy: 0.287
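Two quick sanity checks can be read off this log. First, with tiny initial weights all 10 class scores are nearly equal, so the softmax loss should start near log(10). Second, the exponential schedule only decays the learning rate once per epoch, which is not often in a 1000-iteration run. The sketch below replays just the decay condition from train with the settings used above; it does not train anything.

```python
import numpy as np

# Expected starting loss for 10 classes with near-uniform predictions.
print(np.log(10))  # about 2.3026, close to the logged 2.302762

# Replay the decay schedule of this run.
num_train, batch_size, num_iters = 49000, 200, 1000
learning_rate, decay = 1e-4, 0.95
iterations_per_epoch = max(num_train / batch_size, 1)  # 245.0

num_decays = 0
for it in range(num_iters):
    if it % iterations_per_epoch == 0:  # same condition as in train(), true at it = 0 as well
        learning_rate *= decay
        num_decays += 1

print(num_decays, learning_rate)  # 5 decays, final rate about 7.74e-05
```

So the learning rate only drops from 1e-4 to roughly 7.7e-5 over the whole run, which fits the slow, nearly linear decrease of the loss in the log.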

Debug the training

With the default parameters we provided above, you should get a validation accuracy of about 0.29 on the validation set. This isn’t very good.

One strategy for getting insight into what’s wrong is to plot the loss function and the accuracies on the training and validation sets during optimization.

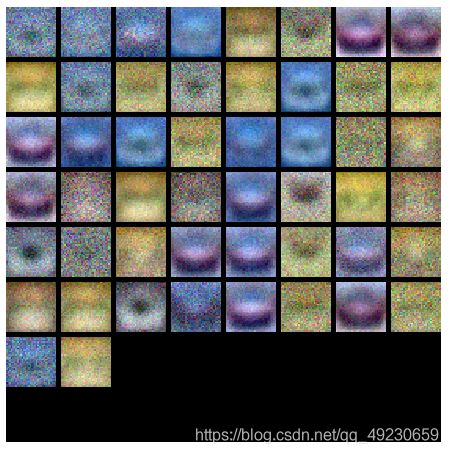

Another strategy is to visualize the weights that were learned in the first layer of the network. In most neural networks trained on visual data, the first layer weights typically show some visible structure when visualized.

# Plot the loss function and train / validation accuracies

plt.subplot(2, 1, 1)

plt.plot(stats['loss_history'])

plt.title('Loss history')

plt.xlabel('Iteration')

plt.ylabel('Loss')

plt.subplot(2, 1, 2)

plt.plot(stats['train_acc_history'], label='train')

plt.plot(stats['val_acc_history'], label='val')

plt.title('Classification accuracy history')

plt.xlabel('Epoch')

plt.ylabel('Classification accuracy')

plt.legend()

plt.show()

from cs231n.vis_utils import visualize_grid

# Visualize the weights of the network

def show_net_weights(net):

    W1 = net.params['W1']

    W1 = W1.reshape(32, 32, 3, -1).transpose(3, 0, 1, 2)

    plt.imshow(visualize_grid(W1, padding=3).astype('uint8'))

    plt.gca().axis('off')

    plt.show()

show_net_weights(net)
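The reshape and transpose inside show_net_weights can look opaque, so here is a small shape check. Random numbers stand in for the learned W1 (an assumption for the sake of a self-contained example); the point is only that each column of W1 becomes one 32 x 32 RGB image.

```python
import numpy as np

H = 50  # hidden size used in the run above
# Assumption: random numbers stand in for the learned first-layer weights.
W1 = np.random.default_rng(0).normal(size=(32 * 32 * 3, H))

# Column k of W1 holds the 3072 input weights of hidden unit k.
# Un-flatten the rows to (32, 32, 3), then move the unit axis to the front.
filters = W1.reshape(32, 32, 3, -1).transpose(3, 0, 1, 2)
print(filters.shape)  # (50, 32, 32, 3)

# filters[k] is exactly column k of W1 viewed as a 32x32 RGB image.
k = 7
print(np.array_equal(filters[k], W1[:, k].reshape(32, 32, 3)))  # True
```

This works because the images were flattened row by row with reshape(num_training, -1), so the same reshape order recovers the spatial layout.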

Tune your hyperparameters

What’s wrong? Looking at the visualizations above, we see that the loss is decreasing more or less linearly, which seems to suggest that the learning rate may be too low. Moreover, there is no gap between the training and validation accuracy, suggesting that the model we used has low capacity, and that we should increase its size. On the other hand, with a very large model we would expect to see more overfitting, which would manifest itself as a very large gap between the training and validation accuracy.

Tuning. Tuning the hyperparameters and developing intuition for how they affect the final performance is a large part of using Neural Networks, so we want you to get a lot of practice. Below, you should experiment with different values of the various hyperparameters, including hidden layer size, learning rate, number of training epochs, and regularization strength. You might also consider tuning the learning rate decay, but you should be able to get good performance using the default value.

Approximate results. You should aim to achieve a classification accuracy of greater than 48% on the validation set. Our best network gets over 52% on the validation set.

Experiment: Your goal in this exercise is to get as good a result on CIFAR-10 as you can (52% could serve as a reference), with a fully-connected Neural Network. Feel free to implement your own techniques (e.g. PCA to reduce dimensionality, or adding dropout, or adding features to the solver, etc.).

Explain your hyperparameter tuning process below.

Y:

best_net = None # store the best model into this

#################################################################################

# TODO: Tune hyperparameters using the validation set. Store your best trained #

# model in best_net. #

# #

# To help debug your network, it may help to use visualizations similar to the #

# ones we used above; these visualizations will have significant qualitative #

# differences from the ones we saw above for the poorly tuned network. #

# #

# Tweaking hyperparameters by hand can be fun, but you might find it useful to #

# write code to sweep through possible combinations of hyperparameters #

# automatically like we did on the previous exercises. #

#################################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

best_val = -1

input_size = 32 * 32 * 3

hidden_size = 100

num_classes = 10

learning_rates = [1e-3, 1.5e-3, 2e-3]

regularizations = [0.2, 0.35, 0.5]

for lr in learning_rates:

    for reg in regularizations:

        # Build a fresh network for every configuration. Reusing one net object across

        # the sweep would warm-start each run from the previous one and leave best_net

        # pointing at the last-trained weights.

        net = TwoLayerNet(input_size, hidden_size, num_classes)

        stats = net.train(X_train, y_train, X_val, y_val,

                          num_iters=1500, batch_size=200,

                          learning_rate=lr, learning_rate_decay=0.95,

                          reg=reg, verbose=False)

        val_acc = (net.predict(X_val) == y_val).mean()

        if val_acc > best_val:

            best_val = val_acc

            best_net = net

        print ("lr ",lr, "reg ", reg, "val accuracy:", val_acc)

print ("best validation accuracyachieved during cross-validation: ", best_val)

pass

# *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

↑ Sweep the hyperparameters and keep the best ones. Output of the recorded run:

lr 0.001 reg 0.2 val accuracy: 0.514

lr 0.001 reg 0.35 val accuracy: 0.521

lr 0.001 reg 0.5 val accuracy: 0.522

lr 0.0015 reg 0.2 val accuracy: 0.49

lr 0.0015 reg 0.35 val accuracy: 0.53

lr 0.0015 reg 0.5 val accuracy: 0.523

lr 0.002 reg 0.2 val accuracy: 0.52

lr 0.002 reg 0.35 val accuracy: 0.506

lr 0.002 reg 0.5 val accuracy: 0.524

best validation accuracyachieved during cross-validation: 0.53

# Print your validation accuracy: this should be above 48%

val_acc = (best_net.predict(X_val) == y_val).mean()

print('Validation accuracy: ', val_acc)

↑ Validation accuracy:

Validation accuracy: 0.524
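In the recorded output, best_net scores 0.524 on the validation set, which equals the accuracy of the last configuration in the sweep (lr 0.002, reg 0.5) and not the best 0.53. That is the pattern one would expect when a single network object is trained in place for every configuration, so that best_net is only another name for it. The sketch below illustrates the pitfall with a hypothetical ToyModel class; it is an illustration of Python reference semantics, not the assignment code.

```python
import copy

class ToyModel:
    """Hypothetical stand-in for a network that is trained in place."""
    def __init__(self):
        self.weight = 0.0

    def train(self, target):
        self.weight = target  # "training" overwrites the weights in place

# Pitfall: one object reused across the sweep; best_model is only a reference to it.
model = ToyModel()
best_model = None
for target in [1.0, 2.0, 3.0]:
    model.train(target)
    if target == 2.0:          # pretend the middle configuration scored best
        best_model = model
print(best_model.weight)       # 3.0: the weights of the last run, not the best one

# One way around it: snapshot the winner (or build a new model per configuration).
model = ToyModel()
best_snapshot = None
for target in [1.0, 2.0, 3.0]:
    model.train(target)
    if target == 2.0:
        best_snapshot = copy.deepcopy(model)
print(best_snapshot.weight)    # 2.0
```

Building a new model per configuration has the added benefit that every run starts from the same kind of random initialization, so the configurations are compared on equal terms.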

# Visualize the weights of the best network

show_net_weights(best_net)

Run on the test set

When you are done experimenting, you should evaluate your final trained network on the test set; you should get above 48%.

# Print your test accuracy: this should be above 48%

test_acc = (best_net.predict(X_test) == y_test).mean()

print('Test accuracy: ', test_acc)

↑ Test accuracy:

Test accuracy: 0.531

A final short question:

Inline Question

Now that you have trained a Neural Network classifier, you may find that your testing accuracy is much lower than the training accuracy. In what ways can we decrease this gap? Select all that apply.

1.Train on a larger dataset.

2.Add more hidden units.

3.Increase the regularization strength.

4.None of the above.

Y:

Y: