keras搭建unet模型—语义分割

在前一篇文章基于keras的全卷积网络FCN—语义分割中,博主用keras搭建了fcn模型,使用猫狗数据集做了训练。本文在此基础上搭建了unet模型,数据介绍请看上面这篇文章,本文直接展示unet的模型。

本文的环境是tensorflow2.4,gpu版本。

unet:

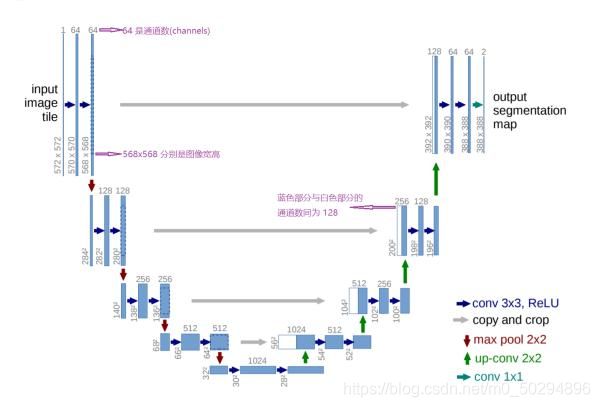

unet是u型结构,前面的层不断下采样,后面的层在不断上采样,同stage的跳接以保存信息。unet的层数不是很深,在医学领域上用于细胞检测效果显著。

搭建unet

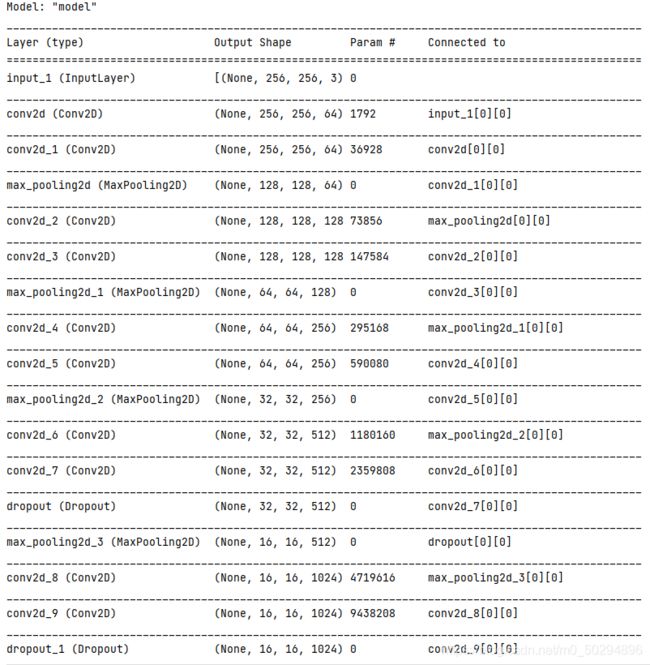

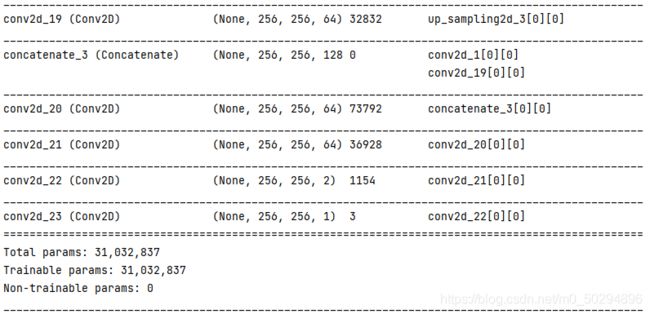

def unet(pretrained_weights=None, input_size=(256, 256, 3)):

inputs = Input(input_size)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)

pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2)

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3)

pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4)

drop4 = Dropout(0.5)(conv4)

pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5)

drop5 = Dropout(0.5)(conv5)

up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(drop5))

merge6 = concatenate([drop4, up6], axis=3)

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6)

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6)

up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv6))

merge7 = concatenate([conv3, up7], axis=3)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7)

up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv7))

merge8 = concatenate([conv2, up8], axis=3)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8)

up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv8))

merge9 = concatenate([conv1, up9], axis=3)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)

model = Model(inputs=inputs, outputs=conv10)

model.summary()

if (pretrained_weights):

model.load_weights(pretrained_weights)

return model

训练

训练这部分用的数据集准备可以详细参考这里,你也可以查看附录中的全部代码,内容是相同的。

训练fcn时我们使用的是adam优化器,loss函数选择的是sparse_categorical_crossentropy。训练unet时,adagrad更适合做unet的优化器,如果用adam可能需要调整一下学习率,loss函数选择binary_crossentropy较好。这是博主调试代码后选择的,你也可以根据自己的想法更换它们。

# 调整显存使用情况,避免显存占满

config = ConfigProto()

config.gpu_options.allow_growth = True

session = InteractiveSession(config=config)

data_train, data_test = dataset()

# unet

model = unet()

model.compile(optimizer='adagrad', loss='binary_crossentropy', metrics=['acc'])

tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir="E:\jupyter\log2", histogram_freq=1)

model.fit(data_train, epochs=1, batch_size = 8, validation_data=data_test, callbacks = [tensorboard_callback])

model.save('FCN_model.h5')

训练结果

![]() 仍然只训练了一个epoch

仍然只训练了一个epoch

tensorboard的使用方法如果有疑问可以参考win10+keras+tensorboard的使用

全部代码

dataset_my.py

import tensorflow as tf

import numpy as np

import glob

"""数据整理,用的是猫狗数据集"""

def read_jpg(path):

img=tf.io.read_file(path)

img=tf.image.decode_jpeg(img,channels=3)

return img

def read_png(path):

img=tf.io.read_file(path)

img=tf.image.decode_png(img,channels=1)

return img

#现在编写归一化的函数

def normal_img(input_images,input_anno):

input_images=tf.cast(input_images,tf.float32)

input_images=input_images/127.5-1

input_anno-=1

return input_images,input_anno

#加载函数

def load_images(input_images_path,input_anno_path):

input_image=read_jpg(input_images_path)

input_anno=read_png(input_anno_path)

input_image=tf.image.resize(input_image,(256,256))

input_anno=tf.image.resize(input_anno,(256,256))

return normal_img(input_image,input_anno)

def dataset():

# 读取图像和目标图像

images = glob.glob(r"images\*.jpg")

anno = glob.glob(r"annotations\trimaps\*.png")

# 现在对读取进来的数据进行制作batch

np.random.seed(1)

index = np.random.permutation(len(images)) # 随机打乱7390个数

images = np.array(images)[index]

anno = np.array(anno)[index]

# 创建dataset

dataset = tf.data.Dataset.from_tensor_slices((images, anno))

# 测试数据量和训练数据量,20%测试。

test_count = int(len(images) * 0.2)

train_count = len(images) - test_count

# 取出训练数据和测试数据

data_train = dataset.skip(test_count) # 跳过前test的数据

data_test = dataset.take(test_count) # 取前test的数据

data_train = data_train.map(load_images, num_parallel_calls=tf.data.experimental.AUTOTUNE)

data_test = data_test.map(load_images, num_parallel_calls=tf.data.experimental.AUTOTUNE)

# 现在开始batch的制作,不制作batch会使维度由4维降为3维

BATCH_SIZE = 3

data_train = data_train.shuffle(100).batch(BATCH_SIZE)

data_test = data_test.batch(BATCH_SIZE)

return data_train, data_test

model_my.py

import tensorflow as tf

from tensorflow.keras.models import *

from tensorflow.keras.layers import *

from tensorflow.keras.applications import VGG16

"""模型架构,fcn和unet"""

def fcn(pretrained_weights=None, input_size=(256, 256, 3)):

# 加载vgg16

conv_base = VGG16(weights='imagenet', input_shape=input_size, include_top=False)

# 现在创建多输出模型,三个output

layer_names = [

'block5_conv3',

'block4_conv3',

'block3_conv3',

'block5_pool']

# 得到这几个曾输出的列表,为了方便就直接使用列表推倒式了

layers_output = [conv_base.get_layer(layer_name).output for layer_name in layer_names]

# 创建一个多输出模型,这样一张图片经过这个网络之后,就会有多个输出值了

multiout_model = Model(inputs=conv_base.input, outputs=layers_output)

multiout_model.trainable = True

inputs = Input(shape=input_size)

# 这个多输出模型会输出多个值,因此前面用多个参数来接收即可。

out_block5_conv3, out_block4_conv3, out_block3_conv3, out = multiout_model(inputs)

# 现在将最后一层输出的结果进行上采样,然后分别和中间层多输出的结果进行相加,实现跳级连接

x1 = Conv2DTranspose(512, 3, strides=2, padding='same', activation='relu')(out)

# 上采样之后再加上一层卷积来提取特征

x1 = Conv2D(512, 3, padding='same', activation='relu')(x1)

# 与多输出结果的倒数第二层进行相加,shape不变

x2 = tf.add(x1, out_block5_conv3)

# x2进行上采样

x2 = Conv2DTranspose(512, 3, strides=2, padding='same', activation='relu')(x2)

# 直接拿到x3,不使用

x3 = tf.add(x2, out_block4_conv3)

# x3进行上采样

x3 = Conv2DTranspose(256, 3, strides=2, padding='same', activation='relu')(x3)

# 增加卷积提取特征

x3 = Conv2D(256, 3, padding='same', activation='relu')(x3)

x4 = tf.add(x3, out_block3_conv3)

# x4还需要再次进行上采样,得到和原图一样大小的图片,再进行分类

x5 = Conv2DTranspose(128, 3, strides=2, padding='same', activation='relu')(x4)

# 继续进行卷积提取特征

x5 = Conv2D(128, 3, padding='same', activation='relu')(x5)

# 最后一步,图像还原

preditcion = tf.keras.layers.Conv2DTranspose(3, 3, strides=2, padding='same', activation='softmax')(x5)

model = Model(inputs=inputs, outputs=preditcion)

model.summary()

# 加载预训练模型

if (pretrained_weights):

model.load_weights(pretrained_weights)

return model

def unet(pretrained_weights=None, input_size=(256, 256, 3)):

inputs = Input(input_size)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)

conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)

pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1)

conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2)

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2)

conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3)

pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3)

conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4)

drop4 = Dropout(0.5)(conv4)

pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4)

conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5)

drop5 = Dropout(0.5)(conv5)

up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(drop5))

merge6 = concatenate([drop4, up6], axis=3)

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6)

conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6)

up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv6))

merge7 = concatenate([conv3, up7], axis=3)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7)

conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7)

up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv7))

merge8 = concatenate([conv2, up8], axis=3)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8)

conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8)

up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(

UpSampling2D(size=(2, 2))(conv8))

merge9 = concatenate([conv1, up9], axis=3)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9)

conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)

model = Model(inputs=inputs, outputs=conv10)

model.summary()

if (pretrained_weights):

model.load_weights(pretrained_weights)

return model

main.py

from tensorflow.compat.v1 import ConfigProto

from tensorflow.compat.v1 import InteractiveSession

from model_my import fcn, unet

from dataset_my import dataset

import tensorflow as tf

# 调整显存使用情况,避免显存占满

config = ConfigProto()

config.gpu_options.allow_growth = True

session = InteractiveSession(config=config)

data_train, data_test = dataset()

# unet

model = unet()

model.compile(optimizer='adagrad', loss='binary_crossentropy', metrics=['acc'])

# fcn

# model = fcn()

# model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])

tensorboard_callback = tf.keras.callbacks.TensorBoard(log_dir="E:\jupyter\log2", histogram_freq=1)

model.fit(data_train, epochs=1, batch_size = 8, validation_data=data_test, callbacks = [tensorboard_callback])

model.save('FCN_model.h5')

# 加载保存的模型

# new_model=tf.keras.models.load_model('FCN_model.h5')