Point GNN: Environment Setup and Running


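Table of contents

- 1. Official project repository

- 2. Running multiple CUDA versions side by side

- 3. VSCode debugging does not work with Python 3.6

- 4. TensorFlow cannot be imported because protobuf is too new

- 5. Checking TensorFlow GPU support

- 6. Downloading the KITTI dataset

- 7. Converting KITTI .bin point clouds to .pcd

- 8. The official code does not draw 3D detection boxes; find the relevant code, modify it, and view the results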

1. Official project repository

GitHub - WeijingShi/Point-GNN (https://github.com/WeijingShi/Point-GNN): Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud, CVPR 2020.

2. Running multiple CUDA versions side by side

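2.1 Installing multiple CUDA versions

It is recommended to use the CUDA version that matches TensorFlow 1.15.0, namely CUDA 10.0. With CUDA 10.0 the project ran normally, with no errors.

To choose which installed CUDA version is used, edit the environment variables as described in:

Installing multiple CUDA versions on Windows 10 - Trouvaille_fighting - Cnblogs (cnblogs.com)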
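If editing the system-wide environment variables is inconvenient, a rough per-process alternative is to put the desired toolkit's `bin` directory at the front of `PATH` before importing TensorFlow. The sketch below assumes the default Windows install location `C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v<version>`; the helper name `use_cuda` is hypothetical. It only changes `os.environ` for the current process, so it has to run before `import tensorflow`, and as far as I know it relies on Windows resolving the CUDA DLLs through `PATH`, which holds for the Python 3.7 setup used in this post.

```python
import os

# Assumption: default CUDA install root on Windows; change it if yours differs.
DEFAULT_CUDA_ROOT = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA"


def use_cuda(version, root=DEFAULT_CUDA_ROOT):
    """Hypothetical helper: prefer one CUDA toolkit for this process only.

    Sets CUDA_PATH and puts <root>/v<version>/bin and libnvvp at the front
    of PATH, so that toolkit's DLLs are found before other installed
    versions. Call it before `import tensorflow`.
    """
    cuda_home = os.path.join(root, "v" + version)
    os.environ["CUDA_PATH"] = cuda_home
    front = [os.path.join(cuda_home, "bin"), os.path.join(cuda_home, "libnvvp")]
    os.environ["PATH"] = os.pathsep.join(front + [os.environ.get("PATH", "")])
    return cuda_home
```

Usage: call `use_cuda("10.0")` at the very top of the entry script, then `import tensorflow as tf`. The system-wide variables stay untouched, so other projects can keep using CUDA 11.1.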

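2.2 Renaming CUDA DLLs

My machine has CUDA 11.1 installed, but TensorFlow 1.x cannot use newer CUDA versions. One workaround is to rename some CUDA DLLs so that their version suffix changes from 11/110 to 10/100 (for example, cudart64_110.dll to cudart64_100.dll).

I tried copying the whole v11.1 directory, renaming the copy to v10.xfake, and renaming the DLLs inside it, after which TensorFlow appeared to work.

This method is not recommended: it later left TensorFlow sessions unable to run.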

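3. VSCode debugging does not work with Python 3.6

After upgrading the Python interpreter to 3.7, debugging in VSCode works.

Related post: Fix for VSCode being unable to debug Python 3.6 - Johnny__Wang__'s blog - CSDN (VSCode extension downgrade)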
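A small guard at the top of a script can catch running under the wrong interpreter. The sketch below encodes the observations from this setup: Python 3.6 could not be debugged in VSCode, and official TensorFlow 1.15 wheels only go up to Python 3.7, so 3.7 is the version that satisfies both. The function name `interpreter_ok` is hypothetical.

```python
import sys


def interpreter_ok(version_info=sys.version_info):
    """True only for Python 3.7.

    In this setup 3.6 could not be debugged in VSCode, and official
    TensorFlow 1.15 wheels stop at 3.7, so 3.7 is the overlap.
    """
    return tuple(version_info[:2]) == (3, 7)


if __name__ == "__main__":
    if not interpreter_ok():
        print("Warning: expected Python 3.7, got %d.%d" % tuple(sys.version_info[:2]))
```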

4. TensorFlow cannot be imported because protobuf is too new

The fix is to downgrade protobuf to 3.20.x or lower; see:

1. Downgrade the protobuf package to 3.20.x or lower. - weixin_44834086's blog - CSDN

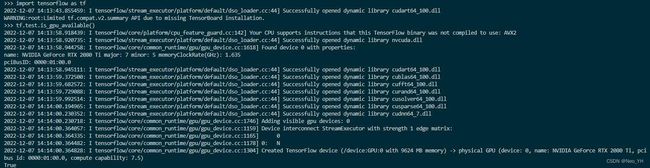
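Before importing TensorFlow, the installed protobuf version can be checked in code. This is a minimal sketch; `protobuf_compatible` is a hypothetical helper that only compares the major and minor version numbers against 3.20.

```python
def protobuf_compatible(version):
    """True if a protobuf version string (e.g. "3.20.3") is 3.20.x or lower.

    In this setup TensorFlow 1.15 failed to import with newer protobuf
    releases (the 4.x series), so anything above 3.20 is reported as too new.
    """
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) <= (3, 20)
```

Usage: `import google.protobuf` and pass `google.protobuf.__version__`. If it returns `False`, downgrade with `pip install "protobuf==3.20.*"`. The error message itself also offers setting `PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python`, which works but falls back to much slower pure-Python parsing.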

5. Checking TensorFlow GPU support

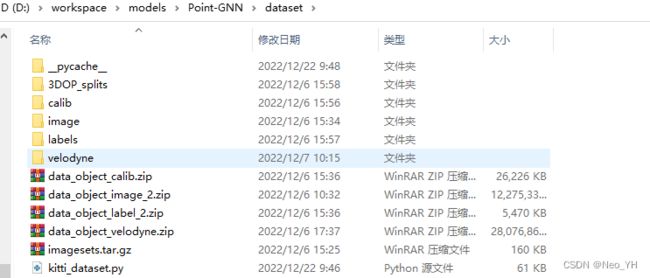
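The same check can also be done in code. `tf.test.is_built_with_cuda()` and `tf.test.is_gpu_available()` exist in TensorFlow 1.15 (the latter is deprecated in 2.x). Wrapping the import lets the script report a broken install, such as the protobuf error from section 4 or a missing CUDA DLL, instead of crashing. The function name `gpu_report` is hypothetical.

```python
def gpu_report():
    """Summarise whether the installed TensorFlow can see a GPU.

    Import failures (missing package, incompatible protobuf, missing CUDA
    DLLs) are caught and returned in the dict instead of being raised.
    """
    try:
        import tensorflow as tf
    except Exception as exc:  # ImportError, or TypeError from a too-new protobuf
        return {"tensorflow": None, "error": repr(exc)}
    return {
        "tensorflow": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpu_available": tf.test.is_gpu_available(),
    }


if __name__ == "__main__":
    print(gpu_report())
```

With a working CUDA 10.0 setup, both `built_with_cuda` and `gpu_available` should be `True`.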

6. Downloading the KITTI dataset

https://www.cvlibs.net/datasets/kitti/eval_object.php?obj_benchmark=3d

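The dataset is large (about 38.5 GB) and is split into several parts. I organized it following the official project's instructions. Of the left and right color images, you only need to download one set.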
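Before running the project, it helps to confirm that every folder is where the code expects it. The sketch below is a hypothetical checker. The folder list is my assumption of the layout described in the Point-GNN README (left color images in `image_2`, point clouds in `velodyne`, calibration, and training labels in `label_2`); edit `EXPECTED_DIRS` if your copy of the README says otherwise.

```python
import os

# Assumption: the layout I understand the Point-GNN README to describe;
# edit this list to match the README (and your own download) if it differs.
EXPECTED_DIRS = [
    "image/training/image_2",      # left color images
    "image/testing/image_2",
    "velodyne/training/velodyne",  # point clouds (.bin)
    "velodyne/testing/velodyne",
    "calib/training/calib",        # calibration files
    "calib/testing/calib",
    "labels/training/label_2",     # training labels
]


def missing_kitti_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    return [d for d in expected
            if not os.path.isdir(os.path.join(root, *d.split("/")))]
```

Usage: `print(missing_kitti_dirs(r"D:\KITTI"))`, with your own dataset root in place of the hypothetical path; an empty list means every expected folder is present.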

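7. Converting KITTI .bin point clouds to .pcd

Reading the .bin files directly also works; each file is a flat array of float32 values, four per point (x, y, z, reflectance). The conversion below is provided so the point clouds can be viewed in tools such as CloudCompare.

Point cloud mapping with the KITTI dataset - 灰信网 (software development blog aggregator) (freesion.com)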

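```c
#include <math.h>
#include <stdio.h>
#include <stdlib.h>

/* Convert a KITTI velodyne .bin file (4 float32 per point: x, y, z, reflectance)
   to an ASCII .pcd file, keeping only points closer than max_dist to the sensor.
   Returns the number of points written, or -1 on error. */
int bin_to_pcd(const char *bin_path, const char *pcd_path, float max_dist)
{
    FILE *in = fopen(bin_path, "rb");
    if (!in) return -1;
    fseek(in, 0, SEEK_END);
    long bytes = ftell(in);
    fseek(in, 0, SEEK_SET);
    if (bytes < 0) { fclose(in); return -1; }
    size_t num = (size_t)bytes / (4 * sizeof(float));   /* whole points in the file */
    float *data = (float *)malloc(num * 4 * sizeof(float));
    if (!data && num > 0) { fclose(in); return -1; }
    num = fread(data, 4 * sizeof(float), num, in);
    fclose(in);

    /* Pass 1: count the points to keep; the PCD header needs this number. */
    size_t kept = 0;
    for (size_t i = 0; i < num; i++) {
        const float *p = data + 4 * i;
        if (sqrtf(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]) < max_dist) kept++;
    }

    FILE *out = fopen(pcd_path, "w");
    if (!out) { free(data); return -1; }
    fprintf(out, "VERSION 0.7\nFIELDS x y z intensity\nSIZE 4 4 4 4\nTYPE F F F F\n"
                 "COUNT 1 1 1 1\nWIDTH %zu\nHEIGHT 1\nVIEWPOINT 0 0 0 1 0 0 0\n"
                 "POINTS %zu\nDATA ascii\n", kept, kept);

    /* Pass 2: write x y z reflectance for every point inside the threshold. */
    for (size_t i = 0; i < num; i++) {
        const float *p = data + 4 * i;
        if (sqrtf(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]) < max_dist)
            fprintf(out, "%f %f %f %f\n", p[0], p[1], p[2], p[3]);
    }
    fclose(out);
    free(data);
    return (int)kept;
}

int main(int argc, char **argv)
{
    if (argc < 3) {
        fprintf(stderr, "usage: %s input.bin output.pcd\n", argv[0]);
        return 1;
    }
    int kept = bin_to_pcd(argv[1], argv[2], 20.0f);  /* 20 m distance threshold */
    if (kept < 0) { fprintf(stderr, "conversion failed\n"); return 1; }
    printf("wrote %d points\n", kept);
    return 0;
}
```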
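For reading the .bin files directly instead of converting them, NumPy can load a whole scan in one call, since the file is just float32 values, four per point. This is a minimal sketch; the function name `load_kitti_bin` is hypothetical, and the optional distance filter mirrors the distance threshold in the C code.

```python
import numpy as np


def load_kitti_bin(path, max_dist=None):
    """Load a KITTI velodyne scan as an (N, 4) float32 array: x, y, z, reflectance.

    If max_dist is given, keep only points closer than max_dist (metres)
    to the sensor origin, like the distance filter in the C code.
    """
    points = np.fromfile(path, dtype=np.float32).reshape(-1, 4)
    if max_dist is not None:
        points = points[np.linalg.norm(points[:, :3], axis=1) < max_dist]
    return points
```

Usage: `pts = load_kitti_bin("000000.bin", max_dist=20)` gives an array that can be fed straight into NumPy-based processing without any intermediate files.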

8. The official code does not draw 3D detection boxes; find the relevant code, modify it, and view the results

```python
# convert to KITTI ================================================
detection_boxes_3d_corners = nms.boxes_3d_to_corners(
    detection_boxes_3d)
pred_labels = []
# Corner-index pairs for the 12 edges of a projected box: the face formed by
# corners 0-3, the face formed by corners 4-7, then the 4 edges joining them.
box_edges = [(0, 1), (1, 2), (2, 3), (0, 3),
             (4, 5), (5, 6), (6, 7), (4, 7),
             (0, 4), (1, 5), (2, 6), (3, 7)]
for i in range(len(detection_boxes_3d_corners)):
    detection_box_3d_corners = detection_boxes_3d_corners[i]
    # Project the 8 corners from camera coordinates onto the image plane
    corners_cam_points = Points(xyz=detection_box_3d_corners, attr=None)
    corners_img_points = dataset.cam_points_to_image(corners_cam_points, calib)
    corners_xy = corners_img_points.xyz[:, :2]
    if config['label_method'] == 'yaw':
        all_class_name = ['Background', 'Car', 'Car', 'Pedestrian',
                          'Pedestrian', 'Cyclist', 'Cyclist', 'DontCare']
    if config['label_method'] == 'Car':
        all_class_name = ['Background', 'Car', 'Car', 'DontCare']
    if config['label_method'] == 'Pedestrian_and_Cyclist':
        all_class_name = ['Background', 'Pedestrian', 'Pedestrian',
                          'Cyclist', 'Cyclist', 'DontCare']
    if config['label_method'] == 'alpha':
        all_class_name = ['Background', 'Car', 'Car', 'Pedestrian',
                          'Pedestrian', 'Cyclist', 'Cyclist', 'DontCare']
    class_name = all_class_name[class_labels[i]]
    # Debugging aids: mark every projected corner, or join every pair of
    # corners (loop variables j/k avoid shadowing the outer index i).
    # for j in range(len(corners_xy)):
    #     cv2.circle(image, (int(corners_xy[j][0]), int(corners_xy[j][1])), 3, (255, 255, 125), -1)
    # for j in range(len(corners_xy)):
    #     for k in range(len(corners_xy)):
    #         cv2.line(image, (int(corners_xy[j][0]), int(corners_xy[j][1])), (int(corners_xy[k][0]), int(corners_xy[k][1])), (0, 255, 0), 1)
    # Draw the projected 3D box as 12 green, 1-px lines
    for a, b in box_edges:
        cv2.line(image,
                 (int(corners_xy[a][0]), int(corners_xy[a][1])),
                 (int(corners_xy[b][0]), int(corners_xy[b][1])),
                 (0, 255, 0), 1)
    # The original 2D-box and KITTI-label code below is disabled by wrapping it
    # in a string literal, so pred_labels is never filled while it stays disabled.
    '''
    xmin, ymin = np.amin(corners_xy, axis=0)
    xmax, ymax = np.amax(corners_xy, axis=0)
    print(xmin, ymin, xmax, ymax)
    clip_xmin = max(xmin, 0.0)
    clip_ymin = max(ymin, 0.0)
    clip_xmax = min(xmax, 1242.0)
    clip_ymax = min(ymax, 375.0)
    height = clip_ymax - clip_ymin
    truncation_rate = 1.0 - (clip_ymax - clip_ymin)*(
        clip_xmax - clip_xmin)/((ymax - ymin)*(xmax - xmin))
    if truncation_rate > 0.4:
        continue
    x3d, y3d, z3d, l, h, w, yaw = detection_boxes_3d[i]
    assert l > 0, str(i)
    score = box_probs[i]
    if USE_BOX_SCORE:
        tmp_label = {"x3d": x3d, "y3d": y3d, "z3d": z3d,
                     "yaw": yaw, "height": h, "width": w, "length": l}
        # Rescore or not ===========================================
        inside_mask = dataset.sel_xyz_in_box3d(tmp_label,
            last_layer_points_xyz[box_indices])
        points_inside = last_layer_points_xyz[
            box_indices][inside_mask]
        score_inside = box_probs_ori[inside_mask]
        score = (1+occlusion(tmp_label, points_inside))*score
    pred_labels.append((class_name, -1, -1, 0,
        clip_xmin, clip_ymin, clip_xmax, clip_ymax,
        h, w, l, x3d, y3d, z3d, yaw, score))
    if VISUALIZATION_LEVEL > 0:
        cv2.rectangle(image,
            (int(clip_xmin), int(clip_ymin)),
            (int(clip_xmax), int(clip_ymax)), (0, 255, 0), 2)
        if class_name == "Pedestrian":
            cv2.putText(image, '{:s} | {:.3f}'.format('P', score),
                (int(clip_xmin), int(clip_ymin)-int(clip_xmin/10)),
                cv2.FONT_HERSHEY_SIMPLEX, 0.4, (255, 255, 0), 1)
        else:
            cv2.putText(image, '{:s} | {:.3f}'.format('C', score),
                (int(clip_xmin), int(clip_ymin)),
                cv2.FONT_HERSHEY_SIMPLEX, 0.4, (0, 255, 0), 1)
    '''
nms_time = time.time()
time_dict['nms'] = time_dict.get('nms', 0) + nms_time - decode_time
```
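To see what the projection step does, the sketch below computes the eight corners of one box and projects them with a 3×4 camera matrix, independently of Point-GNN. It assumes the KITTI label convention: (x, y, z) is the centre of the box's bottom face in rectified camera coordinates, y points down, and yaw rotates about the y axis; the matrix would be KITTI's P2 for the left color camera. All function names are hypothetical. The corner order is my own choice (corners 0-3 go around one face, 4-7 around the other), picked so that the same 12 corner pairs used in the drawing code above trace the box; it is not necessarily the order `nms.boxes_3d_to_corners` returns.

```python
import math

# The 12 edges of a box whose corners 0-3 and 4-7 each go around one face.
BOX_EDGES = [(0, 1), (1, 2), (2, 3), (0, 3),
             (4, 5), (5, 6), (6, 7), (4, 7),
             (0, 4), (1, 5), (2, 6), (3, 7)]


def box_corners_cam(x, y, z, l, h, w, yaw):
    """Eight corners of a KITTI-style box in camera coordinates.

    Assumes (x, y, z) is the bottom-face centre, the y axis points down and
    yaw is the rotation about y. Corners 0-3: bottom face, 4-7: top face.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    corners = []
    for dy in (0.0, -h):  # bottom face first; "up" is the -y direction
        for dx, dz in ((l / 2, w / 2), (-l / 2, w / 2),
                       (-l / 2, -w / 2), (l / 2, -w / 2)):
            # Rotate the (dx, dz) offset about the y axis, then translate
            corners.append((x + c * dx + s * dz, y + dy, z - s * dx + c * dz))
    return corners


def project(points, P):
    """Project camera-frame points with a 3x4 matrix P to pixel (u, v).

    Assumes every point lies in front of the camera (positive depth).
    """
    pixels = []
    for X, Y, Z in points:
        u, v, d = (row[0] * X + row[1] * Y + row[2] * Z + row[3] for row in P)
        pixels.append((u / d, v / d))
    return pixels


def box_edges_2d(pixels):
    """Integer pixel segments, one per box edge, ready to pass to cv2.line."""
    pts = [(int(u), int(v)) for u, v in pixels]
    return [(pts[a], pts[b]) for a, b in BOX_EDGES]
```

Usage: with `P2` read from a calibration file, `for p1, p2 in box_edges_2d(project(box_corners_cam(x, y, z, l, h, w, yaw), P2)): cv2.line(image, p1, p2, (0, 255, 0), 1)` draws one box the same way as the loop in the modified code.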

Inference results: