Flink In Depth, Part 1


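Flink is a stateful stream-processing framework built on top of data streams. It is often called the third-generation big-data analytics solution.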

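First generation: Hadoop MapReduce for static batch processing | Storm for real-time stream processing. These are two independent compute engines and are hard to use (September 2014).

Second generation: Spark RDD for static batch processing and Spark Streaming (DStream) for real-time stream processing (with weaker real-time performance). This is one unified compute engine and is easier to use (February 2014).

Third generation: the Flink DataSet batch-processing framework and the Apache Flink DataStream stream-processing framework (December 2014).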

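Spark and Flink appeared at almost the same time, yet Flink counts as the third generation. Early on, people's understanding of big-data analytics was shallower and most business scenarios were limited to batch processing, so Flink developed more slowly than Spark. Only around 2017 did people gradually begin shifting from batch processing to stream processing.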

More background: https://blog.csdn.net/weixin_38231448/article/details/100062961

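Stream-processing use cases:

Real-time computing, system monitoring, public-opinion monitoring, traffic forecasting, the State Grid (power grid), disease prediction, banking/financial risk control, and similar fields.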

Spark VS Flink

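At its core, Flink is a streaming dataflow execution engine for distributed computation over data streams. It provides data distribution, data communication and fault tolerance. On top of this engine, Flink offers several higher-level APIs for writing distributed jobs, for example: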

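- **DataSet API**: batch processing of static data. Static data is abstracted as a distributed dataset, which users can process with Flink's operators. Supports Java, Scala and Python.
- **DataStream API**: stream processing of data streams. Streaming data is abstracted as a distributed data stream, on which users can apply various operations. Supports Java and Scala.
- **Table API**: queries over structured data. Structured data is abstracted as relational tables and queried with a SQL-like DSL. Supports Java and Scala.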

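In addition, Flink provides libraries for specific application domains, for example:

- **Flink ML**: Flink's machine-learning library. It provides a machine-learning Pipelines API and implements a variety of machine-learning algorithms.
- **Gelly**: Flink's graph-processing library. It provides graph-processing APIs and implementations of several graph algorithms.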

Flink Architecture

Flink Concepts

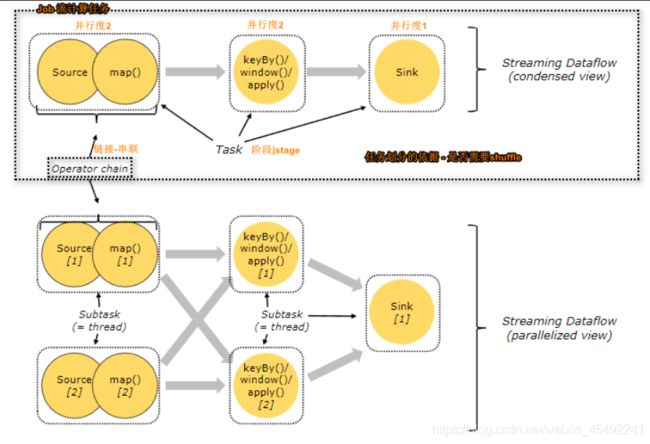

Tasks and Operator Chains (stage division)

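For distributed execution, Flink chains several operators together into one Task according to the parallelism of the computation. A Task is comparable to a Stage in Spark. A Flink job is usually split into several Tasks (stages), and each Task has its own parallelism. Each parallel instance runs as one thread, called a SubTask.

A Task is equivalent to a Stage in a Spark job.

Operator Chain: Flink divides a job into Tasks through operator chaining, somewhat like Spark's narrow/wide dependencies. Operators are connected in one of two ways:

- forward: one-to-one, with the same parallelism. The operators can be chained into the same Task.
- hash | rebalance: data is redistributed, so a new Task begins.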

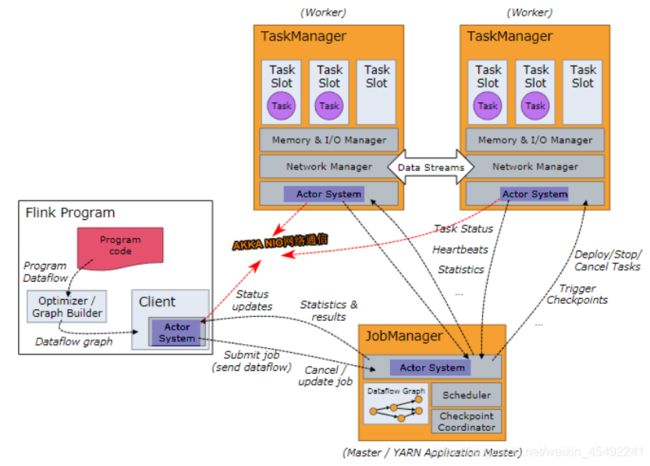
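The chaining rule above can be illustrated with a toy model in plain Scala. This is a hypothetical simplification, not Flink's API or its full chaining logic. The names `ChainingSketch`, `Op`, `Forward` and `Redistribute` are made up, and real Flink also checks conditions such as the slot sharing group and each operator's chaining strategy. In this model, a forward edge between operators of equal parallelism extends the current Task, and any other edge starts a new one.

```scala
// Hypothetical, simplified model of operator chaining (not Flink's API or its full rules).
object ChainingSketch {
  sealed trait Edge
  case object Forward extends Edge      // one-to-one, partitioning preserved
  case object Redistribute extends Edge // hash (keyBy) or rebalance

  final case class Op(name: String, parallelism: Int)

  /** ops(i) feeds ops(i + 1) through edges(i); returns the operators of each Task in order. */
  def chain(ops: List[Op], edges: List[Edge]): List[List[Op]] = {
    require(ops.nonEmpty && edges.length == ops.length - 1, "need n operators and n - 1 edges")
    ops.tail.zip(edges).foldLeft(List(List(ops.head))) { case (tasks, (op, edge)) =>
      val current = tasks.head
      if (edge == Forward && current.last.parallelism == op.parallelism)
        (current :+ op) :: tasks.tail // chained: same Task, same thread
      else
        List(op) :: tasks             // Task boundary: records cross a thread boundary
    }.reverse
  }

  def main(args: Array[String]): Unit = {
    val ops = List(Op("source", 2), Op("map", 2), Op("window", 2), Op("sink", 1))
    val tasks = chain(ops, List(Forward, Redistribute, Redistribute))
    tasks.foreach(t => println(t.map(_.name).mkString("Task[", " -> ", s"] x ${t.head.parallelism}")))
  }
}
```

The example in `main` uses source and map at parallelism 2, a keyed window at 2 and a sink at 1. It yields three Tasks (source -> map, window, sink), which is 5 subtasks in total.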

Job Managers, Task Managers, Clients

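**JobManagers** (Master): coordinate distributed execution. They schedule Tasks, coordinate checkpoints and handle failure recovery. Together they correspond to Master + Driver in Spark.

**TaskManagers** (Slaves): the worker nodes that actually execute the Tasks, running SubTasks as threads. They also report node status and workload to the JobManager.

**Clients**: unlike in Spark, the client is not part of the cluster runtime. It only submits the job's dataflow (similar to a Spark DAG) to the JobManager and may exit once submission completes. In Spark, the client is called the Driver. The Driver generates the DAG and monitors the whole job execution and failure recovery.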

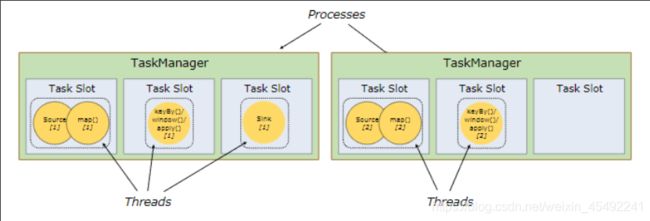

Task Slots and Resources

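Each worker (TaskManager) is a JVM process and can execute one or more subtasks (threads). To control how many tasks a worker accepts, a worker has so-called task slots (at least one).

Each task slot represents a fixed subset of the TaskManager's resources. For example, in a TaskManager with 3 task slots, each slot gets 1/3 of the TaskManager's managed memory. Slots do not isolate CPU. Each job obtains its own fixed number of task slots at startup, so slotting keeps subtasks of different jobs from competing for memory. The figure below shows a job that obtains 6 task slots but runs only 5 threads in 3 Tasks.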

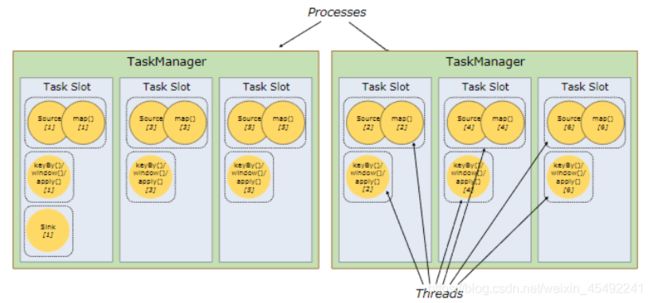

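By default, Flink lets subtasks of different Tasks of the same job share a slot (slot sharing), so one slot may hold an entire pipeline of the job. This has two benefits:

- A Flink cluster needs exactly as many task slots as the highest parallelism used in the job.
- Better resource utilization is easier to achieve. Without slot sharing, the non-intensive source subtasks would block as many resources as the resource-intensive window subtasks. With slot sharing, the parallelism can be raised from 2 to 6 so that the slotted resources are fully used.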
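The arithmetic behind these two points can be checked with a small plain-Scala sketch. `SlotSharingSketch` is a hypothetical helper, not part of Flink. The job shape used in `main` (source+map at 6, window at 6, sink at 1) is an assumed example of raising the parallelism from 2 to 6.

```scala
// Hypothetical helper (not a Flink API): slot demand of one job with and without slot sharing.
object SlotSharingSketch {
  /** tasks: (Task name, parallelism). Without sharing, every subtask needs its own slot. */
  def slotsWithoutSharing(tasks: Seq[(String, Int)]): Int = tasks.map(_._2).sum

  /** With slot sharing, a job needs as many slots as its highest parallelism. */
  def slotsWithSharing(tasks: Seq[(String, Int)]): Int = tasks.map(_._2).max

  /** With sharing, subtask i of every Task can be placed in slot i. */
  def assign(tasks: Seq[(String, Int)]): Map[Int, Seq[String]] =
    (0 until slotsWithSharing(tasks)).map { slot =>
      slot -> tasks.collect { case (name, p) if slot < p => s"$name[$slot]" }
    }.toMap

  def main(args: Array[String]): Unit = {
    val job = Seq("source+map" -> 6, "window" -> 6, "sink" -> 1)
    println(s"without sharing: ${slotsWithoutSharing(job)} slots") // 13
    println(s"with sharing:    ${slotsWithSharing(job)} slots")    // 6
    assign(job).toSeq.sortBy(_._1).foreach { case (s, subtasks) =>
      println(s"slot $s: ${subtasks.mkString(", ")}")
    }
  }
}
```

The light source+map subtasks share slots with the heavy window subtasks instead of each reserving a slot of their own.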

Flink Basic Environment

Prerequisites

- JDK 1.8+ installed
- HDFS running normally (with passwordless SSH configured)

Flink Installation

Upload and extract Flink

```shell
[root@centos ~]# tar -zxf flink-1.8.1-bin-scala_2.11.tgz -C /usr/
```

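Configure flink-conf.yaml

```shell
[root@centos ~]# vi /usr/flink-1.8.1/conf/flink-conf.yaml
```

```yaml
# host of the JobManager
jobmanager.rpc.address: centos
# number of task slots per TaskManager
taskmanager.numberOfTaskSlots: 4
# parallelism used when a job does not set one
parallelism.default: 3
```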

Configure slaves

```shell
[root@centos ~]# vi /usr/flink-1.8.1/conf/slaves
```

```
centos
```

Start Flink

```shell
[root@centos flink-1.8.1]# ./bin/start-cluster.sh
```

## Quick Start

Add dependencies

Client program

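```scala
package com.baizhi.demo01

import org.apache.flink.streaming.api.scala._

object FlinkWordCounts {
  def main(args: Array[String]): Unit = {
    // 1. Create the stream execution environment (remote submission | local execution)
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // 2. Read data from an external system (covered in detail under Data Sources)
    val lines: DataStream[String] = env.socketTextStream("centos", 9999)
    lines.flatMap(_.split("\\s+"))
      .map((_, 1))
      .keyBy(t => t._1)
      .sum(1)
      .print()
    // println(env.getExecutionPlan)

    // 3. Execute the streaming job
    env.execute("wordcount")
  }
}
```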

## Package and Submit the Program

```shell
[root@centos flink-1.8.1]# ./bin/flink run --class com.baizhi.demo01.FlinkWordCounts --detached --parallelism 3 /root/original-flink-1.0-SNAPSHOT.jar
Starting execution of program
Job has been submitted with JobID 221d5fa916523f88741e2abf39453b81
[root@centos flink-1.8.1]#
[root@centos flink-1.8.1]# ./bin/flink list -m centos:8081
Waiting for response...
------------------ Running/Restarting Jobs -------------------
14.10.2019 17:15:31 : 221d5fa916523f88741e2abf39453b81 : wordcount (RUNNING)
No scheduled jobs.
```

## Cancel a Job

```shell
[root@centos flink-1.8.1]# ./bin/flink cancel -m centos:8081 221d5fa916523f88741e2abf39453b81
Cancelling job 221d5fa916523f88741e2abf39453b81.
Cancelled job 221d5fa916523f88741e2abf39453b81.
```

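## Deployment Options

Script

```shell
# --detached: run in the background; --parallelism: set the job parallelism
[root@centos flink-1.8.1]# ./bin/flink run \
  --class com.baizhi.demo01.FlinkWordCounts \
  --detached \
  --parallelism 3 \
  /root/original-flink-1.0-SNAPSHOT.jar
```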

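## Cross-Platform

```scala
// For testing: submit from the IDE to the remote cluster; the jar must contain the job classes.
// Backslashes are escaped, otherwise "\t" in "flink\target" would become a tab character.
val jarFiles = "flink\\target\\original-flink-1.0-SNAPSHOT.jar"
val env = StreamExecutionEnvironment.createRemoteEnvironment("centos", 8081, jarFiles)
```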

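## Local Simulation

```scala
// Local execution with parallelism 3
val env = StreamExecutionEnvironment.createLocalEnvironment(3)
```

or

```scala
// Detects the runtime environment automatically; typically used in production
val env = StreamExecutionEnvironment.getExecutionEnvironment
```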

## DataStream API

### Data Sources

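A source is where a program reads its input from. You attach a source to the program with `env.addSource(sourceFunction)`. Flink ships with many pre-implemented SourceFunctions. You can always write a custom non-parallel source by implementing `SourceFunction`, or a parallel source by implementing `ParallelSourceFunction` or extending `RichParallelSourceFunction`.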

#### File-based

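`readTextFile(path)` - reads a text file line by line, using TextInputFormat under the hood, and returns each line as a String.

```scala
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
// Forward slashes avoid invalid escapes such as "\d" inside a Scala string literal
val lines: DataStream[String] = env.readTextFile("file:///E:/demo/words")
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```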

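`readFile(fileInputFormat, path)` - reads the file with the given file input format. It reads only once, similar to batch processing.

```scala
import org.apache.flink.api.java.io.TextInputFormat
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
// The path is supplied by readFile, so null is passed to the constructor
val inputFormat = new TextInputFormat(null)
val lines: DataStream[String] = env.readFile(inputFormat, "file:///E:/demo/words")
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```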

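`readFile(fileInputFormat, path, watchType, interval, pathFilter)` - the method that the previous two call internally. It reads the files under the given path with the given FileInputFormat and, depending on watchType, can scan the path periodically.

- watchType is either `FileProcessingMode.PROCESS_CONTINUOUSLY` or `FileProcessingMode.PROCESS_ONCE`.
- interval sets the scan period in milliseconds.
- pathFilter lets you exclude files under the path.

If watchType is `PROCESS_CONTINUOUSLY`, then whenever a file is modified, its entire content is processed again.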

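```scala
import org.apache.flink.api.common.io.FilePathFilter
import org.apache.flink.api.java.io.TextInputFormat
import org.apache.flink.core.fs.Path
import org.apache.flink.streaming.api.functions.source.FileProcessingMode
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
val inputFormat = new TextInputFormat(null)
val lines: DataStream[String] = env.readFile(
  inputFormat, "file:///E:/demo/words",
  FileProcessingMode.PROCESS_CONTINUOUSLY,
  5000, // rescan the path every 5000 ms
  new FilePathFilter {
    // filterPath returns true for paths that should be SKIPPED,
    // so negating the check keeps only .txt files
    override def filterPath(filePath: Path): Boolean =
      !filePath.getPath.endsWith(".txt")
  })
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```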

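Socket-based

`socketTextStream(hostname, port)` - reads text from a socket, one element per line.

```scala
val env = StreamExecutionEnvironment.getExecutionEnvironment
val lines: DataStream[String] = env.socketTextStream("centos", 9999)
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```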

Collection-based (for testing)

```scala
val env = StreamExecutionEnvironment.getExecutionEnvironment
val lines: DataStream[String] = env.fromCollection(List("this is a demo", "good good"))
// val lines: DataStream[String] = env.fromElements("this is a demo", "good good")
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```

Custom Source

```scala
import org.apache.flink.streaming.api.functions.source.{ParallelSourceFunction, SourceFunction}
import org.apache.flink.streaming.api.scala._
import scala.util.Random

// A parallel source: every parallel instance runs its own copy of run()
class CustomSourceFunction extends ParallelSourceFunction[String] {
  // volatile: cancel() is called from a different thread than run()
  @volatile
  var isRunning: Boolean = true
  val lines: Array[String] = Array("this is a demo", "hello word", "are you ok")

  override def run(ctx: SourceFunction.SourceContext[String]): Unit = {
    val random = new Random()
    while (isRunning) {
      Thread.sleep(1000)
      ctx.collect(lines(random.nextInt(lines.length))) // emit one record downstream
    }
  }

  override def cancel(): Unit = {
    isRunning = false
  }
}

val env = StreamExecutionEnvironment.getExecutionEnvironment
val lines: DataStream[String] = env.addSource[String](new CustomSourceFunction)
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```

FlinkKafkaConsumer√

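```xml
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-kafka_${scala.version}</artifactId>
    <version>${flink.version}</version>
</dependency>
```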

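```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
val lines = env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
lines.flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```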

With SimpleStringSchema you only get the record value. To access more information, such as key/value/partition/offset, implement the `KafkaDeserializationSchema` interface to define a custom deserializer.

```scala
import java.util.Properties
import org.apache.flink.api.common.typeinfo.TypeInformation
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer, KafkaDeserializationSchema}
import org.apache.kafka.clients.consumer.ConsumerRecord

class UserKafkaDeserializationSchema extends KafkaDeserializationSchema[(String, String)] {
  // A Kafka topic is unbounded, so this always returns false
  override def isEndOfStream(nextElement: (String, String)): Boolean = false

  override def deserialize(record: ConsumerRecord[Array[Byte], Array[Byte]]): (String, String) = {
    // The key may be missing (null or empty)
    val key = if (record.key() != null && record.key().nonEmpty) new String(record.key()) else ""
    val value = new String(record.value())
    // record.partition() and record.offset() are also available here
    (key, value)
  }

  // Tell Flink the type of the produced tuples
  override def getProducedType: TypeInformation[(String, String)] =
    createTypeInformation[(String, String)]
}

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
val lines: DataStream[(String, String)] = env.addSource(
  new FlinkKafkaConsumer[(String, String)]("topic01", new UserKafkaDeserializationSchema(), props))
lines.map(t => t._2).flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(t => t._1)
  .sum(1)
  .print()
env.execute("wordcount")
```

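If the data stored in Kafka are JSON strings, you can use Flink's built-in JSON schemas. Recommended:

- JsonNodeDeserializationSchema: requires the value to be a JSON string.
- JSONKeyValueDeserializationSchema(meta): requires both key and value to be JSON. With meta set to true, it also attaches metadata (partition, offset, etc.).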

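```scala
import java.util.Properties
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.node.ObjectNode
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer
import org.apache.flink.streaming.util.serialization.JSONKeyValueDeserializationSchema

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
// true: also include metadata (offset, topic, partition) under the "metadata" field
val jsonData: DataStream[ObjectNode] = env.addSource(
  new FlinkKafkaConsumer[ObjectNode]("topic01", new JSONKeyValueDeserializationSchema(true), props))
jsonData.map(on => (on.get("value").get("id").asInt(), on.get("value").get("name")))
  .print()
env.execute("wordcount")
```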

Data Sinks

Data sinks consume DataStreams and forward them to files, sockets or external storage systems, or print them. Flink comes with a number of predefined sinks.

File-based

write*: `writeAsText` | `writeAsCsv(...)` | `writeUsingOutputFormat`. Note that the write*() methods on DataStream are mainly intended for debugging.

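```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.core.fs.FileSystem.WriteMode
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .writeAsText("file:///E:/results/text", WriteMode.OVERWRITE)
env.execute("wordcount")
```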

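The sink above guarantees only at-least-once semantics, because the write*() methods do not take part in checkpointing. In production, use flink-connector-filesystem to write data to external file systems. Combined with checkpointing, it guarantees exactly-once semantics.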

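```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.fs.bucketing.{BucketingSink, DateTimeBucketer}
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

val env = StreamExecutionEnvironment.getExecutionEnvironment
// Pending part files are only finalized on checkpoints (the 5 s interval is an example value)
env.enableCheckpointing(5000)
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
val bucketingSink = new BucketingSink[(String, Int)]("hdfs://centos:9000/BucketingSink")
bucketingSink.setBucketer(new DateTimeBucketer[(String, Int)]("yyyyMMddHH")) // one directory (bucket) per hour
bucketingSink.setBatchSize(1024) // roll to a new part file after 1024 bytes
env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .addSink(bucketingSink)
  .setParallelism(6)
env.execute("wordcount")
```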

print() | printToErr()

```scala
val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .print("test") // output prefix: distinguishes streams when several print to the console
  .setParallelism(2)
env.execute("wordcount")
```

Custom Sink

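```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.functions.sink.{RichSinkFunction, SinkFunction}
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

class CustomSinkFunction extends RichSinkFunction[(String, Int)] {
  // Called once per parallel instance before any record: open connections here
  override def open(parameters: Configuration): Unit = {
    println("open connection")
  }

  // Called for every record
  override def invoke(value: (String, Int), context: SinkFunction.Context[_]): Unit = {
    println(value)
  }

  // Called once on shutdown: release resources here
  override def close(): Unit = {
    println("close connection")
  }
}

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .addSink(new CustomSinkFunction)
env.execute("wordcount")
```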

RedisSink√

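```xml
<dependency>
    <groupId>org.apache.bahir</groupId>
    <artifactId>flink-connector-redis_2.11</artifactId>
    <version>1.0</version>
</dependency>
```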

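```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer
import org.apache.flink.streaming.connectors.redis.RedisSink
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig
import org.apache.flink.streaming.connectors.redis.common.mapper.{RedisCommand, RedisCommandDescription, RedisMapper}

class UserRedisMapper extends RedisMapper[(String, Int)] {
  // Redis command and data type: HSET into the hash named "wordcount"
  override def getCommandDescription: RedisCommandDescription =
    new RedisCommandDescription(RedisCommand.HSET, "wordcount")

  // Hash field: the word
  override def getKeyFromData(data: (String, Int)): String = data._1

  // Hash value: the running count
  override def getValueFromData(data: (String, Int)): String = data._2.toString
}

val env = StreamExecutionEnvironment.getExecutionEnvironment
val props = new Properties()
props.setProperty("bootstrap.servers", "centos:9092")
props.setProperty("group.id", "g1")
val jedisConfig = new FlinkJedisPoolConfig.Builder()
  .setHost("centos")
  .setPort(6379)
  .build()
env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .addSink(new RedisSink[(String, Int)](jedisConfig, new UserRedisMapper))
env.execute("wordcount")
```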

FlinkKafkaProducer√

```scala
import java.util.Properties
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.{FlinkKafkaConsumer, FlinkKafkaProducer}
import org.apache.flink.streaming.util.serialization.KeyedSerializationSchema
import org.apache.kafka.clients.consumer.ConsumerConfig
import org.apache.kafka.clients.producer.ProducerConfig

class UserKeyedSerializationSchema extends KeyedSerializationSchema[(String, Int)] {
  // Kafka record key: the word
  override def serializeKey(element: (String, Int)): Array[Byte] = element._1.getBytes()

  // Kafka record value: the count
  override def serializeValue(element: (String, Int)): Array[Byte] = element._2.toString.getBytes()

  // Overrides the target topic per record; returning null writes to the producer's default topic
  override def getTargetTopic(element: (String, Int)): String = null
}

val env = StreamExecutionEnvironment.getExecutionEnvironment

// Consumer settings
val props1 = new Properties()
props1.setProperty(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "centos:9092")
props1.setProperty(ConsumerConfig.GROUP_ID_CONFIG, "g1")

// Producer settings
val props2 = new Properties()
props2.setProperty(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "centos:9092")
props2.setProperty(ProducerConfig.BATCH_SIZE_CONFIG, "100")
props2.setProperty(ProducerConfig.LINGER_MS_CONFIG, "500")
props2.setProperty(ProducerConfig.ACKS_CONFIG, "all")
props2.setProperty(ProducerConfig.RETRIES_CONFIG, "2")

env.addSource(new FlinkKafkaConsumer[String]("topic01", new SimpleStringSchema(), props1))
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .sum(1)
  .addSink(new FlinkKafkaProducer[(String, Int)]("topic02", new UserKeyedSerializationSchema, props2))
env.execute("wordcount")
```

DataStream Transformations

Map

Takes one element and produces one element.

```scala
dataStream.map { x => x * 2 }
```

FlatMap

Takes one element and produces zero, one, or more elements.

```scala
dataStream.flatMap { str => str.split(" ") }
```

Filter

Evaluates a boolean function for each element and retains those for which the function returns true.

```scala
dataStream.filter { _ != 0 }
```

Union

Union of two or more data streams creating a new stream containing all the elements from all the streams.

```scala
dataStream.union(otherStream1, otherStream2, ...)
```

Connect

“Connects” two data streams retaining their types, allowing for shared state between the two streams.

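```scala
case class Word(word: String, count: Int)

val stream1 = env.socketTextStream("centos", 9999)
val stream2 = env.socketTextStream("centos", 8888)
// One flatMap function per input stream; the results form a single DataStream
stream1.connect(stream2)
  .flatMap(line => line.split("\\s+"), line => line.split("\\s+"))
  .map(Word(_, 1))
  .keyBy("word")
  .sum("count")
  .print()
```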

Split

Split the stream into two or more streams according to some criterion.

```scala
val split = someDataStream.split(
  (num: Int) =>
    (num % 2) match {
      case 0 => List("even")
      case _ => List("odd") // also covers negative odd numbers, where num % 2 == -1
    }
)
```

Select

Select one or more streams from a split stream.

```scala
val even = split select "even"
val odd = split select "odd"
val all = split.select("even", "odd")
```

```scala
val lines = env.socketTextStream("centos", 9999)
val splitStream: SplitStream[String] = lines.split(line => {
  if (line.contains("error")) {
    List("error") // branch name
  } else {
    List("info")  // branch name
  }
})
splitStream.select("error").print("error")
splitStream.select("info").print("info")
```

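Side Output

```scala
import org.apache.flink.streaming.api.functions.ProcessFunction
import org.apache.flink.streaming.api.scala._
import org.apache.flink.util.Collector

val lines = env.socketTextStream("centos", 9999)
// Tag that identifies the side output
val outTag = new OutputTag[String]("error")
val results = lines.process(new ProcessFunction[String, String] {
  override def processElement(value: String, ctx: ProcessFunction[String, String]#Context, out: Collector[String]): Unit = {
    if (value.contains("error")) {
      ctx.output(outTag, value) // to the side output
    } else {
      out.collect(value)        // to the main output
    }
  }
})
results.print("normal")
// Read the side output
results.getSideOutput(outTag)
  .print("error")
```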
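The per-element routing done by the side-output pattern can be simulated in plain Scala, without a cluster. This is a hypothetical sketch: `SideOutputSketch`, `Tag` and `Ctx` are made-up stand-ins for `OutputTag`, the `ProcessFunction` context and the `Collector`, not Flink classes. Each element is inspected once and sent either to the main output or to the tagged side output.

```scala
import scala.collection.mutable

// Hypothetical simulation of ProcessFunction-style routing (not Flink's API).
object SideOutputSketch {
  final case class Tag(id: String) // stands in for OutputTag

  // Collects the main output and any number of tagged side outputs
  final class Ctx {
    val mainOut: mutable.ArrayBuffer[String] = mutable.ArrayBuffer.empty[String]
    val side: mutable.Map[Tag, mutable.ArrayBuffer[String]] = mutable.Map.empty

    def collect(v: String): Unit = mainOut += v // like out.collect(value)

    def output(tag: Tag, v: String): Unit =    // like ctx.output(outTag, value)
      side.getOrElseUpdate(tag, mutable.ArrayBuffer.empty[String]) += v
  }

  val errorTag: Tag = Tag("error")

  // Same rule as processElement above: lines containing "error" go to the side output
  def process(lines: Seq[String]): Ctx = {
    val ctx = new Ctx
    lines.foreach(line => if (line.contains("error")) ctx.output(errorTag, line) else ctx.collect(line))
    ctx
  }

  def main(args: Array[String]): Unit = {
    val result = process(Seq("user login", "disk error", "user logout"))
    println(s"normal: ${result.mainOut.mkString(", ")}")
    println(s"error:  ${result.side.getOrElse(errorTag, mutable.ArrayBuffer.empty[String]).mkString(", ")}")
  }
}
```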

KeyBy

Logically partitions a stream into disjoint partitions, each partition containing elements of the same key. Internally, this is implemented with hash partitioning.

```scala
dataStream.keyBy("someKey") // Key by field "someKey"
dataStream.keyBy(0)         // Key by the first element of a Tuple
```

Reduce

A “rolling” reduce on a keyed data stream. Combines the current element with the last reduced value and emits the new value.

```scala
env.socketTextStream("centos", 9999)
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .reduce((t1, t2) => (t1._1, t1._2 + t2._2))
  .print()
```
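What a rolling reduce emits can be reproduced without Flink: every input produces one output, which is the running result for that element's key. `RollingReduceSketch` is a hypothetical plain-Scala simulation in which an in-memory map stands in for Flink's keyed state. It is not how Flink implements `reduce`.

```scala
import scala.collection.mutable

// Hypothetical simulation of keyBy(...).reduce(f): a mutable map plays the role of keyed state.
object RollingReduceSketch {
  def rollingReduce[K, V](input: Seq[(K, V)])(f: (V, V) => V): Seq[(K, V)] = {
    val state = mutable.Map.empty[K, V]
    input.map { case (k, v) =>
      // The first element of a key is emitted as is; later ones are combined with the last result
      val updated = state.get(k).fold(v)(prev => f(prev, v))
      state(k) = updated
      k -> updated
    }
  }

  def main(args: Array[String]): Unit = {
    val words = "a b a c a".split("\\s+").toSeq.map(w => w -> 1)
    rollingReduce(words)(_ + _).foreach(println)
  }
}
```

For the input `a b a c a`, the sketch prints one updated count per word, ending with `(a,3)`.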

Fold

A “rolling” fold on a keyed data stream with an initial value. Combines the current element with the last folded value and emits the new value.

```scala
env.socketTextStream("centos", 9999)
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .fold(("", 0))((t1, t2) => (t2._1, t1._2 + t2._2)) // initial value ("", 0)
  .print()
```

Aggregations

Rolling aggregations on a keyed data stream. The difference between min and minBy is that min returns the minimum value, whereas minBy returns the element that has the minimum value in this field (same for max and maxBy).

Sample input:

```
zs 001 1200
ww 001 1500
zl 001 1000
```

```scala
env.socketTextStream("centos", 9999)
  .map(_.split("\\s+"))
  .map(ts => (ts(0), ts(1), ts(2).toDouble))
  .keyBy(1)
  .minBy(2) // emits the record that holds the minimum value
  .print()
```

```
1> (zs,001,1200.0)
1> (zs,001,1200.0)
1> (zl,001,1000.0)
```

```scala
env.socketTextStream("centos", 9999)
  .map(_.split("\\s+"))
  .map(ts => (ts(0), ts(1), ts(2).toDouble))
  .keyBy(1)
  .min(2) // only the minimum field is updated; the other fields stay as in the first record
  .print()
```

```
1> (zs,001,1200.0)
1> (zs,001,1200.0)
1> (zs,001,1000.0)
```
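The difference between the two outputs above can be reproduced with a plain-Scala simulation of the rolling aggregation for the single key `001`. `MinVsMinBySketch` is hypothetical and only mirrors the observable behavior shown above. For `minBy`, the earlier record is kept on ties. It is not Flink's aggregator code.

```scala
// Hypothetical simulation of keyBy(1).minBy(2) vs keyBy(1).min(2) for one key.
object MinVsMinBySketch {
  type Rec = (String, String, Double) // same shape as (ts(0), ts(1), ts(2).toDouble) above

  // minBy: emit the whole record holding the smallest value so far (earlier record wins ties)
  def minBy(acc: Rec, in: Rec): Rec = if (in._3 < acc._3) in else acc

  // min: keep the other fields of the first record, update only the aggregated field
  def min(acc: Rec, in: Rec): Rec = acc.copy(_3 = math.min(acc._3, in._3))

  // One rolling output per input record
  def rolling(input: Seq[Rec])(f: (Rec, Rec) => Rec): Seq[Rec] = {
    require(input.nonEmpty, "need at least one record")
    input.tail.scanLeft(input.head)(f)
  }

  def main(args: Array[String]): Unit = {
    val input = Seq(("zs", "001", 1200.0), ("ww", "001", 1500.0), ("zl", "001", 1000.0))
    println(rolling(input)(minBy _)) // List((zs,001,1200.0), (zs,001,1200.0), (zl,001,1000.0))
    println(rolling(input)(min _))   // List((zs,001,1200.0), (zs,001,1200.0), (zs,001,1000.0))
  }
}
```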

State and Fault Tolerance (Key Topic)

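Stateful operators need to store intermediate state while processing the elements or events of a DataStream. This gives state a central role in fine-grained computation with Flink, for example:

- Recording state information from some point in the past up to the present.
- When aggregating events per minute/hour/day, the state holds the pending aggregates.
- When training a machine-learning model, the state holds the current version of the model parameters.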

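For state management, Flink makes state fault tolerant with checkpoints and savepoints. When the scale of a computation changes, Flink can automatically redistribute state among the parallel instances. Underneath, state is stored through a State Backend, which determines how and where state is stored (introduced in a later chapter).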

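Flink divides all state it can operate on into Keyed State and Operator State. Keyed State is bound one-to-one to a key and can only be used on a KeyedStream. All state operations on non-keyed streams use Operator State. When managing state internally, Flink takes Keyed State and ...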

When redistributing Keyed State, Flink does not move individual keys. The smallest unit of redistribution is the Key Group.
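Keys map to key groups, and key groups map to parallel instances. The plain-Scala sketch below shows both steps. Its range formulas are modeled on Flink's `KeyGroupRangeAssignment`, but the hash is simplified: Flink applies a murmur hash to `key.hashCode`, whereas this hypothetical `KeyGroupSketch` uses a plain `floorMod`. When the parallelism changes, whole contiguous key-group ranges move between instances, never individual keys.

```scala
// Hypothetical sketch of key-group based distribution (simplified hash, not Flink's code).
object KeyGroupSketch {
  // Flink murmur-hashes key.hashCode first; a plain floorMod is used here for illustration.
  def keyGroupOf(key: Any, maxParallelism: Int): Int =
    Math.floorMod(key.hashCode, maxParallelism)

  // Which parallel instance owns a key group.
  def operatorIndexFor(keyGroup: Int, maxParallelism: Int, parallelism: Int): Int =
    keyGroup * parallelism / maxParallelism

  // The contiguous key-group range owned by each parallel instance.
  def rangesFor(maxParallelism: Int, parallelism: Int): IndexedSeq[Range] =
    (0 until parallelism).map { i =>
      val start = (i * maxParallelism + parallelism - 1) / parallelism
      val end = ((i + 1) * maxParallelism - 1) / parallelism
      start to end
    }

  def main(args: Array[String]): Unit = {
    val maxParallelism = 128 // fixes the number of key groups
    for (p <- Seq(3, 2)) {
      val ranges = rangesFor(maxParallelism, p).map(r => s"[${r.start}, ${r.end}]")
      println(s"parallelism $p -> ${ranges.mkString(" ")}")
    }
    val g = keyGroupOf("hello", maxParallelism)
    println(s"key 'hello' -> key group $g -> instance ${operatorIndexFor(g, maxParallelism, 3)} of 3")
  }
}
```

Going from parallelism 3 to 2, the ranges `[0, 42] [43, 85] [86, 127]` become `[0, 63] [64, 127]`. The state of each key group is moved as a unit.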

Operator State (also called non-keyed state): each operator state is bound to one parallel operator instance. Keyed State and Operator State each come in two forms: managed and raw. All Flink operators support managed state. Raw state is used only when implementing custom operators, and it cannot be redistributed when the parallelism changes. So although Flink supports raw state, the vast majority of applications use managed state.

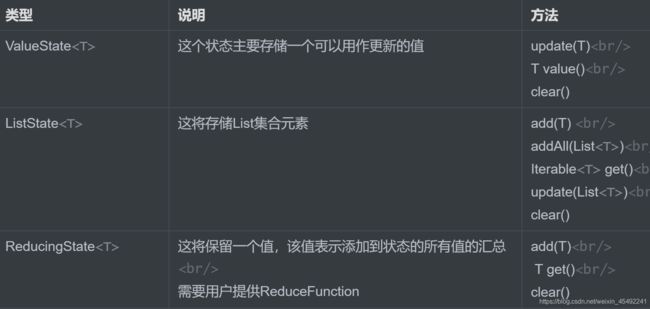

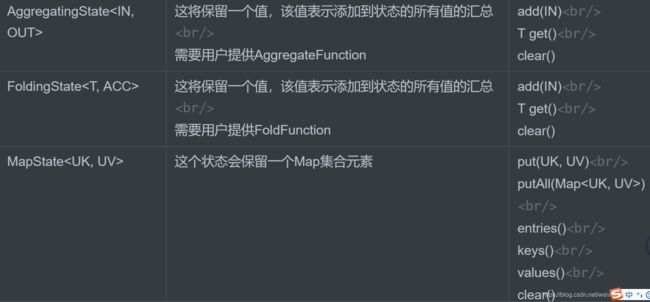

Keyed State

The keyed-state interfaces provide access to different types of state, all scoped to the key of the current input element.

ValueState

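```scala
import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.api.common.state.{ValueState, ValueStateDescriptor}
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
env.socketTextStream("centos", 9999)
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .map(new RichMapFunction[(String, Int), (String, Int)] {
    var vs: ValueState[Int] = _

    // Obtain the state handle once; Flink scopes its value to the current key
    override def open(parameters: Configuration): Unit = {
      val vsd = new ValueStateDescriptor[Int]("valueCount", createTypeInformation[Int])
      vs = getRuntimeContext.getState[Int](vsd)
    }

    override def map(value: (String, Int)): (String, Int) = {
      // value() is null for a new key, which unboxes to 0 for Int
      val historyCount = vs.value()
      val currentCount = historyCount + value._2
      vs.update(currentCount)
      (value._1, currentCount)
    }
  }).print()
env.execute("wordcount")
```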

AggregatingState

```scala
import org.apache.flink.api.common.functions.{AggregateFunction, RichMapFunction}
import org.apache.flink.api.common.state.{AggregatingState, AggregatingStateDescriptor}
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
// Input lines: <key> <integer value>
env.socketTextStream("centos", 9999)
  .map(_.split("\\s+"))
  .map(ts => (ts(0), ts(1).toInt))
  .keyBy(0)
  .map(new RichMapFunction[(String, Int), (String, Double)] {
    var vs: AggregatingState[Int, Double] = _

    override def open(parameters: Configuration): Unit = {
      // IN = Int, ACC = (sum, count), OUT = average
      val vsd = new AggregatingStateDescriptor[Int, (Double, Int), Double]("avgCount",
        new AggregateFunction[Int, (Double, Int), Double] {
          override def createAccumulator(): (Double, Int) = (0.0, 0)

          override def add(value: Int, accumulator: (Double, Int)): (Double, Int) =
            (accumulator._1 + value, accumulator._2 + 1)

          override def merge(a: (Double, Int), b: (Double, Int)): (Double, Int) =
            (a._1 + b._1, a._2 + b._2)

          override def getResult(accumulator: (Double, Int)): Double =
            accumulator._1 / accumulator._2
        }, createTypeInformation[(Double, Int)])
      vs = getRuntimeContext.getAggregatingState(vsd)
    }

    override def map(value: (String, Int)): (String, Double) = {
      vs.add(value._2)
      val avgCount = vs.get()
      (value._1, avgCount)
    }
  }).print()
env.execute("wordcount")
```
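The accumulator is a `(sum, count)` pair rather than a running average because two such pairs can be merged exactly, while two averages cannot. The plain-Scala sketch below exercises the same four methods outside Flink. `SimpleAggregate` and `AverageSketch` are hypothetical names that only mirror the shape of Flink's `AggregateFunction`.

```scala
// Hypothetical stand-in mirroring the four methods of Flink's AggregateFunction,
// so the averaging logic above can be exercised without Flink on the classpath.
trait SimpleAggregate[IN, ACC, OUT] {
  def createAccumulator(): ACC
  def add(value: IN, acc: ACC): ACC
  def merge(a: ACC, b: ACC): ACC
  def getResult(acc: ACC): OUT
}

object AverageSketch extends SimpleAggregate[Int, (Double, Int), Double] {
  def createAccumulator(): (Double, Int) = (0.0, 0) // (sum, count)
  def add(value: Int, acc: (Double, Int)): (Double, Int) = (acc._1 + value, acc._2 + 1)
  def merge(a: (Double, Int), b: (Double, Int)): (Double, Int) = (a._1 + b._1, a._2 + b._2)
  def getResult(acc: (Double, Int)): Double = acc._1 / acc._2 // NaN for an empty accumulator

  // Fold a batch of values into a fresh accumulator
  def aggregate(values: Seq[Int]): (Double, Int) =
    values.foldLeft(createAccumulator())((acc, v) => add(v, acc))

  def main(args: Array[String]): Unit = {
    val all = aggregate(Seq(10, 20, 30, 40))
    val merged = merge(aggregate(Seq(10, 20)), aggregate(Seq(30, 40)))
    println(getResult(all))    // 25.0
    println(getResult(merged)) // 25.0
  }
}
```

In the Flink example, `add` always runs before `get`, so the empty-accumulator `NaN` case does not occur there.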

MapState

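```scala
import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.api.common.state.{MapState, MapStateDescriptor}
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.scala._
import scala.collection.JavaConverters._

case class Login(id: String, name: String, ip: String, city: String, loginTime: String)

val env = StreamExecutionEnvironment.getExecutionEnvironment
// Input line example: 001 zs 202.15.10.12 Japan 2019-10-10
env.socketTextStream("centos", 9999)
  .map(_.split("\\s+"))
  .map(ts => Login(ts(0), ts(1), ts(2), ts(3), ts(4)))
  .keyBy("id", "name")
  .map(new RichMapFunction[Login, String] {
    var vs: MapState[String, String] = _

    override def open(parameters: Configuration): Unit = {
      val msd = new MapStateDescriptor[String, String]("mapstate", createTypeInformation[String], createTypeInformation[String])
      vs = getRuntimeContext.getMapState(msd)
    }

    override def map(value: Login): String = {
      println("login history")
      for (k <- vs.keys().asScala) {
        println(k + " " + vs.get(k))
      }
      // The first login is ok; afterwards a change of city is flagged as an error
      val result =
        if (vs.keys().iterator().asScala.isEmpty) "ok"
        else if (!value.city.equalsIgnoreCase(vs.get("city"))) "error"
        else "ok"
      vs.put("ip", value.ip)
      vs.put("city", value.city)
      vs.put("loginTime", value.loginTime)
      result
    }
  }).print()
env.execute("wordcount")
```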
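The per-key logic of the MapState example can be checked without Flink by replacing the keyed MapState with an ordinary map keyed by `(id, name)`. `LoginCheckSketch` and `LoginChecker` are hypothetical names, and the sample logins in `main` are made up. The decision rule is the one used above: a first login is `ok`, and a city different from the last stored one is `error`.

```scala
import scala.collection.mutable

// Hypothetical simulation of the MapState example (not Flink code): one inner map per
// (id, name) key plays the role of the keyed MapState[String, String].
object LoginCheckSketch {
  final case class Login(id: String, name: String, ip: String, city: String, loginTime: String)

  final class LoginChecker {
    private val state = mutable.Map.empty[(String, String), mutable.Map[String, String]]

    def check(l: Login): String = {
      val vs = state.getOrElseUpdate((l.id, l.name), mutable.Map.empty[String, String])
      val result =
        if (vs.isEmpty) "ok"                                   // first login for this user
        else if (!l.city.equalsIgnoreCase(vs("city"))) "error" // city differs from the last login
        else "ok"
      vs ++= Seq("ip" -> l.ip, "city" -> l.city, "loginTime" -> l.loginTime)
      result
    }
  }

  def main(args: Array[String]): Unit = {
    val checker = new LoginChecker
    val logins = Seq(
      Login("001", "zs", "202.15.10.12", "Japan", "2019-10-10"),
      Login("001", "zs", "202.15.10.13", "Japan", "2019-10-11"),
      Login("001", "zs", "10.0.0.1", "Beijing", "2019-10-12"))
    logins.foreach(l => println(s"${l.name} ${l.city} -> ${checker.check(l)}"))
  }
}
```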

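Summary

```scala
// Template: replace Xxx with Value | List | Map | Reducing | Aggregating
new Rich[Map|FlatMap]Function {
  var vs: XxxState = _ // declare the state

  override def open(parameters: Configuration): Unit = {
    val xxd = new XxxStateDescriptor // initialize the state
    vs = getRuntimeContext.getXxxState(xxd)
  }

  override def xxx(value: Xx): Xxx = {
    // operate on the state
  }
}
```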

- `ValueState<T> getState(ValueStateDescriptor<T>)`
- `ReducingState<T> getReducingState(ReducingStateDescriptor<T>)`
- `ListState<T> getListState(ListStateDescriptor<T>)`
- `AggregatingState<IN, OUT> getAggregatingState(AggregatingStateDescriptor<IN, ACC, OUT>)`
- `FoldingState<T, ACC> getFoldingState(FoldingStateDescriptor<T, ACC>)`
- `MapState<UK, UV> getMapState(MapStateDescriptor<UK, UV>)`

State Time-To-Live(TTL)

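Basic usage

A time-to-live (TTL) can be assigned to keyed state of any type. If a TTL is configured and a state value has expired, Flink makes a best effort to clean up the stored value.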

```scala
import org.apache.flink.api.common.state.StateTtlConfig
import org.apache.flink.api.common.state.ValueStateDescriptor
import org.apache.flink.api.common.time.Time

val ttlConfig = StateTtlConfig
  .newBuilder(Time.seconds(1))
  .setUpdateType(StateTtlConfig.UpdateType.OnCreateAndWrite)
  .setStateVisibility(StateTtlConfig.StateVisibility.NeverReturnExpired)
  .build

val stateDescriptor = new ValueStateDescriptor[String]("text state", classOf[String])
stateDescriptor.enableTimeToLive(ttlConfig)
```

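Example

```scala
import org.apache.flink.api.common.functions.RichMapFunction
import org.apache.flink.api.common.state.{StateTtlConfig, ValueState, ValueStateDescriptor}
import org.apache.flink.api.common.state.StateTtlConfig.{StateVisibility, UpdateType}
import org.apache.flink.api.common.time.Time
import org.apache.flink.configuration.Configuration
import org.apache.flink.streaming.api.scala._

val env = StreamExecutionEnvironment.getExecutionEnvironment
env.socketTextStream("centos", 9999)
  .flatMap(_.split("\\s+"))
  .map((_, 1))
  .keyBy(0)
  .map(new RichMapFunction[(String, Int), (String, Int)] {
    var vs: ValueState[Int] = _

    override def open(parameters: Configuration): Unit = {
      val vsd = new ValueStateDescriptor[Int]("valueCount", createTypeInformation[Int])
      val ttlConfig = StateTtlConfig.newBuilder(Time.seconds(5)) // expire after 5 s
        .setUpdateType(UpdateType.OnCreateAndWrite)               // refresh the expiry time on create and write
        .setStateVisibility(StateVisibility.NeverReturnExpired)   // never return expired values
        .build()
      vsd.enableTimeToLive(ttlConfig)
      vs = getRuntimeContext.getState[Int](vsd)
    }

    override def map(value: (String, Int)): (String, Int) = {
      val historyCount = vs.value()
      val currentCount = historyCount + value._2
      vs.update(currentCount)
      (value._1, currentCount)
    }
  }).print()
env.execute("wordcount")
```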
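The two settings used above, `OnCreateAndWrite` and `NeverReturnExpired`, can be modeled with a few lines of plain Scala and a manual clock. `TtlValueSketch` is a hypothetical class, not Flink code. An `Option` models the `null` that an expired `ValueState` returns, and a value counts as expired once `now - lastWrite >= ttl`.

```scala
// Hypothetical model of one TTL-protected value (not Flink's implementation):
// UpdateType.OnCreateAndWrite      -> only writes refresh the timestamp
// StateVisibility.NeverReturnExpired -> an expired value reads as absent
final class TtlValueSketch[T](ttlMillis: Long, now: () => Long) {
  private var entry: Option[(T, Long)] = None // (value, last write time)

  def update(v: T): Unit = entry = Some((v, now()))

  def value(): Option[T] = entry match {
    case Some((v, writtenAt)) if now() - writtenAt < ttlMillis => Some(v) // reads do not refresh
    case Some(_) =>
      entry = None // expired: dropped on read, like the default lazy cleanup
      None
    case None => None
  }
}

object TtlValueSketchDemo {
  def main(args: Array[String]): Unit = {
    var clock = 0L // manual processing-time clock in ms
    val state = new TtlValueSketch[Int](5000, () => clock)
    state.update(1)
    clock = 3000
    println(state.value()) // Some(1)
    clock = 5000
    println(state.value()) // None: 5 s have passed since the write
  }
}
```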

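Note: with TTL enabled, the state backend stores a timestamp (processing time) alongside each value, which costs extra memory. Suppose a job previously ran without TTL and you change the code to enable TTL before restarting. Restoring from the old state then fails with a compatibility failure and a StateMigrationException. The same happens in the opposite direction.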

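Cleanup of Expired State

By default, expired values are removed only when they are read explicitly, e.g., by calling `ValueState.value()`. If expired state is never read, it is never removed, and the files holding state data may keep growing.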


```scala
import org.apache.flink.api.common.state.StateTtlConfig
import org.apache.flink.api.common.time.Time

val ttlConfig = StateTtlConfig
  .newBuilder(Time.seconds(1))
  .cleanupFullSnapshot
  .build
```

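`cleanupFullSnapshot` removes expired entries when a full state snapshot is taken, which reduces the snapshot size. It takes effect only for full snapshots, such as those of the memory-based backends. It does not apply to incremental checkpointing with the RocksDB State Backend.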

Cleanup in background

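You can enable background cleanup. The default cleanup strategy of the configured State Backend is then used, and different backends clean up differently.

```scala
import org.apache.flink.api.common.state.StateTtlConfig
import org.apache.flink.api.common.time.Time

val ttlConfig = StateTtlConfig
  .newBuilder(Time.seconds(1))
  .cleanupInBackground
  .build
```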

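Incremental cleanup (heap-based backends)

```scala
import org.apache.flink.api.common.state.StateTtlConfig
import org.apache.flink.api.common.state.StateTtlConfig.{StateVisibility, UpdateType}
import org.apache.flink.api.common.time.Time

val ttlConfig = StateTtlConfig.newBuilder(Time.seconds(5))
  .setUpdateType(UpdateType.OnCreateAndWrite)
  .setStateVisibility(StateVisibility.NeverReturnExpired)
  .cleanupIncrementally(100, true) // defaults: 5 | false
  .build()
```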

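The first argument is the number of state entries checked each time cleanup is triggered (here 100). Cleanup is always triggered on state access. When the second argument is `true`, cleanup is additionally triggered for every processed record, even if the user code does not touch the state.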
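A heap backend cannot afford to scan all state on every access, so it advances a cursor over the entries a few at a time; that is why `cleanupSize` matters. The sketch below is a hypothetical plain-Scala model of that idea, not Flink's implementation. `IncrementalCleanupSketch` and `trigger` are made-up names, and one `trigger` call stands for one state access (or, when the second argument is `true`, one processed record).

```scala
import scala.collection.mutable

// Hypothetical model of incremental TTL cleanup on a heap backend (not Flink's code):
// a cursor walks over all entries, and each trigger checks at most `cleanupSize` of them.
final class IncrementalCleanupSketch(ttlMillis: Long, cleanupSize: Int) {
  private val entries = mutable.LinkedHashMap.empty[String, Long] // key -> last write time
  private var cursor: Iterator[String] = Iterator.empty

  def put(key: String, writeTime: Long): Unit = entries(key) = writeTime

  def size: Int = entries.size

  /** One cleanup trigger; returns how many expired entries were removed. */
  def trigger(now: Long): Int = {
    if (!cursor.hasNext) cursor = entries.keys.toList.iterator // new pass over a snapshot of the keys
    var checked = 0
    var removed = 0
    while (checked < cleanupSize && cursor.hasNext) {
      val key = cursor.next()
      checked += 1
      entries.get(key) match {
        case Some(writtenAt) if now - writtenAt >= ttlMillis =>
          entries.remove(key)
          removed += 1
        case _ => // still alive, or already removed
      }
    }
    removed
  }
}

object IncrementalCleanupDemo {
  def main(args: Array[String]): Unit = {
    val state = new IncrementalCleanupSketch(ttlMillis = 5000, cleanupSize = 2)
    (0 until 5).foreach(i => state.put(s"k$i", writeTime = 0))
    // Each trigger removes at most 2 expired entries
    Iterator.continually(state.trigger(now = 6000)).takeWhile(_ > 0)
      .foreach(n => println(s"removed $n, left ${state.size}"))
  }
}
```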


RocksDB compaction

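RocksDB is an embedded key-value store in which keys and values are arbitrary byte streams. It compacts data asynchronously in the background, merging entries with the same key to reduce the size of the state files, but it does not remove expired state by itself. A compaction filter lets RocksDB drop expired state while compacting. This filtering is disabled by default. To enable it, set `state.backend.rocksdb.ttl.compaction.filter.enabled: true` in flink-conf.yaml, or call `RocksDBStateBackend::enableTtlCompactionFilter` in the application code.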